AI vs. Traditional Catalyst Development: A 2025 Comparison of Speed, Cost, and Innovation in Drug Discovery


Abstract

This article provides a comprehensive analysis for researchers and drug development professionals on the paradigm shift from traditional, trial-and-error catalyst development to AI-driven approaches. We explore the foundational principles of both methods, detail the application of machine learning and automated robotics in modern catalyst design, and address key challenges like data quality and model interpretability. Through a comparative validation of real-world case studies and performance metrics, we demonstrate how AI is accelerating timelines, reducing R&D costs, and enabling the discovery of novel catalytic materials, ultimately shaping the future of biomedical research.

The Catalyst Development Paradigm Shift: From Intuition to Algorithm

Traditional catalyst development has long been characterized by a research paradigm deeply rooted in iterative, human-led experimentation. This methodology relies almost exclusively on the specialized knowledge and intuition of experienced researchers, who manually design experiments based on established chemical principles and historical data. The process is fundamentally guided by empirical relationships and linear free energy relationships (LFERs)—such as the Brønsted catalysis law, Hammett equation, and Taft equation—which provide simplified, quantitative insights into structure-activity relationships based on limited, curated datasets [1]. Before the advent of sophisticated computational planning tools, chemists depended heavily on database search engines like Reaxys and SciFinder to retrieve published reaction information, a process limited to previously recorded transformations and unable to guide the discovery of novel catalysts or unreported synthetic routes [1].
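For reference, the three LFERs named above are usually written in the following textbook forms. The notation is the conventional one and is an assumption here, since the cited source [1] is not quoted directly: $k$ is a rate constant, $K_a$ an acid dissociation constant, $\sigma$ and $\sigma^*$ substituent constants, $\rho$ and $\rho^*$ reaction sensitivity constants, and $E_s$ a steric parameter.

$$
\begin{aligned}
\text{Brønsted catalysis law:} \quad & \log k = \alpha \log K_a + C \\
\text{Hammett equation:} \quad & \log \frac{k}{k_0} = \rho\,\sigma \\
\text{Taft equation:} \quad & \log \frac{k_s}{k_{\mathrm{CH_3}}} = \rho^{*}\sigma^{*} + \delta E_s
\end{aligned}
$$

Each relation is linear in one or two tabulated substituent parameters, which is why these models can be fitted to small, curated datasets but struggle in high-dimensional catalyst search spaces.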

The core scientific challenge within this traditional research paradigm lies in the immense complexity and high dimensionality of the search space, which encompasses virtually limitless variables related to catalyst composition, structure, reactants, and synthesis conditions [2]. Other significant limitations include the general lack of data standardization and the inherently lengthy research cycles, which not only consume substantial manpower and material resources but also introduce considerable uncertainty into research outcomes [2]. This article provides a detailed comparison of this established approach against emerging AI-driven methodologies, examining their respective experimental protocols, performance data, and practical implications for research efficiency.

Core Methodologies and Workflows

The Traditional Experimental Workflow

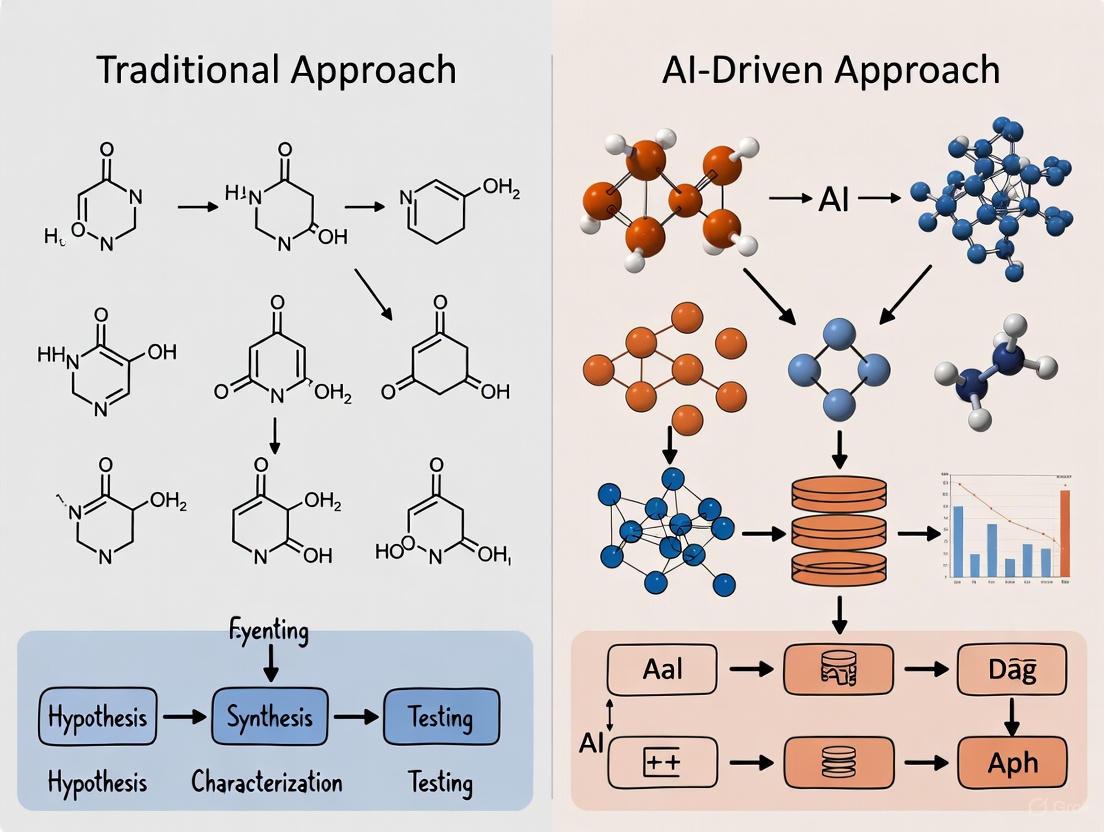

The conventional pathway for developing catalysts is a sequential, labor-intensive process that progresses through distinct, manually-executed stages. Figure 1 illustrates this iterative cycle, which is heavily dependent on human intervention at every step.

Figure 1: Traditional Catalyst Development Workflow

As shown in Figure 1, the process begins with Hypothesis Formulation, where researchers design catalyst candidates based on prior literature, chemical intuition, and known descriptor-property relationships [2] [1]. This is followed by Manual Catalyst Design, focusing on optimizing composition and structure to achieve target activity and stability.

The Trial-and-Error Synthesis stage involves manually testing factors like precursor selection, temperature, time, solvent, and atmospheric environment. These factors significantly influence the final product's composition, structure, and morphology, and their interplay makes controlled synthesis particularly challenging [2]. Subsequently, Performance Evaluation assesses catalytic activity, selectivity, and stability, while Data Analysis and Interpretation relies on researcher expertise to derive insights. The loop repeats based on these findings, creating a Lengthy Feedback Loop that typically spans months or even years [2] [3].

The AI-Driven Experimental Workflow

In contrast, AI-driven catalyst development employs a closed-loop, autonomous workflow that integrates artificial intelligence, robotics, and real-time data analysis. Figure 2 outlines this accelerated, data-rich process, which minimizes human intervention.

Figure 2: AI-Driven Catalyst Development Workflow

As shown in Figure 2, the AI-driven process starts with AI Model Prediction, where machine learning models virtually screen millions of potential compositions and structures by identifying patterns in large datasets to predict promising candidates [2] [3]. This enables Automated High-Throughput Synthesis using robotic systems and self-driving laboratories to synthesize shortlisted candidates [2] [4]. The Automated Characterization and Performance Testing stage employs integrated analytical instruments and real-time monitoring (e.g., NMR) for high-throughput evaluation [5]. Finally, Real-Time Data Processing and Machine Learning Analysis automatically processes results to refine AI models, creating a Rapid Feedback Loop that can take just hours or days [5] [4].

Performance Comparison: Quantitative Data Analysis

Key Performance Metrics

The fundamental differences between traditional and AI-driven methodologies become strikingly apparent when comparing their performance across key metrics. Table 1 summarizes quantitative data from direct comparisons and representative case studies.

Table 1: Performance Comparison of Traditional vs. AI-Driven Catalyst Development

| Performance Metric | Traditional Approach | AI-Driven Approach | Experimental Context |
|---|---|---|---|
| Development Timeline | Years to decades [3] | Months to years [3] | Discovery of new catalyst materials [2] |
| Experimental Throughput | 30 tests/day (manual) [6] | 30 tests/day (automated) [6] | High-throughput catalyst testing [6] |
| Number of Candidates Screened | ~1,000 candidates [3] | >19,000 candidates [6] | Virtual screening for HER catalysts [6] |
| Success Rate in Synthesis | Not explicitly stated | <25% of targets matched [6] | Mixed-metal catalyst synthesis [6] |
| Resource Optimization | High (manual resource use) | 9.3x improvement in power density per dollar [4] | Fuel cell catalyst discovery [4] |
| Heat Duty Reduction | Baseline (100%) | 38% of baseline [7] | Catalyst-aided CO₂ desorption [7] |

Detailed Experimental Protocols

Traditional Protocol: Catalyst-Aided CO₂ Desorption

This established protocol for testing solid acid catalysts in CO₂ desorption exemplifies the traditional, sequential experimental approach [7].

- Objective: To evaluate the performance of solid acid catalysts (γ-Al₂O₃ and HZSM-5) in reducing the energy required for CO₂ desorption from loaded amine solvents in a pilot plant setting.
- Materials & Setup:
  - Solvent System: Blended 5M monoethanolamine (MEA) and 2M methyl diethanolamine (MDEA), compared to benchmark 5M MEA.
  - Catalysts: Industrial solid acid catalysts γ-Al₂O₃ (Lewis acid) and HZSM-5 (Brønsted acid).
  - Reactor: Packed-bed desorber column operated at 1 atm.
  - Setup: Modified desorption process with a heat exchanger replacing the traditional reboiler, enabling operation at temperatures below 100°C.
- Procedure:
  1. The rich amine solvent (pre-loaded with CO₂) is fed into the desorber column at a constant flow rate (60 mL/min).
  2. The solvent flows through the packed bed containing the solid acid catalyst.
  3. Desorption is carried out at atmospheric pressure (1 atm) and temperatures below 100°C.
  4. The lean CO₂ loading of the solvent exiting the desorber is measured.
  5. The CO₂ production rate is quantified and used to calculate the heat duty (energy requirement).
- Key Outcome: The combined action of the catalysts with the blended solvent decreased the heat duty from the baseline of 100% to 38%, a 62% reduction in energy consumption [7].
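The heat-duty calculation in the final procedure step can be sketched as follows. This is a minimal illustration, assuming heat duty is defined as heat supplied per unit mass of CO₂ released; the function names and the operating points are hypothetical and were chosen only so that the ratio reproduces the reported 38% figure. They are not measurements from [7].

```python
def specific_heat_duty(heat_input_kw: float, co2_rate_kg_per_h: float) -> float:
    """Heat supplied per unit of CO2 released, in MJ per kg CO2 (assumed definition)."""
    if co2_rate_kg_per_h <= 0:
        raise ValueError("CO2 production rate must be positive")
    heat_mj_per_h = heat_input_kw * 3600 / 1000  # kW -> kJ/h -> MJ/h
    return heat_mj_per_h / co2_rate_kg_per_h


def relative_heat_duty(case: float, baseline: float) -> float:
    """Heat duty of a test case expressed as a percentage of the baseline."""
    return 100.0 * case / baseline


# Hypothetical operating points (NOT data from the cited study).
baseline = specific_heat_duty(heat_input_kw=2.0, co2_rate_kg_per_h=1.0)
catalysed = specific_heat_duty(heat_input_kw=1.9, co2_rate_kg_per_h=2.5)

relative = relative_heat_duty(catalysed, baseline)  # percent of baseline
saving = 100.0 - relative                           # percent reduction
print(f"relative heat duty: {relative:.1f}%, saving: {saving:.1f}%")
```

Expressing the result relative to the 5M MEA benchmark, as the source does, makes runs with different solvent loadings comparable without quoting absolute energy figures.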

AI-Driven Protocol: Self-Driving Laboratory for Fuel Cell Catalysts

This protocol describes the AI-driven, closed-loop workflow used by the CRESt platform to discover advanced fuel cell catalysts [4].

- Objective: To autonomously discover a multielement catalyst for a direct formate fuel cell that achieves high power density while minimizing precious metal content.
- Materials & Setup:
  - AI Platform: CRESt (Copilot for Real-world Experimental Scientists) integrating multimodal data and robotic equipment.
  - Robotic Equipment: Liquid-handling robot, carbothermal shock synthesizer, automated electrochemical workstation.
  - Characterization Tools: Automated electron microscopy, X-ray diffraction.
  - Precursors: Up to 20 different precursor molecules and substrates included in the search space.
- Procedure:
  1. AI Prediction: The system searches scientific literature and uses active learning to propose promising catalyst recipes.
  2. Automated Synthesis: Robotic systems execute the synthesis of proposed catalysts.
  3. Automated Testing & Characterization: The performance of each catalyst is tested in a fuel cell setup, with parallel characterization of its structure.
  4. Real-Time Analysis: Data from experiments are fed back into the AI models, which use Bayesian optimization to refine the search space and suggest the next set of experiments.
  5. Iteration: Steps 1-4 are repeated autonomously over hundreds of cycles.
- Key Outcome: After exploring over 900 chemistries and conducting 3,500 tests, CRESt discovered an 8-element catalyst that achieved a 9.3-fold improvement in power density per dollar compared to pure palladium [4].
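The propose, test, and update cycle in the procedure above can be illustrated with a toy Bayesian-optimization loop. This is a minimal sketch and not the CRESt implementation: it assumes a single scalar recipe parameter, a zero-mean Gaussian-process surrogate with an RBF kernel, and an upper-confidence-bound acquisition rule. The `run_experiment` function is a hypothetical stand-in for robotic synthesis and testing.

```python
import math


def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two scalar inputs."""
    return math.exp(-((a - b) ** 2) / (2 * length ** 2))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def gp_posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and variance of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    alpha = solve(K, ys)       # K^-1 y
    v = solve(K, k_star)       # K^-1 k*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = max(rbf(x_new, x_new) - sum(k * w for k, w in zip(k_star, v)), 0.0)
    return mean, var


def run_experiment(x):
    """Hypothetical experiment: measured performance peaks at x = 0.7."""
    return math.exp(-((x - 0.7) ** 2) / 0.02)


def closed_loop(candidates, n_rounds=8, kappa=2.0):
    """Repeat: fit surrogate, pick the untested candidate with highest UCB, test it."""
    xs = [candidates[0]]
    ys = [run_experiment(xs[0])]
    for _ in range(n_rounds):
        untested = [c for c in candidates if c not in xs]

        def ucb(c):
            mean, var = gp_posterior(xs, ys, c)
            return mean + kappa * math.sqrt(var)

        nxt = max(untested, key=ucb)
        xs.append(nxt)
        ys.append(run_experiment(nxt))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best], len(xs)


best_x, best_y, n_tests = closed_loop([i / 20 for i in range(21)])
print(best_x, round(best_y, 3), n_tests)
```

The `kappa` parameter controls the trade-off between exploring untested regions and exploiting promising ones. A real platform would work in a much higher-dimensional recipe space and would combine the surrogate with literature-derived priors, as the source describes.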

The Scientist's Toolkit: Essential Research Reagents and Materials

The experimental approaches, both traditional and AI-driven, rely on a specific set of chemical reagents, catalysts, and instrumentation. Table 2 details these key research solutions and their functions.

Table 2: Key Research Reagent Solutions and Materials

| Item Name | Type/Classification | Primary Function in Experimentation |
|---|---|---|
| Monoethanolamine (MEA) | Solvent (Primary Amine) | Benchmark absorbent for CO₂ in post-combustion capture; forms carbamate ions with CO₂ [7]. |
| Methyl Diethanolamine (MDEA) | Solvent (Tertiary Amine) | Used in blended amine solvents to promote bicarbonate ion formation, improving overall capture and desorption performance [7]. |
| γ-Al₂O₃ | Catalyst (Lewis Acid Solid Acid) | Facilitates desorption by replacing the role of bicarbonate in the reaction mechanism, lowering energy requirements [7]. |
| HZSM-5 | Catalyst (Brønsted Acid Solid Acid) | Provides protons to aid in the breakdown of carbamate during CO₂ desorption [7]. |
| Palladium (Pd) | Catalyst (Precious Metal) | Benchmark precious metal catalyst for hydrogenation and fuel cell reactions; expensive but highly active [4] [6]. |
| VSP-P1 Printer | Instrumentation (Synthesizer) | Automated device that vaporizes metal rods to create nanoparticles of desired composition for high-throughput catalyst synthesis [6]. |
| Periodic Open-Cell Structures (POCS) | Reactor Component (Structured Reactor) | 3D-printed architectures (e.g., Gyroids) that provide superior heat and mass transfer compared to conventional packed beds [5]. |
| Benzene-1,4-dicarboxylate | Ligand (Linker in MOFs) | Common organic linker used in the synthesis of Metal-Organic Frameworks (MOFs) for catalytic applications [2]. |

The comparative analysis clearly demonstrates a paradigm shift in catalyst development. The traditional approach, while built on a deep foundation of chemical expertise and historical data, is inherently limited by its sequential nature, low throughput, and extensive reliance on manual effort. This results in prolonged development cycles spanning years or decades and a constrained ability to explore complex, multi-element chemical spaces [2] [3].

In contrast, the AI-driven approach represents a transformative advancement. By integrating machine learning, robotics, and high-throughput experimentation, it enables the rapid screening of thousands to millions of candidates, the identification of non-intuitive catalyst compositions, and a drastic reduction in development time and cost [4] [6]. The integration of AI is not merely an incremental improvement but a fundamental re-engineering of the research workflow, paving the way for accelerated discovery of advanced catalysts critical to addressing pressing challenges in energy and sustainability.

The field of catalysis research is undergoing a profound transformation, moving from traditional trial-and-error approaches to data-driven, artificial intelligence (AI)-powered methodologies. Catalysts, which accelerate chemical reactions without being consumed, are fundamental to modern industry, playing critical roles in energy production, pharmaceutical development, and environmental protection [2] [3]. Historically, catalyst development has been a time-consuming and resource-intensive process, often relying on empirical observations, intuition, and sequential experimentation that can span years [2] [8]. This traditional paradigm faces significant challenges in navigating the highly complex and multidimensional search spaces of catalyst composition, structure, and synthesis conditions [2].

The integration of machine learning (ML) is rapidly transforming this research paradigm, offering distinct advantages for tackling complex problems across catalyst synthesis [2]. AI provides new capabilities for identifying descriptors for catalyst screening, processing large volumes of computational data, fitting potential energy surfaces with high accuracy, and uncovering mathematical relationships that support chemical and physical interpretability [2]. This article provides a comprehensive comparison between traditional and AI-driven catalyst development approaches, examining their respective methodologies, performance metrics, and implications for research efficiency and catalyst performance.

Comparative Analysis: Traditional vs. AI-Driven Catalyst Development

Table 1: Fundamental Characteristics of Traditional and AI-Driven Catalyst Development Approaches

| Aspect | Traditional Approach | AI-Driven Approach |
|---|---|---|
| Core Methodology | Trial-and-error experimentation, empirical observations, sequential testing [2] [8] | Data-driven prediction, virtual screening, algorithmic optimization [2] [3] |
| Development Timeline | Years to decades [3] | Months to years [3] |
| Primary Resource Investment | Laboratory equipment, reagents, human labor [2] | Computational infrastructure, data acquisition, specialized expertise [2] [9] |
| Data Handling | Limited, often inconsistent datasets; reliance on published literature and isolated experiments [2] [10] | Large-scale, standardized datasets; high-throughput experimentation generating thousands of data points [2] [10] |
| Key Limitations | High cost, lengthy cycles, cognitive biases, difficulty optimizing multiple parameters simultaneously [2] [10] | Data quality dependencies, model generalizability challenges, "black box" interpretability issues [3] [9] |
| Optimization Capability | Limited to few variables at a time; local optimization [10] | High-dimensional parameter space navigation; global optimization [2] [10] |

Table 2: Performance and Outcome Comparison

| Performance Metric | Traditional Approach | AI-Driven Approach |
|---|---|---|
| Experimental Efficiency | Low: Testing 1,000 catalysts requires synthesizing all 1,000 candidates [3] | High: AI narrows field to 10 most promising candidates from 1,000 possibilities [3] |
| Success Rate Prediction | Limited to empirical trends and theoretical models with simplified systems [10] | Enhanced: 92% accuracy demonstrated in knowledge extraction tasks [9] |
| Multi-Objective Optimization | Challenging: Difficulty balancing activity, selectivity, stability simultaneously [3] | Promising: ML models can predict trade-offs between multiple performance descriptors [2] [10] |
| Discovery of Novel Materials | Serendipitous or incremental improvements based on existing knowledge [8] | Systematic exploration of chemical space; prediction of entirely new catalytic systems [11] [9] |
| Scalability | Limited by manual processes [2] | High: Enabled by automated high-throughput systems [2] |
| Knowledge Extraction | Manual literature review; limited integration of disparate studies [9] | Automated: Natural language processing of scientific literature [11] [9] |

Experimental Protocols in AI-Driven Catalyst Research

The Catal-GPT Framework for Catalyst Design

A pioneering example of AI implementation in catalysis is the Catal-GPT framework, which employs a large language model (LLM) specifically fine-tuned for catalyst design [9]. The experimental protocol involves:

Data Collection and Curation: A specialized web crawler navigates academic databases to extract chemical data from scientific abstracts, which is then cleaned and encoded into a model-readable format [11] [9]. When conflicting parameters appear for the same catalytic system, priority is given to preparation parameters from authoritative publications with the highest reported frequency [9].

Model Architecture and Training: The system uses the open-source qwen2:7b LLM, deployed locally with a specialized database on the oxidative coupling of methane (OCM) reaction. The architecture is modular, comprising data storage, foundation model, agent, and feedback learning modules [9].

Knowledge Extraction and Validation: The model undergoes task evaluations for knowledge extraction and research assistance. In testing, it achieved 92% accuracy in knowledge extraction and could propose complete catalyst preparation processes, including required chemical reagents and detailed synthesis parameters [9].

Iterative Optimization: The system incorporates feedback from experimental results or industrial applications to continuously refine its recommendation strategy, creating a dynamic learning loop [9].
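The conflict-resolution rule from the Data Collection and Curation step (prefer the most frequently reported value) can be illustrated with a short sketch. The function name and the example temperatures are hypothetical, and filtering for authoritative publications is assumed to have happened upstream; this is not Catal-GPT's actual code.

```python
from collections import Counter


def resolve_conflict(reported_values):
    """Return the most frequently reported value.

    Ties go to the value that appears first in the input, because
    Counter.most_common keeps first-seen order for equal counts.
    """
    if not reported_values:
        raise ValueError("no reported values to resolve")
    return Counter(reported_values).most_common(1)[0][0]


# Hypothetical calcination temperatures (deg C) extracted from five abstracts
# describing the same catalytic system.
calcination_reports = [800, 750, 800, 850, 800]
chosen = resolve_conflict(calcination_reports)
print(chosen)
```

A frequency vote is simple to audit, but it can entrench a widely copied error, which is one reason the feedback step above matters.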

Machine Learning with Experimental Descriptors

For experimental catalysis research, ML models utilize descriptors encompassing catalyst composition, synthesis variables, and reaction conditions [10]. The protocol typically involves:

Descriptor Selection: Input features may include catalyst composition (presence of metals, functional groups), synthesis parameters (calcination temperature, precursor selection), and reaction conditions (temperature, pressure) [10].

Model Training: Tree-based algorithms (decision trees, random forests, XGBoost) are used for classification tasks, and regression algorithms (linear regression, gradient-boosted decision trees) are used to predict continuous variables such as faradaic efficiency [10].

Feature Importance Analysis: The relative significance of experimental factors is determined through techniques such as descriptor importance analysis, which examines how prominently and how frequently each descriptor is used in the decision process of tree-based models [10].

Iterative Design: ML predictions guide subsequent experimental rounds, progressively narrowing the search space and refining catalyst formulations [10].
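The idea behind descriptor importance in tree-based models can be shown with a deliberately simplified sketch. It scores each descriptor by the variance reduction of its single best threshold split, which is the quantity a regression tree maximizes at each node. This is an illustrative stand-in and not the random forest or XGBoost importance used in [10]; the descriptor names and data are hypothetical.

```python
def variance(values):
    """Population variance; zero for an empty list."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def best_split_gain(xs, ys):
    """Largest variance reduction achievable by one threshold split on a descriptor."""
    base = variance(ys)
    best = 0.0
    for threshold in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        weighted = (len(left) * variance(left) + len(right) * variance(right)) / len(ys)
        best = max(best, base - weighted)
    return best


def descriptor_importance(rows, target):
    """Normalize each descriptor's best split gain so the scores sum to 1."""
    gains = {name: best_split_gain([row[name] for row in rows], target)
             for name in rows[0]}
    total = sum(gains.values()) or 1.0
    return {name: gain / total for name, gain in gains.items()}


# Hypothetical experiments: two descriptors and a measured faradaic efficiency (%).
rows = [
    {"calcination_temp_C": 400, "pressure_bar": 1},
    {"calcination_temp_C": 400, "pressure_bar": 2},
    {"calcination_temp_C": 600, "pressure_bar": 1},
    {"calcination_temp_C": 600, "pressure_bar": 2},
]
faradaic_efficiency = [30.0, 32.0, 70.0, 72.0]

importance = descriptor_importance(rows, faradaic_efficiency)
print(importance)
```

In this toy dataset calcination temperature dominates, so the next experimental round would vary temperature more finely and hold pressure roughly constant.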

AI-Driven Catalyst Discovery Workflow: This diagram illustrates the iterative, data-driven cycle of AI-assisted catalyst development, from initial data collection through model training, prediction, experimental validation, and continuous refinement.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Research Reagents and Computational Tools in AI-Driven Catalysis

| Tool/Reagent | Function/Application | Role in AI-Driven Research |
|---|---|---|
| High-Throughput Synthesis Systems (e.g., AI-EDISON, Fast-Cat) [2] | Automated preparation of catalyst libraries | Generates large, consistent datasets essential for training robust ML models [2] |
| Specialized LLMs (e.g., Catal-GPT, ChemCrow, ChemLLM) [9] | Natural language processing of scientific literature | Extracts knowledge from vast research corpus; suggests catalyst formulations [11] [9] |
| Descriptor Libraries [10] | Mathematical representations of catalyst properties | Encodes complex chemical information into machine-readable features for model input [10] |
| Web Crawlers & Data Extraction Tools [11] | Automated mining of scientific databases | Builds comprehensive datasets from published literature for AI training [11] |
| Robotic AI Chemists [2] | Autonomous synthesis and testing | Enables closed-loop experimentation with minimal human intervention [2] |
| Transfer Learning Frameworks [11] | Application of knowledge across chemical domains | Enhances predictive capabilities when experimental data is limited [11] |

The comparison between traditional and AI-driven catalyst development approaches reveals a complementary relationship rather than a simple replacement scenario. AI methodologies screen catalyst candidates far faster and can navigate complex parameter spaces, but traditional experimental expertise remains crucial for validating predictions, interpreting results, and guiding model development [2] [8]. The most promising path forward integrates physical knowledge and mechanistic understanding with data-driven AI approaches, creating a workflow that draws on the strengths of both paradigms [10].

The future of catalysis research lies in increasingly autonomous systems, with AI not only suggesting catalyst compositions but also planning and executing synthetic routes, performing characterizations, and iterating based on experimental outcomes [2]. As these technologies mature, they promise to significantly accelerate the development of catalysts for critical applications including renewable energy, environmental protection, and sustainable chemical production [3] [8].

The field of catalyst development is undergoing a profound transformation, moving from a tradition steeped in empirical methods to one increasingly guided by data-driven prediction. For decades, the discovery and optimization of catalysts have relied heavily on trial-and-error experimentation—a resource-intensive process constrained by human intuition, time, and cost. This approach, while responsible for many critical advances, is inherently limited when navigating the vast complexity of chemical and biological catalyst spaces. In contrast, a new paradigm is emerging, one that integrates high-throughput experimentation, large-scale data generation, and artificial intelligence (AI) to predict catalytic behavior and design novel systems rationally. This guide objectively compares these two core philosophies, examining their fundamental principles, methodologies, performance, and practical implications for researchers and scientists in drug development and related fields.

Fundamental Principles: A Philosophical Divide

The traditional and data-driven approaches are founded on fundamentally different philosophies for navigating scientific discovery.

The Trial-and-Error Philosophy: The traditional approach is largely empirical and iterative. It relies on the chemist's intuition and prior knowledge to formulate initial hypotheses about promising catalysts or reaction conditions. Experiments are then designed and executed sequentially. The outcome of each experiment informs the next, creating a slow, cyclical process of refinement. This method is inherently local in its exploration; researchers typically make small, incremental changes to known systems (e.g., slightly modifying a ligand or a reaction temperature) rather than venturing into entirely uncharted chemical territory. The process is often described as resource-intensive, with success heavily dependent on researcher experience and serendipity [12] [13].

The Predictive, Data-Driven Philosophy: This modern approach treats catalyst discovery as a global optimization problem within a vast, multidimensional space. Its core principle is that patterns embedded in large, high-quality datasets can be used to build models that accurately predict catalytic performance. Instead of relying solely on chemical intuition, this method uses machine learning (ML) to identify complex, non-linear relationships between catalyst features (descriptors) and their functional outcomes (e.g., activity, selectivity). The goal is to shift the experimental burden from blind screening to targeted validation of computationally prioritized candidates, fundamentally accelerating the discovery process [12] [14] [13].

Methodological Comparison: Experimental Protocols in Practice

The practical implementation of these two philosophies differs significantly in workflow, techniques, and tools.

Traditional Trial-and-Error Workflow

The classical protocol is linear and iterative [13]:

1. Hypothesis Formulation: Based on literature review and expert knowledge, a chemist identifies a potential catalyst or a set of reaction conditions for a target transformation.
2. Sequential Experimentation: The lead candidate is synthesized and tested in the lab. This process is often low-throughput, with one reaction or a small batch of reactions run at a time.
3. Analysis and Interpretation: Results (e.g., yield, conversion, selectivity) are analyzed.
4. Iterative Refinement: The chemist uses the results to make an educated guess for the next experiment, perhaps by tuning a single variable (e.g., ligand concentration, solvent). This process loops back to step 2.
5. Optimization and Scale-Up: Once a promising candidate is identified, further rounds of experimentation optimize its performance before moving to larger-scale synthesis.

This workflow is visualized in the following diagram:

Data-Driven Design Workflow

The AI-driven approach creates a closed-loop, cyclical system that integrates computation and experimentation [12] [14]:

- Data Acquisition and Curation: A large, high-quality dataset is assembled. This can be from historical records, high-throughput experiments, or computational simulations. For example, in biocatalysis, this might involve screening hundreds of enzymes against a library of substrates to create a dataset like BioCatSet1 [12].

- Descriptor Engineering and Feature Selection: Raw data (e.g., molecular structures, protein sequences) is converted into numerical descriptors that a machine can process. Feature selection techniques may be used to identify the most relevant parameters.

- Model Training and Validation: A machine learning algorithm (e.g., Gradient Boosted Decision Trees, Random Forest) is trained on the dataset to learn the mapping between input descriptors and output performance. The model is rigorously validated on unseen data.

- Prediction and Candidate Prioritization: The trained model is used to screen a vast virtual library of potential catalysts, predicting their performance and generating a ranked list of the most promising candidates.

- Targeted Experimental Validation: Only the top-predicted candidates are synthesized and tested in the lab, drastically reducing experimental workload.

- Data Feedback and Model Retraining: Results from validation experiments are fed back into the dataset, retraining and improving the model for the next iteration in a continuous improvement cycle.
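The prediction and candidate prioritization step in the list above can be sketched with a small surrogate model. This is a minimal illustration that assumes a k-nearest-neighbour regressor as a simple stand-in for the gradient-boosted or random-forest models named in the workflow. The two-dimensional descriptors, the `true_activity` function, and both datasets are hypothetical.

```python
import math


def knn_predict(train, x, k=3):
    """Average the measured activity of the k training points nearest to x."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    return sum(activity for _, activity in nearest) / len(nearest)


def prioritize(train, library, top_n=10, k=3):
    """Rank a virtual library by predicted activity and keep the top_n candidates."""
    ranked = sorted(library, key=lambda x: knn_predict(train, x, k), reverse=True)
    return ranked[:top_n]


def true_activity(x):
    """Hypothetical ground truth, peaked at descriptor values (0.5, 0.5)."""
    return 1.0 - (x[0] - 0.5) ** 2 - (x[1] - 0.5) ** 2


# Hypothetical training set: 25 "measured" catalysts on a coarse 5 x 5 descriptor grid.
train = [((a / 4, b / 4), true_activity((a / 4, b / 4)))
         for a in range(5) for b in range(5)]

# Hypothetical virtual library of 1,000 untested candidates.
library = [(i / 39, j / 24) for i in range(40) for j in range(25)]

# Only these ten would go forward to synthesis and testing.
shortlist = prioritize(train, library, top_n=10)
print(shortlist)
```

Only the ten shortlisted candidates would be synthesized. Their measured results would then be appended to `train` before the next ranking pass, which is the feedback step that closes the loop.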

This workflow is visualized in the following diagram:

Quantitative Performance Comparison

The following tables summarize experimental data and performance metrics from case studies that directly or indirectly compare the efficiency and outcomes of the two approaches.

Table 1: Comparative Efficiency in Biocatalyst Discovery (CATNIP Case Study) [12]

| Performance Metric | Traditional High-Throughput Screening | AI-Guided Prediction (CATNIP Model) |
|---|---|---|
| Initial Experimental Scale | 314 enzymes × 111 substrates (~34,854 reactions) | N/A (Leverages prior data) |
| Hit Identification Rate | Baseline (Random) | 7x higher than random screening |
| Key Experimental Step | Test all combinations | Validate only top 10 model-predicted enzymes |
| Validation Success Rate | N/A (Discovery method) | 70-80% (7 out of 10 predicted enzymes were active) |
| Exploration Nature | Broad but shallow "fishing expedition" | Targeted "spear fishing" in chemical space |

Table 2: Performance of AI-Driven Workflows in Catalyst Design (Selected Examples)

| Application / Model | Key Performance Metric | Traditional/DFT Method | AI/Data-Driven Method |
|---|---|---|---|
| SurFF Surface Model [15] | Computational Efficiency for Surface Energy | Density Functional Theory (DFT) | ~100,000x faster than DFT |
| CaTS Framework [15] | Transition State Search Efficiency | Standard DFT Calculation | ~10,000x faster than DFT |
| CO₂ to Methanol SAC Screening [15] | Catalyst Screening Throughput | Low (DFT bottleneck) | Screening of 3,000+ candidates; discovery of new high-performance SACs |
| CATNIP (Enzyme → Substrate) [12] | Discovery of Novel Reactions | Limited to known enzyme functions | Successful prediction and validation of multiple unprecedented biocatalytic reactions |

The Scientist's Toolkit: Essential Research Reagents & Materials

This section details key reagents, software, and materials essential for implementing the data-driven design workflow, as featured in the cited research.

Table 3: Key Reagent Solutions for Data-Driven Catalyst Development

| Item Name | Function/Description | Example from Research |
|---|---|---|
| Enzyme Library (aKGLib1) [12] | A diverse collection of biological catalysts for high-throughput experimental screening to generate training data. | A library of 314 NHI enzymes with average sequence identity of 13.7%, ensuring high diversity. |
| Substrate Library [12] | A collection of diverse small molecules used to probe the catalytic activity and specificity of catalysts. | A library of >100 compounds, including chemical building blocks, natural products, and drug molecules. |
| Functional Monomers [16] | Building blocks for data-driven polymer design, selected to represent classes of amino acids. | Six monomers representing hydrophobic, nucleophilic, acidic, cationic, amide, and aromatic classes. |
| Sequence Similarity Network (SSN) [12] | A bioinformatics tool to visualize and analyze sequence relationships, used for selecting diverse enzyme candidates. | Used to select the 314 enzymes for aKGLib1 from a pool of 265,632 sequences. |
| Machine Learning Model (e.g., GBM) [12] | The algorithmic core that learns from data to make predictions; GBM was used in CATNIP. | Gradient Boosted Decision Tree model for linking chemical and protein sequence spaces. |
| MORFEUS Software [12] | Computational chemistry tool for calculating molecular "fingerprints" or descriptors for small molecules. | Used to compute a set of 21 parameters for each substrate as input for the ML model. |

The contrast between traditional trial-and-error and predictive, data-driven design marks a pivotal shift in scientific methodology for catalyst development. The empirical approach, while foundational, is constrained by its sequential nature, high resource costs, and limited capacity to explore vast chemical spaces. In contrast, the data-driven paradigm, powered by AI and high-throughput experimentation, offers a powerful strategy for global exploration and predictive accuracy. It does not seek to eliminate experimentation but to make it profoundly more efficient and insightful by guiding it with intelligent prediction.

For researchers and drug development professionals, the implication is clear: integrating data-driven approaches into the R&D pipeline can dramatically accelerate discovery timelines, reduce costs associated with failed experiments, and unlock novel catalytic functions that might remain hidden under traditional methodologies. The future of catalyst design lies in the continued refinement of this closed-loop paradigm—"experiment-data-AI"—where each cycle of prediction and validation generates deeper, more actionable scientific understanding [12] [14].

For researchers and scientists in drug development and chemical synthesis, the traditional approach to catalyst design has long been a critical bottleneck. The conventional trial-and-error methodology, reliant on empirical observations and sequential experimentation, consumes substantial resources while delivering incremental progress. This paradigm is now being fundamentally transformed by artificial intelligence, which offers a new framework for catalyst discovery and optimization. As the catalyst market continues to expand—projected to reach USD 76.7 billion by 2033—the imperative for more efficient development approaches becomes increasingly urgent across research institutions and industrial laboratories [17]. This comparison guide examines the quantitative and methodological distinctions between traditional and AI-driven catalyst development, providing experimental data and protocols to inform research direction and resource allocation.

Quantitative Comparison: Traditional vs. AI-Driven Catalyst Development

Table 1: Performance Metrics Comparison Between Traditional and AI-Driven Catalyst Development

| Performance Metric | Traditional Approach | AI-Driven Approach | Experimental Basis |

|---|---|---|---|

| Discovery Timeline | Years to decades | Weeks to months | AI systems explored 900+ chemistries in 3 months [4] |

| Experimental Throughput | 10-100 samples manually | 3,500+ tests automated | Robotic platforms enabled 3,500 electrochemical tests [4] |

| Resource Consumption | High (reagents, labor) | Reduced by 90%+ | AI targets 10 most promising from 1,000 candidates [3] |

| Success Rate Optimization | Incremental improvements | 9.3x performance improvement | Record power density in fuel cells with reduced precious metals [4] |

| Data Utilization | Limited, experiential | Multimodal integration | Combines literature, experimental data, and characterization [4] |

Table 2: Economic and Operational Impact Analysis

| Impact Area | Traditional Approach | AI-Driven Approach | Supporting Data |

|---|---|---|---|

| Development Cost | High (extensive lab work) | Significant reduction | AI reduces experiments, lowering reagent and labor costs [3] |

| Return on Investment | Long-term, uncertain | $3.70 return per $1 invested (reported for generative AI broadly, not for catalyst development specifically) | Demonstrated in generative AI applications [18] |

| Personnel Requirements | Large teams | Smaller, specialized teams | 32% of organizations expect AI-related workforce changes [19] |

| Scale-up Transition | High failure rate | Improved prediction | Digital twins simulate industrial conditions [3] |

| Environmental Impact | Higher waste generation | Greener processes | Enables lower temperature/pressure reactions [3] |

Experimental Protocols and Methodologies

Protocol 1: AI-Driven High-Throughput Catalyst Screening

Objective: Rapid identification of novel catalyst formulations with target properties using integrated AI-robotic systems.

Materials and Equipment:

- Liquid-handling robot for precise precursor dispensing

- Carbothermal shock system for rapid synthesis

- Automated electrochemical workstation for performance testing

- Scanning electron microscope with automated image analysis

- X-ray diffraction equipment for structural characterization

- Computational infrastructure for machine learning models

Methodology:

- Goal Definition: Researchers input target catalytic properties (e.g., activity, selectivity) through natural language interface [4]

- Literature Mining: AI system scans scientific literature and databases to identify potential elements and molecular configurations [4]

- Knowledge Embedding: Creates multidimensional representations of catalyst recipes based on prior knowledge [4]

- Design Space Reduction: Applies principal component analysis to identify most promising experimental regions [4]

- Robotic Synthesis: Automated systems prepare catalyst candidates across identified compositional space [2]

- High-Throughput Characterization: Parallel testing of catalytic performance, structure, and morphology [20]

- Active Learning Loop: Experimental results feed back into AI models to refine subsequent experimental designs [4]

Data Analysis:

- Machine learning models correlate compositional and structural features with performance metrics

- Computer vision algorithms analyze characterization data (SEM, XRD) for quality assessment

- Bayesian optimization identifies promising regions for subsequent experimentation
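The active learning loop and Bayesian optimization step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the cited systems: it uses a one-dimensional composition variable, a zero-mean Gaussian process with fixed (untuned) hyperparameters, and an upper-confidence-bound acquisition rule. The function `run_experiment` is a hypothetical stand-in for a robotic synthesis-and-test cycle, and all numeric values are invented for illustration.

```python
import math

def rbf(a: float, b: float, length: float = 0.15) -> float:
    """Squared-exponential kernel between two 1-D compositions."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k = [rbf(a, x_new) for a in xs]
    mean = sum(ki * wi for ki, wi in zip(k, solve(K, ys)))
    var = rbf(x_new, x_new) - sum(ki * vi for ki, vi in zip(k, solve(K, k)))
    return mean, math.sqrt(max(var, 0.0))

def run_experiment(x: float) -> float:
    """Hypothetical stand-in for one robotic synthesis-and-test cycle."""
    return math.exp(-((x - 0.7) / 0.15) ** 2)

# Candidate compositions (assumed dopant fraction from 0.00 to 1.00).
candidates = [i / 20 for i in range(21)]
# Three initial experiments seed the surrogate model.
tested = {x: run_experiment(x) for x in (0.0, 0.5, 1.0)}

for _ in range(6):  # six closed-loop rounds
    xs, ys = list(tested), list(tested.values())
    untested = [x for x in candidates if x not in tested]

    def ucb(x):
        # Upper confidence bound: favor high predicted mean or high uncertainty.
        mean, std = gp_posterior(xs, ys, x)
        return mean + 2.0 * std

    next_x = max(untested, key=ucb)
    tested[next_x] = run_experiment(next_x)  # result feeds the next round

best_x = max(tested, key=tested.get)
print(f"{len(tested)} experiments run, best composition so far: {best_x:.2f}")
```

Each round refits the surrogate on every result collected so far, which is the feedback step that distinguishes this loop from a fixed screening plan. A production system would tune the kernel hyperparameters and work in many dimensions.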

Protocol 2: Traditional Catalyst Optimization

Objective: Systematic improvement of catalyst formulations through sequential experimentation.

Materials and Equipment:

- Standard laboratory glassware and reactors

- Manual catalyst synthesis equipment

- Analytical instruments (GC-MS, HPLC)

- Performance testing apparatus

Methodology:

- Hypothesis Formation: Based on researcher experience and literature review

- Bench-Scale Synthesis: Manual preparation of catalyst candidates

- Performance Testing: Sequential evaluation of activity, selectivity, and stability

- Characterization: Structural analysis to understand performance characteristics

- Iterative Refinement: Modification of synthesis parameters based on results

- Scale-up Studies: Transition from laboratory to pilot scale

Data Analysis:

- Statistical analysis of experimental results

- Empirical correlation of synthesis parameters with performance

- Expert interpretation of characterization data

Workflow Visualization: Traditional vs. AI-Driven Approaches

[Figure: AI-driven vs. traditional catalyst development workflow]

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Research Reagents and Materials for AI-Driven Catalyst Development

| Reagent/Material | Function in Research | Application Examples | AI Integration |

|---|---|---|---|

| Precious Metals (Pd, Pt) | Active catalytic sites for key reactions | Fuel cells, emission control, pharmaceutical synthesis | ML models optimize loading and distribution [2] |

| Base Metals (Fe, Ni, Cu) | Cost-effective catalytic elements | Ammonia production, bulk chemicals | AI identifies optimal coordination environments [4] |

| Zeolites & MOFs | High-surface-area catalyst supports | Petrochemical refining, selective oxidation | ML guides morphology engineering [2] |

| Metal Oxides & Nitrides | Stable catalytic materials for harsh conditions | Water splitting, environmental catalysis | AI predicts stability and activity [14] |

| Enzyme Biocatalysts | Selective biological catalysts | Pharmaceutical intermediates, fine chemicals | AI models protein structures for enhanced activity [21] |

| Bimetallic Nanomaterials | Enhanced activity and selectivity | Fuel cells, specialized chemical synthesis | AI optimizes elemental combinations [2] |

| Robotic Synthesis Platforms | Automated catalyst preparation | High-throughput experimentation | Executes AI-designed experiments [4] |

| Multimodal AI Systems | Integrated data analysis and prediction | Catalyst design across applications | Processes literature, experimental data, characterization [4] |

The comparative analysis presented in this guide demonstrates a fundamental shift in catalyst development paradigms. On the metrics compared here, AI-driven approaches outperform traditional methods: they reduce discovery timelines from years to months, improve resource efficiency through targeted experimentation, and enable more predictive scale-up transitions. The integration of multimodal AI systems with robotic experimentation platforms represents a particularly significant advancement, creating closed-loop discovery systems that continuously refine their experimental strategies based on real-time results [4].

For research organizations and drug development professionals, the adoption of AI-enhanced catalyst development is transitioning from competitive advantage to operational necessity. This transition requires not only technological investment but also methodological adaptation: workflow redesign, data-driven decision making, and interdisciplinary collaboration between domain experts and data scientists. As AI capabilities continue to advance, particularly in areas of interpretability and physical insight integration, the efficiency gains in catalyst development are likely to accelerate, potentially transforming not only how catalysts are designed but also what chemical transformations become economically viable.

Inside the AI-Driven Lab: Machine Learning, Automation, and Real-World Workflows

The field of catalyst development is undergoing a profound transformation, moving from traditional, intuition-guided experimental methods to artificial intelligence (AI)-driven, data-centric approaches. Traditional catalyst discovery has historically relied on trial-and-error experimentation, a process that is often slow, resource-intensive, and limited in its ability to explore vast compositional and structural spaces [2]. In stark contrast, AI-driven methodologies leverage machine learning (ML), generative models, and high-throughput computational screening to predict catalyst composition, structure, and activity with unprecedented speed and accuracy [2] [22] [23]. This paradigm shift is not merely an incremental improvement but a fundamental change in research methodology, enabling the discovery of novel, high-performance catalysts for applications in energy, sustainability, and pharmaceuticals at an accelerated pace. This guide provides a comparative analysis of these two approaches, supported by experimental data and detailed methodologies.

Comparative Analysis: Traditional vs. AI-Driven Catalyst Development

The following table summarizes the core differences between the traditional and AI-driven catalyst development paradigms.

Table 1: Core Differences Between Traditional and AI-Driven Catalyst Development

| Aspect | Traditional Approach | AI-Driven Approach |

|---|---|---|

| Core Methodology | Trial-and-error experimentation, literature guidance, and linear hypothesis testing [2]. | Data-driven prediction, high-throughput virtual screening, and generative design [2] [22]. |

| Exploration Speed | Months to years for a single discovery cycle; limited by manual synthesis and testing [2]. | Days to weeks; capable of screening millions of candidates computationally [23] [3]. |

| Data Utilization | Relies on limited, localized datasets; knowledge often remains within individual research groups [24]. | Leverages large, shared databases (e.g., >400,000 data points) and learns from every cycle in a closed-loop system [24]. |

| Key Capabilities | Density Functional Theory (DFT) calculations, standard characterization techniques [2]. | Machine learning interatomic potentials (MLIPs), generative adversarial networks (GANs), variational autoencoders (VAEs), and transformer models [2] [22]. |

| Primary Limitations | High cost, low throughput, inability to efficiently navigate vast design spaces, and lengthy research cycles [2] [3]. | Dependency on data quality and quantity, challenges in model interpretability ("black box" issue), and integration with experimental validation [2] [3]. |

Performance and Outcome Comparison

Quantitative data from recent studies highlights the dramatic performance advantages of AI-driven catalyst design.

Table 2: Quantitative Performance Comparison of Catalyst Discovery Methods

| Metric | Traditional & Computational | AI-Driven Method | Result |

|---|---|---|---|

| Materials Discovered | ~28,000 materials discovered via computational approaches over a decade [23]. | 2.2 million new crystals predicted by GNoME; 380,000 classified as stable [23]. | ~80x more predicted crystals (2.2 million vs. ~28,000); ~14x when counting only the 380,000 classified as stable. |

| Discovery Rate | ~50% accuracy in stability prediction from earlier models [23]. | Over 80% prediction accuracy achieved by GNoME via active learning [23]. | ~60% relative improvement in prediction accuracy. |

| Efficiency Gain | Standard computational screening methods [25]. | New ML method predicts material structure with five times the efficiency of the previous standard [25]. | 5x more efficient in structure prediction. |

| Layered Compounds | ~1,000 known graphene-like layered compounds [23]. | GNoME discovered 52,000 new layered compounds [23]. | 52x more potential candidates for electronics. |
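The ratios in the Result column of Table 2 follow directly from the counts quoted in the table. The short sketch below reproduces that arithmetic so readers can see which pair of numbers each ratio compares. It uses only figures already given in the text, and the helper names `fold_change` and `relative_improvement` are illustrative.

```python
# Counts quoted in Table 2 (taken from the text; nothing here is new data).
baseline_computational = 28_000   # materials found computationally over a decade
gnome_predicted = 2_200_000       # new crystals predicted by GNoME
gnome_stable = 380_000            # subset of those classified as stable
known_layered = 1_000             # known graphene-like layered compounds
gnome_layered = 52_000            # new layered compounds reported for GNoME

def fold_change(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old

def relative_improvement(new: float, old: float) -> float:
    """Return the gain of `new` over `old` as a fraction of `old`."""
    return (new - old) / old

predicted_fold = fold_change(gnome_predicted, baseline_computational)
stable_fold = fold_change(gnome_stable, baseline_computational)
layered_fold = fold_change(gnome_layered, known_layered)
accuracy_gain = relative_improvement(0.80, 0.50)

print(f"All predicted crystals vs. baseline: {predicted_fold:.1f}x")
print(f"Stable crystals only vs. baseline:   {stable_fold:.1f}x")
print(f"Layered compounds:                   {layered_fold:.0f}x")
print(f"Relative accuracy gain (50% -> 80%): {accuracy_gain:.0%}")
```

The roughly 80-fold figure compares all 2.2 million predictions with the 28,000 baseline. Restricting the comparison to the 380,000 stable structures gives a factor closer to 14.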

Experimental Protocols in AI-Driven Catalyst Design

The Closed-Loop Workflow of the Digital Catalysis Platform (DigCat)

The DigCat platform exemplifies a comprehensive, cloud-based AI workflow for autonomous catalyst design [24].

- AI-Driven Proposal: A researcher submits a query (e.g., "design a new catalyst"). The platform's AI agent, integrating large language models (LLMs) and material databases, generates initial candidate compositions and structures [24].

- Stability and Cost Screening: Candidates undergo automated stability analysis, including surface Pourbaix diagram and aqueous stability assessment, to filter for practical applicability [24].

- Activity Prediction: Machine learning regression models predict adsorption energies and activities. Candidates are screened using traditional thermodynamic volcano plot models and further evaluated with machine learning force fields [24].

- Performance Validation: Selected candidates are input into pH-dependent microkinetic models for reactions like oxygen reduction (ORR) or CO2 reduction (CO2RR). These models account for electric field-pH coupling, kinetic barriers, and solvation effects [24].

- Automated Synthesis and Testing: The most promising candidates are automatically synthesized by robotic platforms (e.g., at partner institutions like Tohoku University). High-throughput experimental devices collect performance and characterization data [24].

- Closed-Loop Feedback: Experimental results are fed back into the DigCat platform. The AI agent uses this data to update its machine learning and physical models, improving the accuracy of subsequent design cycles [24].
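The screening stages in this workflow form a funnel: candidates are first filtered for stability and the survivors are then ranked by predicted activity. The sketch below illustrates that idea only. It is not the DigCat implementation. The `Candidate` class, the single-number stability descriptor, the volcano apex, the threshold, and every numeric value are hypothetical simplifications.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    dissolution_potential_v: float  # hypothetical stability descriptor (V)
    adsorption_energy_ev: float     # hypothetical predicted adsorption energy (eV)

OPERATING_POTENTIAL_V = 0.9   # assumed operating potential for the target reaction
OPTIMAL_ADSORPTION_EV = 0.0   # assumed position of the volcano apex

def is_stable(c: Candidate) -> bool:
    """Crude stand-in for a Pourbaix-style check: stable if the material
    is predicted to dissolve only above the operating potential."""
    return c.dissolution_potential_v > OPERATING_POTENTIAL_V

def volcano_activity(c: Candidate) -> float:
    """Sabatier-style score: highest (zero) at the apex, falling off
    linearly as binding becomes too strong or too weak."""
    return -abs(c.adsorption_energy_ev - OPTIMAL_ADSORPTION_EV)

pool = [
    Candidate("cand_A", 1.10, -0.30),
    Candidate("cand_B", 0.60, 0.02),   # near the apex but fails the stability filter
    Candidate("cand_C", 1.00, 0.10),
    Candidate("cand_D", 1.20, 0.45),
]

stable = [c for c in pool if is_stable(c)]
shortlist = sorted(stable, key=volcano_activity, reverse=True)[:2]
print([c.name for c in shortlist])  # ['cand_C', 'cand_A']
```

Applying the stability filter before the activity ranking keeps a highly active but unstable candidate such as `cand_B` from reaching the more expensive microkinetic and experimental stages.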

Generative Design of Surfaces and Adsorbates

Generative models create novel catalyst structures by learning from existing data.

- Data Collection: A dataset of crystal structures, surfaces, and/or adsorption geometries is compiled, either from public databases (e.g., Materials Project) or via custom global structure searches [22] [26].

- Model Training: A generative model, such as a Crystal Diffusion Variational Autoencoder (CDVAE) or a diffusion model, is trained on the dataset. The model learns the underlying probability distribution of stable atomic arrangements [22].

- Conditional Generation: The trained model generates new structures. This can be guided by desired properties (e.g., low adsorption energy for a specific intermediate) by conditioning the generation process on these properties [22].

- Stability and Activity Assessment: The generated structures are evaluated for stability using DFT calculations or MLIPs. Their catalytic activity is predicted via surrogate models or detailed microkinetic modeling [22].

- Experimental Validation: Promising generated candidates are synthesized and tested. For example, a study using a CDVAE and an optimization algorithm generated 250,000 candidates, leading to the synthesis and validation of five new alloy catalysts for CO2 reduction, two with Faradaic efficiencies near 90%.

Workflow Visualization

The following diagram illustrates the integrated, closed-loop nature of a modern AI-driven catalyst discovery platform.

The Scientist's Toolkit: Essential Research Reagents and Solutions

This table details key computational and experimental tools essential for conducting research in AI-driven catalyst design.

Table 3: Essential Research Reagents and Solutions for AI-Driven Catalyst Design

| Tool / Solution | Function | Application in Workflow |

|---|---|---|

| Density Functional Theory (DFT) | Provides high-fidelity calculations of electronic structure, energies, and reaction barriers [22] [3]. | Generating training data for ML models; final validation of AI-predicted candidates. |

| Machine Learning Interatomic Potentials (MLIPs) | Surrogate models that approach DFT accuracy at a fraction of the computational cost [22]. | Accelerating molecular dynamics simulations and structure relaxation during virtual screening. |

| Generative Models (VAEs, GANs, Diffusion) | AI models that create novel molecular and crystal structures from learned data distributions [22]. | Inverse design of new catalyst compositions and surface structures with targeted properties. |

| Graph Neural Networks (GNNs) | ML architectures that operate on graph data, naturally representing atomic connectivity [23]. | Predicting material stability and functional properties directly from crystal structure (e.g., in GNoME). |

| Microkinetic Modeling Software | Simulates the detailed kinetics of catalytic reactions over a surface, accounting for all elementary steps [24]. | Predicting the overall activity and selectivity of candidate catalysts under realistic conditions. |

| Automated Synthesis Robotics | Robotic platforms that execute material synthesis protocols without human intervention [24] [2]. | High-throughput synthesis of AI-predicted catalyst candidates for experimental validation. |

| High-Throughput Characterization | Automated equipment for rapid performance testing (e.g., activity, selectivity) and structural analysis [24] [2]. | Providing rapid experimental feedback to close the AI design loop. |

The field of molecular catalysis is undergoing a profound transformation, moving from a discipline historically guided by chemists' intuition and trial-and-error to one increasingly shaped by data-driven artificial intelligence (AI) approaches [1]. This shift is particularly critical for navigating complex reaction conditions, where multidimensional variables (including temperature, pressure, catalyst composition, and solvent systems) interact in ways that often challenge conventional optimization strategies [14]. The comparison between traditional and AI-driven methodologies represents more than a simple technological upgrade; it constitutes a fundamental reimagining of the catalyst development workflow with substantial implications for efficiency, cost, and discovery rates across chemical industries, including pharmaceutical development [27].

Traditional catalyst development has relied heavily on established principles such as linear free energy relationships (LFERs), including the Brønsted catalysis law and Hammett equation, which provided elegant but simplified structure-activity relationships based on limited datasets [1]. While these approaches have yielded significant successes over decades of research, they struggle to address the intricate interplay of factors in complex catalytic systems. In contrast, AI-driven approaches leverage machine learning (ML) to identify patterns and predict outcomes directly from high-dimensional, complex datasets, enabling researchers to explore vast chemical spaces with unprecedented efficiency and precision [1] [14]. This comparative analysis examines the performance, methodologies, and practical implementation of these competing paradigms, providing researchers with objective data to inform their experimental strategies.

Comparative Performance: Traditional vs. AI-Driven Catalyst Development

Quantitative Performance Metrics

Rigorous evaluation of both traditional and AI-driven approaches reveals significant differences in efficiency, accuracy, and resource allocation. The table below summarizes key performance indicators derived from recent research implementations:

Table 1: Performance Comparison of Traditional vs. AI-Driven Catalyst Development

| Performance Metric | Traditional Approach | AI-Driven Approach | Experimental Context |

|---|---|---|---|

| Screening Efficiency | 10-100 candidates/month [14] | 10,000+ candidates/in silico cycle [14] | High-throughput catalyst screening |

| Prediction Accuracy | ~60-70% for novel systems [1] | 85-95% for target properties [14] | Catalyst activity/selectivity prediction |

| Optimization Cycle Time | 3-6 months per development cycle [27] | 1-4 weeks per iteration [1] | Reaction condition optimization |

| Resource Utilization | High (specialized equipment, materials) [28] | Primarily computational [14] | Catalyst development cost analysis |

| Success Rate for Novel Discovery | <5% for de novo design [1] | 12-25% for validated discoveries [14] | Experimental validation of predictions |

Experimental Validation Data

The theoretical advantages of AI-driven approaches are substantiated by experimental data across diverse catalytic applications. In retrosynthetic planning, AI tools like ASKCOS and AiZynthFinder have demonstrated the capability to design viable synthetic routes for complex molecules with success rates exceeding 80% in experimental validation studies [1]. For catalyst design specifically, ML models predicting catalytic activity have achieved coefficients of determination (R²) of 0.85-0.95 against experimental validation data, significantly outperforming traditional descriptor-based models that typically achieve R² values of 0.60-0.75 [14].
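For readers comparing R² values like those quoted above, the sketch below shows how the statistic is computed from paired experimental and predicted values: one minus the ratio of the residual sum of squares to the total sum of squares. The activity values are invented for illustration and do not come from the cited studies.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot

# Hypothetical measured vs. model-predicted catalytic activities (arbitrary units).
observed = [10.0, 20.0, 30.0, 40.0, 50.0]
predicted = [12.0, 18.0, 33.0, 39.0, 48.0]

r2 = r_squared(observed, predicted)
print(f"R^2 = {r2:.3f}")  # R^2 = 0.978

# A model that always predicts the mean of the observations scores exactly 0.
r2_mean_model = r_squared(observed, [30.0] * len(observed))
```

Because R² is measured against a predict-the-mean baseline, it should be evaluated on held-out experimental data. A model can score well on its training set and still fall toward zero, or below, on new catalysts.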

In autonomous experimentation systems integrating AI with robotics, researchers have demonstrated the optimization of complex reaction conditions in hours or days—a process that traditionally required months. One documented study achieved a 15% yield improvement for a challenging catalytic transformation within 72 hours of autonomous optimization, compared to an average of 4 months using traditional sequential optimization approaches [1]. These performance differentials become particularly pronounced for systems with high-dimensional parameter spaces, where AI methods can simultaneously optimize 5-10 variables compared to the practical limit of 2-3 variables using traditional one-variable-at-a-time (OVAT) approaches [14].
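The limitation of OVAT optimization when variables interact can be seen on a toy example. The sketch below assumes a made-up two-factor response surface with a strong interaction term and coded factor levels from 0 to 10. The exhaustive joint search is used only as a simple stand-in for a model-guided optimizer that varies several factors at once, not as a description of any cited system.

```python
from itertools import product

LEVELS = range(11)  # coded factor levels 0..10 (hypothetical)

def response(x: int, y: int) -> float:
    """Toy response surface with a strong x-y interaction; peak of 100 at (5, 5)."""
    return 100.0 - 10.0 * (x - y) ** 2 - (x + y - 10) ** 2

def ovat(start=(0, 0)):
    """One OVAT pass: tune x at fixed y, then tune y at the chosen x."""
    x, y = start
    x = max(LEVELS, key=lambda v: response(v, y))
    y = max(LEVELS, key=lambda v: response(x, v))
    return (x, y), response(x, y)

def joint_search():
    """Vary both factors together (exhaustive grid as a simple stand-in)."""
    best = max(product(LEVELS, LEVELS), key=lambda p: response(*p))
    return best, response(*best)

ovat_point, ovat_value = ovat()
joint_point, joint_value = joint_search()
print(f"OVAT:  best at {ovat_point}, response {ovat_value}")    # (1, 2), 41.0
print(f"Joint: best at {joint_point}, response {joint_value}")  # (5, 5), 100.0
```

The OVAT pass stalls on the flank of the ridge because the best value of each factor depends on the other. The exhaustive grid costs 121 evaluations here, so joint optimization over 5-10 variables is only practical when a surrogate model chooses which experiments to run.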

Methodological Comparison: Experimental Protocols and Workflows

Traditional Catalyst Development Workflow

Traditional catalyst development follows a linear, hypothesis-driven approach grounded in chemical intuition and established principles. The typical workflow consists of the following standardized protocol:

- Literature Review and Mechanism Analysis: Researchers conduct extensive manual review of published literature on analogous catalytic systems, applying established linear free-energy relationships and mechanistic principles to formulate initial hypotheses [1].

- Catalyst Library Design: Based on periodic table trends, known structure-activity relationships, and ligand effects, a limited library of 10-50 candidate catalysts is designed, often focusing on structural analogs of known performers [28].

- Sequential Experimental Testing: Candidates are synthesized and tested sequentially using one-variable-at-a-time (OVAT) methodology, where reaction conditions (temperature, solvent, concentration) are varied individually while keeping other parameters constant [28] [29].

- Product Analysis and Characterization: Reaction products are characterized using standardized analytical techniques (GC-MS, HPLC, NMR) to determine key performance metrics (yield, conversion, selectivity) [29].

- Mechanistic Studies: For promising candidates, additional kinetic studies, isotopic labeling, and spectroscopic analysis are conducted to elucidate reaction mechanisms and deactivation pathways [28].

- Iterative Optimization: Based on results, slight structural modifications are made over multiple cycles (3-10 iterations), with each cycle requiring complete re-synthesis and re-testing [1].

This traditional workflow, while methodologically sound, inherently limits the exploration of chemical space due to practical constraints on time and resources [14].

AI-Driven Catalyst Development Workflow

AI-driven catalyst development employs an integrated, data-driven workflow that fundamentally reengineers the discovery process. The standardized protocol encompasses:

- Data Curation and Feature Engineering: Existing experimental data (literature, in-house databases) is aggregated and standardized. Molecular descriptors are computed, including electronic, structural, and topological features, with feature selection algorithms identifying the most relevant parameters [14].

- Model Selection and Training: Appropriate machine learning algorithms (random forest, neural networks, Gaussian process regression) are selected based on dataset size and complexity. The model is trained on historical data to learn complex relationships between catalyst features and performance metrics [14].

- In Silico Catalyst Screening: The trained model predicts performance for thousands of virtual candidates in the chemical space, prioritizing the most promising candidates for experimental validation [1] [14].

- Automated Experimental Validation: Robotic high-throughput systems synthesize and test top-predicted candidates (10-100 compounds) in parallel, generating standardized performance data [1].

- Active Learning Loop: Experimental results are fed back to refine the AI model, which then suggests the next round of candidates or optimal conditions, creating a continuous improvement cycle [14].

- Mechanistic Interpretation: Advanced interpretation techniques (SHAP analysis, sensitivity analysis) identify which molecular features most strongly influence performance, providing mechanistic insights [14].

This iterative, data-driven workflow enables more efficient exploration of chemical space and faster convergence on optimal solutions [1].
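The train, screen, and prioritize steps of this workflow can be illustrated with a deliberately small example. The sketch below uses a k-nearest-neighbour regressor as a simple stand-in for the random forest, neural network, or Gaussian process models named above. The descriptor vectors, yields, and candidate names are hypothetical.

```python
import math

# Hypothetical historical data: (descriptor vector, measured yield in %).
historical = [
    ((0.10, 0.20), 20.0),
    ((0.40, 0.40), 55.0),
    ((0.50, 0.60), 80.0),
    ((0.90, 0.80), 35.0),
]

# Hypothetical virtual library: candidate name -> descriptor vector.
virtual_library = {
    "V1": (0.45, 0.50),
    "V2": (0.15, 0.25),
    "V3": (0.85, 0.75),
}

def predict_yield(descriptors, k=2):
    """Average the yields of the k nearest historical catalysts."""
    nearest = sorted(historical, key=lambda row: math.dist(row[0], descriptors))[:k]
    return sum(y for _, y in nearest) / k

def screen(library, top_n=2):
    """Rank virtual candidates by predicted yield and return the top_n names."""
    ranked = sorted(library, key=lambda name: predict_yield(library[name]), reverse=True)
    return ranked[:top_n]

shortlist = screen(virtual_library)
print(shortlist)  # ['V1', 'V3']
```

Only the shortlisted candidates go on to automated experimental validation. Their measured yields would then be appended to `historical` before the next screening round, which is the active learning loop in miniature.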

Workflow Visualization

The fundamental differences between these approaches are visualized in the following workflow diagrams:

Diagram 1: Traditional Catalyst Development Workflow

Diagram 2: AI-Driven Catalyst Development Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Implementation of both traditional and AI-driven approaches requires specific research tools and platforms. The following table details essential solutions currently employed in the field:

Table 2: Essential Research Reagent Solutions for Catalyst Development

| Tool/Category | Specific Examples | Function & Application | Compatibility |

|---|---|---|---|

| Retrosynthesis Software | ASKCOS [1], AiZynthFinder [1], Chematica [1] | Computer-aided synthesis planning; de novo route design for catalyst precursors & target molecules | Both approaches |

| Descriptor Calculation | RDKit [1], Dragon, COMSI | Computes molecular features (electronic, topological) as inputs for QSAR models & AI algorithms | Primarily AI-driven |

| Machine Learning Platforms | Scikit-learn [14], TensorFlow [14], PyCXTM | Builds predictive models for catalyst properties & reaction outcomes | Primarily AI-driven |

| High-Throughput Experimentation | Automated liquid handlers, flow chemistry reactors [1], parallel pressure reactors | Accelerates experimental validation of AI predictions; enables rapid data generation | Primarily AI-driven |

| Catalyst Libraries | Commercial metal salt collections, ligand libraries (e.g., Solvias, Sigma-Aldrich) | Provides physical compounds for traditional screening & initial training data for AI | Both approaches |

| Data Management Systems | Electronic Lab Notebooks (ELNs), Chemical Information Systems (e.g., Reaxys, SciFinder) | Manages experimental data, literature knowledge, and historical results | Both approaches |

The comparative analysis reveals that AI-driven and traditional approaches to catalyst development offer complementary strengths rather than representing mutually exclusive alternatives. AI methodologies demonstrate clear superiority in screening efficiency, optimization speed, and handling high-dimensional parameter spaces [1] [14]. However, traditional approaches provide essential mechanistic understanding, validate AI predictions, and offer intuitive guidance that remains valuable for interpreting complex chemical phenomena [28] [1].

The emerging paradigm for optimizing complex reaction conditions involves strategic integration of both approaches, leveraging AI for rapid exploration and initial optimization while employing traditional methods for mechanistic verification and refinement of top-performing candidates [1] [14]. This hybrid model represents the most promising path forward, combining the scale and efficiency of data-driven discovery with the deep chemical insight of traditional catalysis research. As AI tools continue to evolve—particularly in areas of interpretability and integration with automated experimental platforms—this synergistic approach is poised to dramatically accelerate the development of advanced catalytic systems for pharmaceutical synthesis and other chemical applications [1] [14].

The field of chemical research is undergoing a profound transformation, shifting from traditional, labor-intensive trial-and-error approaches toward intelligent, autonomous discovery engines. Autonomous laboratories, which integrate robotic platforms with artificial intelligence (AI), are closing the traditional "predict-make-measure" discovery loop, enabling accelerated exploration of chemical space with minimal human intervention [30]. These self-driving laboratories represent the convergence of several advanced technologies: chemical science databases, large-scale AI models, automated experimental platforms, and integrated management systems that work synergistically to create a seamless research environment [30]. In catalyst development—a field historically constrained by extensive experimentation—this paradigm shift is particularly impactful, offering the potential to dramatically compress development timelines from years to weeks while optimizing for performance, cost, and sustainability [9].

This comparison guide examines the fundamental differences between traditional and AI-driven catalyst development approaches, providing researchers and drug development professionals with a comprehensive analysis of performance metrics, experimental methodologies, and the essential technological toolkit required for modern autonomous research. By objectively comparing these paradigms through quantitative data and detailed protocols, we aim to illuminate both the transformative potential and current limitations of autonomous workflow systems in chemical research.

Performance Comparison: Traditional vs. AI-Driven Catalyst Development

The quantitative advantages of AI-driven autonomous workflows become evident when comparing key performance metrics across multiple dimensions of catalyst development. The following table synthesizes experimental data from recent implementations of closed-loop systems.

Table 1: Performance Comparison of Catalyst Development Approaches

| Performance Metric | Traditional Approach | AI-Driven Autonomous Approach | Experimental Context |

|---|---|---|---|

| Development Timeline | Months to years [9] | Weeks to months [9] | Oxidative Coupling of Methane (OCM) catalyst development [9] |

| Compounds Synthesized per Candidate | Thousands [31] | Hundreds [31] | CDK7 inhibitor program [31] |

| Design Cycle Efficiency | Baseline | ~70% faster cycles [31] | Exscientia's small-molecule design platform [31] |

| Knowledge Extraction Accuracy | Manual literature review | 92% accuracy [9] | Qwen2:7b LLM on OCM catalyst data [9] |

| Success Rate | Industry standard | 10x fewer compounds synthesized [31] | AI-designed clinical candidates [31] |

| Data Utilization | Limited, unstructured | Multimodal, structured, real-time [30] | Autonomous laboratory platforms [30] |

The performance differential stems from fundamental operational distinctions. Traditional catalyst development relies heavily on researcher intuition and sequential experimentation, where each iteration requires manual intervention, data interpretation, and hypothesis generation [9]. In contrast, autonomous systems implement continuous design-make-test-analyze cycles where AI models rapidly propose new experiments based on all accumulated data, robotic platforms execute these experiments with high precision and reproducibility, and the results immediately inform subsequent cycles [30]. This closed-loop operation not only accelerates the empirical screening process but also enables more efficient exploration of complex parameter spaces through Bayesian optimization and other machine learning algorithms that strategically prioritize the most promising experimental directions [30] [9].

Experimental Protocols in Autonomous Catalyst Development

Catal-GPT Workflow for OCM Catalyst Optimization

A representative experimental protocol for AI-driven catalyst development is demonstrated by the Catal-GPT system for oxidative coupling of methane (OCM) catalysts, which employs a structured, iterative workflow [9]:

Data Curation and Knowledge Base Construction: The process begins with assembling a comprehensive dataset of OCM catalyst formulations, preparation parameters, and performance metrics extracted from scientific literature. In the Catal-GPT implementation, this involved collecting data on catalyst synthesis, characterization, and application, followed by data cleaning and encoding to fit the model's input format. Conflicting parameters for the same catalytic system were resolved by prioritizing preparation parameters from authoritative publications with the highest reported frequency [9].

Natural Language Interface and Query Processing: Researchers interact with the system through natural language queries (e.g., "Suggest a La2O3-based catalyst with high C2 selectivity"). The large language model (qwen2:7b in this case) processes these queries by extracting relevant knowledge from the structured database and generating specific formulation suggestions [9].

Hypothesis Generation and Experimental Planning: The AI model proposes complete catalyst preparation processes, including specific chemical reagents, concentrations, calcination temperatures, and other critical parameters. For example, the system might recommend a La2O3/CaO catalyst with precise molar ratios and calcination at 800°C based on optimized patterns learned from the training data [9].

Robotic Execution and Synthesis: Automated robotic platforms execute the suggested synthesis protocols. While the Catal-GPT study focused on the AI component, integrated platforms like the University of Science and Technology of China's autonomous laboratory employ robotic systems for actual catalyst preparation, handling powder processing, mixing, heating, and other synthesis steps with minimal human intervention [30].

Characterization and Performance Testing: The synthesized catalysts undergo automated characterization and testing. For OCM catalysts, this typically includes catalytic testing in continuous-flow reactors with online gas chromatography to measure methane conversion, C2+ selectivity, and yield under standardized conditions [9].

Data Integration and Model Refinement: Experimental results are fed back into the database, creating a learning loop where the AI model continuously refines its predictions based on empirical evidence. This feedback learning module allows the system to progressively improve its recommendation accuracy over multiple iterations [9].
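The conflict-resolution rule from the data curation step can be sketched as a simple frequency vote. This is only an illustration under stated assumptions: the record layout, the parameter name `calcination_temp_C`, and the example values are hypothetical and are not taken from the Catal-GPT dataset [9].

```python
from collections import Counter

def resolve_conflicts(records):
    """Keep, for each (catalyst, parameter) pair, the most frequently reported value.

    `records` is an iterable of (catalyst, parameter, value) tuples, one per
    literature report. Ties go to the value that was encountered first.
    """
    votes = {}
    for catalyst, parameter, value in records:
        votes.setdefault((catalyst, parameter), Counter())[value] += 1
    return {key: counter.most_common(1)[0][0] for key, counter in votes.items()}

# Hypothetical literature reports (illustrative values only).
reports = [
    ("La2O3/CaO", "calcination_temp_C", 800),
    ("La2O3/CaO", "calcination_temp_C", 750),
    ("La2O3/CaO", "calcination_temp_C", 800),
    ("La2O3/CaO", "calcination_time_h", 4),
]

resolved = resolve_conflicts(reports)
```

A production pipeline would likely weight each report by source quality as well as by frequency; this sketch covers only the frequency part of the rule.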

Autonomous Workflow Orchestration

The execution of autonomous experiments relies on formal workflow models that ensure robust operation. These workflows are typically represented as state machines $F = (S, s_0, T, \delta)$, where $S$ is the set of possible states, $s_0$ the start state, $T$ the set of terminal states, and $\delta$ the transition function between states based on observations [32]. Advanced systems implement dynamic scheduling where task readiness is determined by $\mathrm{ready}(t) \iff \forall p \in \mathrm{parents}(t),\ \mathrm{status}(p) = \mathrm{Done}$, ensuring proper dependency management throughout the experimental sequence [32].
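Both formalisms above are small enough to sketch directly. The code below is a minimal illustration, not the implementation of any orchestration system cited in [32]; the state names, observation labels, and task names are assumptions chosen for the example.

```python
DONE = "Done"
PENDING = "Pending"

def run_state_machine(start, terminal, delta, observations):
    """Step a state machine F = (S, s0, T, delta) through a list of observations.

    `delta` maps (state, observation) pairs to the next state.
    """
    state = start
    for obs in observations:
        if state in terminal:
            break
        state = delta[(state, obs)]
    return state

def ready(task, parents, status):
    """ready(t) holds exactly when every parent of t has status Done."""
    return all(status.get(p) == DONE for p in parents.get(task, ()))

def schedule(parents):
    """Return an execution order that respects the dependency graph."""
    status = {task: PENDING for task in parents}
    order = []
    while len(order) < len(parents):
        runnable = [t for t in parents
                    if status[t] == PENDING and ready(t, parents, status)]
        if not runnable:
            raise ValueError("dependency cycle or unknown parent task")
        for task in runnable:          # tasks in one wave could run in parallel
            status[task] = DONE
            order.append(task)
    return order

# Hypothetical experiment states and transitions.
delta = {
    ("Idle", "start"): "Running",
    ("Running", "ok"): "Done",
    ("Running", "error"): "Failed",
}
final_state = run_state_machine("Idle", {"Done", "Failed"}, delta, ["start", "ok"])

# Hypothetical task graph: each task lists the tasks it depends on.
task_parents = {
    "synthesize": [],
    "calcine": ["synthesize"],
    "characterize": ["calcine"],
    "test": ["calcine"],
    "analyze": ["characterize", "test"],
}
execution_order = schedule(task_parents)
```

Here `characterize` and `test` become ready in the same pass once `calcine` is done, which is the point at which a real scheduler could dispatch them to different instruments concurrently.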

System Architecture and Workflow Visualization

The operational framework of an autonomous laboratory can be visualized as an integrated system where digital intelligence continuously directs physical experimentation. The following diagram illustrates the core closed-loop workflow and its key components.

Autonomous Laboratory Closed-Loop Workflow

This architecture creates a self-optimizing system where each component plays a critical role. The chemical science database serves as the foundational knowledge base, integrating multimodal data from proprietary databases, open-access platforms, and scientific literature, often structured using knowledge graphs for efficient retrieval [30]. Large-scale intelligent models, including both specialized algorithms like Bayesian optimization and genetic algorithms, and large language models like Catal-GPT, provide the cognitive engine for experimental planning and prediction [30] [9]. Automated experimental platforms physically execute the experiments through robotic systems that handle synthesis, formulation, and characterization tasks with precision and reproducibility [30]. Finally, management and decision systems orchestrate the entire workflow, dynamically allocating resources, managing experimental queues, and ensuring fault tolerance through checkpointing and automatic retries [32].
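The fault-tolerance behavior mentioned above (checkpointing and automatic retries) can be sketched in a few lines. This is an assumption-laden illustration rather than the mechanism of any cited system [32]: the JSON checkpoint format, the `run_with_checkpoint` helper, and the simulated dispenser fault are all hypothetical.

```python
import json
import os
import tempfile

def run_with_checkpoint(steps, checkpoint_path, max_retries=3):
    """Run named steps in order, retrying failures and recording progress.

    `steps` is a list of (name, callable) pairs. Completed step names are
    written to `checkpoint_path` so that a restarted run skips them.
    """
    completed = []
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as fh:
            completed = json.load(fh)
    for name, action in steps:
        if name in completed:
            continue                      # already done in an earlier run
        for attempt in range(1, max_retries + 1):
            try:
                action()
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise                 # give up after the last retry
        completed.append(name)
        with open(checkpoint_path, "w") as fh:
            json.dump(completed, fh)      # checkpoint after every step
    return completed

# Simulated step that fails once before succeeding.
calls = {"mix": 0}

def flaky_mix():
    calls["mix"] += 1
    if calls["mix"] < 2:
        raise RuntimeError("simulated dispenser fault")

checkpoint_file = os.path.join(tempfile.mkdtemp(), "campaign.json")
completed_steps = run_with_checkpoint(
    [("weigh", lambda: None), ("mix", flaky_mix)], checkpoint_file
)
```

Calling `run_with_checkpoint` again with the same checkpoint file skips both steps, which is the property that lets a long campaign resume after an interruption instead of starting over.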

The Researcher's Toolkit: Essential Technologies and Platforms

Implementing autonomous workflows requires a sophisticated technology stack that spans computational, robotic, and data infrastructure. The following table details key solutions and their functions in enabling closed-loop research.

Table 2: Essential Research Reagent Solutions for Autonomous Chemistry

| Technology Category | Representative Platforms/Tools | Primary Function | Application in Autonomous Workflows |
|---|---|---|---|
| AI/LLM Platforms | Catal-GPT [9], ChemCrow [9], ChemLLM [9] | Catalyst formulation design, knowledge extraction, reaction prediction | Generating executable catalyst preparation methods; extracting knowledge from literature |
| Workflow Orchestration | AlabOS [32], Globus Flows [32], Emerald [32] | Experimental workflow management, resource scheduling, fault tolerance | Orchestrating complex experimental sequences with dynamic resource allocation |
| Robotic Automation | Automated robotic platforms [30], AutomationStudio [31] | High-throughput synthesis, sample processing, characterization | Physically executing chemical synthesis and analysis with minimal human intervention |
| Data Management | Chemical science databases [30], SAC-KG framework [30] | Structured data storage, knowledge graph construction, data retrieval | Organizing multimodal chemical data for AI model training and retrieval |
| Simulation & Modeling | Density functional theory (DFT) [30], Molecular dynamics [9] | Theoretical calculation, property prediction, mechanism elucidation | Providing prior knowledge and validation for AI-generated hypotheses |
| Cloud Infrastructure | Amazon Web Services (AWS) [31], Cloud offloading frameworks [32] | Scalable computing, data storage, platform integration | Hosting AI models and providing computational resources for data analysis |

This technology stack enables the implementation of increasingly sophisticated autonomous systems. For example, the integration of Catal-GPT for catalyst design with AlabOS for workflow orchestration and robotic automation platforms creates a cohesive system that can autonomously propose, execute, and optimize catalyst development campaigns [9] [32]. The emergence of cloud-native platforms like Exscientia's AWS-integrated system demonstrates how scalable infrastructure supports the substantial computational demands of these workflows, particularly when incorporating foundation models and large-scale data analysis [31].

The comparison between traditional and AI-driven catalyst development approaches reveals a fundamental shift in research methodology with profound implications for efficiency, cost, and discovery potential. Autonomous workflows consistently demonstrate superior performance across multiple metrics, particularly in accelerating development timelines, reducing material requirements, and enabling more systematic exploration of complex chemical spaces [30] [9] [31]. However, these systems face ongoing challenges including data quality requirements, model generalization limitations, and the need for specialized expertise to implement and maintain the complex technology stack [9].

The trajectory of autonomous laboratories points toward increasingly integrated and intelligent systems. Future developments will likely focus on enhancing AI models through deeper integration with physical simulations like density functional theory, establishing standardized knowledge graphs for improved data extraction, and creating more sophisticated multi-agent architectures where specialized AI modules collaborate on complex research problems [9] [32]. As these technologies mature, autonomous workflows are poised to transition from specialized implementations to mainstream research infrastructure, potentially redistributing human researcher roles from routine experimentation to higher-level strategic planning, interpretation, and innovation [30] [33]. This evolution promises to not only accelerate catalyst development but fundamentally expand the boundaries of explorable chemical space, opening new frontiers in materials science, drug discovery, and sustainable energy technologies.

The field of oncology therapeutic development stands at a crossroads, marked by the convergence of traditional drug discovery methodologies with artificial intelligence technologies. Traditional drug discovery has long been characterized by timelines averaging 10-15 years, costs estimated at $1-2.6 billion, and low success rates, with only 4-7% of investigational new drug applications ultimately gaining approval [34]. This inefficient paradigm has created significant bottlenecks in delivering life-saving treatments to cancer patients, particularly those with rare or treatment-resistant malignancies. The integration of AI technologies is reshaping this landscape by accelerating discovery timelines, improving success rates, and enabling the targeting of previously undruggable pathways [34] [35].

The underlying transformation represents a fundamental shift from "experience-driven" to "data-driven" research paradigms, mirroring similar shifts across scientific disciplines. In catalyst design, for instance, machine learning has been shown to accelerate computational screening by a factor of up to 10⁵ compared to traditional density functional theory calculations [36]. Similarly, in oncology drug discovery, AI platforms are now compressing the initial discovery timeline, from target identification to clinical candidate selection, from 4-5 years to as little as 18-24 months [35]. This case study examines the quantitative performance differences between traditional and AI-driven approaches, analyzes the experimental methodologies enabling these advances, and explores the implications for the future of oncology therapeutics development.

Quantitative Comparison: Traditional vs. AI-Driven Drug Discovery

Table 1: Performance Metrics Comparison Between Traditional and AI-Driven Drug Discovery Approaches

| Performance Metric | Traditional Approach | AI-Driven Approach | Improvement Factor |
|---|---|---|---|
| Discovery Timeline | 4-5 years [35] | 18-24 months [35] | 60-70% reduction |
| Cost per Candidate | ~$1-2.6 billion [34] [37] | Significant reduction [35] | Not fully quantified |
| Phase 1 Success Rate | Industry standard: ~40-50% [38] | AI-designed molecules: 80-90% [38] | 2x improvement |
| Target Identification | Limited to known pathways | Hundreds to thousands of novel targets [37] | Order of magnitude increase |
| Molecular Screening | Months for limited libraries | Hours for billions of molecules [37] | 10⁵ times acceleration [36] |
| Clinical Trial Recruitment | Manual screening, slow enrollment | AI-matching, reduced screening time [38] | Significant efficiency gains |
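The timeline reduction in the first row of the table can be checked with simple arithmetic on the quoted ranges. The sketch below assumes that 4-5 years corresponds to 48-60 months; the helper name `percent_reduction` is introduced here for illustration only.

```python
def percent_reduction(before_months, after_months):
    """Percentage by which a timeline shrinks from `before` to `after`."""
    return 100.0 * (before_months - after_months) / before_months

traditional = (48, 60)   # 4-5 years, expressed in months
ai_driven = (18, 24)     # 18-24 months

# Pair short with short and long with long.
short_pair = percent_reduction(traditional[0], ai_driven[0])   # 48 -> 18
long_pair = percent_reduction(traditional[1], ai_driven[1])    # 60 -> 24

# Extreme pairings bound the possible range.
smallest = percent_reduction(traditional[0], ai_driven[1])     # 48 -> 24
largest = percent_reduction(traditional[1], ai_driven[0])      # 60 -> 18

print(f"matched pairs: {long_pair:.1f}% to {short_pair:.1f}%")
print(f"full range:    {smallest:.1f}% to {largest:.1f}%")
```

Matched endpoints give reductions of about 60% and 62.5%, and the most favorable pairing gives 70%, which is consistent with the 60-70% figure; the least favorable pairing (48 months to 24 months) gives 50%.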

Table 2: AI Platform Performance in Specific Oncology Drug Discovery Applications

| AI Platform/Technology | Application | Reported Performance | Clinical Stage |
|---|---|---|---|
| Exscientia - Centaur Chemist | OCD treatment (DSP-1181) | First AI-designed drug to reach trials [35] | Phase 1 |
| Insilico Medicine - Pharma.AI | TNIK inhibitor for IPF (ISM001-055) | Target identification to PCC in 18 months [35] | Phase IIa |
| Recursion - Phenotypic Screening | CCM disease (REC-994) | Identified novel compound for rare disease [35] | Phase II (terminated) |
| Schrödinger - Physics-Based AI | TYK2 inhibitor (NDI-034858) | $4B licensing deal with Takeda [35] | Phase III |
| BenevolentAI - Knowledge Graphs | Baricitinib for COVID-19 | AI-predicted drug repurposing [35] | Approved for COVID-19 |
| University of Chicago/Argonne - IDEAL | Ovarian cancer targets | Screening billions of molecules in hours [37] | Preclinical |