Artificial Neural Networks in Catalysis: Bridging Experimental Data and Theoretical Models for Accelerated Discovery


Abstract

This article provides a comprehensive review of Artificial Neural Networks (ANNs) as transformative tools in catalysis research. It is organized around four themes: foundations, methodology, troubleshooting and optimization, and validation. We explore the foundational principles of ANNs tailored for catalytic data, examining how they learn from both experimental datasets and theoretical simulations. We detail methodological workflows for constructing, training, and applying ANNs to core catalytic challenges such as predicting activity, selectivity, and optimal reaction conditions. We then address common pitfalls in model development, including data scarcity and overfitting, and offer practical optimization strategies. Finally, we critically evaluate model performance against traditional methods and theoretical benchmarks, discussing validation protocols and the path toward trustworthy, deployable models. This synthesis aims to equip researchers with a roadmap for leveraging ANNs to accelerate catalyst design and discovery.

Understanding ANNs in Catalysis: Core Concepts and Data Synergy Between Lab and Simulation

The Biological Neuron: Inspiration for Artificial Networks

The development of Artificial Neural Networks (ANNs) is fundamentally inspired by the structure and function of the biological brain. A biological neuron receives electrochemical signals from other neurons via dendrites. If the integrated input surpasses a certain threshold, the neuron fires an action potential down its axon, releasing neurotransmitters across synapses to subsequent neurons. This process of weighted signal integration and nonlinear response is the core concept abstracted into computational models.

An ANN transforms an input vector x into an output y through a series of layered, nonlinear transformations. Each artificial neuron computes a = f(w·x + b), where w is a weight vector, x is the input vector, b is a bias, and f is a nonlinear activation function (e.g., ReLU, sigmoid). Stacking layers of such neurons yields a network that can approximate complex functions: the universal approximation theorem states that even a single hidden layer of sufficient width, with a suitable nonlinear activation, can approximate any continuous function on a bounded domain to arbitrary accuracy.
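To make the neuron operation concrete, the sketch below implements a = f(w·x + b) in plain Python and stacks neurons into two layers. The weights, biases, inputs, and layer sizes are arbitrary illustrative values, not a trained model.

```python
import math

def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b, f=relu):
    """Single artificial neuron: a = f(w·x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return f(z)

def dense_layer(x, W, b, f=relu):
    """A layer is a set of neurons sharing the same input vector x.
    W holds one weight vector per neuron; b holds one bias per neuron."""
    return [neuron(x, w_row, b_j, f) for w_row, b_j in zip(W, b)]

# Illustrative network: 3 inputs -> 2 hidden neurons (ReLU) -> 1 linear output
x = [0.5, -1.0, 2.0]                      # e.g., three scaled catalyst descriptors
W1 = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
b1 = [0.0, 0.1]
W2 = [[1.5, -2.0]]
b2 = [0.25]

hidden = dense_layer(x, W1, b1, relu)
output = dense_layer(hidden, W2, b2, f=lambda z: z)  # identity activation for regression
print(hidden, output)
```

The second hidden neuron receives a negative pre-activation and is switched off by ReLU; this piecewise behavior is what lets stacked layers represent nonlinear functions.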

Training ANNs: The Optimization Engine

Networks learn by adjusting their parameters (weights and biases) to minimize a loss function L that quantifies prediction error. Backpropagation applies the chain rule to compute the gradient of L with respect to every parameter, and gradient descent (or a variant such as Adam) uses these gradients to update each parameter: w_new = w_old - η ∂L/∂w, where η is the learning rate. Repeating this update over many passes through the training data allows ANNs to capture intricate patterns.
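The update rule can be seen in a few lines. The sketch below fits a single linear neuron (identity activation) to synthetic data by full-batch gradient descent on the mean-squared-error loss. For a model this small the gradients are written out by hand rather than obtained by automatic backpropagation; the data, learning rate, and epoch count are illustrative assumptions.

```python
# Synthetic, noise-free data from y = 2x + 1
xs = [i / 10 for i in range(-20, 21)]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0      # initial parameters
eta = 0.1            # learning rate η
n = len(xs)

for epoch in range(2000):
    # Forward pass: residuals of the current predictions
    residuals = [(w * x + b) - y for x, y in zip(xs, ys)]
    loss = sum(r * r for r in residuals) / n
    # Gradients of L = (1/n) Σ (w x + b - y)^2
    dL_dw = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
    dL_db = 2.0 / n * sum(residuals)
    # Gradient-descent update: parameter_new = parameter_old - η * gradient
    w -= eta * dL_dw
    b -= eta * dL_db

print(f"w = {w:.4f}, b = {b:.4f}, last MSE = {loss:.2e}")
```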

ANN Paradigms for Catalysis Research

Within catalysis research, different ANN architectures serve distinct purposes:

- Multilayer Perceptrons (MLPs): For predicting catalytic activity, selectivity, or optimal reaction conditions from descriptor vectors.

- Convolutional Neural Networks (CNNs): For analyzing grid-structured data, such as microscope images of catalysts (2D) or spectra such as FTIR and Raman (1D).

- Graph Neural Networks (GNNs): For modeling molecular and catalyst structures as graphs, where atoms are nodes and bonds are edges, enabling property prediction from structure.

- Recurrent Neural Networks (RNNs)/Long Short-Term Memory (LSTM): For modeling time-series data from reaction kinetics or operando studies.

Quantitative Data: ANN Performance in Catalytic Property Prediction

Recent studies demonstrate the predictive power of ANNs in catalysis. The table below summarizes key performance metrics.

Table 1: Performance of ANN Models in Catalysis Prediction Tasks

| Catalytic Property | ANN Architecture | Dataset Size | Key Metric | Reported Performance | Reference |
|---|---|---|---|---|---|
| Methane Activation Energy | Dense Feedforward | ~15,000 DFT data points | Mean Absolute Error (MAE) | < 0.15 eV | Li et al., 2022 |
| CO2 Reduction Product Selectivity | Graph Neural Network | 500 experimental data points | Classification Accuracy | 89% | Zhong et al., 2023 |
| Optimal Photocatalyst Band Gap | Convolutional NN | ~8,000 materials | Root Mean Square Error (RMSE) | 0.32 eV | Chen et al., 2023 |
| Heterogeneous Catalytic Turnover Frequency (TOF) | Ensemble MLP | 2,340 entries | R² Score (test set) | 0.91 | Schmidt et al., 2024 |

Detailed Protocol: Training an ANN for Catalyst Screening

Objective: To train an MLP model for predicting the adsorption energy of key intermediates on alloy surfaces.

Materials & Computational Setup:

- Hardware: GPU cluster (e.g., NVIDIA V100).

- Software: Python 3.9+, PyTorch/TensorFlow, scikit-learn, pandas.

- Dataset: CSV file containing calculated descriptors (e.g., d-band center, coordination number, elemental properties) and target adsorption energies from DFT.

Procedure:

- Data Preprocessing: Load the dataset. Handle missing values (impute or remove). Split the data into training (70%), validation (15%), and test (15%) sets, then fit a StandardScaler on the training features only and apply it to all three sets, so that no information from the validation or test data leaks into training.

- Model Definition: Define an MLP with 3 hidden layers (e.g., 128, 64, 32 neurons) using PyTorch. Use ReLU activation for the hidden layers and a linear output. Initialize weights (e.g., He initialization).

- Training Loop: For up to 1000 epochs:
  - Forward pass: compute predicted adsorption energies.
  - Compute the loss (mean squared error).
  - Zero the gradients and perform the backward pass (backpropagation).
  - Update the parameters with the Adam optimizer (learning rate = 1e-3).
  - Every 50 epochs, evaluate on the validation set; stop early if the validation loss has not improved for 100 epochs.

- Evaluation: On the held-out test set, calculate MAE, RMSE, and R², and inspect a parity plot of predicted versus DFT adsorption energies.

- Deployment: Save the trained model. Deploy as a web service or script for rapid screening of new candidate materials.
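Two parts of this protocol are easy to get subtly wrong: fitting the scaler on rows that later appear in the validation or test sets (information leakage), and the metric definitions. The dependency-free sketch below shows a shuffled 70/15/15 split, a standard scaler fitted on the training rows only, and MAE, RMSE, and R². The function names are hypothetical helpers; in practice scikit-learn's `train_test_split`, `StandardScaler`, and `sklearn.metrics` provide equivalent functionality.

```python
import math
import random

def split_70_15_15(rows, seed=0):
    """Shuffle rows and split them into train/validation/test (70/15/15)."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = len(rows) * 70 // 100
    n_val = len(rows) * 15 // 100
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]

def fit_standard_scaler(X_train):
    """Per-feature mean and standard deviation from the TRAINING rows only."""
    n_feat = len(X_train[0])
    means = [sum(r[j] for r in X_train) / len(X_train) for j in range(n_feat)]
    stds = []
    for j in range(n_feat):
        var = sum((r[j] - means[j]) ** 2 for r in X_train) / len(X_train)
        stds.append(math.sqrt(var) or 1.0)  # guard against constant features
    return means, stds

def transform(X, means, stds):
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]

def regression_metrics(y_true, y_pred):
    """Return (MAE, RMSE, R²)."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return mae, rmse, 1.0 - ss_res / ss_tot

# Usage with hypothetical (descriptor vector, adsorption energy) pairs
data = [([0.1 * i, 2.0 - 0.05 * i], -1.0 + 0.02 * i) for i in range(100)]
train, val, test = split_70_15_15(data)
means, stds = fit_standard_scaler([x for x, _ in train])
X_test = transform([x for x, _ in test], means, stds)  # training statistics reused
```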

The Scientist's Toolkit: Key Reagents & Materials for ANN-Driven Catalysis Research

Table 2: Essential Research Toolkit for ANN-Catalysis Integration

| Item / Solution | Function in Research |
|---|---|
| High-Throughput Experimentation (HTE) Rig | Generates large, consistent datasets of catalytic performance (yield, conversion) required for training robust ANNs. |
| Density Functional Theory (DFT) Code | Generates quantum-mechanical data (energies, descriptors) to train ANNs where experimental data is scarce. |
| Crystal Structure Databases (e.g., ICSD, COD) | Provides atomic coordinates for known materials, the foundational input for structure-based GNN/CNN models. |
| Python Scientific Stack (NumPy, pandas) | Enables data manipulation, cleaning, and feature engineering from raw experimental/theoretical data. |
| Deep Learning Framework (PyTorch/TensorFlow) | Provides the flexible environment to define, train, and optimize ANN architectures for catalytic problems. |
| Automated Hyperparameter Optimization Library (Optuna) | Systematically searches for the best ANN model parameters (layers, learning rate) to maximize predictive accuracy. |

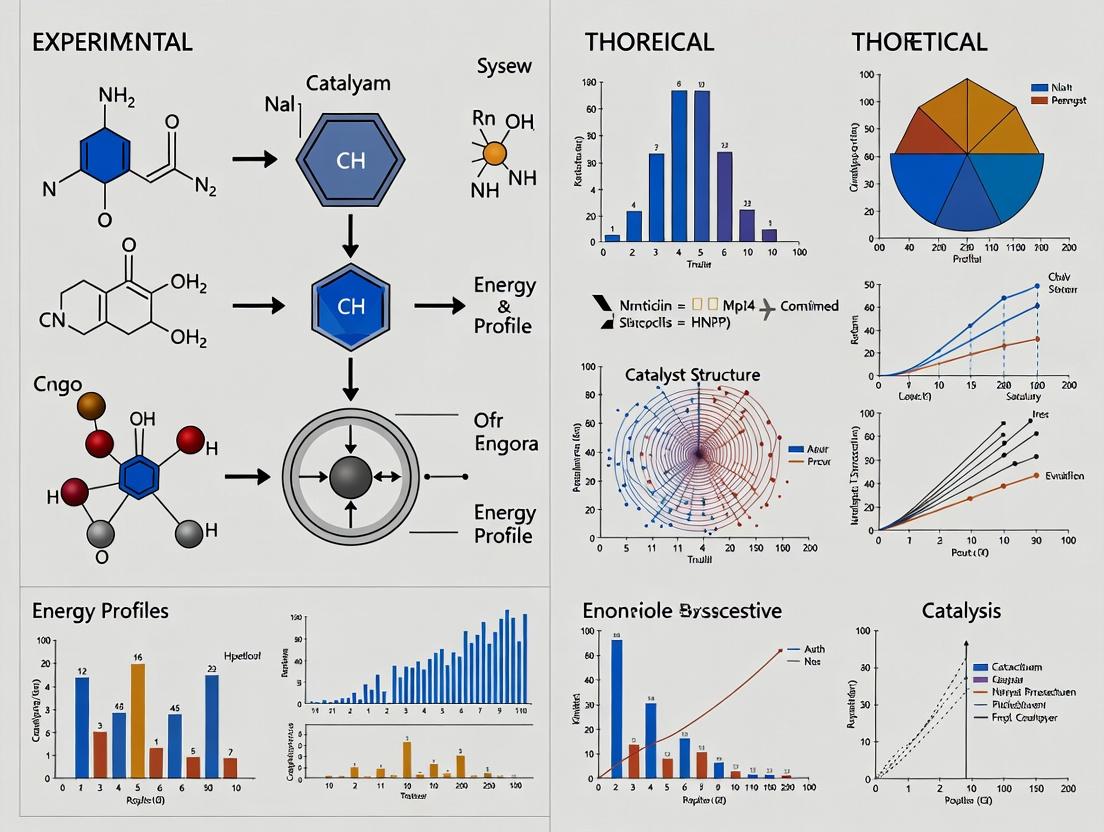

Visualizing the ANN-Catalysis Research Workflow

Diagram Title: ANN-Catalysis Research Cycle

Diagram Title: GNN for Adsorption Energy Prediction

ANNs have evolved from a simplified model of biological computation into a foundational tool of modern catalysis research. As high-dimensional function approximators, they create data-driven links between catalyst descriptors (from theory or experiment) and performance metrics. The future of the field lies in a tightly integrated loop: ANNs guide high-value experiments and computations, whose results continuously refine and expand the training data, leading to more accurate, generalizable, and ultimately predictive models for next-generation catalyst design. This synergy between experiment and theory is the central thesis of ANN-driven catalysis research.

The design of novel catalysts is a multidimensional optimization problem constrained by scaling relations, the linear correlations between the adsorption energies of related intermediates that limit how independently each reaction step can be tuned. Integrating experimental and Density Functional Theory (DFT) datasets within an Artificial Neural Network (ANN) framework offers a new route through this problem. This guide outlines the systematic construction, curation, and fusion of these complementary data streams to train robust predictive models that accelerate catalyst discovery from both the experimental and the theoretical side.

The Dual-Stream Data Universe

Catalytic data originates from two primary, complementary sources: controlled laboratory experiments and quantum-mechanical simulations.

Table 1: Comparison of Experimental and DFT Data Streams

| Aspect | Experimental Measurements | Theoretical (DFT) Datasets |
|---|---|---|
| Primary Output | Macroscopic observables (e.g., rate, yield, TOF, selectivity). | Electronic/atomic-scale descriptors (e.g., adsorption energies, reaction barriers, d-band center). |
| Throughput | Moderate to high (via high-throughput reactors). | Very high (automated computational workflows). |
| System Complexity | Real, complex systems (effects of solvents, impurities, defects). | Idealized, clean models (single crystal facets, perfect sites). |
| Key Cost | Time, materials, and characterization. | Computational resources (CPU/GPU hours). |
| Uncertainty Source | Measurement error, reactor hydrodynamics, sample heterogeneity. | Functional approximation error, convergence criteria, model geometry. |

Detailed Experimental Protocols for Key Measurements

Protocol: Steady-State Catalytic Rate Measurement in a Plug-Flow Reactor (PFR)

Objective: Determine turnover frequency (TOF) and activation energy (Ea).

- Catalyst Preparation: Synthesize the catalyst (e.g., supported metal nanoparticles) via incipient wetness impregnation.

- Reactor Setup: Load a known mass (e.g., 50 mg) of catalyst, diluted with inert silica to maintain bed geometry and minimize temperature gradients, into a stainless-steel tubular PFR. Reduce in situ in H2 at a specified temperature (e.g., 400°C for 2 h).

- Conditioning: Flow the reactant mixture (e.g., CO:H2:He = 1:2:7) at a fixed total flow rate (e.g., 20 sccm) under differential conversion conditions (<10%) for 1 hour to establish steady state.

- Kinetic Measurement: Measure product composition via online Gas Chromatography (GC) or Mass Spectrometry (MS). Vary temperature (e.g., 200-250°C) while holding partial pressures constant. Calculate rate per gram of catalyst.

- Active Site Counting: Perform ex situ H2 chemisorption or CO pulse titration on a separate, identically prepared sample to determine active site density. Convert rate to TOF (s⁻¹).

- Data Processing: Plot ln(TOF) vs. 1/T (Arrhenius plot). The slope gives -Ea/R.
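The Arrhenius analysis amounts to a linear least-squares fit of ln(TOF) against 1/T, with Ea = -slope × R. The sketch below uses synthetic TOF values generated from an assumed Ea of 80 kJ/mol and an assumed pre-exponential factor, only to show that the fit recovers them; `arrhenius_fit` is a hypothetical helper name.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1

def arrhenius_fit(temps_c, tofs):
    """Least-squares fit of ln(TOF) = ln(A) - Ea/(R T).
    temps_c: temperatures in °C; tofs: turnover frequencies in s^-1.
    Returns (Ea in kJ/mol, pre-exponential factor A in s^-1)."""
    xs = [1.0 / (t + 273.15) for t in temps_c]   # 1/T in K^-1
    ys = [math.log(tof) for tof in tofs]          # ln(TOF)
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    intercept = y_mean - slope * x_mean
    return -slope * R / 1000.0, math.exp(intercept)

# Synthetic data: Ea = 80 kJ/mol, A = 1e6 s^-1 (assumed values), 200-250 °C
temps = [200, 210, 220, 230, 240, 250]
tofs = [1e6 * math.exp(-80_000 / (R * (t + 273.15))) for t in temps]
ea, A = arrhenius_fit(temps, tofs)
print(f"Ea = {ea:.1f} kJ/mol, A = {A:.2e} s^-1")
```

With real data the residuals of this fit are worth inspecting: curvature in the Arrhenius plot often signals mass-transfer limitations or a change in mechanism over the temperature window.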

Protocol: In Situ X-ray Absorption Spectroscopy (XAS) Measurement

Objective: Determine metal oxidation state and local coordination under reaction conditions.

- Sample Cell: Load powdered catalyst into a dedicated in situ capillary cell or fixed-bed reactor with appropriate gas feed and heating.

- Alignment: Align the cell in the synchrotron X-ray beam. Calibrate energy using a metal foil (e.g., Pt foil for Pt L3-edge).

- Data Collection: Collect X-ray Absorption Near Edge Structure (XANES) and Extended X-ray Absorption Fine Structure (EXAFS) spectra:

  - At room temperature in inert gas.

  - During reduction in H2 at the target temperature.

  - Under the reaction gas mixture at operating temperature.

- Analysis: Fit XANES region with linear combination of reference spectra to quantify oxidation states. Fit EXAFS region to extract coordination numbers and bond distances.
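For the XANES step, the simplest case is a two-component linear combination fit: the measured spectrum is modeled as x·μ_A + (1 - x)·μ_B, where μ_A and μ_B are reference spectra (for example, a metal foil and an oxide) on the same energy grid. With the weights constrained to sum to one, the least-squares fraction has a closed form. The sketch below assumes already normalized and aligned spectra and uses toy arrays; dedicated packages such as Larch or Athena handle more components and energy shifts.

```python
def lcf_two_component(mu, ref_a, ref_b):
    """Fraction x of reference A minimizing Σ (mu - [x*ref_a + (1-x)*ref_b])^2.
    Setting the derivative to zero gives x = Σ (mu-b)(a-b) / Σ (a-b)^2;
    the result is clipped to the physical range [0, 1]."""
    num = sum((m - b) * (a - b) for m, a, b in zip(mu, ref_a, ref_b))
    den = sum((a - b) ** 2 for a, b in zip(ref_a, ref_b))
    x = min(1.0, max(0.0, num / den))
    residual = sum((m - (x * a + (1 - x) * b)) ** 2
                   for m, a, b in zip(mu, ref_a, ref_b))
    return x, residual

# Toy normalized spectra on a shared energy grid (illustrative numbers only)
metal = [0.0, 0.2, 0.8, 1.1, 1.0, 1.0]
oxide = [0.0, 0.1, 0.5, 1.6, 1.2, 1.0]
measured = [0.3 * m + 0.7 * o for m, o in zip(metal, oxide)]  # 30% metallic by construction
frac_metal, res = lcf_two_component(measured, metal, oxide)
print(f"metallic fraction = {frac_metal:.2f}, residual = {res:.1e}")
```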

Workflow for Integrated Data Pipeline

The synthesis of experimental and computational data into a predictive ANN model requires a structured pipeline.

Diagram Title: ANN Catalysis Data Fusion Pipeline

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Research Reagent Solutions and Materials

| Item | Function/Description | Key Application |
|---|---|---|
| Metal Precursor Salts (e.g., H2PtCl6·6H2O, Ni(NO3)2·6H2O) | Source of active metal for catalyst synthesis via impregnation or co-precipitation. | Preparation of supported heterogeneous catalysts. |
| High-Surface-Area Supports (e.g., γ-Al2O3, SiO2, TiO2, CeO2) | Provide stabilizing matrix for active phase, influence electronic properties via strong metal-support interaction (SMSI). | Catalyst synthesis. |
| Calibration Gas Mixtures (e.g., 1% CO/He, 1% H2/Ar) | Quantitative reference for analytical instruments (GC, MS). Essential for accurate concentration measurement. | Activity testing and chemisorption. |
| UHP Gases (Ultra High Purity H2, O2, He, Ar) | Purge gases, carrier gases, and reactive gases free of contaminants (e.g., H2O, O2, hydrocarbons). | Reactor conditioning, catalyst reduction, and inert atmospheres. |
| Reference Catalysts (e.g., EUROPT-1, NIST standards) | Well-characterized materials (e.g., 6.3% Pt/SiO2) for benchmarking reactor performance and analytical methods. | Validation of experimental protocols. |
| Computational Software Suites (e.g., VASP, Quantum ESPRESSO, ASE) | Ab initio simulation packages to perform DFT calculations for energy and property prediction. | Generation of theoretical datasets. |
| Automated Workflow Tools (e.g., FireWorks, AiiDA, CatKit) | Frameworks to automate high-throughput DFT calculation setup, execution, and data management. | Scaling theoretical data generation. |

ANN Architecture and Descriptor Space Integration

The predictive power of an ANN in catalysis hinges on the choice of input descriptors that bridge experiment and theory.

Diagram Title: ANN Model with Multi-Source Inputs

Data Tables: Quantitative Benchmarks

Table 3: Representative Catalytic Performance Data (Experimental vs. ANN-Predicted)

| Catalyst System | Reaction | Experimental TOF (s⁻¹) | ANN-Predicted TOF (s⁻¹) | Experimental Selectivity (%) | ANN-Predicted Selectivity (%) |
|---|---|---|---|---|---|
| Pt3Sn/SiO2 | Propane Dehydrogenation | 0.45 (at 600°C) | 0.41 | 98.2 | 97.5 |
| Co/MnO | Fischer-Tropsch Synthesis | 0.008 (at 220°C) | 0.0075 | 78 (C5+) | 75 (C5+) |
| PdAu/C | Vinyl Acetate Synthesis | 5.2 (at 150°C) | 4.9 | 92.1 | 90.8 |

Table 4: Key DFT-Calculated Descriptors for Transition Metal Surfaces

| Metal Surface | d-band Center (εd, eV) | O* Adsorption Energy (eV) | CO* Adsorption Energy (eV) | N2 Dissociation Barrier (eV) |
|---|---|---|---|---|
| Pt(111) | -2.48 | -3.42 | -1.45 | 1.15 |
| Ru(0001) | -1.95 | -4.10 | -1.65 | 0.85 |
| Au(111) | -4.50 | -0.80 | -0.20 | 2.50 |
| Ni(111) | -1.30 | -4.25 | -1.55 | 1.02 |

The integrated catalytic data landscape, where experimentally measured quantities are continuously aligned with computationally derived descriptors, forms the foundation for next-generation ANN-driven discovery. This virtuous cycle—where model predictions guide new high-priority experiments and calculations—dramatically accelerates the search for optimal catalysts, effectively closing the loop between hypothesis, simulation, and empirical validation.

This technical guide details the core artificial neural network (ANN) architectures driving modern computational catalysis research, positioned within a broader thesis integrating experimental and theoretical perspectives. The selection and design of these architectures are critical for translating atomic-scale simulations and spectral data into predictive models for catalyst discovery and optimization.

Feedforward Neural Networks (FNNs): The Regression Workhorse

FNNs, or multilayer perceptrons (MLPs), are the foundational architecture for mapping catalyst descriptors to target properties. They learn nonlinear mappings from fixed-length input feature vectors (e.g., adsorption energies, elemental properties, reaction barriers) to scalar output metrics (e.g., turnover frequency, selectivity, stability).

Experimental/Theoretical Context: FNNs are predominantly used in the post-processing of data generated from density functional theory (DFT) calculations or curated experimental datasets. They learn the complex, non-linear functions that underpin catalytic activity volcanoes or structure-property relationships.

Key Quantitative Data & Performance:

Table 1: Typical FNN Performance for Catalytic Property Prediction

| Target Property | Typical Input Features | Dataset Size | Reported Mean Absolute Error (MAE) | Reference Year |
|---|---|---|---|---|
| Adsorption Energy (eV) | Compositional, electronic (d-band center), structural | 1,000 - 50,000 DFT data points | 0.05 - 0.15 eV | 2023 |
| Reaction Energy Barrier (eV) | Transition state descriptors, reactant/product states | 500 - 10,000 DFT data points | 0.08 - 0.20 eV | 2024 |
| Catalytic Activity (TOF) | Microkinetic model parameters, descriptor sets | 100 - 1,000 multi-fidelity data points | 0.3 - 0.8 log(TOF) units | 2023 |

Detailed Protocol for FNN Training on DFT Data:

- Data Curation: Assemble a dataset of DFT-calculated adsorption energies for various adsorbates on different surface models.

- Feature Engineering: Calculate input features for each data point (e.g., elemental properties of the substrate, coordination numbers, generalized coordination numbers, etc.).

- Model Architecture: Implement a 3-5 hidden layer FNN with 128-512 neurons per layer. Use activation functions like ReLU or SiLU.

- Training: Split data (80/10/10 train/validation/test). Use Mean Squared Error (MSE) loss and the Adam optimizer with a learning rate scheduler (e.g., ReduceLROnPlateau).

- Validation: Monitor MAE on the validation set. Employ early stopping to prevent overfitting.

- Deployment: The trained model can rapidly screen thousands of candidate materials by predicting properties from descriptors alone.
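The plateau-based learning-rate scheduler in the training step and early stopping in the validation step both reduce to bookkeeping on the validation loss. PyTorch ships `torch.optim.lr_scheduler.ReduceLROnPlateau` for the former; the dependency-free class below is a hypothetical sketch of the latter, with `patience` and `min_delta` as assumed parameter names.

```python
class EarlyStopping:
    """Signal a stop when the validation loss has not improved by more than
    `min_delta` for `patience` consecutive checks. Tracks the best epoch so
    the corresponding weights can be restored afterwards."""

    def __init__(self, patience=20, min_delta=1e-4):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_checks = 0

    def step(self, epoch, val_loss):
        """Record one validation result; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_checks = 0   # improvement: reset counter (checkpoint weights here)
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience

# Usage with a made-up validation-loss curve that plateaus after epoch 4
stopper = EarlyStopping(patience=3, min_delta=0.001)
curve = [0.90, 0.50, 0.30, 0.20, 0.15, 0.1495, 0.1498, 0.1502, 0.1499]
for epoch, loss in enumerate(curve):
    if stopper.step(epoch, loss):
        print(f"stopped at epoch {epoch}; best epoch {stopper.best_epoch}")
        break
```

The `min_delta` threshold keeps tiny fluctuations (here 0.15 to 0.1495) from counting as improvements.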

Title: FNN Workflow in Catalysis Modeling

Convolutional Neural Networks (CNNs): Analyzing Spectral & Image Data

CNNs excel at processing grid-structured data with local correlations (1D spectra, 2D images), making them well suited to analyzing spectroscopic data (e.g., XRD, XPS, Raman, IR) and microscopy images (TEM, SEM) in catalysis.

Experimental/Theoretical Context: CNNs bridge the gap between raw experimental characterization data and catalyst performance. They can identify phases, quantify particle sizes, classify defect types, and even predict activity directly from spectra or images, linking ex situ and in situ characterization to theory.

Key Quantitative Data & Performance:

Table 2: CNN Applications in Catalytic Data Analysis

| Data Type | CNN Task | Typical Architecture | Reported Accuracy/Error |
|---|---|---|---|
| X-Ray Diffraction (XRD) | Phase Identification & Quantification | ResNet-18, 1D-CNN | >98% Phase ID accuracy |
| Transmission Electron Microscopy (TEM) | Nanoparticle Size/Shape Distribution | U-Net, Mask R-CNN | Pixel-wise IoU > 0.90 |
| Raman/IR Spectroscopy | Active Site Fingerprinting & Deconvolution | 1D-CNN with attention | Peak position MAE < 2 cm⁻¹ |

Detailed Protocol for CNN-based XRD Phase Analysis:

- Data Preparation: Collect a library of labeled XRD patterns (simulated from CIF files or measured). Apply augmentations (noise, baseline shift, peak broadening).

- Preprocessing: Normalize intensity and interpolate each pattern onto a fixed 2θ grid (e.g., 10-90°) so that all inputs have the same length.

- Model Architecture: Use a 1D-CNN with sequential convolutional layers (filter sizes 3-5), pooling layers, and fully connected heads for classification/regression.

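- Training: Use categorical cross-entropy loss when each pattern carries a single phase label; for patterns of phase mixtures, treat identification as multi-label classification with per-phase sigmoid outputs and binary cross-entropy. Train with the Adam optimizer.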

- Interpretation: Apply Gradient-weighted Class Activation Mapping (Grad-CAM) to highlight which regions of the XRD pattern most influenced the prediction.
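The preprocessing step and the basic operation of a 1D convolutional layer can be illustrated without a deep-learning framework. The sketch below linearly interpolates a synthetic pattern onto a fixed 2θ grid, scales it to a maximum of 1, and applies one hand-set convolution filter with ReLU followed by max pooling. In a trained 1D-CNN the filter weights are learned; the grid step, the kernel, and the synthetic peak are assumptions for illustration.

```python
import bisect
import math

def interpolate_to_grid(two_theta, intensity, start=10.0, stop=90.0, step=0.02):
    """Linearly interpolate a pattern (sorted 2θ values) onto a fixed 2θ grid.
    Grid points outside the measured range are set to 0."""
    n = int(round((stop - start) / step)) + 1
    grid = [start + i * step for i in range(n)]
    out = []
    for g in grid:
        if g < two_theta[0] or g > two_theta[-1]:
            out.append(0.0)
            continue
        k = bisect.bisect_left(two_theta, g)
        if two_theta[k] == g:          # grid point coincides with a measured point
            out.append(intensity[k])
            continue
        x0, x1 = two_theta[k - 1], two_theta[k]
        y0, y1 = intensity[k - 1], intensity[k]
        out.append(y0 + (y1 - y0) * (g - x0) / (x1 - x0))
    return grid, out

def normalize_max(values):
    """Scale so the most intense point equals 1."""
    peak = max(values)
    return [v / peak for v in values]

def conv1d_relu(signal, kernel, bias=0.0):
    """'Valid' 1D convolution (cross-correlation, as in deep-learning libraries) + ReLU."""
    k = len(kernel)
    return [max(0.0, sum(signal[i + j] * kernel[j] for j in range(k)) + bias)
            for i in range(len(signal) - k + 1)]

def max_pool1d(signal, size=2):
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]

# Synthetic pattern: one Gaussian peak at 40° on a flat background, measured 5-95° in 0.05° steps
two_theta = [5.0 + 0.05 * i for i in range(1801)]
intensity = [20.0 + 1000.0 * math.exp(-((t - 40.0) / 0.3) ** 2) for t in two_theta]

grid, pattern = interpolate_to_grid(two_theta, intensity)
pattern = normalize_max(pattern)
# Kernel [-1, 2, -1] responds to locally concave (peak-like) regions of the pattern
features = max_pool1d(conv1d_relu(pattern, kernel=[-1.0, 2.0, -1.0]), size=2)
```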

Title: CNN for Catalyst Spectral Analysis

Graph Neural Networks (GNNs): The Native Atomic-Scale Model

GNNs operate directly on graph representations in which atoms are nodes and bonds (or neighboring atom pairs) are edges. This makes them a natural architecture for modeling catalysts at the atomic scale, because they capture local chemical environments intrinsically.

Experimental/Theoretical Context: GNNs are a central tool for theory-guided catalyst discovery. They learn from atomic structures (e.g., DFT-relaxed geometries) and predict energies, forces, and electronic properties. This enables high-throughput virtual screening and molecular dynamics at near-DFT accuracy (via machine-learned interatomic potentials), connecting atomic-scale theory to macroscopic performance.

Key Quantitative Data & Performance:

Table 3: Performance of State-of-the-Art GNNs for Catalysis

| GNN Model | Primary Task | Key Innovation | Reported Error (benchmark-dependent, e.g., OC20) |
|---|---|---|---|
| SchNet | Energy/Force Prediction | Continuous-filter convolutional layers | Energy MAE ~ 0.5 eV |
| DimeNet++ | Energy/Force Prediction | Directional message passing | Energy MAE ~ 0.3 eV |
| CGCNN | Crystal Property Prediction | Crystal graph representation | Formation Energy MAE ~ 0.05 eV/atom |
| GemNet | Energy/Force Prediction | Explicit modeling of angles/torsions | Force MAE ~ 0.05 eV/Å |

Detailed Protocol for GNN-Based Catalyst Screening:

- Graph Construction: Represent a catalyst surface or nanoparticle as a graph. Nodes: atoms with features (atomic number, valence). Edges: connections between atoms within a cutoff radius (e.g., 5 Å), including periodic images for slab models.

- Message Passing: Implement a GNN layer (e.g., from DimeNet++). For each node, aggregate messages from neighboring nodes and edges, updating node states.

- Readout/Pooling: After several message-passing layers, aggregate the final node states into a global graph representation.

- Prediction: Pass the graph representation through an output network to predict the target (e.g., adsorption energy, reaction energy).

- Active Learning: Use model uncertainty to select new DFT calculations, iteratively improving the model in underrepresented regions of chemical space.
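The graph-construction, message-passing, and readout steps can be sketched in plain Python. The example below builds edges from a distance cutoff, runs sum-aggregation message passing with a fixed update rule standing in for learned neural networks, and sum-pools node states into one graph-level value. It deliberately ignores periodic boundary conditions and the angular information that models such as DimeNet++ add; the coordinates, node features, and update weights are all illustrative assumptions.

```python
import math

def build_edges(positions, cutoff=5.0):
    """Directed edges (i, j, distance) for atom pairs within `cutoff` Å.
    Non-periodic: a real slab model also needs periodic images."""
    edges = []
    for i, pi in enumerate(positions):
        for j, pj in enumerate(positions):
            if i != j:
                d = math.dist(pi, pj)
                if d <= cutoff:
                    edges.append((i, j, d))
    return edges

def message_passing_step(h, edges, w_self=0.5, w_msg=0.5):
    """One round: each node sums distance-weighted messages from its neighbors,
    then combines them with its own state. In a trained GNN the message and
    update functions are neural networks; fixed weights are used here."""
    agg = [0.0] * len(h)
    for i, j, d in edges:
        agg[j] += h[i] * math.exp(-d)      # message from i to j decays with distance
    return [math.tanh(w_self * h_j + w_msg * a_j) for h_j, a_j in zip(h, agg)]

def readout(h):
    """Sum pooling: a permutation-invariant graph-level representation."""
    return sum(h)

# Toy cluster: three metal atoms and one adsorbate atom above them (Å)
positions = [(0.0, 0.0, 0.0), (2.8, 0.0, 0.0), (1.4, 2.42, 0.0), (1.4, 0.81, 1.9)]
h = [0.78, 0.78, 0.78, 0.8]   # initial scalar node features (e.g., a scaled atomic property)
edges = build_edges(positions, cutoff=3.0)
for _ in range(3):            # three message-passing layers
    h = message_passing_step(h, edges)
graph_value = readout(h)      # would feed an output network predicting, e.g., E_ads
```

Because both aggregation (sum over neighbors) and readout (sum over nodes) ignore atom ordering, relabeling the atoms leaves `graph_value` unchanged, which is the property that makes this family of models suitable for atomic structures.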

Title: GNN Message Passing for a Catalyst Surface

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Computational Tools & Datasets for ANN in Catalysis

| Tool/Resource | Type | Primary Function in ANN for Catalysis |
|---|---|---|
| Atomic Simulation Environment (ASE) | Software Library | Building, manipulating, and running calculations on atomistic systems; central for dataset generation. |
| Open Catalyst Project (OC20/OC22) Dataset | Benchmark Dataset | Massive dataset of relaxations and energies for surfaces & catalysts; standard for training/testing GNNs. |
| PyTorch Geometric (PyG) / Deep Graph Library (DGL) | Software Library | Specialized frameworks for easy implementation and training of GNNs on graph-structured data. |
| CatBERTa or similar | Pre-trained Model | Transformer-based language models applied to catalysis; CatBERTa predicts adsorption energies from text descriptions of adsorbate-catalyst systems, and related models support knowledge extraction from the literature. |
| MatRSC (Materials Research Support Center) Database | Experimental Dataset | Curated repository of experimental catalytic performance data for training multi-fidelity models. |
| LAMMPS with ML-potential plugins | Simulation Engine | Performing large-scale molecular dynamics simulations using GNN-learned interatomic potentials (IPs). |

Within the broader thesis on Artificial Neural Networks (ANNs) for catalysis integrating experiment and theory, feature engineering stands as the critical bridge. Translating raw, multi-faceted data from experiments, microscopy, and electronic structure calculations into robust, predictive descriptors is foundational for training accurate and generalizable ANN models. This guide details the extraction, validation, and integration of these descriptors.

Descriptor Categories & Quantitative Data

Table 1: Core Descriptor Categories for Catalytic ANNs

| Source | Descriptor Category | Example Descriptors | Typical Data Type | ANN Input Scaling |
|---|---|---|---|---|
| Bulk Experiments | Activity/Selectivity | Turnover Frequency (TOF), Yield, Selectivity (%) | Continuous Float | Log or Standard Scaler |
| | Stability | Decay constant (k_deact), % activity loss after N cycles | Continuous Float | Standard Scaler |
| | Kinetic Parameters | Activation Energy (Ea), Reaction Orders | Continuous Float | Min-Max Scaler |
| Microscopy | Morphological | Particle size (nm), size distribution std. dev., facet ratio | Continuous Float | Min-Max Scaler |
| | Structural | Coordination number, defect density (counts/nm²) | Continuous Float / Integer | Standard Scaler |
| | Compositional (from EDS) | Surface atomic % (A/B), segregation index | Continuous Float | Min-Max Scaler |
| Electronic Structure | Energetic | Adsorption energies (ΔEads, eV), d-band center (εd, eV) | Continuous Float | Standard Scaler |
| | Electronic | Bader charges (in units of e), density of states at E_F | Continuous Float | Standard Scaler |
| | Geometric | Bond lengths (Å), nearest-neighbor distances (Å) | Continuous Float | Min-Max Scaler |
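The scaling column can be made concrete. TOF values often span several orders of magnitude, so a log transform before standardization keeps a few very active catalysts from dominating the loss, while min-max scaling maps a bounded descriptor such as particle size onto [0, 1]. The sketch below uses hypothetical values; as with any scaler, the statistics should come from the training set only, which is why both functions accept precomputed statistics.

```python
import math
import statistics

def standard_scale(values, mean=None, std=None):
    """z = (v - mean) / std, using supplied (training-set) statistics if given."""
    mean = statistics.fmean(values) if mean is None else mean
    std = statistics.pstdev(values) if std is None else std
    return [(v - mean) / std for v in values]

def min_max_scale(values, lo=None, hi=None):
    """Map values onto [0, 1] using supplied (training-set) bounds if given."""
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    return [(v - lo) / (hi - lo) for v in values]

tof = [2.5e-4, 3.1e-3, 4.0e-2, 0.9, 12.0]           # s^-1, hypothetical
log_tof_scaled = standard_scale([math.log10(t) for t in tof])
particle_size_nm = [1.8, 2.5, 3.2, 4.5, 7.9]         # hypothetical
size_scaled = min_max_scale(particle_size_nm)
```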

Table 2: Benchmark Descriptor Values for Common Catalytic Systems

| Catalytic System | Experimental TOF (s⁻¹) | Mean Particle Size (nm) | CO Adsorption Energy (eV) | d-band Center (eV, rel. to E_F) | Source |
|---|---|---|---|---|---|
| Pt(111) / ORR | 2.5 x 10⁻² | N/A (single crystal) | -1.25 | -2.3 | [J. Electrochem. Soc.] |
| Au/TiO₂ / CO Oxidation | 5.1 x 10⁻³ | 3.2 ± 0.7 | -0.45 | -3.8 | [Nature Catalysis] |
| Co/Pt Core-Shell / HER | 0.15 (H₂ s⁻¹) | 4.5 ± 1.1 | N/A | -1.9 (Pt shell) | [Science] |
| Single-Atom Fe-N-C / ORR | 4.3 e⁻ site⁻¹ s⁻¹ | N/A (atomically dispersed) | -0.85 (O₂) | -1.2 (Fe site) | [Energy & Env. Science] |

Experimental Protocols for Descriptor Generation

Protocol: Measuring Turnover Frequency (TOF) for Heterogeneous Catalysts

Objective: Quantify intrinsic activity per active site.

- Catalyst Activation: Reduce catalyst (e.g., 100 mg) in H₂ flow (50 sccm) at specified temperature (e.g., 400°C) for 2 hours.

- Kinetic Measurement: Conduct the reaction in a fixed-bed plug-flow reactor under differential conversion (<15%). Precisely control partial pressures, flow rates (via mass flow controllers), and temperature.

- Product Analysis: Use online GC or MS for quantification every 10-15 minutes.

- Active Site Counting:

  - For metals: Perform H₂ or CO chemisorption (static or pulse) post-reaction. Assume a stoichiometry (e.g., H:Pt = 1:1, CO:Pt = 1:1).

  - For acids: Use NH₃-TPD or iso-propylamine TPD.

- Calculation: TOF = (moles of product formed per second) / (moles of active sites). Report with reactant partial pressures and temperature.
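The calculation can be sketched for a supported-metal catalyst whose site count comes from H₂ chemisorption with the H:Pt = 1:1 stoichiometry stated above, so that each H₂ molecule adsorbed corresponds to two surface sites. The uptake, metal loading, and rate are hypothetical numbers, and the helper names are illustrative.

```python
PT_MOLAR_MASS = 195.08  # g/mol

def surface_sites_from_h2(h2_uptake_umol_per_g, h_per_site=1.0):
    """Moles of surface metal sites per gram from H2 uptake (µmol H2 per g).
    Each H2 dissociates into 2 H atoms: sites = 2 * uptake / (H per site)."""
    return 2.0 * h2_uptake_umol_per_g * 1e-6 / h_per_site

def dispersion(sites_mol_per_g, metal_wt_frac, molar_mass=PT_MOLAR_MASS):
    """Fraction of metal atoms exposed at the surface."""
    total_metal_mol_per_g = metal_wt_frac / molar_mass
    return sites_mol_per_g / total_metal_mol_per_g

def turnover_frequency(rate_mol_per_g_s, sites_mol_per_g):
    """TOF (s^-1) = moles of product per second / moles of active sites."""
    return rate_mol_per_g_s / sites_mol_per_g

# Hypothetical 1 wt% Pt catalyst: 20 µmol H2/g uptake, rate 4.0e-6 mol product/(g s)
sites = surface_sites_from_h2(20.0)          # 4.0e-5 mol sites per gram
D = dispersion(sites, metal_wt_frac=0.01)    # ≈ 0.78
tof = turnover_frequency(4.0e-6, sites)      # 0.1 s^-1
```

Reporting the dispersion alongside the TOF is a useful sanity check: a value above 1 usually indicates spillover or an incorrect stoichiometry assumption.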

Protocol: Quantitative STEM-EDS Analysis for Bimetallic Nanoparticles

Objective: Extract size, composition, and distribution descriptors.

- Sample Prep: Deposit catalyst powder on holey carbon Cu grid. Use low-power plasma cleaning for 30s to reduce contamination.

- HAADF-STEM Imaging: Acquire images at 200-300kX magnification. Use dose-controlled mode to prevent beam damage.

- Particle Analysis: Use ImageJ/Fiji with "Analyze Particles" to extract projected area (A) for each particle. Calculate equivalent circular diameter D = 2√(A/π). Export size list.

- EDS Mapping & Quantification:

  - Acquire a spectrum image cube (e.g., 128x128 pixels, 50 ms/pixel).

  - Use the Cliff-Lorimer method for quantification: C_A/C_B = k_AB · (I_A/I_B), where C_A and C_B are the concentrations of elements A and B, I_A and I_B their characteristic X-ray intensities, and k_AB the experimentally determined sensitivity factor.

  - Extract the average composition per particle and across the population.

- Descriptor Output: Mean size, standard deviation, skewness of size distribution, average atomic % of component A, compositional histogram.
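The particle-analysis and quantification steps reduce to a few formulas: D = 2√(A/π) for each projected area, the moments of the resulting size distribution, and the Cliff-Lorimer ratio combined with C_A + C_B = 1 for a binary particle. The areas, intensities, and k-factor below are hypothetical; whether the result is a weight or an atomic fraction depends on how k_AB was calibrated.

```python
import math
import statistics

def equivalent_diameter(area_nm2):
    """Equivalent circular diameter D = 2 * sqrt(A / pi)."""
    return 2.0 * math.sqrt(area_nm2 / math.pi)

def size_descriptors(diameters):
    """Mean, sample standard deviation, and (population) skewness of sizes."""
    mean = statistics.fmean(diameters)
    std = statistics.stdev(diameters)
    pstd = statistics.pstdev(diameters)
    skew = sum((d - mean) ** 3 for d in diameters) / (len(diameters) * pstd ** 3)
    return mean, std, skew

def cliff_lorimer_binary(i_a, i_b, k_ab):
    """Fraction of A from C_A/C_B = k_AB * (I_A/I_B) and C_A + C_B = 1."""
    ratio = k_ab * i_a / i_b
    return ratio / (1.0 + ratio)

areas = [7.1, 9.6, 12.4, 15.9, 8.3, 11.0, 25.5]   # nm^2, hypothetical
diameters = [equivalent_diameter(a) for a in areas]
mean_d, std_d, skew_d = size_descriptors(diameters)
frac_a = cliff_lorimer_binary(i_a=1200.0, i_b=900.0, k_ab=1.5)  # ratio 2.0 -> 2/3
```

A positive skewness, as in this example with one large particle, flags a tail of large particles that a mean and standard deviation alone would hide.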

Protocol: DFT Calculation of Adsorption Energy & d-band Descriptors

Objective: Compute standardized electronic structure descriptors.

- Model Construction: Build slab model (≥4 layers) with ≥15 Å vacuum. Use optimized bulk lattice constants.

- Geometry Optimization: Employ plane-wave DFT code (VASP, Quantum ESPRESSO). Use PBE functional, PAW pseudopotentials, cutoff energy ≥400 eV. Converge forces on atoms to <0.03 eV/Å.

- Adsorption Energy: Place adsorbate in multiple high-symmetry sites. Optimize. Calculate: ΔE_ads = E_(slab+ads) - E_slab - E_ads(gas).

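- d-band Center: From the projected density of states (PDOS) of the surface-metal d orbitals of the optimized clean surface, compute the first moment: ε_d = ∫_{-∞}^{E_F} E · ρ_d(E) dE / ∫_{-∞}^{E_F} ρ_d(E) dE. Some studies integrate over the entire d band, including unoccupied states, instead; state which convention is used, since the two give different values.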

- Descriptor Output: ΔE_ads for key intermediates (C, O, CO, OH), ε_d, and optionally, Bader charges for surface atoms.
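Both descriptors are simple post-processing once the DFT outputs exist. The sketch computes ΔE_ads from three total energies and the d-band center as the first moment of a d-projected DOS on a uniform energy grid, integrated up to E_F with the trapezoidal rule. The Gaussian PDOS and the total energies are synthetic placeholders, not output parsed from VASP or Quantum ESPRESSO.

```python
import math

def adsorption_energy(e_slab_ads, e_slab, e_adsorbate_gas):
    """ΔE_ads = E(slab+ads) - E(slab) - E(ads, gas); all in eV."""
    return e_slab_ads - e_slab - e_adsorbate_gas

def trapezoid(xs, ys):
    """Trapezoidal-rule integral of ys over xs."""
    return sum(0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i]) for i in range(len(xs) - 1))

def d_band_center(energies, pdos_d, e_fermi=0.0):
    """First moment of the d-PDOS up to E_F: ∫ E ρ_d dE / ∫ ρ_d dE."""
    pts = [(e, r) for e, r in zip(energies, pdos_d) if e <= e_fermi]
    es = [e for e, _ in pts]
    rs = [r for _, r in pts]
    return trapezoid(es, [e * r for e, r in zip(es, rs)]) / trapezoid(es, rs)

# Synthetic d band: Gaussian centered at -2.5 eV (σ = 1 eV) on a 0.01 eV grid, E_F = 0
energies = [-10.0 + 0.01 * i for i in range(1501)]   # -10 to +5 eV
pdos = [math.exp(-((e + 2.5) ** 2) / 2.0) for e in energies]
eps_d = d_band_center(energies, pdos)   # ≈ -2.52: cutting the band at E_F shifts the moment down
dE = adsorption_energy(-310.42, -305.10, -4.93)   # hypothetical totals -> -0.39 eV
```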

Visualization of Workflows & Relationships

Title: ANN-Driven Catalyst Design Feature Engineering Pipeline

Title: Multi-Source Descriptor Integration Path

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Reagents for Descriptor Generation

| Category | Item / Reagent | Function & Specification | Key Consideration |
|---|---|---|---|
| Catalyst Synthesis | Metal Precursors (e.g., H₂PtCl₆, HAuCl₄, Ni(NO₃)₂) | Source of active metal component. High purity (>99.99%) essential. | Anion type affects decomposition and final dispersion. |
| | High-Surface-Area Supports (e.g., γ-Al₂O₃, Carbon Black, TiO₂) | Provide stabilizing surface and can participate in catalysis. | Surface chemistry (hydroxyl groups, defects) critical. |
| | Reducing Agents (e.g., NaBH₄, H₂ gas, ethylene glycol) | Reduce metal precursors to zero-valent state during synthesis. | Reduction kinetics control nucleation and growth. |
| Catalytic Testing | Calibration Gas Mixtures (e.g., 5% H₂/Ar, 1000 ppm CO/He) | Quantification of active sites via chemisorption; reactant feeds. | Certified analytical standards required for accuracy. |
| | Reference Catalysts (e.g., EUROPT-1, JM standards) | Benchmarked materials for cross-laboratory validation of activity. | Ensures experimental protocol reliability. |
| | High-Temperature Sealant (e.g., Graphite ferrules, ceramic adhesives) | Ensure leak-free reactor operation up to 800°C. | Prevents bypass and ensures safety. |
| Microscopy | Holey Carbon TEM Grids (e.g., Quantifoil, Lacey Carbon) | Support film for catalyst powder deposition. | Grid type affects particle distribution and background. |
| | Plasma Cleaner (e.g., Ar/O₂ plasma) | Removes hydrocarbon contamination from grids prior to imaging. | Reduces background in EDS and improves image contrast. |
| | EDS Sensitivity Factor Standards (e.g., pure element standards) | Required for quantitative compositional analysis via Cliff-Lorimer. | Must be measured on the same instrument. |
| Electronic Structure | Pseudopotential Libraries (e.g., VASP PAW, GBRV) | Replace core electrons in DFT, drastically reducing compute cost. | Choice (ultrasoft, PAW) affects accuracy for adsorption. |
| | Computational Catalysis Databases (e.g., CatApp, NOMAD) | Provide reference energies and structures for validation. | Enables benchmarking of calculation setup. |

Within the domain of catalysis research, the integration of experimental observations with theoretical ab initio calculations presents a powerful paradigm for accelerating material discovery and mechanistic understanding. Artificial Neural Networks (ANNs) have emerged as the pivotal technology enabling this symbiosis. This whitepaper details the technical framework by which ANNs ingest, harmonize, and learn from heterogeneous multi-source data, thereby constructing robust predictive models that bridge the gap between catalytic theory and experiment.

Architectural Framework for Multi-Source Data Integration

ANNs designed for catalytic informatics must process disparate data modalities: continuous theoretical parameters (e.g., density functional theory (DFT)-computed adsorption energies, activation barriers), discrete experimental characterization data (e.g., X-ray diffraction phases, spectroscopy peaks), and continuous experimental performance metrics (e.g., turnover frequency (TOF), selectivity). A hybrid or fusion architecture is typically employed.

Diagram 1: ANN Data Fusion Workflow for Catalysis

Data Harmonization & Representation Learning

The primary technical challenge is that the data sources differ in scale, dimensionality, and noise profile.

Table 1: Common Data Types and Preprocessing for Catalysis ANNs

| Data Type | Example in Catalysis | Typical Preprocessing | ANN Input Representation |

|---|---|---|---|

| Theoretical Scalars | DFT adsorption energy (eV) | Z-score normalization | Dense vector node |

| Theoretical Vectors | Projected density of states (pDOS) | Interpolation onto a common energy grid; optional PCA dimensionality reduction | 1D convolutional layer input (dense layer input if reduced to PCA components) |

| Experimental Spectra | In-situ FTIR, XPS peaks | Baseline correction, alignment, binning | 1D or 2D (for maps) convolutional layer input |

| Categorical Experimental | Crystal phase (e.g., FCC, BCC) | One-hot encoding | Embedding or dense layer |

| Operational Parameters | Temperature, Pressure | Min-max scaling to [0,1] | Dense vector node |
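The preprocessing column of Table 1 can be illustrated with a short NumPy sketch. It assumes a toy dataset with one DFT scalar, one operating parameter, and one categorical phase label, with all values hypothetical. In practice, compute the mean, standard deviation, and min/max on the training split only, then reuse them for the validation and test data to avoid leakage.

```python
import numpy as np

def zscore(x, mean=None, std=None):
    """Z-score normalization; pass training-set mean/std when transforming new data."""
    x = np.asarray(x, dtype=float)
    mean = x.mean() if mean is None else mean
    std = x.std() if std is None else std
    return (x - mean) / std

def minmax(x, lo=None, hi=None):
    """Min-max scaling to [0, 1]; pass training-set lo/hi when transforming new data."""
    x = np.asarray(x, dtype=float)
    lo = x.min() if lo is None else lo
    hi = x.max() if hi is None else hi
    return (x - lo) / (hi - lo)

def one_hot(labels, categories):
    """One-hot encode labels against a fixed, ordered list of categories."""
    index = {c: i for i, c in enumerate(categories)}
    out = np.zeros((len(labels), len(categories)))
    for row, label in enumerate(labels):
        out[row, index[label]] = 1.0
    return out

# Hypothetical toy records: DFT adsorption energy (eV), temperature (K), crystal phase
e_ads = [-0.45, -0.80, -1.10, -0.62]
temp_K = [500.0, 550.0, 600.0, 650.0]
phase = ["FCC", "BCC", "FCC", "HCP"]

X = np.column_stack([
    zscore(e_ads),                            # theoretical scalar
    minmax(temp_K),                           # operational parameter
    one_hot(phase, ["FCC", "BCC", "HCP"]),    # categorical experimental descriptor
])
print(X.shape)  # prints (4, 5)
```

The optional `mean`/`std` and `lo`/`hi` arguments let you apply the training-set statistics unchanged to new catalysts.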

Experimental Protocols for Data Generation

High-quality, consistent data generation is critical for training robust multi-source ANNs.

Protocol 4.1: High-Throughput Experimental Catalytic Testing for ANN Training

- Objective: Generate consistent activity/selectivity data under varied conditions.

- Materials: Parallel packed-bed reactor system, mass flow controllers, online GC/MS.

- Procedure:

- Catalyst library (e.g., doped metal oxides) is loaded into identical reactor channels.

- Reactant gases are precisely mixed and split across channels.

- Temperature is ramped per a predefined protocol (e.g., 50-400°C, 5°C/min).

- Effluent from each channel is analyzed periodically via GC/MS.

- TOF and selectivity are calculated for each catalyst at each condition.

- Data for ANN: Tabular matrix of [Catalyst ID, Dopant, Temp, Pressure, TOF_Product_A, Selectivity_B].

Protocol 4.2: Coupled Operando Spectroscopy and Activity Measurement

- Objective: Obtain simultaneous mechanistic (spectral) and performance data.

- Materials: Operando XRD or DRIFTS cell, synchrotron beamline (if applicable), mass spectrometer.

- Procedure:

- Catalyst is placed in the operando cell under reactive gas flow.

- While collecting XRD patterns or IR spectra, the cell effluent is analyzed by MS.

- Spectral features (e.g., peak position, intensity) are extracted as time-series.

- MS data provides concurrent activity metrics.

- Data for ANN: Paired, time-aligned datasets: spectral tensors and scalar activity values.
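The time alignment in Protocol 4.2 can be sketched as linear interpolation of the MS signal onto the spectral timestamps. This is a minimal example that rests on three assumptions:

- Both instruments share a clock.
- `transport_lag` (a hypothetical parameter) is a known, constant gas-transport delay between the cell and the MS.
- The spectra and the MS signal are synthetic.

```python
import numpy as np

def align_activity_to_spectra(spec_times, ms_times, ms_signal, transport_lag=0.0):
    """Interpolate an MS activity signal onto spectral acquisition times.

    transport_lag (s): delay between operando cell and MS inlet; the MS value
    recorded at time t is attributed to the cell state at t - transport_lag.
    Spectra outside the lag-corrected MS window are dropped, not extrapolated.
    """
    spec_times = np.asarray(spec_times, dtype=float)
    cell_times = np.asarray(ms_times, dtype=float) - transport_lag
    inside = (spec_times >= cell_times[0]) & (spec_times <= cell_times[-1])
    aligned = np.interp(spec_times[inside], cell_times, ms_signal)
    return inside, aligned

# Hypothetical example: one IR spectrum every 30 s, MS sampled every 10 s
rng = np.random.default_rng(0)
spec_t = np.arange(0, 181, 30)              # 0, 30, ..., 180 s
spectra = rng.random((spec_t.size, 400))    # 400-point spectra
ms_t = np.arange(5, 176, 10)                # 5, 15, ..., 175 s
ms_product = 0.01 * ms_t                    # toy product signal

mask, activity = align_activity_to_spectra(spec_t, ms_t, ms_product)
X_spec, y_act = spectra[mask], activity     # paired (spectrum, activity) samples
print(X_spec.shape, y_act.shape)            # prints (5, 400) (5,)
```

Dropping spectra outside the MS window avoids pairing spectra with extrapolated, unmeasured activity values.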

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for ANN-Driven Catalysis Research

| Item / Reagent | Function in Research | Key Consideration for ANN Integration |

|---|---|---|

| Standardized Catalyst Libraries | Provides consistent, comparable data points across studies. Enables high-throughput screening. | Essential for generating large, uniform training datasets. Metadata (synthesis conditions) must be digitally recorded. |

| Benchmarked DFT Code (e.g., VASP, Quantum ESPRESSO) | Generates theoretical descriptors (energies, electronic structure) for candidate materials. | Calculation parameters must be rigorously standardized to ensure descriptor consistency for the model. |

| Operando Spectroscopy Cells | Allows collection of real-time structural/spectral data under reaction conditions. | Output must be in a digital, parseable format (e.g., .spe, .xrdml) for automated feature extraction. |

| Automated Reactor Systems (e.g., HTE rigs) | Systematically collects performance data (conversion, selectivity) across wide parameter spaces. | Integration with Laboratory Information Management Systems (LIMS) is crucial for direct data pipeline to ANN training sets. |

| Curated Public Databases (e.g., NOMAD, Materials Project, CatHub) | Provides pre-computed theoretical data and experimental references for initial model training and benchmarking. | Data provenance and quality flags are critical for assessing usability in training. |

ANN Training & Validation Paradigm

Training requires a loss function that penalizes deviations from both theoretical and experimental targets, often employing a multi-task learning framework.
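Here is a minimal sketch of such a multi-task loss. It assumes one output head per target, with NaN entries wherever a sample lacks a DFT label or an experimental measurement. The function name and the equal task weights are illustrative assumptions, not a prescribed scheme, and the numbers are hypothetical.

```python
import numpy as np

def masked_multitask_mse(pred, target, task_weights):
    """Weighted sum of per-task MSEs that skips missing (NaN) targets.

    pred, target: (n_samples, n_tasks); target is NaN where a sample has no
    label for that task (e.g., no DFT value or no measured TOF).
    task_weights: (n_tasks,) weights balancing theoretical vs. experimental terms.
    """
    mask = ~np.isnan(target)
    err = np.where(mask, pred - np.nan_to_num(target), 0.0)   # zero out missing entries
    n_obs = mask.sum(axis=0)
    per_task = (err ** 2).sum(axis=0) / np.maximum(n_obs, 1)  # mean over observed only
    return float(np.dot(task_weights, per_task)), per_task

# Columns: [DFT adsorption energy (eV), experimental log10 TOF]; values hypothetical
pred = np.array([[-0.5, 0.3], [-1.0, 0.9], [-0.7, 1.2], [-0.2, 2.0]])
target = np.array([[-0.4, np.nan], [-1.2, np.nan], [np.nan, 1.0], [np.nan, 2.5]])
loss, per_task = masked_multitask_mse(pred, target, task_weights=np.array([1.0, 1.0]))
print(round(loss, 3))  # prints 0.17
```

Because every sample still passes through the shared layers, DFT-only and experiment-only records both shape the common representation. Each sample contributes only to the loss terms it has labels for.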

Diagram 2: Multi-Task ANN Training Logic

Table 3: Example Quantitative Benchmark of a Fusion ANN for Catalyst Screening

| Model Architecture | Training Data Sources | Test Set Performance (MAE) | Key Advantage |

|---|---|---|---|

| Theory-Only ANN | DFT descriptors (N=5000) | Activity Prediction: 0.85 eV | Fast screening of hypothetical materials. |

| Experiment-Only ANN | High-throughput experiment (N=800) | Activity Prediction: 0.45 log(TOF) | Grounded in real-world conditions. |

| Fusion ANN (Early Fusion) | Combined DFT & Experimental (N=5800*) | Activity: 0.38 log(TOF); Descriptor Prediction: 0.12 eV | Predicts both property and performance; higher accuracy and generalizability. |

Note: N denotes the number of data points; *5,800 = 5,000 DFT + 800 experimental points. The fusion model uses a shared representation learned from both domains.

The symbiosis of theory and experiment, mediated by ANNs, represents a transformative methodology in catalysis research. By implementing the technical frameworks for data harmonization, multi-task learning, and rigorous experimental protocols outlined herein, researchers can construct predictive models that are greater than the sum of their parts. These models not only accelerate the discovery cycle but also provide deeper mechanistic insights by revealing the underlying physical principles that connect computational descriptors to observed catalytic behavior.

Building and Deploying ANN Models: A Step-by-Step Guide for Catalytic Discovery

This guide details the integrated workflow for applying Artificial Neural Networks (ANNs) in catalysis research, bridging experimental and theoretical data streams to accelerate catalyst discovery and optimization.

Data Curation & Aggregation

The foundation of a robust ANN model is a high-quality, multi-source dataset. Curation involves systematic collection from disparate sources.

Table 1: Primary Data Sources in Catalysis Research

| Source Type | Example Data | Typical Volume | Key Challenges |

|---|---|---|---|

| Experimental | Turnover Frequency (TOF), Selectivity %, Yield, Activation Energy (Ea) | 10² - 10⁴ data points | Noise, inconsistent conditions, sparse high-dimensional data. |

| Computational (DFT) | Adsorption energies, Reaction barriers, Transition state geometries | 10³ - 10⁵ data points | Systematic error, scaling to realistic conditions. |

| Published Literature | Text, tables, figures from journals/patents | Unstructured corpus | Information extraction, standardization. |

| High-Throughput Experimentation | Spectral data, conversion from parallel reactors | 10⁴ - 10⁶ data points | Data alignment, feature engineering from raw signals. |

Experimental Protocol: Data Generation via Temperature-Programmed Reaction (TPRx)

Objective: To generate consistent experimental kinetic data for model training.

- Catalyst Preparation: Load 50 mg of catalyst (e.g., supported metal nanoparticles) into a U-shaped quartz microreactor.

- Pretreatment: Activate catalyst in situ under 50 mL/min H₂ flow at 500°C for 1 hour.

- Reaction Phase: Cool to desired temperature. Introduce reactant feed (e.g., CO:H₂:He = 5:10:85) at a total flow of 100 mL/min.

- Analysis: Effluent gas is monitored by online Mass Spectrometry (MS). Calibrate MS signals for each species (e.g., CO, CH₄, C₂H₄) using standard gas mixtures.

- Data Recording: Record time-dependent partial pressures. Calculate conversion, selectivity, and TOF (moles product per mole active site per second) after steady-state is achieved (typically 30-60 min).
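The final calculation step can be sketched as below. The sketch makes three assumptions:

- CO is the limiting reactant.
- Selectivity is carbon-based. This is one common convention; the protocol does not specify the basis.
- The active-site count comes from chemisorption.

All flow values are hypothetical.

```python
def kinetic_metrics(f_co_in, f_co_out, product_flows, carbon_numbers, n_sites, product):
    """Steady-state CO conversion, carbon-based selectivity, and TOF.

    f_co_in, f_co_out: CO molar flows at reactor inlet/outlet (mol/s)
    product_flows:     {species: outlet molar flow (mol/s)} from calibrated MS
    carbon_numbers:    {species: carbon atoms per molecule}
    n_sites:           moles of active sites (e.g., from H2 chemisorption)
    product:           species whose TOF is reported
    """
    conversion = (f_co_in - f_co_out) / f_co_in
    carbon_flows = {s: carbon_numbers[s] * f for s, f in product_flows.items()}
    total_carbon = sum(carbon_flows.values())
    selectivity = {s: c / total_carbon for s, c in carbon_flows.items()}
    tof = product_flows[product] / n_sites    # mol product per mol site per second
    return conversion, selectivity, tof

# Hypothetical steady-state values, for illustration only
conv, sel, tof_ch4 = kinetic_metrics(
    f_co_in=1.0e-6, f_co_out=0.9e-6,
    product_flows={"CH4": 0.06e-6, "C2H4": 0.02e-6},
    carbon_numbers={"CH4": 1, "C2H4": 2},
    n_sites=1.0e-6, product="CH4",
)
print(f"X_CO = {conv:.2f}, S_CH4 = {sel['CH4']:.2f}, TOF_CH4 = {tof_ch4:.3f} s^-1")
# prints X_CO = 0.10, S_CH4 = 0.60, TOF_CH4 = 0.060 s^-1
```

In this toy case the carbon balance closes: 0.1 µmol/s of CO converted matches the carbon leaving in the products. With real data, checking that balance is a useful sanity test before the values enter a training set.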

Data Preprocessing & Feature Engineering

Raw data must be transformed into numerical feature vectors.

Common Preprocessing Steps:

- Imputation: K-Nearest Neighbors (KNN) imputation for missing property values (e.g., missing dopant electronegativity).

- Normalization: Min-Max scaling applied to all continuous features (e.g., binding energies, particle sizes).

- Categorical Encoding: One-hot encoding for categorical descriptors (e.g., crystal structure type, predominant surface facet).
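The KNN imputation step can be illustrated with a small NumPy implementation. It is a simplified stand-in for library implementations such as scikit-learn's `KNNImputer`:

- Distances are root-mean-square differences over the features both rows have observed.
- Neighbors are unweighted.

The descriptor values are hypothetical. In practice, scale the features before computing distances.

```python
import numpy as np

def knn_impute(X, k=2):
    """Replace each NaN with the mean of that feature over the k nearest rows.

    Distance: root-mean-square difference over features observed in both rows.
    Only rows that observe the missing feature are eligible neighbors;
    entries with no eligible neighbor are left as NaN.
    """
    X = np.asarray(X, dtype=float)
    out = X.copy()
    for i, j in zip(*np.where(np.isnan(X))):
        candidates = []
        for r in range(X.shape[0]):
            if r == i or np.isnan(X[r, j]):
                continue
            shared = ~np.isnan(X[i]) & ~np.isnan(X[r])
            if shared.any():
                d = np.sqrt(np.mean((X[i, shared] - X[r, shared]) ** 2))
                candidates.append((d, r))
        nearest = [r for _, r in sorted(candidates)[:k]]
        if nearest:
            out[i, j] = X[nearest, j].mean()
    return out

# Hypothetical descriptor rows: [electronegativity, atomic radius (Å), d-band center (eV)]
X_desc = np.array([[1.9, 1.44, -2.5],
                   [2.2, 1.37, np.nan],
                   [2.3, 1.38, -1.8],
                   [0.9, 1.86, -4.0]])
X_filled = knn_impute(X_desc, k=2)
print(X_filled[1, 2])
```

Imputation uses only the original matrix `X`, so values filled earlier never influence later imputations.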

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Catalytic Data Generation

| Reagent/Material | Function/Description |

|---|---|

| Alumina (Al₂O₃) Washcoat | High-surface-area support for dispersing active metal phases. |

| H₂PtCl₆·6H₂O (Chloroplatinic Acid) | Common precursor for synthesizing Pt nanoparticles via impregnation. |

| Zeolite Beta (BEA Framework) | Microporous solid acid catalyst; used for acid-catalyzed reactions like cracking. |

| Cerium-Zirconium Oxide (CeₓZr₁₋ₓO₂) Mixed Oxide | Oxygen storage material; critical for redox catalysis (e.g., automotive three-way catalysts, TWC). |

| Isobutane & Butene Calibration Gas Mix (1% each in He) | Certified standard for calibrating analytical equipment during alkylation studies. |

ANN Model Architecture & Training

A feedforward ANN with specialized input layers is typically used.

Experimental Protocol: Model Training with Hold-Out Validation and Early Stopping

Objective: Train an ANN to predict catalytic activity (TOF) from catalyst descriptors.

- Partitioning: Randomly shuffle the dataset and split into training (70%), validation (15%), and hold-out test (15%) sets.

- Architecture Definition: Using a framework like PyTorch, define a network with:

- Input layer: Nodes = number of feature descriptors.

- Hidden layers: 2-3 layers with 64-128 neurons each, using ReLU activation.

- Output layer: Single neuron (for TOF prediction) or multiple for multi-task learning.

- Training Loop: Train for up to 1000 epochs using Adam optimizer. Employ L2 regularization (weight decay=1e-5) to prevent overfitting.

- Validation: After each epoch, calculate Mean Absolute Error (MAE) on the validation set. Implement early stopping if validation MAE does not improve for 50 consecutive epochs.

- Evaluation: Apply the final model to the unseen test set and report key metrics: MAE, R² score.
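The protocol above can be sketched end to end in NumPy, which keeps the example dependency-free instead of using PyTorch. The sketch rests on these assumptions:

- Training is full-batch, with one Adam step per epoch.
- The learning rate is 1e-2; the protocol does not specify one.
- A 4-feature synthetic dataset stands in for real descriptors.
- L2 regularization is implemented as `weight_decay * W` added to the gradient, as PyTorch's `Adam(weight_decay=...)` does.

```python
import numpy as np

def split_indices(n, rng, f_train=0.70, f_val=0.15):
    """Shuffle and split indices into train/validation/test (70/15/15 by default)."""
    idx = rng.permutation(n)
    a, b = int(f_train * n), int((f_train + f_val) * n)
    return idx[:a], idx[a:b], idx[b:]

def init_mlp(sizes, rng):
    """He-initialized (W, b) pairs for a ReLU MLP with the given layer sizes."""
    return [(rng.normal(0.0, np.sqrt(2.0 / m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, X):
    acts = [X]
    for W, b in params[:-1]:
        acts.append(np.maximum(0.0, acts[-1] @ W + b))   # ReLU hidden layers
    W, b = params[-1]
    return acts[-1] @ W + b, acts                       # single linear output neuron

def gradients(params, X, y, weight_decay):
    """Backpropagate the MSE loss; the L2 penalty enters as weight_decay * W."""
    pred, acts = forward(params, X)
    delta = 2.0 * (pred - y) / len(X)
    grads = []
    for layer in reversed(range(len(params))):
        W, _ = params[layer]
        grads.append((acts[layer].T @ delta + weight_decay * W, delta.sum(axis=0)))
        if layer > 0:
            delta = (delta @ W.T) * (acts[layer] > 0)   # ReLU derivative
    return grads[::-1]

def train(X_tr, y_tr, X_va, y_va, hidden=(64, 64), lr=1e-2, weight_decay=1e-5,
          max_epochs=1000, patience=50, seed=0):
    """Full-batch Adam with early stopping on validation MAE."""
    rng = np.random.default_rng(seed)
    params = init_mlp([X_tr.shape[1], *hidden, 1], rng)
    m = [[np.zeros_like(p) for p in layer] for layer in params]
    v = [[np.zeros_like(p) for p in layer] for layer in params]
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    best_mae, best_params, wait = np.inf, params, 0
    for epoch in range(1, max_epochs + 1):
        grads = gradients(params, X_tr, y_tr, weight_decay)
        new_params = []
        for l, (layer, g_layer) in enumerate(zip(params, grads)):
            updated = []
            for k, (p, g) in enumerate(zip(layer, g_layer)):
                m[l][k] = beta1 * m[l][k] + (1 - beta1) * g
                v[l][k] = beta2 * v[l][k] + (1 - beta2) * g ** 2
                m_hat = m[l][k] / (1 - beta1 ** epoch)
                v_hat = v[l][k] / (1 - beta2 ** epoch)
                updated.append(p - lr * m_hat / (np.sqrt(v_hat) + eps))
            new_params.append(tuple(updated))
        params = new_params
        val_mae = np.mean(np.abs(forward(params, X_va)[0] - y_va))
        if val_mae < best_mae:
            best_mae, best_params, wait = val_mae, params, 0
        else:
            wait += 1
            if wait >= patience:                        # early stopping
                break
    return best_params, best_mae, epoch

# Hypothetical standardized descriptors and a synthetic log(TOF)-like target
rng = np.random.default_rng(42)
X = rng.normal(size=(400, 4))
y = (0.5 * X[:, 0] - X[:, 1] + 0.3 * X[:, 2] * X[:, 3])[:, None]
tr, va, te = split_indices(len(X), rng)
params, best_val_mae, last_epoch = train(X[tr], y[tr], X[va], y[va])

pred = forward(params, X[te])[0]
test_mae = np.mean(np.abs(pred - y[te]))
r2 = 1.0 - np.sum((pred - y[te]) ** 2) / np.sum((y[te] - y[te].mean()) ** 2)
print(f"stopped after epoch {last_epoch}: test MAE = {test_mae:.3f}, R^2 = {r2:.3f}")
```

Moving to PyTorch would replace the hand-written `gradients` function and Adam update with autograd and `torch.optim.Adam`. The split, early-stopping, and test-set evaluation logic would stay the same.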

Diagram 1: ANN Catalyst Discovery Workflow

Model Interpretation & Deployment

Trained models are used for prediction and interpreted to extract scientific insight.

SHAP Analysis Protocol:

- Sample: Use the training set as the background distribution.

- Calculate SHAP Values: Employ the KernelExplainer or DeepExplainer from the SHAP library.

- Visualization: Generate summary plots to rank feature importance and dependence plots to reveal relationships (e.g., how predicted TOF varies with adsorption energy).
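KernelExplainer estimates Shapley values by sampling feature coalitions and filling absent features from the background data. For a model with only a few inputs, the same coalition-based quantity can be computed exactly by enumerating every coalition, which makes the idea concrete. The sketch below uses a hypothetical volcano-shaped surrogate and a random background set. It is not the SHAP library's implementation.

```python
import itertools
import math
import numpy as np

def exact_shapley(f, x, background):
    """Exact Shapley values of model f at sample x.

    Value of a coalition S: mean of f over the background rows with the features
    in S overwritten by x's values (an interventional expectation).
    Cost is O(2^n_features) model calls, so use only for a handful of features.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    n = x.size

    def value(S):
        Z = background.copy()
        if S:
            cols = list(S)
            Z[:, cols] = x[cols]
        return float(np.mean(f(Z)))

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for S in itertools.combinations(others, size):
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi

# Hypothetical surrogate: log TOF peaks at an optimal adsorption energy (volcano-like)
def toy_model(Z):
    e_ads, temp_scaled, dopant_frac = Z[:, 0], Z[:, 1], Z[:, 2]
    return 1.5 - 2.0 * (e_ads + 0.6) ** 2 + 0.3 * temp_scaled + 0.1 * dopant_frac

rng = np.random.default_rng(0)
background = np.column_stack([rng.uniform(-1.2, 0.0, 50), rng.random(50), rng.random(50)])
x = np.array([-0.6, 0.8, 0.2])
phi = exact_shapley(toy_model, x, background)
print(np.round(phi, 3))
```

By construction, the attributions sum to the prediction for `x` minus the mean prediction over the background. This additivity is also what SHAP summary plots rely on.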

Deployment via Web Application:

- Backend: A Flask/Django API loads the saved ANN model (.pth or .h5 format).

- Input: User submits a JSON file with catalyst feature vectors.

- Output: The API returns a JSON object containing predictions (TOF, selectivity) and uncertainty estimates.

Active Learning Loop

The deployed model guides new research, closing the loop.

Diagram 2: Active Learning Cycle for Catalysis
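One way to choose the next experiments in this loop is an upper-confidence-bound rule computed from an ensemble of independently trained ANNs. The ensemble mean rewards promising candidates, and the spread between members rewards unexplored regions. This acquisition function is an illustrative assumption, since the workflow does not prescribe one. The three-member "ensemble" below is a hypothetical analytic stand-in for trained models.

```python
import numpy as np

def select_next_experiments(candidates, ensemble, batch_size=3, kappa=1.0):
    """Rank candidates by an upper confidence bound from an ensemble of models.

    mean:   average prediction across ensemble members (exploitation)
    spread: standard deviation across members, a proxy for model uncertainty
    kappa:  exploration weight; kappa = 0 picks the best predicted candidates
    """
    preds = np.stack([model(candidates) for model in ensemble])  # (n_models, n_cand)
    mean, spread = preds.mean(axis=0), preds.std(axis=0)
    order = np.argsort(-(mean + kappa * spread))
    return order[:batch_size], mean, spread

# Hypothetical ensemble: members agree near a well-sampled region (x = 0.3)
# and diverge away from it, mimicking models trained on sparse data.
ensemble = [lambda X, a=a: 1.0 - (X[:, 0] - 0.3) ** 2 + a * (X[:, 0] - 0.3) ** 3
            for a in (-2.0, 0.0, 2.0)]
candidates = np.linspace(0.0, 1.0, 11)[:, None]

exploit, _, _ = select_next_experiments(candidates, ensemble, kappa=0.0)
explore, _, _ = select_next_experiments(candidates, ensemble, kappa=10.0)
print(candidates[exploit, 0], candidates[explore, 0])
```

The selected candidates would then be synthesized and tested, or computed with DFT, and appended to the training set before the ensemble is retrained.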

This workflow establishes a virtuous cycle where ANN models continuously learn from both planned experiments and theoretical calculations, dramatically accelerating the pace of catalytic discovery and optimization.

This technical guide, framed within a broader thesis on Artificial Neural Network (ANN) development for catalysis research, addresses the critical challenge of integrating heterogeneous data from experimental and theoretical/computational sources. The predictive power of an ANN in catalysis is fundamentally constrained by the quality, consistency, and interoperability of its training data. Effective data preparation and curation are therefore paramount, transforming disparate data streams into a standardized, machine-readable knowledge base for robust model development.

The Data Heterogeneity Landscape in Catalysis Research

Catalysis research generates multifaceted data. Standardizing these streams is the first step toward building a unified dataset for ANN training.

Table 1: Sources and Characteristics of Heterogeneous Data in Catalysis

| Data Source | Data Type | Typical Format(s) | Key Heterogeneity Challenges |

|---|---|---|---|

| Experimental Catalysis | Catalytic activity (e.g., Turnover Frequency, TOF) | Spreadsheets, Lab notebooks, PDF reports | Varied reaction conditions (T, P), inconsistent units, missing error bars, different catalyst naming conventions. |

| | Selectivity/Conversion | Instrument outputs (GC, MS) | Calibration differences, data processing software variance. |

| | Catalyst Characterization | Spectra (XRD, XPS, FTIR), microscopy images | File format diversity (.raw, .dm3, .tiff), instrument-specific metadata, non-uniform resolution. |

| | Stability Tests (e.g., TGA) | Time-series data | Different time intervals, baseline correction methods. |

| Computational Catalysis | DFT Calculations | Output files (VASP, Gaussian) | Different levels of theory (e.g., GGA vs. meta-GGA), basis sets, convergence criteria. |

| | Reaction Energies & Barriers | Text files, databases | Referenced to different energy zero-points (e.g., clean slab vs. gas-phase molecules). |

| | Descriptor Calculations | Python scripts, CSV files | Inconsistent descriptor definitions (e.g., d-band center calculation method). |

| Published Literature | All of the above | PDF, HTML | Unstructured text, figures with embedded data, journal-specific formatting. |

Core Standardization Methodology

Protocol: Establishing a Minimal Information Standard

A community-driven minimal information checklist ensures each data entry is meaningful and reusable.

Experimental Protocol: Data Annotation for a Catalytic Reaction Measurement

- Catalyst Identity: Use a standardized notation (e.g., Pt_10wt%/Al2O3_sph_5nm for 10 wt% Pt on Al₂O₃, spherical, 5 nm average particle size). Include the synthesis method key.

- Reaction Conditions: Record Temperature (K), Pressure (Pa), Reactant Flow Rates (mol/s), and Reactor Type (e.g., packed-bed, CSTR).

- Performance Metrics: Report Turnover Frequency (TOF in s⁻¹) with its calculation basis (e.g., per surface atom from chemisorption), Conversion (%), and Selectivity (%) at a specified time-on-stream (e.g., 1 hour). Always provide associated error estimates.

- Characterization Link: Each activity data point must be linked to the specific catalyst batch and its characterization data (e.g., XRD ID, TEM image ID).

- Metadata: Principal investigator, date, raw data file path, and data processing script version.

Protocol: Computational Data Alignment

To combine DFT data from different sources or calculations:

- Energy Referencing: Choose a common reference, e.g., the clean, optimized slab and gas-phase reference molecules such as H₂, whose energies define 0 eV. Recalculate all adsorption energies and reaction barriers relative to this reference.

- Descriptor Calculation: Standardize the algorithmic definition for common descriptors. For d-band center, specify: the projected density of states (PDOS) energy range, integration method, and Fermi level alignment procedure.

- Level of Theory Tagging: Each computed property must be tagged with a unique identifier for its computational setup (e.g., DFT-PBE-D3-RPBE-400eV).
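The energy-referencing step can be sketched as follows. It assumes one common convention: atomic references derived from gas-phase H₂, H₂O, and CH₄, computed with the same setup as the slabs. The total energies are hypothetical placeholders.

```python
def atomic_references(e_h2, e_h2o, e_ch4):
    """Atomic chemical potentials (eV) from gas-phase H2, H2O and CH4 total
    energies, all computed with the SAME DFT setup as the slabs."""
    return {"H": 0.5 * e_h2, "O": e_h2o - e_h2, "C": e_ch4 - 2.0 * e_h2}

def adsorption_energy(e_slab_ads, e_slab, composition, mu):
    """E_ads = E(slab + adsorbate) - E(clean slab) - sum_i n_i * mu_i."""
    return e_slab_ads - e_slab - sum(n * mu[el] for el, n in composition.items())

# Hypothetical total energies (eV), for illustration only
mu = atomic_references(e_h2=-6.77, e_h2o=-14.22, e_ch4=-24.05)
e_oh = adsorption_energy(e_slab_ads=-291.20, e_slab=-280.00,
                         composition={"O": 1, "H": 1}, mu=mu)
print(f"E_ads(OH*) = {e_oh:.3f} eV")
```

When merging datasets that used different references, recompute every adsorption energy from raw total energies with a single `mu` dictionary. Alternatively, shift each value by the difference in reference energies. Either way, the stored energies become directly comparable.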

Table 2: Standardized Descriptor Template for ANN Input

| Descriptor Category | Standardized Name | Unit | Calculation Method |

|---|---|---|---|

| Geometric | avg_particle_size | nm | From TEM image analysis using ImageJ v1.53, with a minimum count of 200 particles. |

| | surface_atom_fraction | - | Calculated via a cuboctahedral model for NPs < 5 nm; otherwise from the coordination number. |

| Electronic | d_band_center | eV | From the Pd-4d projected DOS, Fermi level aligned, integrated from -10 eV to the Fermi level, using Lobster v3.2.0. |

| | work_function | eV | Difference between the planar-averaged electrostatic potential in the vacuum region and the Fermi level, from a DFT slab calculation. |

| Compositional | alloy_concentration_X | at.% | From EDX or ICP-MS measurement. |

| Experimental | TOF_initial | s⁻¹ | Initial rate normalized per surface atom, with surface atoms determined by H₂ chemisorption at 308 K. |
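The d_band_center entry in Table 2 is the first moment of the d-projected DOS over the stated window. Below is a minimal NumPy sketch. It assumes the PDOS is already available on an energy grid (parsing LOBSTER or VASP output is not shown) and uses a synthetic Gaussian band for illustration.

```python
import numpy as np

def _trapezoid(y, x):
    """Trapezoidal integral of y(x) on a possibly non-uniform grid."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

def d_band_center(energies, pdos_d, e_fermi, e_min=-10.0, e_max=0.0):
    """First moment of the d-projected DOS within [e_min, e_max] relative to E_F.

    energies: absolute energy grid (eV); pdos_d: d-projected DOS on that grid.
    The default window follows Table 2 (-10 eV up to the Fermi level).
    """
    e = np.asarray(energies, dtype=float) - e_fermi   # align Fermi level to 0 eV
    rho = np.asarray(pdos_d, dtype=float)
    window = (e >= e_min) & (e <= e_max)
    e_w, rho_w = e[window], rho[window]
    return _trapezoid(e_w * rho_w, e_w) / _trapezoid(rho_w, e_w)

# Hypothetical PDOS: Gaussian d band centred 2.5 eV below E_F (E_F = 5.0 eV absolute)
e_fermi = 5.0
grid = np.linspace(-15.0, 10.0, 5001) + e_fermi
pdos = np.exp(-0.5 * ((grid - e_fermi + 2.5) / 1.0) ** 2)
center = d_band_center(grid, pdos, e_fermi)
print(round(center, 2))
```

Because the window stops at the Fermi level, the computed center sits slightly below the Gaussian's nominal -2.5 eV. This sensitivity to the integration window is why the table fixes it explicitly.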

Diagram: Data Curation Workflow for Catalysis ANN

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Data Curation in Catalysis Informatics

| Tool / Solution | Category | Primary Function |

|---|---|---|

| Python (Pandas, NumPy) | Programming Language | Core library for data manipulation, transformation, and alignment of tabular data from diverse sources. |

| Catra | Data Curation Platform | Open-source platform specifically designed for extracting and structuring catalyst testing data from spreadsheets and text files. |

| ChemDataExtractor 2.0 | Text Mining | NLP toolkit for automated extraction of chemical entities, properties, and relationships from published literature. |

| Pymatgen | Materials Informatics | Python library for analyzing, manipulating, and transforming computational materials data (VASP, Gaussian outputs). |

| FAIR-DI | Data Infrastructure | Framework for ensuring data is Findable, Accessible, Interoperable, and Reusable (FAIR) via unique identifiers and metadata. |

| OCELOT | Ontology | Ontology for the catalysis domain, providing standardized vocabulary and relationships for semantic data integration. |

| Jupyter Notebooks / Lab | Reproducibility | Interactive environment for documenting, sharing, and executing the entire data curation pipeline. |

Diagram: Data Transformation to ANN Features

Implementing the Pipeline: A Practical Workflow

- Ingestion: Automate data collection from instruments and computational clusters using APIs or monitored directories.

- Standardization: Apply the minimal information protocol using templated scripts. Convert all units to SI. Map all catalyst names to a canonical identifier.

- Validation: Implement rule-based checks (e.g., "TOF cannot be negative", "sum of selectivities ≤ 100%"). Flag outliers for expert review.

- Feature Engineering: Generate the standardized descriptors from Table 2. For missing values, use appropriate imputation (e.g., interpolation for trends, model-based for descriptors) and document the method.

- Storage: Use a versioned database (e.g., SQLite, MongoDB) or structured file format (HDF5) that preserves metadata and provenance. Every datum should be traceable to its origin.
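The validation rules above can be sketched in a few lines. The record layout (`TOF`, `selectivity_pct`) is a hypothetical schema. The outlier check uses the median/MAD modified z-score with the commonly used 3.5 cut-off, which is one robust option among several. Flagged records are returned for expert review rather than deleted.

```python
import numpy as np

def validate_records(records, z_max=3.5):
    """Apply rule-based checks and flag robust outliers for expert review.

    Returns {record_index: [issue, ...]}; nothing is removed.
    Outliers use a median/MAD 'modified z-score' on log10(TOF), which a single
    extreme value cannot mask by inflating the standard deviation.
    """
    issues = {}
    for i, r in enumerate(records):
        if r["TOF"] < 0:
            issues.setdefault(i, []).append("TOF cannot be negative")
        if sum(r["selectivity_pct"].values()) > 100.0 + 1e-6:
            issues.setdefault(i, []).append("sum of selectivities exceeds 100%")
    tof = np.array([r["TOF"] for r in records], dtype=float)
    idx = np.flatnonzero(tof > 0)                 # log scale needs positive TOF
    log_tof = np.log10(tof[idx])
    med = np.median(log_tof)
    mad = np.median(np.abs(log_tof - med))
    if mad > 0:
        modified_z = 0.6745 * (log_tof - med) / mad
        for i in idx[np.abs(modified_z) > z_max]:
            issues.setdefault(int(i), []).append("statistical outlier: expert review")
    return issues

# Hypothetical curated records
records = [
    {"id": "A", "TOF": 0.05, "selectivity_pct": {"CH4": 60.0, "C2H4": 40.0}},
    {"id": "B", "TOF": 0.08, "selectivity_pct": {"CH4": 70.0, "C2H4": 25.0}},
    {"id": "C", "TOF": -0.01, "selectivity_pct": {"CH4": 50.0, "C2H4": 50.0}},
    {"id": "D", "TOF": 0.06, "selectivity_pct": {"CH4": 80.0, "C2H4": 35.0}},
    {"id": "E", "TOF": 40.0, "selectivity_pct": {"CH4": 55.0, "C2H4": 45.0}},
    {"id": "F", "TOF": 0.07, "selectivity_pct": {"CH4": 65.0, "C2H4": 30.0}},
]
issues = validate_records(records)
for i, msgs in sorted(issues.items()):
    print(records[i]["id"], msgs)
```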

The construction of a high-performance ANN for catalysis prediction is an exercise in data-centric science. A rigorous, automated, and community-aligned framework for standardizing heterogeneous experimental and computational data is not merely preparatory work but the foundational step that determines the ceiling of model accuracy and generalizability. The protocols and tools outlined herein provide a roadmap for transforming catalytic data from a collection of facts into a coherent, interconnected, and intelligent resource for accelerating discovery.

Within the broader thesis on Artificial Neural Networks (ANNs) for catalysis—spanning both experimental and theoretical perspectives—supervised learning stands as the foundational paradigm. It enables the crucial bridge between catalyst structure/composition and functional properties. This guide details the technical implementation of supervised models for two key tasks: (1) Property Prediction (mapping from catalyst design space to performance metrics) and (2) Inverse Design (mapping from desired properties back to the design space). This dual capability is central to accelerating the discovery and optimization of catalysts and, by methodological extension, therapeutic molecules in drug development.

Foundational Principles

Supervised learning for catalysis involves training a model on a dataset \(\mathcal{D} = \{(\mathbf{x}_i, \mathbf{y}_i)\}_{i=1}^{N}\), where \(\mathbf{x}_i\) is a representation of a catalyst (e.g., composition, morphology, synthesis conditions) and \(\mathbf{y}_i\) is a vector of target properties (e.g., turnover frequency, selectivity, stability). The model learns the function \(f: \mathcal{X} \rightarrow \mathcal{Y}\). Inverse design inverts this mapping, often via iterative optimization or generative models conditioned on property targets.

Core Methodologies & Experimental Protocols

Data Curation & Representation

Protocol: For heterogeneous catalysis, a typical dataset is constructed from high-throughput experimentation or density functional theory (DFT) calculations.

- Material Descriptors: Calculate or extract features. Common descriptors include elemental properties (electronegativity, atomic radius), orbital-based features (d-band center for surfaces), and geometric descriptors (coordination number, bond lengths).

- Target Properties: Experimental measurements (e.g., product yield from gas chromatography, conversion from mass spectrometry) or theoretical values (activation energy, adsorption energies) are collected.

- Splitting: Data is split into training, validation, and test sets using scaffold splitting (based on core structural motifs) to prevent data leakage and assess model generalizability to novel chemistries.
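Scaffold splitting amounts to splitting by group: every sample that shares a scaffold must land in the same partition. For solids, the group can be a parent structure or host material instead. Below is a minimal pure-Python sketch. It assumes the group keys are already computed; for molecules they could come from RDKit's Murcko scaffold utilities. The labels and the default 70/15/15 fractions are illustrative assumptions, and with a few large groups the realized fractions will only approximate them.

```python
import random
from collections import defaultdict

def group_split(groups, f_train=0.70, f_val=0.15, seed=0):
    """Split sample indices so each group (scaffold, host material, ...) lands in
    exactly one of train/validation/test. Groups are shuffled, then filled greedily."""
    members = defaultdict(list)
    for i, g in enumerate(groups):
        members[g].append(i)
    order = sorted(members)                     # deterministic base order
    random.Random(seed).shuffle(order)
    n = len(groups)
    train, val, test = [], [], []
    for g in order:
        if len(train) + len(members[g]) <= f_train * n:
            train.extend(members[g])
        elif len(val) + len(members[g]) <= f_val * n:
            val.extend(members[g])
        else:
            test.extend(members[g])
    return train, val, test

# Hypothetical group labels, e.g. parent crystal structure of each sample
labels = (["Pt-fcc"] * 5 + ["Pd-fcc"] * 3 + ["Ru-hcp"] * 4
          + ["Co3O4-spinel"] * 2 + ["CeO2-fluorite"] * 6)
train_idx, val_idx, test_idx = group_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Because no group is shared between partitions, test error measures transfer to unseen chemistries rather than interpolation among near-duplicates.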

Model Architectures & Training

Protocol for Training a Graph Neural Network (GNN) for Catalyst Property Prediction:

- Graph Construction: Represent each catalyst (e.g., a molecule or a solid-surface adsorbate system) as a graph \(G = (V, E)\). Nodes \(V\) represent atoms, with features encoding element type, hybridization, etc. Edges \(E\) represent bonds or interatomic distances.

- Model Setup: Implement a Message-Passing Neural Network (MPNN). Each layer updates node features by aggregating ("passing") information from neighboring nodes.

- Training Loop:

- Loss Function: Use Mean Squared Error (MSE) for regression (e.g., predicting energy) or Cross-Entropy for classification (e.g., predicting successful/unsuccessful catalyst).

- Optimizer: Adam optimizer with an initial learning rate of 1e-3.

- Regularization: Apply dropout (rate=0.1) and L2 weight decay (1e-5) to prevent overfitting.

- Validation: Monitor loss on the validation set after each epoch; employ early stopping if validation loss plateaus for 10 consecutive epochs.
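The message-passing update in the protocol can be sketched in NumPy as a sum over neighbor features, followed by a learned linear map and a ReLU. This shows only the forward pass on one hypothetical CO-on-Pt graph with random weights. The output head, MSE loss, Adam, dropout, and early stopping described above are omitted for brevity.

```python
import numpy as np

def message_passing_layer(H, A, W_self, W_msg):
    """One MPNN update: h_v' = ReLU(h_v W_self + (sum_{u in N(v)} h_u) W_msg)."""
    messages = A @ H                  # row v sums the features of v's neighbors
    return np.maximum(0.0, H @ W_self + messages @ W_msg)

def readout(H):
    """Sum pooling: a permutation-invariant graph-level embedding."""
    return H.sum(axis=0)

# Hypothetical adsorbate-surface graph: CO on a Pt-Pt bridge site
# atoms: 0 Pt, 1 Pt, 2 C, 3 O; node features are one-hot element types [Pt, C, O]
H0 = np.array([[1, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)   # Pt-Pt, Pt-C (x2), C-O bonds
rng = np.random.default_rng(0)
layers = [(rng.normal(size=(3, 8)), rng.normal(size=(3, 8))),
          (rng.normal(size=(8, 8)), rng.normal(size=(8, 8)))]

def embed(H, A):
    """Two message-passing layers followed by sum-pooling readout."""
    for W_self, W_msg in layers:
        H = message_passing_layer(H, A, W_self, W_msg)
    return readout(H)

g = embed(H0, A)
print(g.shape)  # prints (8,)
```

Relabeling the atoms permutes `H` and `A` consistently but leaves `g` unchanged. This invariance is what makes such embeddings suitable inputs for predicting a property like adsorption energy.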

Inverse Design via Conditional Generation

Protocol for Conditional Variational Autoencoder (CVAE):

- Architecture: The model consists of an encoder \(q_\phi(z \mid x, y)\), a prior \(p_\theta(z \mid y)\), and a decoder \(p_\theta(x \mid z, y)\). The condition \(y\) is the target property vector.

- Training: Maximize the Evidence Lower Bound (ELBO): \(\mathcal{L}(\theta, \phi; x, y) = \mathbb{E}_{q_\phi(z \mid x, y)}\left[\log p_\theta(x \mid z, y)\right] - D_{\mathrm{KL}}\left(q_\phi(z \mid x, y) \,\|\, p_\theta(z \mid y)\right)\).

- Inference: To design a new catalyst, sample a latent vector \(z\) from the prior \(p_\theta(z \mid y_{\text{target}})\) and decode it using the decoder \(p_\theta(x \mid z, y_{\text{target}})\) to generate a candidate structure \(x\).
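The two non-obvious pieces of CVAE training are the reparameterized sample and the KL term. They are sketched below under common but assumed choices:

- The encoder and prior are diagonal Gaussians.
- The decoder is a unit-variance Gaussian, so the negative log-likelihood term reduces to half the squared error (up to a constant).

The encoder and prior outputs are hypothetical numbers, not outputs of a trained network.

```python
import numpy as np

def reparameterize(mu, logvar, rng):
    """z = mu + sigma * eps, eps ~ N(0, I). Isolating the randomness in eps is what
    lets an autodiff framework propagate gradients to mu and logvar."""
    return mu + np.exp(0.5 * logvar) * rng.standard_normal(np.shape(mu))

def kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p):
    """KL(N(mu_q, diag var_q) || N(mu_p, diag var_p)), summed over latent dimensions."""
    var_q, var_p = np.exp(logvar_q), np.exp(logvar_p)
    return 0.5 * np.sum(logvar_p - logvar_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0,
                        axis=-1)

def negative_elbo(x, x_recon, mu_q, logvar_q, mu_p, logvar_p):
    """-ELBO for a unit-variance Gaussian decoder (constants dropped):
    0.5 * ||x - x_recon||^2 + KL(q(z|x,y) || p(z|y))."""
    recon = 0.5 * np.sum((x - x_recon) ** 2, axis=-1)
    return recon + kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p)

# Hypothetical encoder q_phi(z|x,y) and conditional prior p_theta(z|y) outputs (2-D latent)
rng = np.random.default_rng(0)
mu_q, logvar_q = np.array([0.5, -0.2]), np.array([-1.0, -0.5])
mu_p, logvar_p = np.array([0.0, 0.0]), np.array([0.0, 0.0])
z = reparameterize(mu_q, logvar_q, rng)      # would be passed to the decoder
x = np.array([1.0, 0.0, 0.5])                # hypothetical catalyst encoding
x_recon = np.array([0.9, 0.1, 0.4])          # hypothetical decoder mean
loss = negative_elbo(x, x_recon, mu_q, logvar_q, mu_p, logvar_p)
print(z.shape, round(float(loss), 4))
```

Minimizing this quantity over a dataset is equivalent to maximizing the ELBO above. At inference time, only the prior and the decoder are needed.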

Table 1: Performance of Supervised Models on Benchmark Catalysis Datasets

| Model Architecture | Dataset (Task) | Key Metric | Performance (Test Set) | Reference/Year |

|---|---|---|---|---|

| Graph Neural Network (GNN) | OC20 (Adsorption Energy Prediction) | Mean Absolute Error (MAE) | 0.58 eV | 2023 |

| Ensemble of MLPs | QM9 (Molecular Property Prediction) | MAE on Internal Energy at 298K | < 0.1 kcal/mol | 2022 |

| Transformer (FermiNet) | Catalyst Discovery for CO2 Reduction | Success Rate (Target FE > 80%) | 34% | 2023 |

| Conditional VAE | Inverse Design of Porous Materials | Structure Recovery Rate (Top-10) | 62% | 2024 |

Table 2: Key Research Reagent Solutions & Computational Tools

| Item | Function in Catalysis/Computational Research |

|---|---|

| High-Throughput Experimentation (HTE) Rigs | Automated platforms for parallel synthesis and testing of catalyst libraries under controlled conditions (pressure, temperature, flow). |

| Density Functional Theory (DFT) Codes (VASP, Quantum ESPRESSO) | Compute accurate ground-state electronic structures, adsorption energies, and reaction pathways for training data generation and validation. |

| Graph Representation Libraries (RDKit, pymatgen) | Convert molecular or crystalline structures into standardized graph or descriptor representations for model input. |

| Deep Learning Frameworks (PyTorch, TensorFlow, JAX) | Build, train, and deploy complex neural network architectures (GNNs, Transformers, VAEs). |

| Active Learning Loops (Phoenics, BoTorch) | Intelligently select the most informative experiments or simulations to perform next, optimizing the data acquisition process. |

Visualized Workflows & Relationships

Diagram 1: ANN for Catalysis Integrated Workflow

Diagram 2: CVAE for Inverse Design

Within the broader thesis on Artificial Neural Networks (ANN) for catalysis, integrating experimental and theoretical perspectives, a paradigm shift is occurring. The core challenge in heterogeneous, homogeneous, and biocatalysis is the multidimensional optimization of catalytic systems. This whitepaper details how ANN models serve as surrogate models for high-fidelity simulations and sparse experimental data, enabling the prediction of catalytic performance metrics—activity, selectivity, and stability—and the subsequent identification of optimal operating conditions.

Fundamental ANN Architectures for Catalytic Property Prediction

ANNs map complex relationships between catalyst descriptors/operational variables and target properties. Common architectures include:

- Multilayer Perceptrons (MLPs): For scalar property prediction (e.g., turnover frequency, yield).

- Convolutional Neural Networks (CNNs): For spatial and image-like data (e.g., microscopy images, XRD patterns, surface geometries).

- Graph Neural Networks (GNNs): For molecular and crystal structures, representing atoms as nodes and bonds as edges.

- Hybrid Models: Combining ANNs with physical equations (Physics-Informed Neural Networks) or other ML models for improved extrapolation.

Data Pipeline and Feature Engineering

High-quality, featurized data is critical. Sources include:

- Theoretical: Density Functional Theory (DFT) calculations for adsorption energies, electronic structure, activation barriers.

- Experimental: High-throughput experimentation (HTE) data, spectroscopic characterization (XPS, EXAFS), temporal performance data.

- Operational: Reactor conditions (T, P, flow rates, concentrations).

Table 1: Common Catalyst Descriptors for ANN Input

| Descriptor Category | Specific Examples | Typical Data Source |

|---|---|---|

| Elemental & Compositional | Atomic number, electronegativity, d-band center, composition ratios | Periodic Table, DFT, XPS |

| Structural | Coordination number, bond lengths, crystal phase, surface energy | XRD, EXAFS, DFT |

| Electronic | Bader charge, density of states, work function | DFT, UPS/Kelvin Probe |

| Morphological | Particle size, facet distribution, porosity | TEM, BET Surface Area |

| Operational | Temperature, pressure, reactant partial pressure, space velocity | Experiment Control Systems |

Detailed Experimental & Computational Protocols

Protocol: High-Throughput Catalyst Screening for ANN Training

Objective: Generate standardized activity/selectivity data across a compositional library.

- Library Synthesis: Use inkjet printing or impregnation robots to prepare catalyst arrays (e.g., M1-MxOy on Al2O3) on a standardized substrate.

- Characterization: Perform rapid in-situ XRD and XPS on each library member.

- Activity Testing: Place array in a scanning mass spectrometer reactor. Expose to reactant flow (e.g., CO2 + H2). Raster the probe to measure product evolution (CO, CH4, CH3OH) for each spot at controlled T (200-400°C) and P (1-20 bar).

- Data Logging: For each spot, record descriptors (composition, lattice parameter, surface oxidation state) and targets (conversion %, selectivity %, deactivation rate over 24h).

- Data Curation: Assemble into a structured CSV file for ANN training.

Protocol: DFT-Augmented ANN for Stability Prediction

Objective: Predict catalyst sintering propensity under operating conditions.

- DFT Calculations: For a model nanoparticle (e.g., Pt₁₀ on CeO₂), calculate:

- Metal-Adsorbate binding energies (\(E_{\mathrm{O}}\), \(E_{\mathrm{CO}}\)).

- Metal-support adhesion energy.

- Diffusion barriers for single atoms on the support.

- Feature Generation: Use these energies as descriptors alongside experimental particle size.

- ANN Training: Train a recurrent neural network (RNN) on temporal experimental data of particle growth vs. T, P, gas environment.

- Validation: Compare ANN-predicted stability maps against in-situ TEM observations of sintering.

Diagram Title: ANN Workflow for Catalyst Stability Prediction

Key Applications & Quantitative Performance

Table 2: ANN Performance in Catalytic Property Prediction (Recent Benchmarks)

| Application | Target Property | ANN Architecture | Key Descriptors | Reported Performance (Metric) | Reference Year |

|---|---|---|---|---|---|

| CO2 Reduction | CO selectivity vs. CH4 | Ensemble MLP | d-band center, *OH binding, coordination number | R² = 0.92, MAE = 5.2% selectivity | 2023 |

| Methane Combustion | Light-off Temperature (T50) | GNN | Metal-O bond length, oxide formation energy | MAE = 15°C on test set | 2024 |

| Propane Dehydrogenation | C3H6 Yield at 24h | Hybrid PINN | Pt-Pt distance, support acidity, Sn/Pt ratio | Predicts deactivation within 8% error | 2023 |

| Water-Gas Shift | Optimal Operating Temperature | CNN + MLP | Operando Raman spectra features, P, GHSV | Identifies optimum within ±10°C | 2024 |

| Cross-Coupling | Reaction Yield | Molecular GNN | Morgan fingerprints, solvent polarity, ligand sterics | R² = 0.87 on unseen substrates | 2023 |

Predicting Optimal Operating Conditions

ANNs enable navigation of the complex condition-property landscape. A typical workflow involves:

- Training an ANN on historical experimental data.

- Coupling the ANN with a global optimization algorithm (e.g., Genetic Algorithm, Bayesian Optimization).

- Defining an objective function (e.g., Maximize [Yield × Stability]).

- Letting the optimizer query the ANN to find global optima in T, P, space velocity, and feed composition.
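The coupling between a trained ANN and an optimizer can be sketched with a simple real-coded genetic algorithm: truncation selection, blend crossover, Gaussian mutation, and elitism. The surrogate below is a hypothetical analytic stand-in for a trained ANN that returns predicted yield × stability. With a real model, `surrogate` would wrap its batched prediction call.

```python
import numpy as np

def genetic_optimize(surrogate, bounds, pop_size=40, generations=100,
                     mutation_scale=0.05, seed=0):
    """Maximize surrogate(conditions) inside box bounds with a simple real-coded GA.

    surrogate: maps an (n, d) array of conditions to (n,) objective values.
    bounds:    sequence of (low, high) per variable, e.g. [(T_lo, T_hi), (P_lo, P_hi)].
    The search runs in the unit cube and is rescaled to physical units for scoring.
    """
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T
    pop = rng.random((pop_size, lo.size))
    n_elite = pop_size // 2
    for _ in range(generations):
        fitness = surrogate(lo + pop * (hi - lo))
        elite = pop[np.argsort(fitness)[::-1][:n_elite]]             # truncation selection
        n_child = pop_size - n_elite
        parent_a = elite[rng.integers(n_elite, size=n_child)]
        parent_b = elite[rng.integers(n_elite, size=n_child)]
        alpha = rng.random((n_child, 1))
        children = alpha * parent_a + (1.0 - alpha) * parent_b       # blend crossover
        children += rng.normal(0.0, mutation_scale, children.shape)  # Gaussian mutation
        pop = np.clip(np.vstack([elite, children]), 0.0, 1.0)        # elites survive
    fitness = surrogate(lo + pop * (hi - lo))
    best = lo + pop[np.argmax(fitness)] * (hi - lo)
    return best, float(fitness.max())

def toy_surrogate(X):
    """Hypothetical stand-in for ANN-predicted yield x stability vs. (T, P)."""
    T, P = X[:, 0], X[:, 1]
    predicted_yield = np.exp(-((T - 600.0) / 100.0) ** 2)
    predicted_stability = np.exp(-((P - 10.0) / 5.0) ** 2)
    return predicted_yield * predicted_stability

best_conditions, best_value = genetic_optimize(toy_surrogate, bounds=[(400.0, 800.0), (1.0, 20.0)])
print(np.round(best_conditions, 1), round(best_value, 3))
```

A Bayesian optimizer would replace the GA loop with a probabilistic model and an acquisition function. The interface stays the same: the optimizer proposes conditions and the ANN scores them.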

Diagram Title: ANN-Bayesian Optimization for Reaction Conditions

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Tools for ANN-Catalysis Research

| Item / Solution | Function in ANN-Catalysis Research | Example Vendor/Software |

|---|---|---|

| High-Throughput Reactor System | Generates large, consistent datasets for ANN training under varied conditions. | HTE ChemSystems, Autolab |

| Standardized Catalyst Libraries | Provides controlled variable spaces (composition, structure) for model development. | NIST RM 8870-8872 |

| Operando Spectroscopy Cells | Provides real-time descriptor data (e.g., surface species) for dynamic ANN models. | Harrick, SPECS in-situ cells |

| DFT Software Suites | Computes electronic/energetic descriptors for catalysts not yet synthesized. | VASP, Quantum ESPRESSO, CP2K |

| ML Frameworks | Provides tools to build, train, and validate ANN architectures. | PyTorch, TensorFlow, Scikit-learn |

| Catalysis-Specific ML Libraries | Offers pre-built tools for featurizing molecules and surfaces. | CatLearn, Amp, DScribe |

| Active Learning Platforms | Manages iterative experiment-ANN loops for targeted discovery. | ChemOS, CARP (Catalysis AI Platform) |

The integration of ANN models, fed by data from both controlled experiments and first-principles theory, is maturing into an essential methodology in catalysis research. It moves the field beyond intuition-based design to a quantitative, predictive science. The key applications—predicting activity, selectivity, stability, and optimal conditions—are fundamentally interconnected through the common framework of the ANN as a high-dimensional regressor and optimizer. This approach, central to the presented thesis, promises to accelerate the discovery and development of next-generation catalysts for energy and sustainable chemistry.

1. Introduction: Framing within ANN for Catalysis Research

The integration of Artificial Neural Networks (ANN) in catalysis research synthesizes experimental and theoretical perspectives, creating a closed-loop discovery engine. High-Throughput Virtual Screening (HTVS) serves as the in silico theory-driven front, rapidly exploring vast chemical spaces. Its most promising candidates then feed into Self-Driving Laboratories (SDLs)—the automated experimental back-end—which validate, optimize, and generate new high-fidelity data. This ANN-guided cycle accelerates the discovery of catalysts, materials, and molecular entities with unprecedented efficiency.

2. High-Throughput Virtual Screening: The Computational Filter

HTVS leverages ANNs to predict key molecular properties, filtering millions of candidates to hundreds of viable leads.

2.1 Core Methodology & Protocols

Protocol: ANN-Based Virtual Screening Workflow

- Library Curation: Assemble a molecular library (e.g., ZINC20, Enamine REAL) of 10^6 - 10^9 compounds. Apply rule-based filters (e.g., drug-likeness, synthetic accessibility).

- Feature Representation: Encode molecules into ANN-compatible descriptors (e.g., ECFP fingerprints, Graph Neural Network-ready graphs, or 3D pharmacophore features).

- Model Inference: Employ a pre-trained ANN model (e.g., a Convolutional Neural Network for image-like representations or a Message-Passing Neural Network for graphs) to predict target properties (binding affinity, catalytic turnover frequency, solubility).

- Post-Processing: Apply molecular dynamics simulations (e.g., using OpenMM) or more precise DFT calculations (e.g., Gaussian, ORCA) to the top 0.01% of hits for validation and pose refinement.
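The inference and ranking steps of this protocol can be sketched in NumPy. This is a minimal illustration, not a production pipeline. The fingerprints are random bit vectors standing in for ECFP features. The "pre-trained" dense network has random weights standing in for trained parameters. `mlp_predict` and `screen` are hypothetical helper names. Scoring in batches keeps memory bounded for large libraries. `np.argpartition` selects the top fraction without sorting the whole library; the default of 1e-4 corresponds to the 0.01% cut-off in the protocol.

```python
import numpy as np


def mlp_predict(X, weights, biases):
    """Forward pass of a dense network: ReLU hidden layers, linear output."""
    h = X
    for W, b in zip(weights[:-1], biases[:-1]):
        h = np.maximum(h @ W + b, 0.0)
    return (h @ weights[-1] + biases[-1]).ravel()


def screen(fingerprints, weights, biases, top_fraction=1e-4, batch_size=5_000):
    """Score a library in batches; return top-fraction indices (best first) and all scores."""
    scores = np.concatenate([
        mlp_predict(fingerprints[i:i + batch_size], weights, biases)
        for i in range(0, len(fingerprints), batch_size)
    ])
    n_keep = max(1, int(round(top_fraction * len(scores))))
    # argpartition isolates the n_keep largest scores in O(n); only those get sorted.
    top = np.argpartition(-scores, n_keep - 1)[:n_keep]
    return top[np.argsort(-scores[top])], scores


# Stand-in data: 20,000 molecules with 256-bit fingerprints (about 5% of bits set).
rng = np.random.default_rng(0)
n_mols, n_bits = 20_000, 256
fps = (rng.random((n_mols, n_bits)) < 0.05).astype(np.float32)

# Stand-in "pre-trained" weights (He-style random init) for a 256-128-32-1 network.
sizes = [n_bits, 128, 32, 1]
weights = [rng.normal(0.0, np.sqrt(2.0 / m), (m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

hits, scores = screen(fps, weights, biases, top_fraction=1e-3)
print(len(hits), scores[hits[:3]])
```

In a real workflow, `hits` would be the shortlist passed on to MD or DFT post-processing.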

2.2 Key Quantitative Data

Table 1: Performance Comparison of ANN Architectures for Virtual Screening

| ANN Architecture | Typical Library Size Screened | Speed (molecules/sec) | Typical Use Case | AUC-ROC Range |
|---|---|---|---|---|
| Dense Neural Network (on fingerprints) | 10^6 - 10^8 | 10^4 - 10^5 | Early ADMET, activity prediction | 0.75 - 0.90 |
| Convolutional Neural Network (on images) | 10^6 - 10^7 | 10^3 - 10^4 | Ligand-based virtual screening | 0.80 - 0.92 |
| Graph Neural Network (MPNN) | 10^5 - 10^7 | 10^2 - 10^3 | Structure-activity relationship, reactivity prediction | 0.85 - 0.95 |
| Transformer (e.g., ChemBERTa) | 10^6 - 10^8 | 10^3 - 10^4 | Property prediction from SMILES | 0.82 - 0.93 |
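The AUC-ROC column in Table 1 summarizes how well a model ranks actives above inactives on a labeled validation set. A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking. The sketch below implements the rank-based (Mann-Whitney) definition, with ties counted as one half. `auc_roc` is a hypothetical helper name and the labels are toy data. In practice, scikit-learn's `roc_auc_score` computes the same quantity.

```python
import numpy as np


def auc_roc(y_true, scores):
    """Probability that a randomly chosen active scores above a randomly chosen inactive."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one active and one inactive example")
    # Compare all active/inactive pairs: O(n_pos * n_neg), fine for validation-set sizes.
    wins = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((wins + 0.5 * ties) / (pos.size * neg.size))


labels = [1, 0, 1, 1, 0, 0]            # 1 = active, 0 = inactive (toy data)
predicted = [0.9, 0.3, 0.6, 0.4, 0.5, 0.1]
print(auc_roc(labels, predicted))      # 8 of 9 active/inactive pairs are ranked correctly
```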

3. Guiding Automated Experimentation: The Self-Driving Lab (SDL)

SDLs are physical robotic platforms integrated with a central AI controller (typically an ANN-based optimizer) that iteratively designs, executes, and learns from experiments.

3.1 Core Experimental Protocol

Protocol: Single Iteration of a Catalysis-Focused SDL

- Design of Experiment (DoE): The AI controller selects the next batch of experiments from the candidate set (from HTVS) by maximizing an acquisition function (e.g., Expected Improvement). The controller is typically a Bayesian Neural Network, or a Gaussian Process model as a common non-neural alternative.

- Automated Execution: Robotic fluid handlers (e.g., from Opentrons or HighRes Biosolutions) prepare reactions varying parameters (catalyst, ligand, solvent, temperature, time). An inline analytical suite (e.g., HPLC, GC-MS, plate reader) quantifies yield/selectivity.

- Data Processing & Model Update: Analytical raw data is automatically processed. The results are added to the training dataset, and the ANN controller is retrained to close the loop.
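The acquisition step in the DoE bullet can be made concrete. Suppose a surrogate returns a Gaussian predictive mean $\mu$ and standard deviation $\sigma$. Expected Improvement over the best observed value $f^*$ then has the closed form $\mathrm{EI} = (\mu - f^* - \xi)\,\Phi(z) + \sigma\,\phi(z)$, with $z = (\mu - f^* - \xi)/\sigma$. Here $\Phi$ and $\phi$ are the standard normal CDF and PDF, and $\xi$ is a small exploration margin.

The sketch below makes these assumptions:

- the objective is yield, to be maximized;
- $\xi = 0.01$;
- a batch is chosen by greedily taking the top-k EI values;
- the surrogate predictions are made-up numbers and the candidate labels are hypothetical.

Greedy top-k ignores correlations between batch members. BoTorch provides joint batch acquisition functions (e.g., q-Expected Improvement) for that purpose.

```python
import math


def expected_improvement(mu, sigma, best_so_far, xi=0.01):
    """Closed-form Expected Improvement for maximization under a Gaussian prediction."""
    improvement = mu - best_so_far - xi
    if sigma <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(improvement, 0.0)
    z = improvement / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))        # standard normal CDF
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal PDF
    return improvement * cdf + sigma * pdf


def select_batch(candidates, predict, best_so_far, batch_size=4, xi=0.01):
    """Greedy top-k batch by EI; predict(c) returns (mean, std) from the surrogate."""
    scored = [(expected_improvement(*predict(c), best_so_far, xi), c) for c in candidates]
    scored.sort(key=lambda t: t[0], reverse=True)
    return [c for _, c in scored[:batch_size]]


# Hypothetical surrogate output: (catalyst/ligand, temperature in C) -> (mean yield, std).
predictions = {
    ("Pd/L1", 60): (0.72, 0.05),
    ("Pd/L2", 80): (0.65, 0.20),
    ("Ni/L1", 60): (0.40, 0.30),
    ("Ni/L3", 100): (0.75, 0.01),
}
batch = select_batch(predictions, predictions.get, best_so_far=0.70, batch_size=2)
print(batch)  # -> [('Pd/L2', 80), ('Ni/L3', 100)]
```

The uncertain Pd/L2 candidate ranks first despite a lower predicted mean than Pd/L1. This shows how EI trades off predicted performance against the chance of discovering something better.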

3.2 Key Quantitative Data

Table 2: Metrics and Impact of Representative Self-Driving Labs

| SDL Platform/Study | Domain | Optimization Parameters | Experiments to Optimum | Time Savings vs. Manual |
|---|---|---|---|---|
| AI-Chemist (USTC) | Oxide photocatalysts | 5 (composition, conditions) | < 100 | ~90% |
| Coscientist (CMU) | Cross-coupling conditions | 4 (catalyst, ligand, base, solvent) | < 50 | >95% |
| Ada (U. Toronto) | Polymer photovoltaic materials | 3 (composition, processing) | ~200 | ~85% |
| RoboRXN (IBM) | Organic synthesis pathway | Reaction steps & conditions | N/A (autonomous flow) | >70% |

4. Integrated Workflow Diagram

Figure: ANN-Driven Closed Loop for Catalysis Discovery

5. The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Resources for Building an HTVS-SDL Pipeline

| Item / Solution | Category | Function & Explanation |
|---|---|---|
| ZINC20 / Enamine REAL | Compound Libraries | Massive, commercially available databases for virtual screening, providing purchasable molecules. |
| RDKit | Cheminformatics | Open-source toolkit for molecule manipulation, descriptor calculation, and integration into ANN pipelines. |
| DeepChem | ML Framework | Open-source library specifically for deep learning on molecular data, providing pre-built ANN models. |
| Gaussian 16 / ORCA | Quantum Chemistry | Software for precise DFT calculations to validate and refine HTVS hits before experimental testing. |
| Opentrons OT-2 / Chemspeed Swing | Robotic Liquid Handler | Automates reagent dispensing and reaction setup in SDLs with programmable protocols. |
| Mettler Toledo React-IR / EasySampler | Inline Analytics | Provides real-time reaction monitoring (IR spectroscopy) and automated sampling for kinetic analysis. |
| Bayesian Optimization Toolbox (BoTorch) | AI Controller | PyTorch-based library for building advanced Bayesian optimization loops, the "brain" of an SDL. |
| Citrination (Citrine Informatics) | Data Platform | Manages structured materials/chemistry data, enabling ANN training and SDL decision-making. |

Overcoming Challenges in Catalytic ANN Development: Data, Model, and Interpretability Issues

Within the broader thesis on Artificial Neural Networks (ANNs) for catalysis—integrating experimental kinetics, spectroscopy, and computational (ab initio, DFT) thermodynamics—data scarcity is a fundamental bottleneck. High-throughput experimentation and accurate in silico simulations remain resource-intensive. This guide details pragmatic techniques to overcome limited dataset sizes, enabling robust ANN models for catalyst discovery, optimization, and mechanistic insight.

Core Techniques: Methodologies and Protocols

Transfer Learning (TL)

Transfer learning repurposes knowledge from a source domain (large dataset) to a target domain (small catalytic dataset). The paradigm is particularly apt when source data comes from theoretical calculations, and target data from experiment.

Protocol: Feature Extraction & Fine-Tuning for Catalytic Property Prediction

- Source Model Selection & Pre-training: Select a high-performance ANN (e.g., Graph Neural Network) pre-trained on a large computational catalyst database (e.g., OC20, CatHub). This model has learned fundamental representations of atomic structures, adsorption energies, and electronic features.

- Dataset Preparation:

  - Source Domain: Large set of DFT-calculated adsorption energies/activation barriers for various adsorbates on metal/alloy surfaces.

  - Target Domain: Small experimental dataset (e.g., <200 samples) of turnover frequencies (TOF) or selectivity for a specific catalytic reaction (e.g., CO2 hydrogenation) under defined conditions (T, P).

- Feature Extraction Stage:

  - Remove the final regression/classification layer of the pre-trained ANN.

  - Pass your target-domain catalyst structures (represented as graphs or descriptors) through the frozen base network.

  - Extract the high-dimensional feature vectors from the last layer before the removed head. These are your "learned descriptors."

- Fine-Tuning Stage:

  - Append a new, randomly initialized output layer(s) matching your target property (e.g., a single neuron for TOF prediction).

  - Optionally unfreeze and retrain some of the final layers of the base network along with the new head, using the small target dataset. Use a low learning rate (e.g., 1e-5 to 1e-4) and strong regularization (e.g., L2, Dropout).

- Validation: Perform rigorous k-fold cross-validation on the target domain data. Use a held-out experimental test set never seen during fine-tuning.
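The feature-extraction route can be sketched in NumPy: train only a new, regularized output head, choose its regularization by k-fold cross-validation, and check it on an untouched held-out set. Everything here is a stand-in under stated assumptions:

- `frozen_base` plays the role of a pre-trained network's penultimate layer. It uses random weights rather than a real checkpoint (e.g., an OC20-trained GNN).
- The 60-sample target set and its log(TOF)-like labels are synthetic.
- A closed-form ridge regression replaces the gradient-trained output neuron, so the L2 penalty is explicit and no learning rate is needed.

Unfreezing and fine-tuning base layers is not shown, since that step requires an autodiff framework such as PyTorch. All helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical frozen base: random weights stand in for a loaded pre-trained checkpoint.
W1 = rng.normal(0.0, 1.0 / np.sqrt(16), (16, 64))
W2 = rng.normal(0.0, 1.0 / np.sqrt(64), (64, 32))


def frozen_base(X):
    """Penultimate-layer embedding ("learned descriptors"); weights are never updated."""
    return np.tanh(np.tanh(X @ W1) @ W2)


def fit_ridge_head(Z, y, lam):
    """New linear output head fitted in closed form with an L2 penalty (bias not penalized)."""
    Zb = np.hstack([Z, np.ones((len(Z), 1))])
    penalty = lam * np.eye(Zb.shape[1])
    penalty[-1, -1] = 0.0
    return np.linalg.solve(Zb.T @ Zb + penalty, Zb.T @ y)


def predict_head(Z, w):
    return np.hstack([Z, np.ones((len(Z), 1))]) @ w


def kfold_mae(Z, y, lam, k=5, seed=0):
    """Mean absolute error averaged over k folds of the training data."""
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), k)
    maes = []
    for i, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        w = fit_ridge_head(Z[train], y[train], lam)
        maes.append(np.mean(np.abs(predict_head(Z[test], w) - y[test])))
    return float(np.mean(maes))


# Synthetic target domain: 60 catalysts x 16 raw features, log(TOF)-like labels.
X = rng.normal(size=(60, 16))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(0.0, 0.1, size=60)

Z = frozen_base(X)                               # computed once; the base stays frozen
train, test = np.arange(48), np.arange(48, 60)   # held-out set, never used for tuning

lams = [1e-3, 1e-2, 1e-1, 1.0, 10.0]
cv_mae = {lam: kfold_mae(Z[train], y[train], lam) for lam in lams}
best_lam = min(cv_mae, key=cv_mae.get)
w = fit_ridge_head(Z[train], y[train], best_lam)
test_mae = float(np.mean(np.abs(predict_head(Z[test], w) - y[test])))
print(f"lambda={best_lam}, CV MAE={cv_mae[best_lam]:.3f}, held-out MAE={test_mae:.3f}")
```

The ridge strength is chosen only from cross-validation on the training rows. The held-out MAE is therefore an honest estimate, as the validation step requires.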

Data Augmentation (DA)

Data augmentation artificially expands the training set by creating modified, physically plausible versions of existing data points.

Protocol: Physics-Informed Data Augmentation for Catalysis

- Descriptor-Based Augmentation (for non-graph models):

  - Apply controlled "noise" or perturbations to numerical catalyst descriptors (e.g., d-band center, coordination number, electronegativity) within physically meaningful bounds derived from uncertainty estimates in experiment or theory.

  - Method: For each sample $i$ with descriptor vector $\mathbf{x}_i$, generate an augmented sample $\mathbf{x}_i' = \mathbf{x}_i + \boldsymbol{\varepsilon}$, where $\boldsymbol{\varepsilon} \sim \mathcal{N}\left(\mathbf{0}, \operatorname{diag}(\sigma_1^2, \dots, \sigma_d^2)\right)$. The standard deviation $\sigma_j$ is set per descriptor $j$ (e.g., 5% of its empirical range or based on measurement error).

- Graph-Based Augmentation (for GNNs):

  - Atom/Node Perturbation: Slightly perturb the feature vector of a subset of atoms (e.g., adding noise to initial atomic features).

  - Edge Perturbation: Randomly add or remove a small fraction of bonds (edges) within a crystal or molecular graph, simulating surface defects or adsorbate configuration variance.

  - Subgraph Sampling: For a large catalyst structure, sample different local environments or adsorption sites as distinct (but related) training examples.

- Synthetic Data from Theory: Use cheap, lower-fidelity computational methods (e.g., semi-empirical, force fields) to generate approximate property labels for hypothetical or slightly altered catalyst structures, followed by calibration with a handful of high-fidelity (DFT/experimental) points.
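The descriptor jitter defined above and a simple edge perturbation for graphs can be sketched in NumPy. The helper names, the 5%-of-range default for $\sigma_j$, and the toy data are illustrative assumptions. Two cautions apply:

- Augment only the training split, after the train/test split, so that near-copies of test samples do not leak into training.
- The edge sketch adds bonds between random atom pairs. A physically plausible version would restrict additions to atom pairs within a bonding cutoff distance.

```python
import numpy as np


def jitter_descriptors(X, y, n_copies=4, frac_of_range=0.05, sigma=None, seed=0):
    """Return originals plus n_copies jittered copies, x_i' = x_i + eps with
    eps ~ N(0, diag(sigma^2)). Labels are copied unchanged."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    if sigma is None:
        sigma = frac_of_range * (X.max(axis=0) - X.min(axis=0))  # per-descriptor std
    noisy = X[None, :, :] + rng.normal(size=(n_copies, *X.shape)) * sigma
    X_aug = np.vstack([X, noisy.reshape(-1, X.shape[1])])
    y_aug = np.tile(np.asarray(y), n_copies + 1)  # same order: originals, copy 1, copy 2, ...
    return X_aug, y_aug


def perturb_edges(edges, n_nodes, drop_frac=0.05, add_frac=0.05, seed=0):
    """Drop and add a small fraction of undirected edges; edges are (i, j) atom pairs."""
    rng = np.random.default_rng(seed)
    edges = sorted({tuple(sorted(e)) for e in edges})
    n_drop = int(round(drop_frac * len(edges)))
    kept = {edges[k] for k in rng.permutation(len(edges))[n_drop:]}
    n_add, attempts = int(round(add_frac * len(edges))), 0
    while n_add > 0 and attempts < 1000:  # bounded loop in case the graph is nearly complete
        attempts += 1
        i, j = sorted(rng.choice(n_nodes, size=2, replace=False).tolist())
        if (i, j) not in kept:
            kept.add((i, j))
            n_add -= 1
    return sorted(kept)


rng = np.random.default_rng(1)
X = rng.normal(size=(120, 3))   # e.g. d-band center, coordination number, electronegativity
y = rng.normal(size=120)
X_aug, y_aug = jitter_descriptors(X, y)          # 120 originals + 4 x 120 jittered copies
ring = [(k, (k + 1) % 20) for k in range(20)]    # toy 20-atom cyclic graph
ring_aug = perturb_edges(ring, n_nodes=20, drop_frac=0.1, add_frac=0.1)
print(X_aug.shape, len(ring_aug))
```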

Table 1: Efficacy of Techniques in Representative Catalysis Studies

| Technique | Source Domain (Size) | Target Catalytic Task (Size) | Performance Gain (vs. Training from Scratch) | Key Metric | Reference (Example) |
|---|---|---|---|---|---|
| Transfer Learning | DFT Adsorption Energies on metals (~100k) | Experimental Methanation TOF on Ni-alloys (54) | MAE reduced by ~62% | Mean Absolute Error (MAE) on log(TOF) | Wang et al., ACS Catal., 2022 |
| Fine-Tuning GNN | OC20 Dataset (~1.3M structures) | Experimental OER Overpotential (Transition Metals) (210) | R² improved from 0.31 to 0.79 | Coefficient of Determination (R²) | Wang et al., ACS Catal., 2022 |
| Descriptor Augmentation | Experimental CO Oxidation Activity (120) | Same, after 5x augmentation (600) | Predictive uncertainty reduced by ~45% | Ensemble Model Variance | Li et al., J. Chem. Inf. Model., 2023 |
| Theory-Guided DA | DFT-derived COHP descriptors (~10k) | Experimental Ethylene Hydrogenation Rate (78) | Required training data reduced by 10x for same accuracy | Data Efficiency | Li et al., J. Chem. Inf. Model., 2023 |

Table 2: Comparison of Techniques' Suitability

| Characteristic | Transfer Learning | Data Augmentation |
|---|---|---|
| Best Use Case | Target task is related to a large, existing source dataset. | Data generation is costly, but plausible variations can be defined. |
| Data Requirement | Requires a relevant source dataset. | Requires rules/physics for meaningful variation. |
| Computational Cost | Moderate-High (pre-training required). | Low (applied during training). |
| Risk of Negative Transfer | High (if source & target are unrelated). | Low (if physics rules are sound). |
| Typical ANN Architecture | Deep Networks (CNNs, GNNs). | Any (Descriptors, CNNs, GNNs). |

Visualized Workflows

Figure: ANN Transfer Learning Workflow

Figure: Data Augmentation Strategies

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Implementing TL & DA in Catalysis

| Item / Resource | Function & Relevance | Example / Format |
|---|---|---|
| Pre-trained ANN Models | Foundation for Transfer Learning. Provides learned chemical representations. | OC20 Pretrained GNNs (e.g., SchNet, DimeNet++), CatBERTa (for text mining). |
| Catalysis Databases | Source for pre-training or generating synthetic data via theory. | CatHub, NOMAD, Catalysis-Hub.org, Materials Project. |
| Descriptor Libraries | Enables descriptor-based augmentation and model input. | pymatgen, ase, catlearn for computing structural/electronic features. |
| Graph Neural Network Libs | Essential for implementing TL/DA on graph-structured catalyst data. | PyTorch Geometric (PyG), DGL, JAX-MD. |
| Uncertainty Quantification Tools | Critical for evaluating model confidence on small data and guiding DA. | Ensemble methods, Monte Carlo Dropout, evidential deep learning. |
| Active Learning Platforms | Integrates with TL/DA to iteratively select most informative experiments. | ChemOS, AMD, or custom scripts based on Bayesian optimization. |
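Of the uncertainty-quantification options listed in Table 3, a bootstrap ensemble is the simplest to sketch. The idea is to train several models on resampled data and report the spread of their predictions. To keep the example short, quadratic polynomial fits stand in for ANN ensemble members. This is an assumption; the mean/standard-deviation aggregation works the same way for networks. The one-descriptor, volcano-like data set is synthetic. The spread grows when the model is queried outside the range of its training data. That behavior is what makes ensemble variance useful for flagging unreliable predictions and for choosing the next experiment.

```python
import numpy as np


def bootstrap_ensemble(x, y, degree=2, n_models=20, seed=0):
    """Fit n_models polynomial regressors, each on a bootstrap resample of (x, y)."""
    rng = np.random.default_rng(seed)
    members = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), size=len(x))  # sample with replacement
        members.append(np.polyfit(x[idx], y[idx], degree))
    return members


def ensemble_predict(members, x_new):
    """Ensemble mean and standard deviation at the query points."""
    preds = np.array([np.polyval(coeffs, x_new) for coeffs in members])
    return preds.mean(axis=0), preds.std(axis=0)


# Synthetic volcano-like data: activity vs. a normalized descriptor on [0, 1].
rng = np.random.default_rng(3)
x = np.linspace(0.0, 1.0, 25)
y = 1.0 - 4.0 * (x - 0.5) ** 2 + rng.normal(0.0, 0.05, size=x.size)

members = bootstrap_ensemble(x, y)
mean, std = ensemble_predict(members, np.array([0.5, 2.0]))  # inside vs. far outside the data
print(mean, std)
```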

1. Introduction