Predicting Catalytic Oxidation in Drug Metabolism: A Comparative Guide to ANN, SVM, and MLR QSAR Models for Researchers

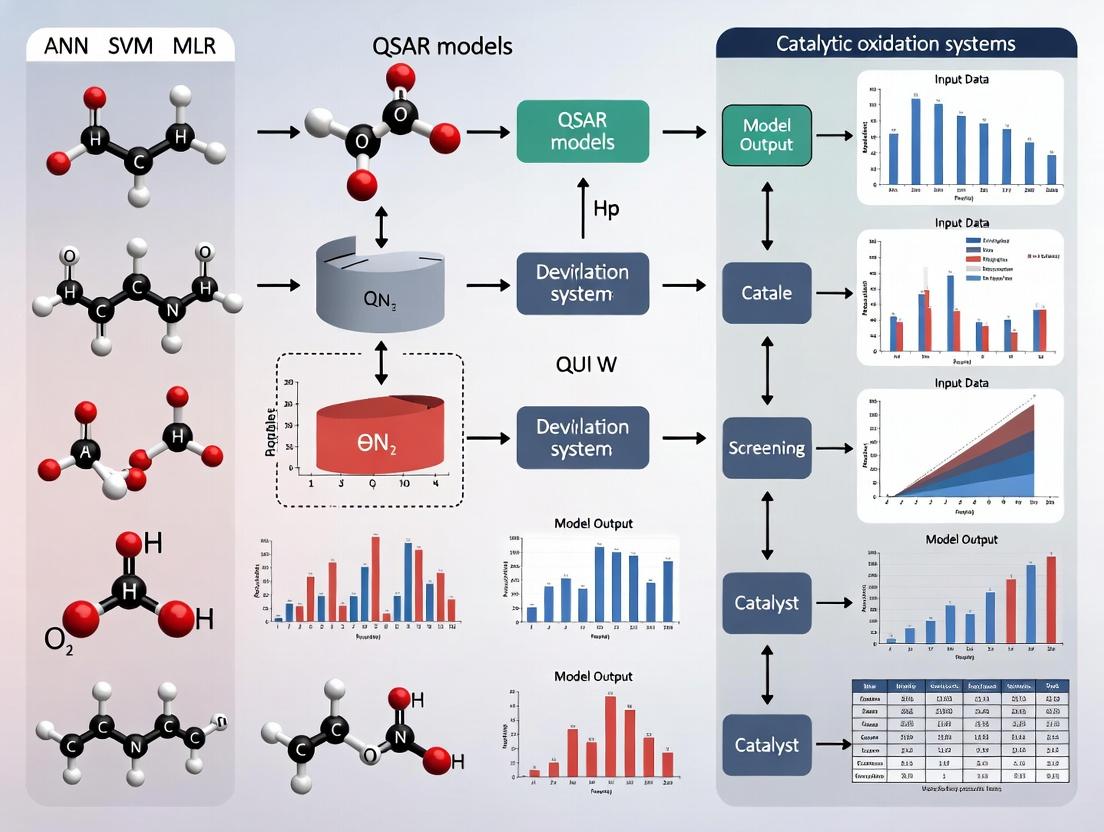

This comprehensive article explores the application of Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR) in building Quantitative Structure-Activity Relationship (QSAR) models to predict the...

Predicting Catalytic Oxidation in Drug Metabolism: A Comparative Guide to ANN, SVM, and MLR QSAR Models for Researchers

Abstract

This comprehensive article explores the application of Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR) in building Quantitative Structure-Activity Relationship (QSAR) models to predict the behavior of catalytic oxidation systems relevant to drug metabolism. Tailored for researchers and drug development professionals, it covers four areas: a foundational understanding of these computational tools, detailed methodological workflows for model development, strategies for troubleshooting and optimizing model performance, and a rigorous framework for validation and comparative analysis. Together, these offer a practical roadmap for integrating advanced QSAR modeling into the prediction of oxidative metabolic pathways, aiding early-stage drug design and toxicity assessment.

Understanding QSAR Models: ANN, SVM, and MLR for Catalytic Oxidation Prediction

Catalytic oxidation systems, primarily cytochrome P450 (CYP) enzymes, are the principal mediators of Phase I drug metabolism. They functionalize xenobiotics, facilitating their elimination but in many cases also generating reactive or toxic intermediates. Understanding the substrate specificity, kinetics, and regioselectivity of these systems is a cornerstone of predictive toxicology and rational drug design, and it feeds directly into quantitative structure-activity relationship (QSAR) models, including those built with machine learning (ML) techniques such as Artificial Neural Networks (ANN) and Support Vector Machines (SVM). The accuracy of ANN, SVM, and MLR QSAR models depends fundamentally on the quality and mechanistic relevance of the in vitro and in vivo metabolic data used to train them, such as the data generated with the protocols outlined below.

Core Catalytic Oxidation Systems: Components and Quantitative Profiles

The following table summarizes the key human catalytic oxidation systems, their major isoforms, and quantitative expression data relevant for in vitro to in vivo extrapolation (IVIVE).

Table 1: Major Human Hepatic Catalytic Oxidation Systems

| Enzyme System | Key Isoforms (Human) | Approx. % of Total Hepatic CYP* | Major Substrate Classes | Typical in vitro System for Study |
|---|---|---|---|---|
| Cytochrome P450 (CYP) | CYP3A4, CYP3A5 | ~30% (CYP3A4) | Macrolides, statins, calcium channel blockers (~50% of marketed drugs overall) | Human liver microsomes (HLM), recombinant CYP enzymes |
| Cytochrome P450 (CYP) | CYP2D6 | ~2-4% | Basic amines, antidepressants, antipsychotics, beta-blockers | HLM (+ chemical inhibitors), rCYP2D6 |
| Cytochrome P450 (CYP) | CYP2C9 | ~10-15% | Acidic drugs (e.g., warfarin, NSAIDs, phenytoin) | HLM, rCYP2C9 |
| Cytochrome P450 (CYP) | CYP2C19 | ~1-5% | Proton pump inhibitors, clopidogrel, diazepam | HLM, rCYP2C19 |
| Cytochrome P450 (CYP) | CYP1A2 | ~10-15% | Planar heterocyclic amines (e.g., caffeine, theophylline) | HLM, rCYP1A2 |
| Flavin-containing Monooxygenase (FMO) | FMO3, FMO5 | N/A (not a CYP) | Soft nucleophiles (S, N, P heteroatoms); e.g., nicotine, cimetidine | HLM (heat pre-treatment selectively inactivates FMO, distinguishing FMO from CYP activity), rFMO |
| Monoamine Oxidase (MAO) | MAO-A, MAO-B | N/A (not a CYP; mitochondrial) | Endogenous amines (neurotransmitters), exogenous amines | Mitochondrial fractions, recombinant MAO |
| Alcohol & Aldehyde Dehydrogenase | ADH1A, ALDH2 | N/A (not a CYP; ADH cytosolic, ALDH2 mitochondrial) | Ethanol, retinol, aldehydes | Cytosolic and mitochondrial fractions, recombinant enzymes |

*Percentages are liver-average estimates and exhibit significant inter-individual variability.

Experimental Protocols for In Vitro Metabolism Studies

Protocol 3.1: Metabolic Stability Assessment in Human Liver Microsomes (HLM)

Objective: To determine the intrinsic clearance (CLint) of a test compound via catalytic oxidation.

Materials (Research Reagent Solutions):

- Test Compound Solution: 1 mM stock in DMSO (≤0.5% final concentration).

- HLM Pool: 20 mg/mL protein stock in storage buffer.

- NADPH Regenerating System: Solution A: 26 mM NADP+, 66 mM Glucose-6-phosphate, 66 mM MgCl2 in water. Solution B: 40 U/mL Glucose-6-phosphate dehydrogenase in water. Mix immediately before use.

- Potassium Phosphate Buffer: 0.1 M, pH 7.4.

- Quenching Solution: Acetonitrile with internal standard (e.g., 100 ng/mL tolbutamide).

- LC-MS/MS System: For analyte quantification.

Procedure:

- Incubation: Pre-warm HLM (0.5 mg/mL final) and test compound (1 µM final) in phosphate buffer at 37°C for 5 min. Initiate reaction by adding NADPH regenerating system (1 mM NADPH final). Include controls without NADPH and without microsomes.

- Time Points: At t = 0, 5, 10, 20, 30, and 60 minutes, remove 50 µL aliquot and quench with 100 µL of ice-cold quenching solution.

- Sample Processing: Vortex, centrifuge (15,000 x g, 10 min, 4°C). Transfer supernatant for LC-MS/MS analysis.

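- Data Analysis: Plot ln(peak area ratio of analyte to internal standard) vs. time. The negative of the slope of the linear portion is the first-order depletion rate constant k (min⁻¹), and t1/2 = ln 2 / k. Calculate CLint = k / [microsomal protein concentration]; with k in min⁻¹ and protein in mg/mL, multiplying by 1000 gives CLint in µL/min/mg protein.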
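The depletion calculation can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy is available, that all time points lie in the log-linear depletion phase, and that the ×1000 factor is used to report CLint in µL/min/mg protein; the function name `intrinsic_clearance` and the synthetic time course are hypothetical.

```python
import numpy as np

def intrinsic_clearance(times_min, area_ratios, protein_mg_per_ml):
    """Hypothetical helper: first-order depletion constant, half-life and CLint
    from a substrate-depletion time course (analyte / internal-standard area ratio)."""
    t = np.asarray(times_min, dtype=float)
    ln_ratio = np.log(np.asarray(area_ratios, dtype=float))  # natural log of area ratio
    slope, _intercept = np.polyfit(t, ln_ratio, 1)            # linear fit of ln(ratio) vs. time
    k = -slope                                                # depletion rate constant, 1/min
    t_half = np.log(2) / k                                    # half-life, min
    # k (1/min) / protein (mg/mL) * 1000 (uL/mL) -> uL/min/mg protein
    clint = k / protein_mg_per_ml * 1000.0
    return k, t_half, clint

# Synthetic example: ideal first-order decay with k = 0.05 1/min, 0.5 mg/mL HLM
times = [0, 5, 10, 20, 30, 60]
ratios = [np.exp(-0.05 * t) for t in times]
k, t_half, clint = intrinsic_clearance(times, ratios, protein_mg_per_ml=0.5)
print(round(k, 4), round(t_half, 1), round(clint, 1))  # 0.05 13.9 100.0
```

With real data, later time points that deviate from log-linearity (e.g., from enzyme inactivation) would normally be excluded before fitting.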

Protocol 3.2: Reaction Phenotyping Using Chemical Inhibitors

Objective: To identify the specific CYP isoform(s) responsible for metabolite formation.

Materials: Includes all from Protocol 3.1, plus isoform-selective chemical inhibitors (e.g., Ketoconazole for CYP3A4, Quinidine for CYP2D6, α-Naphthoflavone for CYP1A2).

Procedure:

- Set up parallel incubations with HLM and test compound (at Km or clinically relevant concentration).

- Pre-incubate HLM with individual selective inhibitors (at recommended concentrations) for 5 min before adding substrate and NADPH.

- Run a positive control incubation with a known probe substrate for each inhibitor.

- Terminate reactions at a time point within the linear range of metabolite formation (e.g., 20 min).

- Measure formation of the specific metabolite of interest.

- Calculate % inhibition = [1 - (formation with inhibitor / formation without inhibitor)] * 100. >80% inhibition suggests major involvement.
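The % inhibition formula is simple enough to encode as a helper. The peak-area ratios below are hypothetical illustrative values, not measured data, and the 80% cut-off is the rule of thumb stated above.

```python
def percent_inhibition(formation_with_inhibitor, formation_without_inhibitor):
    """% inhibition = [1 - (with / without)] * 100, as defined in Protocol 3.2."""
    if formation_without_inhibitor <= 0:
        raise ValueError("control formation rate must be positive")
    return (1.0 - formation_with_inhibitor / formation_without_inhibitor) * 100.0

# Hypothetical metabolite peak-area ratios: control vs. + ketoconazole (CYP3A4 inhibitor)
control, with_keto = 2.40, 0.30
inhib = percent_inhibition(with_keto, control)
print(f"{inhib:.1f}% inhibition; major CYP3A4 involvement: {inhib > 80}")
# 87.5% inhibition; major CYP3A4 involvement: True
```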

Protocol 3.3: Metabolite Identification using High-Resolution Mass Spectrometry

Objective: To structurally characterize oxidative metabolites.

Procedure:

- Scale up incubation from Protocol 3.1 using 10 µM substrate.

- Quench after 60 min, centrifuge, and analyze supernatant using LC coupled to high-resolution MS (e.g., Q-TOF or Orbitrap).

- Acquire data in both positive and negative ionization modes with data-dependent MS/MS.

- Use software to identify potential metabolites by searching for expected mass shifts (e.g., +15.9949 Da for oxygenation (+O); Phase II sulfate conjugates appear at +79.9568 Da (+SO3)) and analyzing fragment ion spectra.
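The mass-shift search can be illustrated with a small matcher. This sketch assumes singly charged ions observed as the same adduct (e.g., [M+H]+) for parent and metabolite, a 5 ppm tolerance, and a short, non-exhaustive list of oxidative shifts; the peak values and the names `OXIDATIVE_SHIFTS` and `match_metabolites` are hypothetical, and dedicated metabolite ID software applies many more transformations plus isotope-pattern and fragment checks.

```python
# Monoisotopic mass shifts (Da) for some common Phase I oxidative biotransformations
OXIDATIVE_SHIFTS = {
    "+O (hydroxylation, N-/S-oxidation, epoxidation)": 15.9949,
    "+2O (dioxidation)": 31.9898,
    "-2H (dehydrogenation)": -2.0157,
    "-CH2 (O-/N-demethylation)": -14.0157,
}

def match_metabolites(parent_mz, observed_mz, tol_ppm=5.0):
    """Return (observed m/z, transformation, error in ppm) for every peak whose
    difference from the parent m/z matches a listed shift within tol_ppm."""
    hits = []
    for mz in observed_mz:
        for name, shift in OXIDATIVE_SHIFTS.items():
            expected = parent_mz + shift
            ppm = (mz - expected) / expected * 1e6
            if abs(ppm) <= tol_ppm:
                hits.append((mz, name, round(ppm, 2)))
    return hits

# Hypothetical [M+H]+ values: parent at 300.1594 and three candidate peaks
peaks = [316.1543, 286.1437, 322.0000]
for hit in match_metabolites(300.1594, peaks):
    print(hit)
```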

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Reagents for In Vitro Oxidation Studies

| Reagent / Material | Function / Purpose | Key Consideration |
|---|---|---|
| Pooled Human Liver Microsomes (HLM) | Gold-standard system containing full complement of native CYP and FMO enzymes. Used for intrinsic clearance and phenotyping. | Donor demographics (age, gender) critical. Use gender-mixed pools for general screening. |
| Recombinant CYP Enzymes (rCYP) | Single isoform expressed in insect or mammalian cells. Used for definitive reaction phenotyping and kinetic studies (Km, Vmax). | Lack of native redox partner ratios; activity per pmol CYP is standardized. |
| NADPH Regenerating System | Provides constant supply of the essential cofactor NADPH for oxidative reactions. | Superior to adding NADPH directly due to cost and stability. System A + B must be fresh. |
| Isoform-Selective Chemical Inhibitors | To pharmacologically inhibit specific CYP activities in HLM incubations for reaction phenotyping. | Must validate selectivity and concentration to avoid off-target effects. Use positive controls. |
| Isoform-Specific Probe Substrates | Compounds metabolized predominantly by a single CYP (e.g., midazolam for CYP3A4, dextromethorphan for CYP2D6). Used as positive controls for inhibitor and antibody experiments. | Validates system functionality. |
| LC-MS/MS System | For sensitive, selective, and quantitative analysis of substrate depletion or metabolite formation. HR-MS enables metabolite ID. | Requires stable isotope-labeled internal standards for optimal quantitation. |

Visualization of Pathways and Workflows

Title: Catalytic Oxidation and Potential Toxicity Pathway

Title: Experimental Data Pipeline for QSAR Modeling

Quantitative Structure-Activity Relationship (QSAR) modeling is a cornerstone of predictive medicinal chemistry, enabling the rational design of novel therapeutic agents. By establishing mathematical relationships between molecular descriptors and biological activity, QSAR models predict the potency, selectivity, and ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties of untested compounds. This overview details application notes and protocols within the broader context of computational drug discovery, linking to advanced modeling techniques like Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR) for complex systems, including catalytic oxidation in drug metabolism.

Application Notes: Key QSAR Methodologies in Practice

Note 1: Comparative Performance of MLR, ANN, and SVM for Kinase Inhibitor Design

A study on Cyclin-Dependent Kinase 2 (CDK2) inhibitors evaluated MLR, ANN, and SVM models built using 2D molecular descriptors.

Table 1: Model Performance Comparison for CDK2 Inhibition Prediction

| Model Type | Descriptors Used | Training Set R² | Test Set R² | RMSE (Test) | Key Advantage |
|---|---|---|---|---|---|
| MLR | Topological, Electronic | 0.85 | 0.78 | 0.45 | Interpretability, clear descriptor contribution |
| ANN (3-layer) | Full Descriptor Set | 0.92 | 0.82 | 0.41 | Captures non-linear relationships |
| SVM (RBF Kernel) | Full Descriptor Set | 0.90 | 0.85 | 0.38 | Robust to overfitting, high generalization |

Interpretation: The SVM model achieved the highest test-set R² (0.85), the lowest test RMSE (0.38), and the smallest drop from training to test R², making it suitable for virtual screening. MLR provides critical insight into which structural features (e.g., hydrophobicity, H-bond acceptor count) most influence activity.

Note 2: QSAR Modeling for Predicting Metabolic Stability via Catalytic Oxidation

Predicting metabolic stability, often mediated by cytochrome P450 (CYP) catalytic oxidation systems, is crucial. QSAR models using 3D pharmacophore descriptors and SVM classification can predict compounds as "high" or "low" clearance.

Table 2: SVM Classifier Performance for CYP3A4-Mediated Metabolic Stability

| Dataset (Number of Compounds) | Sensitivity | Specificity | Accuracy | MCC |
|---|---|---|---|---|
| Training Set (n=180) | 0.88 | 0.91 | 0.89 | 0.79 |
| Blind Test Set (n=45) | 0.82 | 0.85 | 0.84 | 0.67 |

Application: This model is integrated early in lead optimization to prioritize compounds with favorable metabolic profiles.
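The four metrics in Table 2 all derive from the confusion matrix. A minimal sketch, using hypothetical counts (they are not the data behind Table 2), with MCC set to 0 when its denominator vanishes, a common convention.

```python
import math

def classification_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, accuracy and Matthews correlation coefficient
    from confusion-matrix counts (positive class = e.g. 'high clearance')."""
    sensitivity = tp / (tp + fn)                     # true-positive rate
    specificity = tn / (tn + fp)                     # true-negative rate
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return sensitivity, specificity, accuracy, mcc

# Hypothetical counts for a 45-compound blind test set
sens, spec, acc, mcc = classification_metrics(tp=18, fn=4, tn=20, fp=3)
print(f"Sens={sens:.2f} Spec={spec:.2f} Acc={acc:.2f} MCC={mcc:.2f}")
```

MCC is reported alongside accuracy because it stays informative when the high- and low-clearance classes are imbalanced.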

Experimental Protocols

Protocol 1: Development and Validation of an MLR QSAR Model

Objective: To construct a validated MLR model for predicting the pIC50 of a series of acetylcholinesterase inhibitors.

Materials & Reagents:

- Chemical Dataset: 50 compounds with experimentally measured pIC50.

- Software: RDKit (for descriptor calculation), Python/scikit-learn or R (for modeling), OECD QSAR Toolbox.

- Computational Environment: Standard workstation (CPU: Intel i7/equivalent, RAM: 16 GB).

Procedure:

- Data Curation: Standardize chemical structures (e.g., neutralize salts, remove duplicates). Divide dataset randomly into training (70%, n=35) and test sets (30%, n=15).

- Descriptor Calculation: Calculate a pool of 200+ 2D molecular descriptors (e.g., logP, molecular weight, topological indices, partial charges) using RDKit.

- Descriptor Selection & Reduction: a. Remove constant/near-constant descriptors. b. Perform pairwise correlation analysis; retain one from any pair with correlation >0.95. c. Use Genetic Algorithm (GA) or Stepwise Regression on the training set to select 3-5 optimal descriptors.

- Model Building: Perform MLR on the training set using the selected descriptors to derive the linear equation: pIC50 = a × Desc1 + b × Desc2 + c × Desc3 + intercept.

- Internal Validation: Calculate for the training set: R², adjusted R², and leave-one-out cross-validated Q² (Q² > 0.5 is acceptable).

- External Validation: Predict pIC50 for the test set. Calculate predictive R² (R²pred) and RMSE. A model is considered predictive if R²pred > 0.6.

- Domain of Applicability: Define using leverage approach; flag compounds for which predictions are extrapolations.
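Protocol 1 can be sketched end to end with scikit-learn and NumPy. This is an illustration under stated assumptions: synthetic data stand in for the 50 acetylcholinesterase inhibitors, descriptor selection (step c) is taken as already done, R²pred is computed relative to the training-set mean (one common QSAR convention; others use the test-set mean), and the warning leverage h* = 3(p + 1)/n is the usual Williams-plot threshold rather than a value given in the protocol.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import LeaveOneOut, cross_val_predict, train_test_split

rng = np.random.default_rng(1)
# Synthetic stand-in: 50 compounds x 3 selected descriptors, pIC50 with noise
X = rng.normal(size=(50, 3))
y = 5.0 + X @ np.array([0.8, -0.5, 0.3]) + rng.normal(scale=0.3, size=50)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)  # 35 / 15

mlr = LinearRegression().fit(X_tr, y_tr)
terms = " + ".join(f"({c:.2f})*Desc{i + 1}" for i, c in enumerate(mlr.coef_))
print(f"pIC50 = {terms} + ({mlr.intercept_:.2f})")

# Internal validation on the training set: R2, adjusted R2, leave-one-out Q2
n, p = X_tr.shape
r2 = mlr.score(X_tr, y_tr)
r2_adj = 1 - (1 - r2) * (n - 1) / (n - p - 1)
y_loo = cross_val_predict(LinearRegression(), X_tr, y_tr, cv=LeaveOneOut())
q2_loo = 1 - np.sum((y_tr - y_loo) ** 2) / np.sum((y_tr - y_tr.mean()) ** 2)

# External validation: R2pred (relative to the training-set mean) and RMSE
y_hat = mlr.predict(X_te)
r2_pred = 1 - np.sum((y_te - y_hat) ** 2) / np.sum((y_te - y_tr.mean()) ** 2)
rmse = np.sqrt(np.mean((y_te - y_hat) ** 2))

# Applicability domain: leverage h_i = x_i^T (X^T X)^-1 x_i, intercept column included
A_tr = np.column_stack([np.ones(n), X_tr])
xtx_inv = np.linalg.pinv(A_tr.T @ A_tr)
A_te = np.column_stack([np.ones(len(X_te)), X_te])
h_te = np.einsum("ij,jk,ik->i", A_te, xtx_inv, A_te)
h_star = 3 * (p + 1) / n                    # warning leverage
outside_ad = h_te > h_star                  # flag predictions that are extrapolations

print(f"R2={r2:.2f} R2adj={r2_adj:.2f} Q2_LOO={q2_loo:.2f} R2pred={r2_pred:.2f} RMSE={rmse:.2f}")
print("test compounds outside AD:", np.flatnonzero(outside_ad))
```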

Protocol 2: Building an ANN-Based QSAR for Complex Activity Prediction

Objective: To develop a non-linear ANN model to predict the activity of complex enzyme inhibitors.

Procedure:

- Data Preparation: Follow Protocol 1, steps 1-3. Normalize all selected descriptor values to a [0, 1] range.

- Network Architecture Design: Construct a feed-forward neural network with:

- Input Layer: Nodes = number of selected descriptors.

- Hidden Layer(s): Start with one hidden layer (nodes = √(input nodes * output nodes)).

- Output Layer: One node (pIC50).

- Activation: Use ReLU for hidden, linear for output.

- Model Training: Use backpropagation (Adam optimizer) with Mean Squared Error loss. Implement early stopping using a validation set (20% of training data) to prevent overfitting.

- Model Assessment: Validate as per Protocol 1, steps 5-6. Compare performance to a baseline linear model.
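A minimal sketch of Protocol 2 using scikit-learn's `MLPRegressor`, chosen here as an assumption (any framework with ReLU layers, Adam, and early stopping would do). Data are synthetic, and the √(inputs × outputs) rule only supplies a starting size. `MLPRegressor` always uses a linear (identity) output for regression, and its early stopping monitors validation R² rather than validation loss.

```python
import math
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(3)
X = rng.uniform(-2, 2, size=(300, 4))                            # synthetic descriptors
y = X[:, 0] ** 2 + X[:, 1] + rng.normal(scale=0.1, size=300)     # non-linear in descriptor 0
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)

scaler = MinMaxScaler().fit(X_tr)              # [0, 1] range, fitted on training data only
X_tr_s, X_te_s = scaler.transform(X_tr), scaler.transform(X_te)

n_in, n_out = X.shape[1], 1
n_hidden = max(1, round(math.sqrt(n_in * n_out)))   # sqrt(inputs * outputs) starting size

ann = MLPRegressor(
    hidden_layer_sizes=(n_hidden,),
    activation="relu",          # ReLU hidden units; output layer is identity (linear)
    solver="adam",              # Adam optimizer, squared-error loss
    early_stopping=True,        # hold out part of the training data for validation
    validation_fraction=0.2,
    max_iter=5000,
    random_state=0,
).fit(X_tr_s, y_tr)

baseline = LinearRegression().fit(X_tr_s, y_tr)     # baseline linear model for comparison
print(f"hidden nodes={n_hidden}  ANN test R2={ann.score(X_te_s, y_te):.2f}  "
      f"MLR test R2={baseline.score(X_te_s, y_te):.2f}")
```

If the ANN underfits at this size, the hidden layer is typically widened and re-validated; the heuristic is not a guarantee of adequate capacity.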

Protocol 3: Virtual Screening Workflow Using a Pre-Trained SVM QSAR Model

Objective: To screen an in-house chemical library for potential hits against a target.

Procedure:

- Model Loading: Load a previously validated SVM model (e.g., for kinase inhibition).

- Library Preparation: Prepare and standardize the screening library (10,000 compounds). Calculate the exact molecular descriptors required by the model.

- Prediction: Run the SVM model on the descriptor matrix to generate activity scores/predictions.

- Post-Processing: Rank compounds by predicted activity. Apply additional filters (e.g., drug-likeness rules, PAINS removal). Select the top 100-200 compounds for in vitro testing.
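The prediction, ranking, and filtering steps might look like this. A sketch only: an SVR trained on synthetic data stands in for the previously validated model (in practice it would be deserialized, for example with `joblib.load`), the library columns are hypothetical, and only the MW ≤ 500 and logP ≤ 5 criteria of Lipinski's rule of five are applied. PAINS removal would need a substructure filter (e.g., RDKit's FilterCatalog), which is omitted.

```python
import numpy as np
import pandas as pd
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(4)
# Stand-in for a previously validated SVM QSAR model
X_fit = rng.normal(size=(200, 3))
y_fit = X_fit @ np.array([1.0, 0.5, -0.5])
model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10)).fit(X_fit, y_fit)

# Hypothetical 10,000-compound library: model descriptors plus properties used for filtering
library = pd.DataFrame(rng.normal(size=(10_000, 3)), columns=["d1", "d2", "d3"])
library["MW"] = rng.normal(400, 80, size=10_000)
library["logP"] = rng.normal(3, 1.5, size=10_000)

# Predict with exactly the descriptors (and order) the model was trained on
library["score"] = model.predict(library[["d1", "d2", "d3"]].to_numpy())

passes_ro5 = (library["MW"] <= 500) & (library["logP"] <= 5)   # partial rule-of-five filter
top = (library[passes_ro5]
       .sort_values("score", ascending=False)
       .head(150))                                              # within the 100-200 range
print(len(top), top["score"].is_monotonic_decreasing)
```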

Visualizations

Title: General QSAR Modeling and Validation Workflow

Title: QSAR's Role in a Broader Computational Thesis

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for QSAR Modeling and Validation

| Item | Function/Description | Example/Tool |
|---|---|---|
| Chemical Structure Standardization Tool | Ensures consistency in molecular representation for descriptor calculation. | RDKit, OpenBabel, ChemAxon Standardizer |
| Molecular Descriptor Calculation Suite | Generates numerical representations of molecular structure and properties. | RDKit, PaDEL-Descriptor, Dragon |
| Modeling & Machine Learning Environment | Platform for building, training, and validating MLR, ANN, and SVM models. | Python (scikit-learn, TensorFlow/Keras), R (caret, e1071) |
| Validation Software Suite | Assists in rigorous statistical validation and applicability domain definition. | OECD QSAR Toolbox, QSARINS |
| High-Performance Computing (HPC) Resource | Runs resource-intensive tasks like GA descriptor selection or deep learning. | Local cluster or cloud services (AWS, Google Cloud) |
| In Vitro Assay Kit (for Model Validation) | Provides experimental biological data to validate computational predictions. | Target-specific enzymatic or cell-based assay (e.g., Kinase-Glo luminescent kinase assay) |

Core Principles of Artificial Neural Networks (ANN) for Non-Linear Pattern Recognition

This document provides Application Notes and Protocols detailing the core principles of Artificial Neural Networks (ANNs) as a critical component within a broader computational chemistry thesis. The thesis focuses on developing robust Quantitative Structure-Activity Relationship (QSAR) models—comparing ANN, Support Vector Machine (SVM), and Multiple Linear Regression (MLR) methods—for predicting the efficacy of novel compounds in catalytic oxidation systems relevant to drug metabolite synthesis and environmental remediation.

Core ANN Principles for Non-Linear Pattern Recognition

ANNs are computational models inspired by biological neural networks. Their power in QSAR derives from an ability to model complex, non-linear relationships between molecular descriptors (input) and biological/chemical activity (output) without a priori specification of the relationship's form.

Key Principles:

- Architecture: Composed of interconnected layers (input, hidden, output) of processing units (neurons).

- Non-Linear Activation: Neurons apply a non-linear activation function (e.g., ReLU, Sigmoid) to the weighted sum of their inputs, enabling the network to learn non-linear patterns.

- Learning via Backpropagation: The network learns by iteratively adjusting connection weights to minimize the error between predicted and actual outputs, using optimization algorithms like Adam or SGD.

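- Universal Approximation Theorem: A feedforward network with a single hidden layer and a suitable non-linear activation function can approximate any continuous function on a bounded (compact) input domain to arbitrary accuracy, given enough (but finitely many) hidden neurons. The theorem guarantees that such weights exist, not that training will find them or that the required network is small.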
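To make the activation principle concrete, here is a forward pass through a one-hidden-layer network in NumPy. The weights are hand-set (hypothetical) so that two ReLU units sum to |x|, a function no linear model can represent; backpropagation would instead learn such weights from data.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def forward(x, W1, b1, W2, b2):
    """One hidden layer: h = ReLU(W1 x + b1); linear output y = W2 h + b2."""
    h = relu(W1 @ x + b1)      # weighted sum of inputs, then non-linear activation
    return W2 @ h + b2         # linear output neuron

# Hand-set weights: ReLU(x) + ReLU(-x) = |x|
W1 = np.array([[1.0], [-1.0]])
b1 = np.zeros(2)
W2 = np.array([[1.0, 1.0]])
b2 = np.zeros(1)
for x in (-2.0, 0.5, 3.0):
    print(x, forward(np.array([x]), W1, b1, W2, b2)[0])   # output equals |x|
```

Adding more ReLU units in the same way builds piecewise-linear functions with more breakpoints, which is the intuition behind the approximation theorem.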

Application Notes: ANN in QSAR for Catalytic Oxidation Systems

In the thesis context, ANNs are employed to correlate molecular descriptors of organic substrates or catalyst ligands with key performance metrics in catalytic oxidation reactions (e.g., conversion rate, selectivity for a specific metabolite, turnover number).

Advantages over MLR/SVM in this context:

- Captures Complex Interactions: Can model higher-order and interactive effects between descriptors that MLR, a linear model, cannot.

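- Scalability: Scales better than kernel SVMs to very large training sets with high-dimensional descriptor sets, because kernel SVM training cost grows roughly quadratically to cubically with the number of compounds, whereas ANNs are trained on mini-batches.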

- Output Flexibility: Can handle multiple continuous (e.g., yield, TOF) and categorical outputs (e.g., major product class) simultaneously.

Challenges & Mitigations:

- Overfitting: Addressed using dropout layers, L2 regularization, and rigorous validation (k-fold cross-validation).

- Interpretability: Addressed by using sensitivity analysis (e.g., Partial Derivatives) or employing model-agnostic tools (SHAP, LIME) post-hoc to identify critical molecular features.
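As a sketch of post-hoc interpretation, scikit-learn's `permutation_importance` (a model-agnostic alternative to SHAP or LIME, swapped in here because it ships with scikit-learn) measures how much test-set R² drops when each descriptor is shuffled. Data are synthetic, constructed so that descriptor 0 dominates and descriptor 2 is pure noise.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(5)
X = rng.normal(size=(400, 3))                     # descriptor 2 carries no signal
y = 2.0 * X[:, 0] + 0.5 * X[:, 1] ** 2 + rng.normal(scale=0.1, size=400)
ann = MLPRegressor(hidden_layer_sizes=(16,), max_iter=2000, random_state=0).fit(X[:300], y[:300])

# Shuffle each descriptor in the held-out data and record the drop in R2
result = permutation_importance(ann, X[300:], y[300:], n_repeats=20, random_state=0)
for i in result.importances_mean.argsort()[::-1]:
    print(f"descriptor {i}: {result.importances_mean[i]:.3f} +/- {result.importances_std[i]:.3f}")
```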

Experimental Protocols for ANN-QSAR Model Development

Protocol 4.1: Data Curation and Descriptor Calculation

Objective: Prepare a standardized dataset for ANN training.

Procedure:

- Compound Library: Compile a set of 150-300 molecules with experimentally determined activity values for the target catalytic oxidation.

- Descriptor Generation: Use cheminformatics software (e.g., RDKit, PaDEL-Descriptor) to calculate 500+ 1D, 2D, and 3D molecular descriptors for each compound.

- Data Preprocessing:

- Remove Constants: Eliminate descriptors with zero variance.

- Handle Missing Values: Remove descriptors (or compounds) with >5% missing data; impute the remaining missing values (e.g., with the training-set mean or median).

- Normalization: Scale all descriptor values to a range of [0, 1] or standardize to zero mean and unit variance.

- Feature Selection: Apply a filter method (e.g., correlation-based) to reduce dimensionality to the top 50-100 most relevant descriptors.

- Dataset Splitting: Partition data into Training (70%), Validation (15%), and Test (15%) sets. Use stratified splitting if activity is categorical.
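The preprocessing and splitting steps might be sketched as follows, assuming pandas and scikit-learn. The descriptor table is synthetic, imputation of the remaining gaps and the feature-selection step are omitted, and for a categorical activity you would pass `stratify=` to `train_test_split`. The scaler is fitted on the training set only, so no information from the validation or test sets leaks into preprocessing.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(6)
X = pd.DataFrame(rng.normal(size=(200, 6)), columns=[f"D{i}" for i in range(6)])
X["D5"] = 1.0                                                  # constant descriptor
X.loc[rng.choice(200, 20, replace=False), "D4"] = np.nan       # 10% missing values
y = rng.normal(size=200)

# Drop descriptors with >5% missing values, then zero-variance descriptors
X = X.loc[:, X.isna().mean() <= 0.05]
X = X.loc[:, VarianceThreshold(0.0).fit(X).get_support()]

# 70/15/15 split: hold out 30%, then halve it into validation and test sets
X_tr, X_tmp, y_tr, y_tmp = train_test_split(X, y, test_size=0.30, random_state=0)
X_val, X_te, y_val, y_te = train_test_split(X_tmp, y_tmp, test_size=0.50, random_state=0)

# Scale to [0, 1] using training-set statistics only
scaler = MinMaxScaler().fit(X_tr)
X_tr_s, X_val_s, X_te_s = (scaler.transform(d) for d in (X_tr, X_val, X_te))
print(list(X.columns), len(X_tr), len(X_val), len(X_te))
```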

Protocol 4.2: ANN Model Construction & Training

Objective: Build and train an ANN model to predict catalytic activity.

Procedure:

- Architecture Design (Example):

- Input Layer: Neurons = number of selected descriptors (n).

- Hidden Layer 1: Dense layer with 2n neurons, ReLU activation, with a Dropout rate of 0.2.

- Hidden Layer 2: Dense layer with n neurons, ReLU activation.

- Output Layer: Dense layer with 1 neuron (linear activation for regression, sigmoid for binary classification).

- Compilation: Use Adam optimizer (learning rate=0.001). Loss function: Mean Squared Error (regression) or Binary Crossentropy (classification). Include accuracy/R² as a metric.

- Training: Train for up to 500 epochs with a batch size of 16. Use the Validation set to monitor for overfitting and implement Early Stopping (patience=30) to halt training when validation loss plateaus.

- Evaluation: Apply the final model to the held-out Test set to report unbiased performance metrics.
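For readers without TensorFlow, a scikit-learn approximation of the Protocol 4.2 architecture is sketched below. It is not a one-to-one translation: `MLPRegressor` has no dropout layer, so an L2 penalty (`alpha`) stands in for Dropout(0.2), and early stopping uses an internal validation split scored by R² rather than validation loss. In Keras the same design would use `Dense` and `Dropout` layers with an `EarlyStopping(patience=30)` callback. Data are synthetic.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(7)
n = 10                                           # number of selected descriptors
X = rng.uniform(size=(250, n))                   # already scaled to [0, 1]
y = 3 * X[:, 0] * X[:, 1] + np.sin(3 * X[:, 2]) + rng.normal(scale=0.05, size=250)
X_train, y_train, X_test, y_test = X[:200], y[:200], X[200:], y[200:]

ann = MLPRegressor(
    hidden_layer_sizes=(2 * n, n),  # Hidden Layer 1: 2n ReLU units; Hidden Layer 2: n ReLU units
    activation="relu",
    solver="adam",
    learning_rate_init=0.001,
    alpha=1e-4,                      # L2 penalty, a stand-in for Dropout (not available here)
    batch_size=16,
    max_iter=500,                    # upper bound on epochs
    early_stopping=True,             # monitors an internal validation split
    validation_fraction=0.15,
    n_iter_no_change=30,             # patience
    random_state=0,
)
ann.fit(X_train, y_train)            # output layer is linear (identity) for regression
print(ann.n_iter_, ann.best_validation_score_, ann.score(X_test, y_test))
```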

Table 1: Comparison of Model Performance on a Test Set for Catalytic Turnover Frequency (TOF) Prediction

| Model Type | Architecture/Parameters | R² (Test) | Mean Absolute Error (Test, TOF units) | Key Advantage | Key Limitation |
|---|---|---|---|---|---|
| ANN | 2 Hidden Layers, ReLU, Dropout=0.2 | 0.89 | 12.5 | Best at capturing non-linear descriptor interactions | Prone to overfitting; "Black-box" nature |
| SVM (RBF Kernel) | C=10, gamma='scale' | 0.85 | 15.8 | Effective in high-dimensional spaces; Good generalization | Memory intensive; Kernel choice is critical |
| Multiple Linear Regression (MLR) | - | 0.72 | 24.3 | Highly interpretable; Simple & fast | Cannot model non-linear relationships |

Table 2: Impact of Feature Selection on ANN Model Performance

| Feature Selection Method | Number of Descriptors | ANN Training R² | ANN Validation R² | Training Time (s) |
|---|---|---|---|---|
| None (All after preprocessing) | 520 | 0.999 | 0.71 | 145 |
| Correlation with target (>0.1) | 185 | 0.95 | 0.82 | 78 |
| Recursive Feature Elimination (RFE) | 75 | 0.93 | 0.88 | 45 |
| Genetic Algorithm (GA) | 65 | 0.96 | 0.87 | 62 |

Visualizations

ANN QSAR Model Development Workflow

ANN Architecture for Non Linear QSAR

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for ANN-QSAR in Catalytic Oxidation

| Item/Reagent | Function in the Research Context | Example/Notes |
|---|---|---|
| Curated Chemical Dataset | Foundation for model training; requires accurate biological/catalytic activity data. | Public (e.g., ChEMBL) or proprietary libraries of substrates for oxidation. |
| Cheminformatics Software (RDKit, PaDEL) | Calculates numerical molecular descriptors from chemical structures. | RDKit allows calculation of >200 descriptors; essential for feature generation. |
| Feature Selection Algorithm | Reduces descriptor dimensionality to prevent overfitting and improve model interpretability. | Scikit-learn's SelectKBest, RFE, or custom genetic algorithms. |
| Deep Learning Framework (TensorFlow/Keras, PyTorch) | Provides libraries to efficiently construct, train, and validate ANN architectures. | Keras API on TensorFlow backend offers a balance of simplicity and control. |
| Model Interpretation Library (SHAP, LIME) | Post-hoc analysis to identify which molecular descriptors most influence the ANN's predictions. | SHAP (SHapley Additive exPlanations) values provide consistent attribution. |
| High-Performance Computing (HPC) Resources | Accelerates model training, hyperparameter tuning, and cross-validation cycles. | GPUs are critical for training large ANNs or processing massive descriptor sets. |

Core Principles of Support Vector Machines (SVM) for Classification and Regression

Support Vector Machines (SVMs) are one of the three modeling methods, alongside Artificial Neural Networks (ANN) and Multiple Linear Regression (MLR), compared in this Quantitative Structure-Activity Relationship (QSAR) framework. This integrated approach is critical for elucidating catalytic oxidation systems, particularly in drug development, where predicting molecular activity and reactivity and optimizing catalyst design are paramount. SVMs provide a robust, non-linear alternative to MLR and, for certain QSAR applications, a high-dimensional pattern recognition tool that can be more interpretable than ANNs (for example, when a linear kernel is used).

Foundational Principles

Maximal Margin Classifier (Linear SVM)

The core principle for classification is identifying the optimal hyperplane in an n-dimensional space that separates data points of different classes with the maximum margin. The margin is the distance between the hyperplane and the nearest data points from each class, called support vectors.

- Objective Function: Minimize \( \frac{1}{2} \|w\|^2 \) subject to \( y_i (w \cdot x_i + b) \geq 1 \) for all \( i \), where \( w \) is the weight vector, \( b \) is the bias, and \( y_i \) is the class label (±1).

- Decision Function: \( f(x) = \text{sign}(w \cdot x + b) \).

The Kernel Trick for Non-Linear Separation

For non-linearly separable data, SVMs map input vectors \( x \) into a higher-dimensional feature space using a kernel function \( K(x_i, x_j) \), where a linear separation becomes possible. This avoids explicit computation of coordinates in the high-dimensional space.

Common Kernel Functions:

- Linear: \( K(x_i, x_j) = x_i^T x_j \)

- Polynomial: \( K(x_i, x_j) = (\gamma x_i^T x_j + r)^d \)

- Radial Basis Function (RBF/Gaussian): \( K(x_i, x_j) = \exp(-\gamma \|x_i - x_j\|^2) \)
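The three kernel formulas translate directly into NumPy. The sketch below uses arbitrary parameter values (γ = 0.5, r = 1, d = 3) and cross-checks each function against scikit-learn's `linear_kernel`, `polynomial_kernel`, and `rbf_kernel`, which implement the same expressions.

```python
import numpy as np
from sklearn.metrics.pairwise import linear_kernel, polynomial_kernel, rbf_kernel

def k_linear(xi, xj):
    return xi @ xj                                   # x_i^T x_j

def k_poly(xi, xj, gamma=0.5, r=1.0, d=3):
    return (gamma * (xi @ xj) + r) ** d              # (gamma x_i^T x_j + r)^d

def k_rbf(xi, xj, gamma=0.5):
    return np.exp(-gamma * np.sum((xi - xj) ** 2))   # exp(-gamma ||x_i - x_j||^2)

xi = np.array([0.2, -1.0, 3.0])
xj = np.array([1.5, 0.4, -0.7])

# Cross-check against scikit-learn's implementations of the same formulas
assert np.isclose(k_linear(xi, xj), linear_kernel([xi], [xj])[0, 0])
assert np.isclose(k_poly(xi, xj), polynomial_kernel([xi], [xj], degree=3, gamma=0.5, coef0=1.0)[0, 0])
assert np.isclose(k_rbf(xi, xj), rbf_kernel([xi], [xj], gamma=0.5)[0, 0])
print(k_linear(xi, xj), k_poly(xi, xj), k_rbf(xi, xj))
```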

Support Vector Regression (SVR)

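SVR applies the margin principle to regression. The goal is to find a function \( f(x) = w \cdot x + b \) that deviates from the actual target values \( y_i \) by at most \( \epsilon \) (the insensitive tube) while remaining as flat as possible. Points lying on or outside the boundary of the \( \epsilon \)-tube are the support vectors.

- Objective: Minimize \( \frac{1}{2} \|w\|^2 + C \sum_{i=1}^{n} (\xi_i + \xi_i^*) \), subject to \( y_i - (w \cdot x_i + b) \leq \epsilon + \xi_i \), \( (w \cdot x_i + b) - y_i \leq \epsilon + \xi_i^* \), and \( \xi_i, \xi_i^* \geq 0 \), where the slack variables \( \xi_i, \xi_i^* \) measure how far a point lies above or below the tube.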
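The ε-insensitive loss behind SVR can be written in a few lines. At the optimum, each slack variable equals this loss for its point, so the sketch also evaluates the primal objective for a given weight vector and set of predictions; the values are toy numbers and the function names are illustrative.

```python
import numpy as np

def eps_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """max(0, |y - f(x)| - epsilon): zero inside the epsilon-tube, linear outside."""
    return np.maximum(0.0, np.abs(np.asarray(y_true) - np.asarray(y_pred)) - epsilon)

def svr_primal_objective(w, y_true, y_pred, C=1.0, epsilon=0.1):
    """(1/2)||w||^2 + C * sum of slacks, with slacks set to the epsilon-insensitive loss."""
    return 0.5 * np.dot(w, w) + C * eps_insensitive_loss(y_true, y_pred, epsilon).sum()

y_true = np.array([1.00, 2.00, 3.00])
y_pred = np.array([1.05, 2.30, 2.50])     # first point lies inside the tube
print(eps_insensitive_loss(y_true, y_pred, epsilon=0.1))
print(svr_primal_objective(np.array([1.0, 2.0]), y_true, y_pred, C=1.0, epsilon=0.1))
```

A larger ε widens the tube, so fewer points incur loss and fewer become support vectors; a larger C penalizes points outside the tube more heavily relative to flatness.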

Table 1: Comparison of SVM Kernels in QSAR Modeling for Catalytic Oxidation Ligands

| Kernel Type | Key Parameter(s) | Typical Use Case in QSAR/Catalysis | Advantage | Disadvantage |
|---|---|---|---|---|
| Linear | Regularization (C) | High-dimensional data (e.g., molecular fingerprints); Linear relationships. | Less prone to overfitting; Fast. | Cannot capture complex non-linear structure-property relationships. |
| RBF | Regularization (C), Gamma (γ) | Complex, non-linear relationships (e.g., predicting catalytic turnover number). | Highly flexible, powerful for non-linear patterns. | Sensitive to parameter choice; Risk of overfitting. |
| Polynomial | Degree (d), Gamma (γ), Coef0 (r) | Moderate non-linearity; When feature interactions are theoretically known. | Can model feature interactions. | Numerically unstable at high degrees; More parameters to tune. |

Table 2: Typical Hyperparameter Ranges for SVM/SVR in Molecular Modeling

| Hyperparameter | Description | Common Search Range (Classification & Regression) |
|---|---|---|
| C (Regularization) | Controls trade-off between maximizing margin and minimizing classification error. | \( 10^{-3} \) to \( 10^{3} \) (log scale) |
| Gamma (γ) for RBF | Defines influence radius of a single training point (low = far, high = close). | \( 10^{-5} \) to \( 10^{2} \) (log scale) |
| Epsilon (ε) for SVR | Width of the insensitive loss tube. | 0.01, 0.1, 0.5, 1.0 |
| Degree (d) for Polynomial | Degree of the polynomial kernel. | 2, 3, 4, 5 |

Application Protocols in QSAR/Catalytic Research

Protocol 1: Developing an SVM-Based QSAR Model for Catalyst Activity Prediction

Aim: To predict the turnover frequency (TOF) of a series of oxidation catalysts using molecular descriptors.

Materials & Software: Python/R, scikit-learn/libsvm, molecular descriptor calculation software (e.g., RDKit, PaDEL), dataset of catalyst structures and associated TOF values.

Procedure:

- Data Curation: Compile a homogeneous set of 50-100 catalyst complexes with experimentally determined TOF for a specific oxidation reaction (e.g., alkene epoxidation).

- Descriptor Calculation: Compute 2D/3D molecular descriptors (e.g., topological, electronic, steric) for each catalyst structure. Pre-process: Remove zero-variance descriptors, scale features (StandardScaler).

- Data Splitting: Split data into training (70%) and independent test (30%) sets using stratified sampling based on activity range.

- Model Training (SVR-RBF):

a. On the training set, perform a grid search with 5-fold cross-validation.

b. Search over: C = [0.1, 1, 10, 100], gamma = [0.001, 0.01, 0.1, 1], epsilon = [0.01, 0.1, 0.5].

c. Use Mean Squared Error (MSE) as the cross-validation scoring metric.

d. Refit the model with the optimal parameters on the entire training set.

- Model Validation: Predict TOF for the held-out test set. Calculate performance metrics: R², Adjusted R², and Mean Absolute Error (MAE).

- Interpretation: Use permutation feature importance or coefficients from a linear SVM to identify descriptors most critical for catalytic activity.
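Protocol 1 maps closely onto scikit-learn's `GridSearchCV`. This sketch assumes scikit-learn and uses synthetic descriptors in place of real catalyst data; stratification is done on activity quartiles (an assumed way to implement "stratified by activity range"), and placing `StandardScaler` inside the `Pipeline` ensures scaling is re-fitted within each cross-validation fold. Adjusted R² is computed on the test set, as the protocol lists it.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(8)
X = rng.normal(size=(80, 5))                       # synthetic: 80 catalysts x 5 descriptors
y = np.exp(0.5 * X[:, 0]) + X[:, 1] * X[:, 2] + rng.normal(scale=0.1, size=80)

# Stratify on activity quartiles so the test set spans the whole TOF range
bins = np.digitize(y, np.quantile(y, [0.25, 0.5, 0.75]))
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=bins, random_state=0)

pipe = Pipeline([("scale", StandardScaler()), ("svr", SVR(kernel="rbf"))])
grid = {"svr__C": [0.1, 1, 10, 100],
        "svr__gamma": [0.001, 0.01, 0.1, 1],
        "svr__epsilon": [0.01, 0.1, 0.5]}
search = GridSearchCV(pipe, grid, cv=5, scoring="neg_mean_squared_error", refit=True)
search.fit(X_tr, y_tr)       # refit=True retrains the best setting on the full training set

y_hat = search.predict(X_te)
n, p = X_te.shape
r2 = r2_score(y_te, y_hat)
r2_adj = 1 - (1 - r2) * (n - 1) / (n - p - 1)
print(search.best_params_, f"R2={r2:.2f} adjR2={r2_adj:.2f} MAE={mean_absolute_error(y_te, y_hat):.2f}")

# Interpretation: rank descriptors by permutation importance on the test set
imp = permutation_importance(search.best_estimator_, X_te, y_te, n_repeats=10, random_state=0)
print("descriptor ranking:", imp.importances_mean.argsort()[::-1])
```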

Protocol 2: SVM Classification of Bioactive vs. Inactive Oxidation Products

Aim: To classify products from catalytic oxidation libraries as having potential drug activity (e.g., antimicrobial) or being inactive.

Procedure:

- Data Labeling: From high-throughput screening data, label compounds as "Active" (1) or "Inactive" (0) based on a defined activity threshold (e.g., IC50 < 10 µM).

- Feature Generation: Use extended-connectivity fingerprints (ECFP4) to represent molecular structures.

- Addressing Imbalance: If classes are imbalanced (e.g., few actives), apply Synthetic Minority Over-sampling Technique (SMOTE) on the training set only or use class_weight='balanced' in SVM.

- Model Training (SVM-RBF):

a. Perform a randomized search with 5-fold stratified cross-validation on the training set.

b. Optimize for balanced accuracy or F1-score.

c. Search over: C = log-uniform(1e-3, 1e3), gamma = log-uniform(1e-5, 1e1).

- Evaluation: Test set evaluation using confusion matrix, ROC-AUC, precision, and recall. This step is critical for early-stage drug development triage.
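A sketch of Protocol 2 with `RandomizedSearchCV` and SciPy's `loguniform` distributions. Random bit vectors stand in for ECFP4 fingerprints (real ones would come from RDKit), the activity label is synthetic and imbalanced (roughly 14% actives), and `class_weight='balanced'` is used instead of SMOTE. The number of sampled settings (`n_iter=20`) is an arbitrary choice.

```python
import numpy as np
from scipy.stats import loguniform
from sklearn.metrics import classification_report, roc_auc_score
from sklearn.model_selection import RandomizedSearchCV, StratifiedKFold, train_test_split
from sklearn.svm import SVC

rng = np.random.default_rng(9)
X = rng.integers(0, 2, size=(400, 1024))            # stand-in for 1024-bit ECFP4 fingerprints
y = (X[:, :8].sum(axis=1) >= 6).astype(int)          # synthetic, imbalanced "Active" (1) label
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, stratify=y, random_state=0)

search = RandomizedSearchCV(
    SVC(kernel="rbf", class_weight="balanced"),       # reweights classes instead of SMOTE
    {"C": loguniform(1e-3, 1e3), "gamma": loguniform(1e-5, 1e1)},
    n_iter=20,
    scoring="balanced_accuracy",
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    random_state=0,
)
search.fit(X_tr, y_tr)

scores = search.decision_function(X_te)               # continuous scores for ROC-AUC
print(search.best_params_)
print("ROC-AUC:", round(roc_auc_score(y_te, scores), 3))
print(classification_report(y_te, search.predict(X_te),
                            target_names=["Inactive", "Active"], zero_division=0))
```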

Visualization of Key Concepts

SVM QSAR Model Development Workflow

The Kernel Trick for Non-Linear SVM

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 3: Essential Toolkit for SVM in Molecular & Catalytic Research

| Item | Function/Description | Example/Note |
|---|---|---|
| Molecular Descriptor Software | Generates quantitative features from chemical structures for use as SVM input. | RDKit, PaDEL-Descriptor, Dragon. Critical for QSAR feature engineering. |
| Fingerprint Generators | Creates binary bit-vectors representing molecular substructures. | ECFP (Circular Fingerprints), MACCS Keys. Useful for classification tasks. |
| Hyperparameter Optimization Libs | Automates the search for optimal SVM (C, γ) parameters. | scikit-learn GridSearchCV, RandomizedSearchCV, Optuna. |
| Model Validation Suites | Provides robust metrics and methods for evaluating predictive performance. | scikit-learn metrics; Y-Randomization (for QSAR validation). |
| High-Performance Computing (HPC) | Enables training on large datasets or intensive kernel computations. | Cloud computing (AWS, GCP) or local clusters for large virtual screens. |
| Chemical Databases | Source of structured biological activity or catalytic performance data. | ChEMBL, PubChem, CSD (Cambridge Structural Database). |
| Standardized Benchmark Datasets | Allow for fair comparison of SVM vs. ANN/MLR performance. | MoleculeNet, QSAR Benchmark Datasets. |

Core Principles of Multiple Linear Regression (MLR) for Interpretable Linear Modeling

Multiple Linear Regression (MLR) is a foundational statistical method for modeling the relationship between a dependent variable and two or more independent variables. Within the broader thesis on Comparative QSAR Modeling for Catalytic Oxidation Systems (involving ANN, SVM, and MLR), MLR serves as the primary interpretable, white-box model. Its transparency in providing explicit coefficients for each molecular descriptor is critical for understanding structure-activity relationships, guiding the rational design of catalysts or drug candidates in oxidation-driven processes.

Core Theoretical Principles

Model Equation: The MLR model is expressed as: $$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \epsilon$$ where $Y$ is the observed activity/property, $\beta_0$ is the intercept, $\beta_i$ are the partial regression coefficients, $X_i$ are the independent variables (e.g., molecular descriptors), and $\epsilon$ is the random error.

Key Assumptions for Valid MLR:

- Linearity: The relationship between predictors and the response is linear.

- Independence: Observations are independent of each other.

- Homoscedasticity: Constant variance of errors.

- Normality: Errors are normally distributed.

- No Perfect Multicollinearity: Predictor variables are not perfectly correlated.

Model Validation Metrics:

- Coefficient of Determination (R²): Proportion of variance explained.

- Adjusted R²: Adjusts R² for the number of predictors.

- Standard Error of Estimate (s): Typical deviation of the observed values from the fitted values, computed as $s = \sqrt{\mathrm{RSS}/(n - p - 1)}$ for $n$ compounds and $p$ descriptors.

- F-statistic (p-value): Tests the overall significance of the model.

- t-statistic (p-value) for coefficients: Tests the significance of individual predictors.

- Variance Inflation Factor (VIF): Diagnoses multicollinearity (VIF > 10 indicates severe issues).
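The metrics above can all be obtained from a single OLS fit. The sketch below uses only NumPy and assumes a descriptor matrix `X` (compounds × descriptors) and an activity vector `y`; the function name `mlr_diagnostics` is hypothetical. The p-values for the F- and t-statistics need the F and t distributions (e.g., from `scipy.stats`) and are omitted here; statsmodels' `OLS` results and its `variance_inflation_factor` report the same quantities.

```python
import numpy as np

def mlr_diagnostics(X, y):
    """Fit Y = b0 + b1*X1 + ... + bn*Xn by OLS and return basic fit statistics."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    A = np.column_stack([np.ones(n), X])          # design matrix with intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)  # OLS coefficients, intercept first
    resid = y - A @ beta
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)
    s = np.sqrt(ss_res / (n - p - 1))             # standard error of estimate
    f_stat = (r2 / p) / ((1.0 - r2) / (n - p - 1))
    # VIF_j = 1 / (1 - R2_j), with R2_j from regressing descriptor j on all the others
    vif = []
    for j in range(p):
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, X[:, j], rcond=None)
        r = X[:, j] - others @ coef
        r2_j = 1.0 - (r @ r) / ((X[:, j] - X[:, j].mean()) ** 2).sum()
        vif.append(1.0 / (1.0 - r2_j))
    return {"beta": beta, "r2": r2, "adj_r2": adj_r2, "s": s,
            "F": f_stat, "vif": np.array(vif)}
```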

MLR QSAR Modeling Protocol

This protocol details the construction and validation of an MLR-based QSAR model for predicting catalytic oxidation activity.

Protocol 3.1: Data Preparation and Descriptor Calculation

Objective: Prepare a consistent dataset of compounds with known activity and calculated molecular descriptors.

- Compound Set: Curate a congeneric series of 30-50 compounds with experimentally determined activity (e.g., % substrate conversion, turnover frequency) in the target catalytic oxidation.

- Descriptor Generation: Use cheminformatics software (e.g., Dragon, PaDEL-Descriptor) to compute a wide range of 2D and 3D molecular descriptors (constitutional, topological, electrostatic, geometric) for each energy-minimized structure.

- Data Preprocessing: a) Remove descriptors with zero or near-zero variance. b) Handle missing values via imputation or removal. c) Standardize all descriptor values (zero mean, unit variance), fitting the scaling parameters on the training set only.

Protocol 3.2: Variable Selection and Model Construction

Objective: Identify the optimal subset of descriptors to build a robust, interpretable MLR model.

- Initial Filtering: Calculate pairwise correlations between descriptors. For each pair with |r| > 0.95, retain only one.

- Feature Selection: Apply a stepwise selection method (forward, backward, or combinatorial).

- Criteria: Use pre-set p-value thresholds (e.g., p-in = 0.05, p-out = 0.10) or optimize based on the Adjusted R².

- Model Fitting: Fit the MLR model using ordinary least squares (OLS) regression with the selected descriptor subset.
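A greedy forward selection driven by adjusted R², one of the criteria named above, can be sketched as follows. The helper names are hypothetical, and the default cap of roughly one descriptor per five compounds is an assumed rule of thumb, not part of the protocol. p-value-based stepwise entry/removal would additionally require t-statistics, which are omitted.

```python
import numpy as np

def adjusted_r2(X, y):
    """Adjusted R2 of an OLS fit with intercept."""
    n, p = X.shape
    A = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    r2 = 1.0 - (resid @ resid) / ((y - y.mean()) ** 2).sum()
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)

def forward_select(X, y, max_features=None):
    """Add, one at a time, the descriptor that most increases adjusted R2; stop when none does."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, m = X.shape
    # Assumed cap: roughly five compounds per descriptor (a common rule of thumb)
    limit = max_features if max_features is not None else max(1, min(m, n // 5))
    selected, best = [], -np.inf
    while len(selected) < limit:
        candidates = [j for j in range(m) if j not in selected]
        score, j_best = max((adjusted_r2(X[:, selected + [j]], y), j) for j in candidates)
        if score <= best:      # no remaining descriptor improves adjusted R2
            break
        selected.append(j_best)
        best = score
    return selected, best
```

Because the search is greedy, it can miss descriptor pairs that are only informative together; a combinatorial search avoids this at a higher computational cost.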

Protocol 3.3: Model Validation & Interpretation

Objective: Statistically validate the model and interpret the coefficients.

- Internal Validation: Perform Leave-One-Out (LOO) or 5-fold cross-validation. Report Q² (cross-validated R²). A Q² > 0.5 is generally acceptable.

- External Validation: Reserve 20-30% of the initial dataset as an external test set prior to modeling. Predict its activity and calculate predictive R² (R²pred). R²pred > 0.6 indicates good predictive power.

- Diagnostic Checks: Verify MLR assumptions by analyzing residual plots (vs. predicted values, vs. each descriptor) and a Q-Q plot of residuals.

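- Interpretation: Analyze the sign and magnitude of the standardized regression coefficients. A positive coefficient means that higher descriptor values are associated with higher predicted activity, holding the other descriptors constant; a negative coefficient indicates an inverse relationship. Standardized coefficients make magnitudes comparable across descriptors measured in different units.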
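Q² (LOO) and R²pred can be computed without any modeling library. The sketch below assumes NumPy arrays and OLS models; function names are hypothetical. Q² is taken as 1 − PRESS/SS_tot, and R²pred uses the training-set mean as the reference value, which is one common convention (some authors use the test-set mean instead).

```python
import numpy as np

def _ols_fit_predict(X_train, y_train, X_new):
    """Fit OLS with intercept on the training data and predict X_new."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    beta, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return np.column_stack([np.ones(len(X_new)), X_new]) @ beta

def q2_loo(X, y):
    """Q2 = 1 - PRESS / SS_tot; each compound is predicted by a model fitted without it."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    press = 0.0
    for i in range(len(y)):
        mask = np.arange(len(y)) != i
        y_hat = _ols_fit_predict(X[mask], y[mask], X[i:i + 1])[0]
        press += (y[i] - y_hat) ** 2
    return 1.0 - press / ((y - y.mean()) ** 2).sum()

def r2_pred(X_train, y_train, X_test, y_test):
    """External predictive R2 relative to the training-set mean activity."""
    y_hat = _ols_fit_predict(X_train, y_train, X_test)
    ss_res = ((y_test - y_hat) ** 2).sum()
    ss_ref = ((y_test - np.mean(y_train)) ** 2).sum()
    return 1.0 - ss_res / ss_ref
```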

Data Presentation

Table 1: Example MLR QSAR Model for Phenol Catalytic Oxidation Activity

| Model Statistic | Value | Acceptability Threshold | Interpretation |

|---|---|---|---|

| R² | 0.872 | > 0.6 | 87.2% of activity variance is explained. |

| Adjusted R² | 0.855 | Close to R² | Small penalty for the number of descriptors; no sign of over-parameterization. |

| Standard Error (s) | 0.15 | Low relative to Y range | Good model precision. |

| F-statistic (p-value) | 42.7 (1.2e-09) | p < 0.05 | Model is statistically significant. |

| Q² (LOO) | 0.812 | > 0.5 | Model has good internal predictive ability. |

| R²_pred (External) | 0.783 | > 0.6 | Model has good external predictive ability. |

Table 2: Descriptor Coefficients and Interpretation

| Selected Descriptor | Coefficient (β) | Std. Coeff. | t-value (p-value) | VIF | Chemical Interpretation |

|---|---|---|---|---|---|

| logP (Octanol-Water) | 0.45 | 0.58 | 5.12 (0.0001) | 1.8 | Positive influence; suggests hydrophobicity aids substrate binding. |

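| EHOMO (eV) | -1.22 | -0.52 | -4.05 (0.0005) | 2.1 | Negative influence; activity increases as EHOMO decreases. Because a lower HOMO energy normally makes electron donation to the catalyst harder, this trend should be checked against the proposed oxidation mechanism. |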

| Topological Polar Surface Area (Å²) | -0.03 | -0.41 | -3.78 (0.0010) | 1.5 | Negative influence; smaller polar area may improve membrane permeability/metal center access. |

| Intercept | 2.10 | - | 3.98 (0.0006) | - | Baseline activity. |

Visualizations

Title: MLR's Role in Comparative QSAR Thesis

Title: MLR-QSAR Model Development Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for MLR-QSAR Modeling in Catalytic Oxidation Research

| Item/Category | Example/Specific Tool | Function in MLR-QSAR Protocol |

|---|---|---|

| Chemical Modeling Software | Gaussian, Avogadro, CORINA | Used for generating energetically minimized 3D molecular structures required for accurate descriptor calculation. |

| Descriptor Calculation Software | Dragon, PaDEL-Descriptor, RDKit | Computes thousands of quantitative molecular descriptors (e.g., logP, TPSA, EHOMO) from chemical structures. |

| Statistical Analysis Environment | R (lm function; caret and leaps packages), Python (scikit-learn, statsmodels, pandas), SPSS | Provides the computational engine for performing OLS regression, stepwise selection, validation, and diagnostic statistics. |

| Data Curation & Preprocessing Toolkit | Spreadsheet software, custom scripts for normalization/scaling, DataWarrior | Essential for organizing compound-activity data, handling missing values, and standardizing descriptors before modeling. |

| Validation & Visualization Tools | Cross-validation scripts, residual plotting functions (e.g., ggplot2, matplotlib), VIF calculation scripts | Critical for assessing model robustness, checking statistical assumptions, and generating publication-quality diagnostic plots. |

Key Molecular Descriptors for Modeling Cytochrome P450 and Other Oxidative Enzymes

The development of robust Quantitative Structure-Activity Relationship (QSAR) models for predicting the metabolism of xenobiotics by Cytochrome P450 (CYP) and other oxidative enzymes is a cornerstone of modern drug discovery. Within the broader thesis on applying Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR) to catalytic oxidation systems, the selection of mechanistically relevant molecular descriptors is paramount. These descriptors serve as the critical input variables that determine model accuracy, interpretability, and predictive power for properties such as metabolic site prediction, reaction velocity, and inhibitory potential.

Molecular descriptors for oxidative metabolism models can be categorized into electronic, steric, topological, and quantum chemical classes. The following tables summarize the most impactful descriptors, as identified by recent MLR, SVM, and ANN-based QSAR studies.

Table 1: Fundamental Electronic and Steric Descriptors

| Descriptor | Definition | Role in Oxidative Metabolism | Typical Value Range (Example) |

|---|---|---|---|

| Ionization Potential (IP) | Energy required to remove an electron from the molecule. | A lower IP indicates a molecule more prone to one-electron oxidation (e.g., by CYP). | 7.5 - 10.5 eV (for drug-like molecules) |

| Electrophilicity Index (ω) | Energy lowering when the molecule accepts additional electron density from its environment (ω = μ²/2η). | High ω marks electrophilic species (e.g., reactive metabolites); nucleophilic, low-ω substrates are more readily attacked by electrophilic oxidants such as the CYP iron-oxo species. | 0.5 - 5.0 eV |

| Molecular Volume / Weight | Total spatial size of the molecule. | Impacts binding affinity and access to the enzyme's active site. | 200 - 500 Å³ / 200 - 600 Da |

| Polar Surface Area (PSA) | Surface area of polar atoms. | Correlates with membrane permeability and binding orientation. | 50 - 150 Å² |

Table 2: Advanced Quantum Chemical & Topological Descriptors

| Descriptor | Calculation Method | Relevance to CYP/Enzyme Mechanism | Key Insight from Recent SVM/ANN Models |

|---|---|---|---|

| Fukui Function (f⁻) | DFT-based; (ρ(N) - ρ(N-1)) for electrophilic attack. | Identifies atoms with high electron density for hydroxylation. | ANN models using f⁻ show >85% accuracy in site-of-metabolism prediction. |

| Spin Density Distribution | DFT (after single-electron oxidation). | Critical for modeling radical intermediates in CYP-mediated reactions. | High spin density on a carbon atom predicts aliphatic hydroxylation. |

| Molecular Orbital Energies (EHOMO, ELUMO) | Quantum chemical calculation (e.g., DFT, PM6). | HOMO energy indicates ease of oxidation; LUMO relates to electron acceptance. | SVM models using EHOMO outperform those using logP alone for Km prediction (R² > 0.75). |

| Topological Polar Surface Area (TPSA) | Sum of fragment-based contributions. | Rapid estimation of PSA; useful for high-throughput screening in MLR models. | Strong inverse correlation with metabolic clearance in congeneric series. |

Experimental Protocols for Descriptor Generation and Model Validation

Protocol 1: Quantum Chemical Calculation of Fukui Functions for Site Reactivity

- Objective: To compute the electrophilic Fukui function (f⁻) to identify atoms susceptible to oxidation.

- Software: Gaussian 16, ORCA, or open-source alternatives like PySCF.

- Procedure:

- Geometry Optimization: Optimize the neutral molecule's geometry using DFT (e.g., B3LYP functional with 6-31G* basis set).

- Single Point Energy Calculation: Calculate the electron density for the optimized neutral molecule (N electrons).

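- Cation Calculation: Run a single-point calculation on the cation (N-1 electrons) at the optimized neutral geometry (vertical ionization), using the appropriate spin multiplicity (e.g., a doublet for a closed-shell parent).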

- Population Analysis: Perform a natural population analysis (NPA) or use Hirshfeld charges for both systems.

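- Fukui Function (f⁻) Calculation: Compute f⁻ for each atom k as f⁻_k = q_k(N-1) - q_k(N), where q is the atomic charge (equivalently, p_k(N) - p_k(N-1) in terms of electron populations p). Atoms with the highest f⁻ values are the most susceptible to electrophilic attack, i.e., the most nucleophilic sites.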

- Output: A ranked list of atomic indices with their f⁻ values for input into QSAR models.
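Once atomic charges for the neutral (N) and cationic (N-1) systems have been parsed from the quantum chemistry output (parsing not shown), the condensed f⁻ and the atom ranking reduce to a subtraction and a sort. The function name is hypothetical and the charges in the usage line are made-up illustrative numbers, not results of any calculation.

```python
import numpy as np

def condensed_fukui_minus(q_neutral, q_cation):
    """f-_k = q_k(N-1) - q_k(N) from atomic charges of the N- and (N-1)-electron systems.

    Equivalent to p_k(N) - p_k(N-1) when written with electron populations p_k.
    """
    q_neutral = np.asarray(q_neutral, dtype=float)
    q_cation = np.asarray(q_cation, dtype=float)
    f_minus = q_cation - q_neutral
    ranking = np.argsort(-f_minus)  # atom indices, most susceptible to electrophilic attack first
    return f_minus, ranking

# Illustrative charges for a 4-atom fragment (hypothetical values; each set sums to the total charge)
f, rank = condensed_fukui_minus([-0.20, 0.05, -0.10, 0.25], [0.10, 0.15, 0.30, 0.45])
```

Here `rank` is `[2, 0, 3, 1]`, and the f⁻ values sum to 1, as expected when exactly one electron is removed.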

Protocol 2: Building an SVM Model for CYP3A4 Inhibition Prediction

- Objective: To construct a classifier predicting strong (IC50 < 10 µM) vs. weak CYP3A4 inhibitors.

- Software: Python (scikit-learn), LIBSVM.

- Procedure:

- Dataset Curation: Compile a standardized dataset of known inhibitors with measured IC50 from public sources (e.g., ChEMBL). Apply rigorous data curation (remove duplicates, check units).

- Descriptor Calculation: For each compound, calculate a diverse set of ~50 descriptors (e.g., MO energies, logP, TPSA, topological indices) using RDKit or PaDEL-Descriptor.

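- Data Preprocessing: Split data into training (70%) and test (30%) sets. Scale all descriptors (e.g., StandardScaler in scikit-learn), fitting the scaler on the training set only and then applying it to the test set to avoid information leakage.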

- Model Training: Use a radial basis function (RBF) kernel SVM. Optimize hyperparameters (C, gamma) via grid search with 5-fold cross-validation on the training set.

- Validation: Evaluate the final model on the held-out test set using metrics: Accuracy, Sensitivity, Specificity, and AUC-ROC.

- Output: A trained SVM model file and a report of predictive performance on the test set.
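The protocol maps onto a scikit-learn `Pipeline` plus `GridSearchCV`. In this sketch `make_classification` generates placeholder data standing in for about 50 descriptors per compound (label 1 = strong inhibitor); the grid values are assumptions, not recommendations. Placing the scaler inside the pipeline means it is refit on the training folds only during cross-validation.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import confusion_matrix, roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Placeholder descriptor matrix and labels (replace with curated ChEMBL-derived data)
X, y = make_classification(n_samples=300, n_features=50, n_informative=20,
                           n_redundant=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

pipe = Pipeline([("scale", StandardScaler()), ("svm", SVC(kernel="rbf"))])
grid = {"svm__C": [0.1, 1, 10, 100], "svm__gamma": ["scale", 0.001, 0.01, 0.1]}
search = GridSearchCV(pipe, grid, cv=5, scoring="roc_auc")  # 5-fold CV on training set only
search.fit(X_train, y_train)

scores = search.decision_function(X_test)  # continuous scores needed for AUC-ROC
y_pred = search.predict(X_test)
tn, fp, fn, tp = confusion_matrix(y_test, y_pred).ravel()
report = {
    "accuracy": (tp + tn) / len(y_test),
    "sensitivity": tp / (tp + fn),
    "specificity": tn / (tn + fp),
    "auc": roc_auc_score(y_test, scores),
}
print(search.best_params_, report)
```

The fitted `search.best_estimator_` (scaler plus SVM) is the object to serialize as the "trained SVM model file".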

Visualization of Workflows and Relationships

Title: QSAR Model Development Workflow for Oxidative Metabolism

Title: Descriptor Categories Link to CYP Mechanism & Endpoints

The Scientist's Toolkit: Essential Research Reagents & Software

Table 3: Key Resources for Descriptor-Based Modeling of Oxidative Enzymes

| Item Name | Type/Category | Primary Function in Research |

|---|---|---|

| RDKit | Open-Source Cheminformatics Library | Calculates 2D/3D molecular descriptors (topological, steric) at high throughput. |

| Gaussian 16 | Quantum Chemistry Software Suite | Performs DFT calculations to obtain high-level electronic descriptors (MO energies, Fukui functions). |

| PyMOL / Maestro | Molecular Visualization & Modeling | Visualizes substrate-enzyme docking poses to inform steric descriptor selection. |

| CYP450 Reconstitution Kits | Biochemical Reagent (e.g., from Thermo Fisher) | Experimental validation of predictions via in vitro metabolism studies. |

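| scikit-learn / LIBSVM | Machine Learning Libraries | scikit-learn implements SVM (its SVC/SVR classes are built on LIBSVM), MLP-type ANNs, MLR, and other algorithms for building and testing QSAR models; LIBSVM is a standalone SVM library. |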

| ChEMBL / PubChem | Public Bioactivity Database | Source of curated experimental data (IC50, Km) for model training and validation. |

The development of robust Quantitative Structure-Activity Relationship (QSAR) models—including those utilizing Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR)—for catalytic oxidation systems is fundamentally dependent on the quality, breadth, and integrity of the underlying chemical dataset. Catalytic oxidation is a critical process in pharmaceutical synthesis, metabolite production, and environmental remediation. The predictive accuracy of computational models is bounded by the "garbage in, garbage out" principle, making curated, well-annotated experimental data the most critical reagent. This protocol outlines a systematic approach for sourcing, validating, and preparing such datasets for use in machine learning-driven catalyst and reaction optimization.

High-quality datasets for catalytic oxidation QSAR should encompass multiple interrelated data types. The following table summarizes key data categories and their primary sources.

Table 1: Essential Data Types for Catalytic Oxidation QSAR Models

| Data Category | Description | Example Parameters | Example Sources |

|---|---|---|---|

| Catalyst Structures | Precise molecular or material descriptors of the catalyst. | SMILES strings, InChIKey, crystal structure (CIF), active site geometry, elemental composition, oxidation state. | Cambridge Structural Database (CSD), Materials Project, CatApp, PubChem. |

| Substrate Structures | Molecular descriptors of the compound being oxidized. | SMILES, functional groups, topological indices (e.g., Wiener index), electronic parameters (HOMO/LUMO). | PubChem, ChEMBL, ZINC Database. |

| Reaction Conditions | Quantitative parameters defining the experimental environment. | Temperature, pressure, solvent identity & polarity, oxidant concentration (e.g., H2O2, O2), pH, reaction time. | Elsevier Reaction Data, USPTO Patents, published experimental procedures in literature. |

| Kinetic & Performance Data | Numeric outcomes of the catalytic oxidation experiment. | Turnover Frequency (TOF), Turnover Number (TON), conversion (%), yield (%), selectivity (%), rate constant (k). | NIST Chemical Kinetics Database, CatDB, extracted from peer-reviewed articles (e.g., ACS, RSC, Wiley publications). |

Protocol: Systematic Data Sourcing and Curation Workflow

Protocol: Automated Literature Mining and Extraction

Objective: To programmatically gather a large corpus of catalytic oxidation data from scientific literature and patents.

- Query Formulation: Use domain-specific keywords and adapt the Boolean syntax to each database. Example: ("catalytic oxidation" AND "turnover frequency" AND (heterogeneous OR homogeneous) AND alcohol AND aldehyde) AND NOT "electrochemical".

- API-Based Search: Execute searches via publishers' APIs (e.g., Elsevier Scopus, Springer Nature, PubMed E-utilities) and patent databases (USPTO, Espacenet). Tools: the Python libraries requests, BeautifulSoup (for parsing), and selenium (for dynamic pages).

- Full-Text Retrieval: For open-access articles, download full-text PDFs. For others, retrieve abstracts and metadata.

- Named Entity Recognition (NER): Apply a pre-trained chemical NER model (e.g., ChemDataExtractor, or spaCy with a chemistry model) to identify catalyst names, substrates, conditions, and numeric performance values in the text.

- Relationship Mapping: Use rule-based or ML algorithms to associate extracted entities (e.g., link a specific TOF value to a catalyst and substrate pair mentioned in the same sentence or paragraph).

- Data Point Validation: Cross-reference extracted numeric values with those in any available supplementary information tables (preferred source).
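A deliberately crude, rule-based version of the relationship-mapping step is sketched below: it pairs each TOF value in a sentence with the catalyst names found in that same sentence. The regular expression, the function name, and the list of known catalyst names are hypothetical; production pipelines would rely on dedicated parsers such as those in ChemDataExtractor.

```python
import re

# Hypothetical rule: "TOF", up to 20 non-digit characters, a number, then a unit such as "h-1" or "s-1"
TOF_PATTERN = re.compile(
    r"TOF\D{0,20}?(?P<value>\d+(?:\.\d+)?)\s*(?P<unit>[hs])\s*(?:-1|\^-1|⁻¹)",
    re.IGNORECASE,
)

def extract_tof(sentence, catalysts):
    """Link every TOF value in a sentence to the known catalyst names mentioned in it."""
    found = [c for c in catalysts if c in sentence]
    records = []
    for m in TOF_PATTERN.finditer(sentence):
        records.append({
            "tof": float(m.group("value")),
            "unit": m.group("unit").lower() + "-1",
            "catalysts": found,
        })
    return records

recs = extract_tof("Over Au/TiO2 the TOF reached 1250 h-1 for benzyl alcohol oxidation.",
                   ["Au/TiO2", "Pd/C"])
```

Sentence-level co-occurrence is a weak signal; extracted records should still go through the supplementary-table cross-check described above.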

Protocol: Harmonization and Standardization

Objective: To transform raw, inconsistently reported data into a uniform, machine-readable format.

- Structure Standardization: Resolve chemical names to structures, then convert all SMILES to standardized canonical SMILES using a toolkit like RDKit (open-source) or Open Babel. For inorganic/organometallic catalysts, define a simplified representation focusing on the active metal center and first coordination sphere using a dedicated notation (e.g., using pymatgen for materials).

- Unit Conversion: Convert all reported units to a consistent system (SI preferred). Example: for TOF, convert mmol·g<sub>cat</sub><sup>-1</sup>·h<sup>-1</sup> to mol·mol<sub>metal</sub><sup>-1</sup>·s<sup>-1</sup> wherever the metal loading is reported, since the conversion requires it.

- Descriptor Calculation: Using standardized structures, compute a suite of molecular descriptors relevant to redox catalysis.

- Software: RDKit, Dragon (Talete), PaDEL-Descriptor.

- Key Descriptors: Electronic (electronegativity, ionization potential), steric (topological surface area, van der Waals volume), and quantum chemical (partial charges, Fukui indices, which require DFT preprocessing).

- Missing Data Annotation: Clearly label missing or unreported parameters (e.g., pH: NA); do not interpolate or guess values for the core dataset.
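The TOF conversion is simple arithmetic once the metal loading is known: TOF (s⁻¹) = rate / loading / 3600, with the rate in mmol·g<sub>cat</sub><sup>-1</sup>·h<sup>-1</sup> and the loading in mmol metal per g catalyst. The helper names are hypothetical, and the sketch assumes every metal atom is an active site (no dispersion correction).

```python
def metal_loading_mmol_per_g(wt_percent, molar_mass_g_per_mol):
    """Convert a metal loading in wt% to mmol of metal per g of catalyst."""
    return wt_percent * 10.0 / molar_mass_g_per_mol

def tof_per_second(rate_mmol_per_gcat_per_h, loading_mmol_metal_per_gcat):
    """Convert mmol g_cat^-1 h^-1 to mol mol_metal^-1 s^-1 (i.e., s^-1).

    Assumes all metal atoms are active sites; no dispersion correction is applied.
    """
    if loading_mmol_metal_per_gcat <= 0:
        raise ValueError("metal loading must be positive")
    return rate_mmol_per_gcat_per_h / loading_mmol_metal_per_gcat / 3600.0

# Example: 1 wt% Au (M = 196.97 g/mol) and a rate of 10 mmol g_cat^-1 h^-1
loading = metal_loading_mmol_per_g(1.0, 196.97)  # about 0.0508 mmol Au per g catalyst
tof = tof_per_second(10.0, loading)              # about 0.0547 s^-1
```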

Protocol: Quality Control and Outlier Detection

Objective: To identify and flag erroneous or non-representative data points.

- Physicochemical Plausibility Check: Flag values outside possible ranges (e.g., yield >100%, negative rate constant).

- Statistical Outlier Detection: For continuous variables (e.g., TOF), apply interquartile range (IQR) or Z-score analysis within comparable reaction classes. Use domain knowledge to validate exclusions.

- Thermodynamic Consistency Check: For reactions with reported conversion/yield, check for gross violations of equilibrium limits under the reported conditions.

- Data Provenance Logging: Maintain an audit trail linking each final data point to its original source (DOI, Patent Number).
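The plausibility and IQR checks can be expressed as flag columns on a pandas table, so suspicious rows are marked for expert review rather than silently deleted. Column names (`tof`, `yield_pct`, `reaction_class`) and the function name are hypothetical; a Z-score rule could replace the IQR rule in the same grouped structure.

```python
import pandas as pd

def flag_records(df, value_col="tof", yield_col="yield_pct",
                 group_col="reaction_class", k=1.5):
    """Return a copy of df with boolean QC flag columns; no rows are removed."""
    out = df.copy()
    # Physicochemical plausibility: yields within 0-100 %, no negative rates/TOFs
    out["implausible"] = (out[yield_col] < 0) | (out[yield_col] > 100) | (out[value_col] < 0)
    # IQR rule applied within each reaction class so that different chemistries are not pooled
    grouped = out.groupby(group_col)[value_col]
    q1 = grouped.transform(lambda s: s.quantile(0.25))
    q3 = grouped.transform(lambda s: s.quantile(0.75))
    iqr = q3 - q1
    out["iqr_outlier"] = (out[value_col] < q1 - k * iqr) | (out[value_col] > q3 + k * iqr)
    return out

df = pd.DataFrame({
    "reaction_class": ["alcohol->aldehyde"] * 6 + ["alkane->alcohol"] * 3,
    "tof": [10, 12, 11, 9, 13, 500, 0.5, 0.6, 0.4],
    "yield_pct": [80, 85, 90, 75, 105, 60, 20, 25, 18],
})
flagged = flag_records(df)
```

In this toy table, the 105 % yield is flagged as implausible and the TOF of 500 as an IQR outlier within its class.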

Visualization of the Data Curation Workflow

Data Curation Workflow for Catalytic Oxidation QSAR

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools and Resources for Dataset Curation

| Item Name | Provider/Software | Primary Function in Curation |

|---|---|---|

| RDKit | Open-Source Cheminformatics | Core library for chemical structure manipulation, standardization, and descriptor calculation from SMILES. |

| ChemDataExtractor | University of Cambridge | Natural language processing toolkit specifically designed for automatically extracting chemical information from scientific documents. |

| Cambridge Structural Database (CSD) | CCDC | Authoritative repository for small-molecule organic and metal-organic crystal structures, essential for catalyst geometry descriptors. |

| Dragon Professional | Talete | Computes >5000 molecular descriptors for QSAR modeling; useful for comprehensive substrate/catalyst profiling. |

| pymatgen | Materials Project | Python library for materials analysis, enabling the generation of descriptors for solid/surface catalysts. |

| KNIME Analytics Platform | KNIME AG | Visual workflow tool for building, automating, and documenting the entire data preprocessing pipeline without extensive coding. |

| Jupyter Notebooks | Project Jupyter | Interactive environment for developing and sharing code for data mining, cleaning, and analysis in Python/R. |

| SciFinderⁿ | CAS | Commercial, comprehensive chemical information database for validating structures and searching reaction data. |

Protocol: Constructing the Final Modeling Dataset

Objective: To integrate all curated data into a unified table for machine learning.

- Feature Table Assembly: Create a master table where each row represents a unique catalytic oxidation experiment.

- Column Structure:

- Identifier Columns: Source DOI, Internal ID.

- Input Features (X): Descriptors for catalyst, substrate, and conditions (e.g., temperature, pH, solvent polarity index).

- Target Variables (Y): Performance metrics (e.g., TOF, Selectivity). Note: For classification models, discretize continuous targets (e.g., High/Low TOF).

- Train-Test Split Strategy: Perform a temporal split (older data for training, recent for testing) or a cluster-based split to evaluate extrapolation ability, rather than a simple random split, to prevent data leakage and over-optimistic performance estimates.

- Data Sheet Creation: Document the final dataset with a "datasheet" detailing motivations, composition, preprocessing steps, and potential uses/limitations, following best practices for dataset transparency.
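A cluster-based split can be sketched with scikit-learn: k-means groups experiments in descriptor space, and `GroupShuffleSplit` assigns whole clusters to either the training or the test set, so the test set probes extrapolation to unseen regions. The function name and the choice of 10 clusters are assumptions. A temporal split is simpler still: sort the table by publication year and hold out the most recent records.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.model_selection import GroupShuffleSplit

def cluster_split(X, n_clusters=10, test_size=0.2, seed=0):
    """Assign whole descriptor-space clusters to train or test; no cluster is shared."""
    groups = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit_predict(X)
    # test_size here is the fraction of clusters (groups) placed in the test set
    splitter = GroupShuffleSplit(n_splits=1, test_size=test_size, random_state=seed)
    train_idx, test_idx = next(splitter.split(X, groups=groups))
    return train_idx, test_idx, groups

rng = np.random.default_rng(3)
X = rng.normal(size=(200, 5))  # placeholder for the curated feature table
train_idx, test_idx, groups = cluster_split(X)
```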

Building Robust QSAR Models: A Step-by-Step Guide for ANN, SVM, and MLR

1. Introduction: Context within ANN, SVM, MLR QSAR for Catalytic Oxidation Systems

Quantitative Structure-Activity Relationship (QSAR) modeling is a cornerstone in modern chemical research, enabling the prediction of molecular activity from structural descriptors. Within the specific thesis context of researching catalytic oxidation systems (crucial for environmental remediation, chemical synthesis, and drug metabolism studies), the development of robust QSAR models using Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR) is paramount. These models help predict catalytic efficiency, substrate specificity, or byproduct formation, accelerating the design of novel catalysts and oxidation processes.

2. Application Notes & Protocols: A Stepwise Workflow

2.1. Phase I: Data Acquisition and Curation

- Protocol 1.1: Dataset Compilation from Catalytic Oxidation Literature

- Objective: Assemble a homogeneous dataset of molecular structures and their corresponding catalytic oxidation activity metrics (e.g., turnover frequency (TOF), conversion %, TON, product selectivity).

- Methodology:

- Perform a systematic search using scientific databases (SciFinder, Reaxys, Web of Science) with keywords: "catalytic oxidation," "homogeneous/heterogeneous catalyst," "[specific substrate, e.g., alkane]," "kinetic data."

- Extract quantitative activity data for a consistent set of reaction conditions (temperature, pressure, solvent, oxidant).

- For each catalyst/substrate, generate or obtain a clean 2D or 3D molecular structure file (SDF, MOL).

- Log all data in a structured master table. Include fields: Compound ID, SMILES string, Experimental Activity Value, Reaction Conditions Code, Reference.

- Protocol 1.2: Chemical Structure Standardization and Preparation

- Objective: Generate a consistent, chemically "sensible" representation of all molecules in the dataset.

- Methodology:

- Use cheminformatics toolkits (e.g., RDKit, OpenBabel) within a Python script or KNIME workflow.

- Apply steps: Neutralization of charges, removal of salts, generation of canonical tautomers, aromatization, and explicit hydrogen addition.

- Optimize 3D geometry using a force field (MMFF94 or UFF) and perform a conformational search if 3D descriptors are to be used.

- Output a standardized SDF file for descriptor calculation.

2.2. Phase II: Descriptor Calculation and Dataset Preparation

- Application Note 2.1: Descriptors encode molecular features into numerical values. For catalytic systems, key descriptor classes include:

- Electronic: HOMO/LUMO energies (related to oxidation and reduction potentials), partial charges, dipole moment.

- Geometric/Topological: Molecular volume, surface area, connectivity indices.

- Steric: Taft’s steric constant, molar refractivity.

- Quantum Chemical: Fukui indices (for electrophilicity/nucleophilicity in oxidation).

- Protocol 2.2: Descriptor Calculation and Pre-processing

- Calculate descriptors using software like Dragon, PaDEL-Descriptor, or RDKit.

- Perform data cleaning: Remove descriptors with zero variance or >20% missing values, and for each descriptor pair with high pairwise correlation (|r| > 0.95) keep only one.

- Scale the remaining descriptor matrix (e.g., Standardization or Min-Max Scaling).
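The cleaning and scaling steps can be sketched with pandas and scikit-learn. All selection decisions are made on the training table only, and remaining gaps are median-imputed before standardization; the imputation strategy and the function names are assumptions, not part of the protocol.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

def select_descriptors(train, max_missing=0.20, max_corr=0.95):
    """Decide which descriptor columns to keep, using the training set only."""
    cols = list(train.columns[train.isna().mean() <= max_missing])  # <= 20 % missing
    cols = [c for c in cols if train[c].std() > 0]                   # drop zero-variance columns
    corr = train[cols].corr().abs()
    dropped = set()
    for i, a in enumerate(cols):
        if a in dropped:
            continue
        for b in cols[i + 1:]:
            if b not in dropped and corr.loc[a, b] > max_corr:
                dropped.add(b)  # keep the first descriptor of each highly correlated pair
    return [c for c in cols if c not in dropped]

def fit_preprocessor(train, test, cols):
    """Median-impute remaining gaps, then standardize; both steps are fitted on training data."""
    prep = make_pipeline(SimpleImputer(strategy="median"), StandardScaler())
    return prep, prep.fit_transform(train[cols]), prep.transform(test[cols])
```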

2.3. Phase III: Model Building, Validation, and Selection

- Protocol 3.1: Dataset Division and Model Training

- Split data into training (≈70-80%) and external test (≈20-30%) sets using rational methods (e.g., Kennard-Stone, activity-based sorting).

- For MLR: Use stepwise selection or genetic algorithm on the training set to select the most relevant, uncorrelated descriptors. Build linear model.

- For SVM: Optimize hyperparameters (kernel type: RBF; C, gamma) via grid/random search with cross-validation on the training set.

- For ANN: Design a multilayer perceptron (MLP). Optimize architecture (# layers, # neurons), learning rate, and epochs using cross-validation.
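Kennard-Stone, the first rational splitting method named above, starts from the two most distant compounds in descriptor space and repeatedly adds the compound farthest from everything already selected. A NumPy sketch (function name hypothetical; memory grows as n² because of the full distance matrix):

```python
import numpy as np

def kennard_stone(X, n_train):
    """Kennard-Stone selection in descriptor space; returns (train_idx, test_idx)."""
    X = np.asarray(X, dtype=float)
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)  # pairwise Euclidean distances
    i, j = np.unravel_index(np.argmax(d), d.shape)              # two most distant compounds
    selected = [int(i), int(j)]
    remaining = [k for k in range(len(X)) if k not in selected]
    while len(selected) < n_train:
        # distance from each remaining compound to its nearest already-selected compound
        min_d = d[np.ix_(remaining, selected)].min(axis=1)
        pick = remaining[int(np.argmax(min_d))]                  # farthest from the current set
        selected.append(pick)
        remaining.remove(pick)
    return np.array(selected), np.array(remaining)

X = np.array([[0.0], [1.0], [2.0], [3.0], [10.0]])
train_idx, test_idx = kennard_stone(X, 3)
```

Because Kennard-Stone places the extreme compounds in the training set, the test set tends to lie inside the training domain; a cluster-based or activity-sorted split tests extrapolation more harshly.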

- Protocol 3.2: Rigorous Model Validation

- Principle: Adhere to OECD QSAR validation principles.

- Methodology:

- Internal Validation: Perform 5- or 10-fold cross-validation on the training set. Report Q² and RMSE_CV.

- External Validation: Predict the held-out test set. Report R²ₑₓₜ, RMSEₑₓₜ.

- Y-Randomization: Shuffle activity values and rebuild models. Confirm low performance to rule out chance correlation.

- Applicability Domain (AD) Definition: Use methods like Leverage (Williams plot) or distance-based measures to define the chemical space where the model is reliable.
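Y-randomization can be sketched with scikit-learn: compute a pooled cross-validated Q² (1 − PRESS/SS_tot from out-of-fold predictions) for the real activities, then repeat with shuffled activities. The function names, the 5-fold shuffled `KFold`, and the empirical p-value formula (1 + number of permuted Q² ≥ true Q²)/(rounds + 1) are assumptions of this sketch.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import KFold, cross_val_predict

def cv_q2(model, X, y, cv):
    """Pooled cross-validated Q2 = 1 - PRESS / SS_tot from out-of-fold predictions."""
    return r2_score(y, cross_val_predict(model, X, y, cv=cv))

def y_randomization(model, X, y, n_rounds=50, seed=0):
    """Compare Q2 on the real activities with Q2 on shuffled activities."""
    cv = KFold(n_splits=5, shuffle=True, random_state=seed)
    rng = np.random.default_rng(seed)
    q2_true = cv_q2(model, X, y, cv)
    q2_perm = np.array([cv_q2(model, X, rng.permutation(y), cv) for _ in range(n_rounds)])
    p_value = (1 + np.sum(q2_perm >= q2_true)) / (n_rounds + 1)  # empirical permutation p-value
    return q2_true, q2_perm, p_value

rng = np.random.default_rng(5)
X = rng.normal(size=(60, 4))
y = X @ np.array([1.0, 0.5, -0.7, 0.0]) + rng.normal(scale=0.3, size=60)
q2, q2_perm, p = y_randomization(LinearRegression(), X, y, n_rounds=20)
```

Any estimator (SVR, MLPRegressor) can replace `LinearRegression`; the shuffled-activity Q² values should cluster around or below zero.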

2.4. Phase IV: Model Interpretation and Deployment

- Protocol 4.1: Interpretation of the Selected Model

- MLR: Interpret sign and magnitude of coefficients.

- SVM/ANN: Use model-agnostic tools (e.g., SHAP, LIME) to determine descriptor importance and contribution for specific predictions.

- Protocol 4.2: Deployment for Virtual Screening

- Serialize the final model (e.g., using pickle in Python, .rds in R).

- Develop a simple web interface (Flask, Streamlit) or a script that:

- Accepts a SMILES string or SDF file.

- Applies the same standardization and descriptor calculation pipeline.

- Checks the input against the model's Applicability Domain.

- Returns a prediction with confidence interval.
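The Applicability Domain check at deployment can use the leverage approach mentioned in the validation protocol: h = xᵀ(XᵀX)⁻¹x, compared with the Williams-plot warning value h* = 3(p + 1)/n. The class name is hypothetical, the design matrix includes an intercept column (so the mean training leverage is exactly (p + 1)/n), and the query is assumed to have passed through the same standardization and descriptor pipeline as the training data (not shown).

```python
import numpy as np

class LeverageAD:
    """Leverage-based applicability domain with warning leverage h* = 3(p + 1)/n."""

    def fit(self, X_train):
        X_train = np.asarray(X_train, dtype=float)
        n, p = X_train.shape
        A = np.column_stack([np.ones(n), X_train])   # descriptors plus intercept column
        self.xtx_inv_ = np.linalg.pinv(A.T @ A)
        self.h_star_ = 3.0 * (p + 1) / n
        return self

    def leverage(self, X):
        X = np.asarray(X, dtype=float)
        A = np.column_stack([np.ones(len(X)), X])
        # diagonal of A (A'A)^-1 A', computed row by row without forming the full hat matrix
        return np.einsum("ij,jk,ik->i", A, self.xtx_inv_, A)

    def in_domain(self, X):
        return self.leverage(X) <= self.h_star_

rng = np.random.default_rng(6)
X_train = rng.normal(size=(50, 3))  # placeholder standardized training descriptors
ad = LeverageAD().fit(X_train)
```

A prediction for a query with leverage above h* is an extrapolation and should be reported as such.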

3. Data Presentation

Table 1: Representative Performance Metrics for Different QSAR Algorithms on a Catalytic Oxidation Dataset (Hypothetical Example)

| Model Type | Training R² | Cross-Validation Q² | External Test Set R² | RMSE (Test) | Key Descriptors Identified |

|---|---|---|---|---|---|

| MLR | 0.85 | 0.78 | 0.76 | 0.45 | HOMO Energy, Molecular Polarizability |

| SVM (RBF) | 0.92 | 0.85 | 0.83 | 0.32 | (Non-linear combination of multiple descriptors) |

| ANN (2 hidden layers) | 0.95 | 0.84 | 0.82 | 0.35 | (Complex non-linear relationships) |

4. Visualized Workflow

Diagram Title: QSAR Model Development Workflow

5. The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions & Materials for QSAR on Catalytic Systems

| Item | Function/Explanation |

|---|---|

| RDKit (Open-Source) | Core cheminformatics library for Python. Used for molecule standardization, descriptor calculation, fingerprint generation, and basic modeling. |

| PaDEL-Descriptor | Software for calculating 1D, 2D, and 3D molecular descriptors and fingerprints from chemical structures. |

| scikit-learn (Python) | Primary library for implementing MLR, SVM, and ANN models, as well as for data preprocessing, validation, and hyperparameter tuning. |

| TensorFlow/PyTorch | Deep learning frameworks essential for building complex, custom ANN architectures beyond basic MLPs. |

| KNIME / Orange Data Mining | Visual programming platforms that provide GUI nodes for data manipulation, modeling, and visualization, useful for prototyping. |

| OECD QSAR Toolbox | Software to aid in applying OECD validation principles, profiling chemicals, and filling data gaps, crucial for regulatory acceptance. |

| Catalytic Oxidation Dataset | Curated, homogeneous collection of catalyst/substrate structures and associated kinetic/activity data. The foundational asset. |

| High-Performance Computing (HPC) Cluster | Computational resource necessary for quantum chemical descriptor calculations (e.g., DFT for HOMO/LUMO) and extensive hyperparameter optimization. |

Feature Selection and Dimensionality Reduction Techniques for Oxidation Data

This application note details practical protocols for feature selection (FS) and dimensionality reduction (DR) within the specific context of developing quantitative structure-activity relationship (QSAR) models—specifically Artificial Neural Network (ANN), Support Vector Machine (SVM), and Multiple Linear Regression (MLR) models—for catalytic oxidation systems. In drug development and materials science, oxidation data, such as catalytic turnover frequencies or product yield percentages, is often linked to high-dimensional molecular or catalyst descriptors. Effective FS/DR is critical to prevent overfitting, improve model interpretability, and enhance the predictive performance of ANN, SVM, and MLR models in this research domain.

Core Techniques: Protocols and Application Notes

Filter-Based Feature Selection Methods

Protocol: Variance Threshold and Correlation Filtering

- Data Preparation: Assemble your descriptor matrix (e.g., molecular descriptors from DRAGON software, electronic parameters, steric maps). Apply the variance filter to unscaled or MinMaxScaler-scaled data: after StandardScaler every non-constant feature has unit variance, so a variance threshold would no longer discriminate between features. Standardize the retained features afterwards for modeling.

- Low-Variance Removal: Calculate the variance of each feature. Remove all features whose variance does not exceed a defined threshold (e.g., 0.01). This eliminates near-constant descriptors that carry little information about oxidation activity.

- High-Correlation Filter: Compute the Pearson correlation matrix for the remaining features. Identify pairs of features with |r| > 0.85. For each highly correlated pair, remove the feature with the lower absolute correlation to the target variable (e.g., oxidation rate constant), computed on the training set only.

- Output: A reduced, less redundant descriptor set for subsequent modeling.
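Both filters fit in a short function. The sketch uses scikit-learn's `VarianceThreshold` (which keeps features whose variance is strictly greater than the threshold) on unscaled training data, then resolves each highly correlated pair in favor of the descriptor more correlated with the target. The function name is hypothetical.

```python
import numpy as np
from sklearn.feature_selection import VarianceThreshold

def variance_and_correlation_filter(X, y, var_threshold=0.01, max_corr=0.85):
    """Return kept column indices; run on the unscaled (or min-max scaled) training set."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    vt = VarianceThreshold(threshold=var_threshold).fit(X)
    kept = [int(i) for i in np.flatnonzero(vt.get_support())]
    corr = np.abs(np.corrcoef(X[:, kept], rowvar=False))              # feature-feature |r|
    relevance = [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in kept]    # feature-target |r|
    drop = set()
    for a in range(len(kept)):
        for b in range(a + 1, len(kept)):
            if a in drop or b in drop:
                continue
            if corr[a, b] > max_corr:
                # remove the member of the pair that is less correlated with the target
                drop.add(a if relevance[a] < relevance[b] else b)
    return [kept[i] for i in range(len(kept)) if i not in drop]

rng = np.random.default_rng(7)
x0 = rng.normal(size=100)
X = np.column_stack([
    x0,                                        # informative descriptor
    x0 + 0.05 * rng.normal(size=100),          # near-duplicate of x0
    rng.normal(size=100),                      # independent descriptor
    3.0 + 0.001 * rng.normal(size=100),        # near-constant descriptor
])
y = 2 * x0 + 0.5 * X[:, 2] + 0.1 * rng.normal(size=100)
kept = variance_and_correlation_filter(X, y)
```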

Wrapper Method: Recursive Feature Elimination (RFE) for SVM/MLR

Protocol: RFE using Cross-Validation

- Base Model Selection: Choose an estimator that exposes per-feature weights (coef_ or feature_importances_ in scikit-learn). MLR or an SVM with a linear kernel both qualify; an RBF-kernel SVM has no per-feature coefficients and cannot be used directly as the RFE estimator.

- Ranking Features: Initialize RFE, specifying the estimator and the number of features to select. RFE fits the model, ranks features by importance (coefficient magnitude for MLR/linear SVM, which is only meaningful on standardized features), and removes the weakest feature(s).

- Cross-Validation Loop: Embed RFE in a k-fold (e.g., 5-fold) cross-validation loop to assess the stability of the selected feature subset.

- Optimal Feature Number: Select the number of features that maximizes the cross-validated R² or minimizes RMSE for your oxidation dataset (scikit-learn's RFECV performs this search automatically).

- Final Selection: Apply RFE with the optimal number to the entire training set to obtain the final feature subset.
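With scikit-learn, the cross-validated search over the number of features is available as `RFECV`. In this sketch, `make_regression` provides placeholder data whose features are already on a common scale (real descriptors should be standardized on the training set first), and the activity is rescaled to unit variance so the default SVR `epsilon` is sensible; these choices are assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFECV
from sklearn.model_selection import KFold
from sklearn.svm import SVR

# Placeholder descriptor matrix: 20 descriptors, 5 of them informative
X, y, true_coef = make_regression(n_samples=80, n_features=20, n_informative=5,
                                  noise=5.0, coef=True, random_state=0)
y = (y - y.mean()) / y.std()  # unit-scale target so the default SVR epsilon/C are reasonable

selector = RFECV(
    estimator=SVR(kernel="linear", C=1.0),  # linear kernel exposes coef_ for ranking
    step=1,                                 # eliminate one descriptor per iteration
    cv=KFold(n_splits=5, shuffle=True, random_state=0),
    scoring="r2",
    min_features_to_select=1,
)
selector.fit(X, y)  # after the CV search, RFE is refit on all rows with the optimal count
selected = np.flatnonzero(selector.support_)
print(selector.n_features_, selected.tolist())
```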

Embedded Method: LASSO Regularization for MLR

Protocol: Feature Selection via L1 Regularization

- Model Formulation: Apply LASSO regression (linear regression with an L1 penalty) to your standardized descriptor matrix (X) and oxidation activity vector (y).

- Hyperparameter Tuning: Use a cross-validated search (e.g., LassoCV) to find the regularization strength (α) that minimizes the mean squared error.

- Feature Extraction: Fit the final LASSO model with the optimal α. Features with non-zero coefficients are selected, because the L1 penalty drives the coefficients of uninformative descriptors to exactly zero.

- Validation: Use the selected features either directly as the sparse LASSO model or refit them with OLS to obtain an interpretable MLR model, and validate the result on held-out data.

Dimensionality Reduction: Principal Component Analysis (PCA)

Protocol: PCA for Descriptor Space Compression

- Standardization (crucial): Standardize all features to zero mean and unit variance. Otherwise, descriptors with large numeric ranges dominate the components.

- Covariance Matrix & Decomposition: Compute the covariance matrix of the standardized data (equivalently, the correlation matrix of the original descriptors) and perform an eigendecomposition.

- Component Selection: Plot the cumulative explained variance ratio. Select the number of principal components (PCs) that together explain 80-95% of the total variance in the standardized descriptor set.

- Projection: Transform the original high-dimensional descriptor data into a new subspace defined by the selected PCs.

- Modeling: Use the PC scores as new, mutually uncorrelated input features for ANN or SVM models.
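The PCA steps above map directly onto NumPy, as in the sketch below. The helper name `pca_eig` and the synthetic rank-2 data are illustrative assumptions. scikit-learn's `sklearn.decomposition.PCA` obtains the same components via SVD, up to sign. Test-set compounds must be projected using the training-set means, standard deviations and eigenvectors.

```python
import numpy as np


def pca_eig(X, variance_target=0.95):
    """PCA by eigendecomposition of the covariance of standardized data.

    Returns (scores, cumulative_ratio) for the smallest number of components
    whose cumulative explained variance reaches variance_target.
    """
    X = np.asarray(X, dtype=float)
    # 1. Standardize each descriptor to zero mean and unit variance
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # 2. Covariance of Z (= correlation matrix of X) and its eigendecomposition;
    #    eigh handles symmetric matrices and returns eigenvalues in ascending order
    eigvals, eigvecs = np.linalg.eigh(np.cov(Z, rowvar=False))
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # 3. Cumulative explained-variance ratio and number of components to keep
    cumulative = np.cumsum(eigvals) / eigvals.sum()
    k = min(int(np.searchsorted(cumulative, variance_target)) + 1, len(eigvals))
    # 4. Project the standardized data onto the first k principal axes (PC scores)
    scores = Z @ eigvecs[:, :k]
    return scores, cumulative[:k]


rng = np.random.default_rng(0)
latent = rng.normal(size=(100, 2))
X = latent @ rng.normal(size=(2, 10)) + 0.05 * rng.normal(size=(100, 10))
scores, cumulative = pca_eig(X, variance_target=0.95)
print("components kept:", scores.shape[1], "cumulative variance:", cumulative[-1])
```

Because the eigenvectors are orthogonal, the resulting PC scores are mutually uncorrelated, which is why they suit the ANN and SVM inputs described in the protocol.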

Table 1: Comparison of FS/DR Techniques for Oxidation Data QSAR Modeling

| Technique | Type | Key Hyperparameters | Output for Modeling | Suitability for Model Type | Pros for Oxidation Data | Cons |
|---|---|---|---|---|---|---|
| Variance Threshold | Filter | Threshold value | Subset of original features | ANN, SVM, MLR | Fast; removes non-informative descriptors. | Univariate; ignores feature relationships. |
| Correlation Filter | Filter | Correlation cutoff (e.g., 0.85) | Subset of original features | ANN, SVM, MLR | Reduces multicollinearity; improves MLR stability. | May remove synergistically important features. |
| RFE | Wrapper | Estimator, # of features | Optimal subset of original features | SVM, MLR (estimator-dependent) | Driven by model performance; evaluates descriptors jointly rather than one at a time. | Computationally heavy; risk of overfitting to the chosen estimator. |
| LASSO | Embedded | Regularization strength (α) | Subset (non-zero coefficients) of original features | Primarily MLR/linear models | Built-in selection; produces interpretable models. | Assumes linearity; selection is unstable with highly correlated features. |
| PCA | DR | # of components / % variance | Transformed features (PC scores) | ANN, SVM (less ideal for MLR) | Handles multicollinearity; reduces noise. | Loss of interpretability (PCs are linear combinations). |

Table 2: Illustrative Results from Oxidation Catalyst Study

| Method | Initial Descriptors | Final Features/PCs | SVM R² (Test) | ANN R² (Test) | MLR R² (Test) | Key Selected Descriptor Types |
|---|---|---|---|---|---|---|
| Correlation Filter + RFE | 250 | 18 | 0.89 | 0.91 | 0.82 | ESP charges, Wiberg indices, Sterimol parameters |
| LASSO Regression | 250 | 22 | N/A | N/A | 0.85 | Conductor-like Screening Model (COSMO) energies, Hirshfeld charges |
| PCA (95% Variance) | 250 | 8 PCs | 0.87 | 0.90 | 0.79 | Latent variables (linear combinations of all descriptors) |

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 3: Essential Computational Tools & Datasets for Oxidation Data Analysis

| Item / Software | Function in FS/DR for Oxidation QSAR |
|---|---|
| DRAGON / PaDEL | Generates exhaustive sets of molecular descriptors (constitutional, topological, electronic) for catalyst/organic substrate libraries. |
| Gaussian, ORCA | Quantum chemistry software to calculate electronic structure descriptors (Fukui indices, HOMO/LUMO energies, partial charges) critical for oxidation mechanisms. |
| scikit-learn (Python) | Primary library implementing VarianceThreshold, RFE, LassoCV, PCA, and SVM/MLR/ANN models with a unified API. |
| RDKit | Open-source cheminformatics toolkit for handling molecular structures, calculating 2D/3D descriptors, and integrating with ML workflows. |
| Catalyst Database (e.g., NIST) | Curated experimental datasets of catalytic oxidation reactions (e.g., alkene epoxidation, C-H oxidation) for training and validating models. |
| Matplotlib / Seaborn | Visualization libraries for creating correlation matrices, feature importance plots, and PCA biplots to guide FS/DR decisions. |

Visualization of Methodologies

QSAR Feature Selection and Reduction Workflow

LASSO Regression Mechanism for Feature Selection

1. Introduction within the Thesis Context

This protocol details the implementation of Multiple Linear Regression (MLR) Quantitative Structure-Activity Relationship (QSAR) models. Within the broader thesis investigating ANN, SVM, and MLR models for catalytic oxidation systems and drug development, MLR serves as the foundational, interpretable benchmark. Its linear framework provides clear insights into structural descriptors governing activity, against which more complex non-linear models (ANN, SVM) are compared for predictive performance in modeling oxidation-driven biological activities.

2. Foundational Assumptions of MLR-QSAR

Prior to model development, the following statistical and domain-specific assumptions must be verified:

- Linearity: A linear relationship exists between molecular descriptors (independent variables) and the biological activity (dependent variable).

- Homoscedasticity: The variance of residual errors is constant across all levels of the predicted activity.

- Normality: Residual errors are normally distributed.

- Independence: Observations (compounds) are independent of each other.

- Absence of Multicollinearity: Molecular descriptors are not highly correlated with each other.

- Applicability Domain: The model is valid only for compounds within the chemical space defined by the training set.
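The multicollinearity assumption is commonly checked with variance inflation factors (VIF). Each descriptor j is regressed on all the others, giving VIF_j = 1/(1 − R²_j). The NumPy sketch below is a minimal version under that definition. The function name is illustrative. The often-quoted warning thresholds of 5 or 10 are rules of thumb, not fixed limits.

```python
import numpy as np


def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R^2_j), regressing descriptor j on all other descriptors."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Remaining descriptors plus an intercept column
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        vifs[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vifs


# Synthetic check: column 2 is almost a copy of column 0, column 1 is independent
rng = np.random.default_rng(0)
a, b = rng.normal(size=(2, 200))
X = np.column_stack([a, b, a + 0.05 * rng.normal(size=200)])
print(np.round(variance_inflation_factors(X), 1))
```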

3. Experimental Protocol: MLR Model Building & Validation

3.1. Data Curation and Descriptor Calculation

- Objective: To prepare a robust dataset of compounds with associated biological activity (e.g., -log(IC50), % inhibition in a catalytic oxidation assay).

- Protocol:

- Aim for at least 20 compounds per descriptor retained in the final model (a common, conservative heuristic).

- Generate 3D structures and optimize their geometries (e.g., force-field optimization with RDKit or quantum-chemical optimization with Gaussian).

- Calculate a pool of molecular descriptors (e.g., topological, electronic, geometrical) using software like Dragon, PaDEL-Descriptor, or Mordred.

- Store the dataset in a structured table (see Table 1).

Table 1: Example QSAR Dataset Structure

| Compound_ID | pActivity (Y) | LogP | Molar_Refractivity | HOMO_Energy | PSA | ... |
|---|---|---|---|---|---|---|
| Cmpd_01 | 5.21 | 3.45 | 78.91 | -9.12 | 45.6 | ... |
| Cmpd_02 | 4.87 | 2.89 | 65.34 | -8.95 | 62.3 | ... |
| ... | ... | ... | ... | ... | ... | ... |

3.2. Descriptor Selection and Model Equation Building

- Objective: To identify a minimal, significant, and non-collinear set of descriptors and derive the MLR equation.

- Protocol:

- Pre-process data: Remove constant/near-constant descriptors. Scale descriptors if necessary.

- Variable Selection: Apply a combination of:

- Filter Method: Correlation matrix analysis to remove highly inter-correlated descriptors (|r| > 0.8).

- Wrapper Method: Stepwise regression (forward/backward) using an objective criterion (e.g., Akaike Information Criterion (AIC)).

- Model Fitting: Fit the MLR model using the selected descriptors (e.g., using `statsmodels` or `scikit-learn` in Python).

- Equation Derivation: The final model takes the form:

$$\text{pActivity} = \beta_0 + \beta_1 \cdot \text{Descriptor}_1 + \beta_2 \cdot \text{Descriptor}_2 + \dots + \beta_n \cdot \text{Descriptor}_n + \varepsilon$$

Document the coefficients (β₁ … βₙ), the intercept (β₀), and the statistical metrics (see Table 2).
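The stepwise-selection and fitting steps above can be sketched with NumPy least squares. The sketch shows forward selection only: at each step it adds the descriptor that lowers the AIC most, and it stops when no addition helps. The helper names, the `D{j}` descriptor labels and the synthetic data are hypothetical. `statsmodels.api.OLS` yields the same coefficients along with standard errors and p-values.

```python
import numpy as np


def ols_fit(X, y):
    """OLS with an intercept column; returns [b0, b1, ..., bk] and the RSS."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return beta, float(resid @ resid)


def aic(rss, n, n_params):
    # Gaussian-likelihood AIC with constant terms dropped: n*ln(RSS/n) + 2k
    return n * np.log(rss / n) + 2 * n_params


def forward_stepwise_aic(X, y):
    """Greedy forward selection: add the descriptor that lowers AIC most."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    n, p = X.shape
    selected = []
    best = aic(ols_fit(X[:, selected], y)[1], n, 1)  # intercept-only model
    while len(selected) < p:
        trials = []
        for j in range(p):
            if j not in selected:
                rss = ols_fit(X[:, selected + [j]], y)[1]
                trials.append((aic(rss, n, len(selected) + 2), j))
        cand_aic, cand_j = min(trials)
        if cand_aic >= best:  # no candidate improves AIC: stop
            break
        best = cand_aic
        selected.append(cand_j)
    beta, _ = ols_fit(X[:, selected], y)
    return selected, beta


rng = np.random.default_rng(0)
X = rng.normal(size=(60, 8))
y = 1.5 + 2.0 * X[:, 1] - 1.0 * X[:, 4] + 0.1 * rng.normal(size=60)
selected, beta = forward_stepwise_aic(X, y)
terms = " + ".join(f"({b:.3f} × D{j})" for b, j in zip(beta[1:], selected))
print(f"pActivity = {beta[0]:.3f} + {terms}")
```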

3.3. Internal and External Validation

- Objective: To rigorously assess the model's predictive ability and robustness.

- Protocol:

- Data Splitting: Randomly divide the dataset (70-80% training set, 20-30% external test set).

- Internal Validation (Training Set):

- Leave-One-Out (LOO) or Leave-Many-Out (LMO) Cross-Validation: Calculate Q² (cross-validated R²).

- Y-Randomization Test: Scramble activity values and rebuild models. Ensure the original model significantly outperforms randomized models.

- External Validation (Test Set): Predict the activity of the unseen test set. Calculate key metrics (see Table 2).
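The two internal-validation checks above, LOO Q² and Y-randomization, are sketched below with plain NumPy OLS. The helper names are illustrative. This Y-randomization keeps the descriptor set fixed. A stricter variant repeats descriptor selection on every scrambled response.

```python
import numpy as np


def fit_predict(X_train, y_train, X_test):
    """Fit OLS with an intercept on the training rows and predict the test rows."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    beta, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return np.column_stack([np.ones(len(X_test)), X_test]) @ beta


def q2_loo(X, y):
    """Q^2 = 1 - PRESS / SS_tot, with PRESS from leave-one-out predictions."""
    n = len(y)
    press = 0.0
    for i in range(n):
        mask = np.arange(n) != i
        pred = fit_predict(X[mask], y[mask], X[i:i + 1])[0]
        press += (y[i] - pred) ** 2
    return 1.0 - press / np.sum((y - y.mean()) ** 2)


def r2_fit(X, y):
    """Coefficient of determination of the OLS model fitted to all rows."""
    pred = fit_predict(X, y, X)
    return 1.0 - np.sum((y - pred) ** 2) / np.sum((y - y.mean()) ** 2)


def y_randomization(X, y, n_iter=100, seed=0):
    """R^2 values of models refitted to randomly permuted activities."""
    rng = np.random.default_rng(seed)
    return np.array([r2_fit(X, rng.permutation(y)) for _ in range(n_iter)])


rng = np.random.default_rng(1)
X = rng.normal(size=(40, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.3 * rng.normal(size=40)
q2, r2 = q2_loo(X, y), r2_fit(X, y)
random_r2 = y_randomization(X, y, n_iter=100)
print(f"R² = {r2:.3f}, Q²(LOO) = {q2:.3f}, max randomized R² = {random_r2.max():.3f}")
```

A model passes the Y-randomization test when its R² clearly exceeds the R² values obtained from the scrambled activities.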

Table 2: Key Model Validation Metrics

| Metric | Formula/Description | Acceptance Threshold (Typical) |
|---|---|---|
| R² | Coefficient of determination of the fitted model: 1 − SS_res/SS_tot. | > 0.6 |
| Adjusted R² | R² adjusted for the number of descriptors p: 1 − (1 − R²)(n − 1)/(n − p − 1). | Close to R². |
| Q² (LOO) | Cross-validated R²: 1 − PRESS/SS_tot. | > 0.5 |
| RMSE | Root mean square error: √(Σ(y − ŷ)²/n). | As low as possible. |
| s | Standard error of estimation: √(SS_res/(n − p − 1)). | As low as possible. |
| F | F-statistic (ratio of regression mean square to residual mean square). | Significant (p < 0.05). |
| R²ₑₓₜ | Coefficient of determination for the external test set. | > 0.6 |
| r²ₘ | Roy's modified r² for external validation, combining the squared correlation r² with r₀² from regression through the origin: r² × (1 − √abs(r² − r₀²)). | > 0.5 |
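The metrics in Table 2 can be computed as in the NumPy sketch below. The helper names and the toy numbers are illustrative. External R² is computed here with the test-set mean; some guidelines use the training-set mean instead. r₀² follows the regression of observed on predicted values through the origin. The p-value for F can be obtained with `scipy.stats.f.sf(F, p, n - p - 1)` if SciPy is available.

```python
import numpy as np


def fit_statistics(y, y_fit, p):
    """Training-set statistics for an OLS model with intercept and p descriptors."""
    y, y_fit = np.asarray(y, dtype=float), np.asarray(y_fit, dtype=float)
    n = len(y)
    dof = n - p - 1  # residual degrees of freedom
    ss_res = np.sum((y - y_fit) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    return {
        "R2": r2,
        "adj_R2": 1.0 - (1.0 - r2) * (n - 1) / dof,
        "RMSE": np.sqrt(ss_res / n),
        "s": np.sqrt(ss_res / dof),
        # SS_reg = SS_tot - SS_res holds for OLS with an intercept
        "F": ((ss_tot - ss_res) / p) / (ss_res / dof),
    }


def external_metrics(y_obs, y_pred):
    """Test-set R^2 (test-set mean), RMSE, and Roy's r2m."""
    y_obs, y_pred = np.asarray(y_obs, dtype=float), np.asarray(y_pred, dtype=float)
    ss_tot = np.sum((y_obs - y_obs.mean()) ** 2)
    r2_ext = 1.0 - np.sum((y_obs - y_pred) ** 2) / ss_tot
    rmse = np.sqrt(np.mean((y_obs - y_pred) ** 2))
    r2 = np.corrcoef(y_obs, y_pred)[0, 1] ** 2        # squared Pearson correlation
    k = np.sum(y_obs * y_pred) / np.sum(y_pred ** 2)  # slope of y_obs = k * y_pred
    r0_2 = 1.0 - np.sum((y_obs - k * y_pred) ** 2) / ss_tot
    rm2 = r2 * (1.0 - np.sqrt(abs(r2 - r0_2)))
    return {"R2_ext": r2_ext, "RMSE": rmse, "r2": r2, "r0_2": r0_2, "rm2": rm2}


y = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y_fit = np.array([1.1, 1.9, 3.2, 3.8, 5.0])
print(fit_statistics(y, y_fit, p=1))
print(external_metrics(y, y_fit))
```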

4. The Scientist's Toolkit: Key Research Reagents & Materials

| Item | Function in MLR-QSAR Protocol |
|---|---|
| Chemical Database (e.g., PubChem, ChEMBL) | Source of bioactive compound structures and associated assay data. |
| Computational Chemistry Software (e.g., Gaussian, OpenBabel) | Geometry optimization and, with quantum-chemistry packages such as Gaussian, calculation of electronic descriptors. |
| Descriptor Calculation Software (e.g., Dragon, PaDEL) | To generate numerical representations of molecular structure. |
| Statistical Software (e.g., R, Python with pandas/statsmodels) | For data preprocessing, variable selection, MLR fitting, and validation. |
| Y-Randomization Script | Custom script that permutes activity data to test the model for chance correlation. |
| Applicability Domain Tool (e.g., based on leverage) | To define the chemical space where the model's predictions are reliable. |
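A leverage-based applicability domain can be sketched as follows. The sketch computes hat values h = xᵀ(XᵀX)⁻¹x, with an intercept column, for training and query compounds. It compares them with the warning leverage h* = 3(p + 1)/n commonly drawn on Williams plots. The function names are hypothetical, and the choice of h* is a convention rather than a fixed rule.

```python
import numpy as np


def leverages(X_train, X_query):
    """Hat values of query compounds relative to the training descriptor matrix."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    B = np.column_stack([np.ones(len(X_query)), X_query])
    XtX_inv = np.linalg.pinv(A.T @ A)
    # Row-wise quadratic form b_i (A^T A)^-1 b_i^T
    return np.einsum("ij,jk,ik->i", B, XtX_inv, B)


def warning_leverage(n_train, p):
    """Conventional Williams-plot threshold h* = 3(p + 1)/n."""
    return 3.0 * (p + 1) / n_train


rng = np.random.default_rng(0)
X_train = rng.normal(size=(50, 3))
h_star = warning_leverage(50, 3)
query = np.array([[0.1, -0.2, 0.3], [10.0, 10.0, 10.0]])
print("h* =", h_star, "query leverages:", leverages(X_train, query))
```

Compounds whose leverage exceeds h* lie outside the training chemical space, so their predictions should be treated as extrapolations.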

5. Visualization of Workflows

MLR-QSAR Model Development and Validation Workflow

Data Splitting and Validation Pathway for MLR-QSAR

Within a thesis comparing Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Multiple Linear Regression (MLR) QSAR models for predicting catalyst efficiency in catalytic oxidation systems (e.g., for pollutant degradation or synthetic chemistry), the SVM module is a critical component. Its performance depends strongly on appropriate kernel selection and rigorous hyperparameter optimization. These Application Notes provide a practical protocol for developing robust SVM-QSAR models in this research context.

Core Theoretical Framework: SVM Kernels

The kernel function implicitly maps input descriptors into a high-dimensional feature space. In that space, a linear model can capture relationships that are non-linear in the original descriptor space. The choice of kernel defines the hypothesis space of the model.

Table 1: Common SVM Kernels for QSAR Modeling

| Kernel | Mathematical Function | Key Hyperparameters | Best For |
|---|---|---|---|
| Linear | $K(\mathbf{x}_i, \mathbf{x}_j) = \mathbf{x}_i^{\top}\mathbf{x}_j$ | C (regularization) | Approximately linear structure-activity relationships, high-dimensional descriptor sets, interpretation. |
| Radial Basis Function (RBF/Gaussian) | $K(\mathbf{x}_i, \mathbf{x}_j) = \exp(-\gamma \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2)$ | C, γ (inverse kernel width) | Non-linear problems; default choice when the data structure is unknown. |
| Polynomial | $K(\mathbf{x}_i, \mathbf{x}_j) = (\gamma\,\mathbf{x}_i^{\top}\mathbf{x}_j + r)^d$ | C, γ, d (degree), r (coef0) | Controlled non-linearity; rarely superior to RBF in practice. |
| Sigmoid | $K(\mathbf{x}_i, \mathbf{x}_j) = \tanh(\gamma\,\mathbf{x}_i^{\top}\mathbf{x}_j + r)$ | C, γ, r (coef0) | Neural-network-like (tanh) behavior; use with caution, as the kernel is not positive semi-definite for all parameter values. |
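The kernel formulas in Table 1 are short enough to write out directly. The NumPy sketch below computes each Gram matrix between two sets of descriptor vectors (rows). The function names are illustrative; scikit-learn's own versions live in `sklearn.metrics.pairwise`. `scale_gamma` reproduces scikit-learn's `gamma='scale'` default, 1 / (n_features · X.var()).

```python
import numpy as np


def linear_kernel(X, Y):
    return X @ Y.T


def rbf_kernel(X, Y, gamma):
    # Squared Euclidean distances via broadcasting: shape (len(X), len(Y))
    sq_dist = np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    return np.exp(-gamma * sq_dist)


def polynomial_kernel(X, Y, gamma, r, d):
    return (gamma * (X @ Y.T) + r) ** d


def sigmoid_kernel(X, Y, gamma, r):
    return np.tanh(gamma * (X @ Y.T) + r)


def scale_gamma(X):
    """gamma = 1 / (n_features * X.var()), as in scikit-learn's gamma='scale'."""
    return 1.0 / (X.shape[1] * X.var())


rng = np.random.default_rng(0)
X = rng.normal(size=(20, 4))
K = rbf_kernel(X, X, scale_gamma(X))
print("RBF Gram matrix shape:", K.shape, "min eigenvalue:", np.linalg.eigvalsh(K).min())
```

A valid (positive semi-definite) kernel such as RBF yields a Gram matrix with no meaningfully negative eigenvalues. The sigmoid kernel does not guarantee this, which is the reason for caution noted in the table.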

Experimental Protocol: SVM-QSAR Model Development

Protocol 1: Standardized Workflow for SVM Model Implementation

Objective: To construct, optimize, and validate an SVM model for predicting the catalytic oxidation activity (e.g., conversion %, TOF, TON) from molecular/catalyst descriptors.

Materials & Software: Python (scikit-learn, pandas, numpy), Jupyter Notebook environment, standardized QSAR dataset (cleaned, descriptors calculated, endpoint normalized).

Procedure:

- Data Preparation: Split pre-processed dataset into training (70-80%) and hold-out test (20-30%) sets. Scale features (e.g., StandardScaler) using only training set statistics to avoid data leakage.

- Initial Kernel Screening: Train preliminary SVM models (with default C=1.0, γ='scale') using Linear, RBF, and Polynomial kernels on the training set. Assess via 5-fold cross-validated R² or RMSE.

- Hyperparameter Optimization (Grid Search CV):

- Define a hyperparameter grid. Example for RBF: `param_grid = {'C': [0.1, 1, 10, 100, 1000], 'gamma': [0.001, 0.01, 0.1, 1, 'scale', 'auto']}`

- Instantiate `GridSearchCV(SVR(kernel='rbf'), param_grid, cv=5, scoring='neg_root_mean_squared_error', n_jobs=-1)`.

- Fit the grid search object to the scaled training data.

- Identify the best parameters (`best_params_`).

- Final Model Training & Validation:

- Train a final SVM model on the entire training set using the optimized hyperparameters.

- Predict on the held-out test set (scaled with training set scaler) for final unbiased evaluation.
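The procedure above can be sketched end to end in scikit-learn. One deliberate variation from the text: `StandardScaler` sits inside a `Pipeline`, so it is refit on each cross-validation training fold and no validation-fold statistics leak into scaling. For that reason the grid keys carry the `svr__` step prefix. The data come from `make_friedman1`, a non-linear synthetic benchmark standing in for real catalyst descriptors and an activity endpoint such as TOF.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import GridSearchCV, cross_val_score, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Placeholder data: 10 descriptors, 5 of which drive a non-linear response
X, y = make_friedman1(n_samples=200, n_features=10, noise=1.0, random_state=0)

# Step 1: hold-out split (80/20)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)


def svr_pipeline(kernel):
    # Scaler inside the pipeline is refit on each CV training fold
    return Pipeline([("scaler", StandardScaler()), ("svr", SVR(kernel=kernel))])


# Step 2: kernel screening with defaults (C=1.0, gamma='scale')
for kernel in ("linear", "rbf", "poly"):
    cv_r2 = cross_val_score(svr_pipeline(kernel), X_train, y_train, cv=5, scoring="r2")
    print(f"{kernel:>6}: mean 5-fold CV R² = {cv_r2.mean():.3f}")

# Step 3: grid search for the RBF kernel
param_grid = {"svr__C": [0.1, 1, 10, 100, 1000],
              "svr__gamma": [0.001, 0.01, 0.1, 1, "scale", "auto"]}
search = GridSearchCV(svr_pipeline("rbf"), param_grid, cv=5,
                      scoring="neg_root_mean_squared_error", n_jobs=-1)
search.fit(X_train, y_train)
print("best parameters:", search.best_params_)

# Step 4: with refit=True (the default) the best pipeline is retrained on the
# whole training set; evaluate it once on the untouched test set
y_pred = search.predict(X_test)
print("test R²:", r2_score(y_test, y_pred))
print("test RMSE:", np.sqrt(mean_squared_error(y_test, y_pred)))
```

Keeping the scaler in the pipeline also means `search.predict` applies the training-set scaling to the test compounds automatically, which satisfies the "scaled with training set scaler" requirement in the final step.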