Property-Guided Generation: Revolutionizing Catalyst Design for Biomedical Applications

This article provides a comprehensive guide to property-guided generation for catalyst activity optimization, tailored for researchers and drug development professionals.

Abstract

This article provides a comprehensive guide to property-guided generation for catalyst activity optimization, tailored for researchers and drug development professionals. We explore the foundational concepts of chemical space navigation and property prediction models. We detail methodological workflows for integrating generative AI with catalyst design, including practical applications in pharmaceutical synthesis. The article addresses common challenges in model training, data scarcity, and multi-property optimization. Finally, we present validation frameworks and comparative analyses against traditional high-throughput and DFT methods, highlighting the transformative potential of AI-driven catalyst discovery for accelerating biomedical innovation.

Navigating Chemical Space: The Foundation of Property-Guided Catalyst Design

Defining Property-Guided Generation in Catalyst Optimization

Application Notes

Property-guided generation (PGG) is an emerging computational paradigm within catalyst optimization research. It integrates target property prediction directly into the generative process, steering the exploration of chemical space toward regions with desired catalytic performance metrics (e.g., activity, selectivity, stability). This contrasts with traditional sequential approaches of generate-then-screen, enabling more efficient and focused discovery cycles. The core thesis is that applying property guidance during generation, rather than after, drastically reduces the resource-intensive experimental validation bottleneck inherent in catalyst development.

The methodology typically combines a generative model (e.g., variational autoencoder, generative adversarial network, or language model for molecules) with one or more property predictors. The generator is conditioned on a desired property target, either through latent space optimization, reinforcement learning rewards, or gradient-based steering from differentiable property models. This closed-loop design is critical for exploring complex, non-linear relationships between catalyst structure and function.

Table 1: Quantitative Comparison of PGG Methodologies in Recent Catalyst Studies

| Study Focus | Generative Model | Guiding Property(ies) | Success Metric | Reported Efficiency Gain vs. Random Search |

|---|---|---|---|---|

| Heterogeneous Metal Alloy Discovery | Crystal Graph VAE | Adsorption Energy, Stability | Novel, stable alloys with target ΔEads | ~50x faster discovery |

| Homogeneous Organocatalyst Design | SMILES-based RNN | Enantioselectivity (ee%) | High-ee catalysts synthesized & validated | ~30x more likely to find ee >90% |

| Electrochemical CO₂ Reduction | Conditional GAN | Overpotential, Product Selectivity | Identified promising molecular complexes | 15x reduction in candidates to test |

Experimental Protocols

Protocol 1: Latent Space Optimization for Heterogeneous Catalyst Discovery

This protocol details a workflow for generating novel metal surface alloys guided by adsorption energy targets.

- Data Curation: Assemble a database of known bulk and surface structures with associated computed adsorption energies for key intermediates (e.g., *COOH for CO₂ reduction). Use DFT calculations (e.g., VASP, Quantum ESPRESSO) to ensure consistency.

- Model Training: Train a Crystal Graph Variational Autoencoder (CGVAE) on the structural data. The encoder maps crystal structures to a continuous latent vector (z); the decoder reconstructs structures from z.

- Property Predictor Training: Train a separate feed-forward neural network to predict target adsorption energy from the latent vector z.

- Property-Guided Generation:
  a. Define the target property value (e.g., optimal ΔEads = -0.8 eV).
  b. Initialize a population of random latent vectors.
  c. Use a gradient-based optimizer (e.g., Adam) to iteratively update the latent vectors by minimizing the loss: Loss = |P_pred(z) - P_target| + λ * ‖z‖, where P_pred(z) is the predicted property, P_target is the target value, and λ weights a norm penalty on z that discourages drifting far from the latent region seen during training.
  d. Decode the optimized latent vectors to generate candidate crystal structures.

- Validation: Perform full DFT relaxation and energy calculation on the top-generated candidates to verify properties.
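The latent-space update in step c can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the protocol's actual implementation: the trained property network is replaced by a hypothetical linear surrogate (`W`, `B`), the norm term is taken to be the L2 norm, and plain subgradient descent stands in for Adam so the example needs only the standard library.

```python
import math
import random

# Hypothetical linear surrogate P_pred(z) = W . z + B; it stands in for the
# trained feed-forward property predictor and is NOT a real model.
W = [0.5, -0.3, 0.2, 0.1]
B = -0.3

def predict(z):
    """Predicted adsorption energy (eV) for latent vector z."""
    return sum(wi * zi for wi, zi in zip(W, z)) + B

def loss(z, target, lam):
    """Loss = |P_pred(z) - P_target| + lam * ||z||_2, as in step c."""
    norm = math.sqrt(sum(zi * zi for zi in z))
    return abs(predict(z) - target) + lam * norm

def optimize_latent(z0, target, lam=0.01, lr=0.05, steps=500):
    """Plain subgradient descent on the loss (a real workflow would use Adam)."""
    z = list(z0)
    for _ in range(steps):
        err = predict(z) - target
        sign = (err > 0) - (err < 0)                       # d|err|/d(err)
        norm = math.sqrt(sum(zi * zi for zi in z)) or 1.0  # avoid division by zero
        grad = [sign * wi + lam * zi / norm for wi, zi in zip(W, z)]
        z = [zi - lr * gi for zi, gi in zip(z, grad)]
    return z

rng = random.Random(0)
z_start = [rng.gauss(0.0, 1.0) for _ in W]     # step b: random initial latent vector
z_opt = optimize_latent(z_start, target=-0.8)  # step a: target dE_ads = -0.8 eV
print(f"predicted dE_ads: {predict(z_start):.3f} -> {predict(z_opt):.3f} eV")
```

With a fixed step size the prediction settles into a small oscillation around the target; a decaying learning rate or an adaptive optimizer would tighten this. The optimized vector would then be passed to the decoder (step d).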

Protocol 2: Reinforcement Learning for Organocatalyst Optimization

This protocol uses RL to optimize a generative model for organic molecules toward a multi-property objective.

- Agent Setup: Implement a Recurrent Neural Network (RNN) as the policy network (agent) that generates SMILES strings token-by-token.

- Environment & Reward Definition: The environment consists of property prediction models. The reward function R is a weighted sum: R = w1 * Activity_Score + w2 * Selectivity_Score - w3 * Synthetic_Accessibility_Penalty.

- Training Loop:
  a. The agent generates a batch of molecules (SMILES).
  b. Each molecule is passed through the predictor models to compute the component scores.
  c. The policy gradient (e.g., REINFORCE) is computed using the final reward to update the RNN, increasing the probability of generating molecules with high R.

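- Pre-training and Fine-Tuning: Before starting the RL loop, pre-train the RNN on a large corpus of organic molecules so that it reliably generates valid SMILES. The RL loop then fine-tunes this pre-trained model toward the specific catalytic property targets.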

- Synthesis & Testing: Select top-ranked molecules for experimental synthesis and catalytic performance testing in the relevant reaction.
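The reward definition and the REINFORCE update can be illustrated with a deliberately small toy. In this sketch the RNN policy is replaced by a softmax over four hypothetical candidate molecules with fixed, made-up component scores, and the batch-mean reward is used as a baseline; the weights `w1`, `w2`, `w3` and all scores are assumptions for illustration only.

```python
import math
import random

def reward(activity, selectivity, sa_penalty, w1=1.0, w2=1.0, w3=0.5):
    """R = w1 * Activity_Score + w2 * Selectivity_Score - w3 * SA_Penalty."""
    return w1 * activity + w2 * selectivity - w3 * sa_penalty

def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce(scores, steps=2000, lr=0.1, batch=16, seed=0):
    """REINFORCE on a softmax policy over a fixed candidate set (toy stand-in for the RNN)."""
    rng = random.Random(seed)
    rewards = [reward(*s) for s in scores]
    logits = [0.0] * len(scores)
    for _ in range(steps):
        probs = softmax(logits)
        actions = rng.choices(range(len(scores)), weights=probs, k=batch)
        baseline = sum(rewards[a] for a in actions) / batch  # variance reduction
        grad = [0.0] * len(logits)
        for a in actions:
            advantage = rewards[a] - baseline
            for i in range(len(logits)):
                # d log pi(a) / d logit_i = 1[i == a] - pi(i)
                grad[i] += advantage * ((1.0 if i == a else 0.0) - probs[i]) / batch
        logits = [l + lr * g for l, g in zip(logits, grad)]  # gradient ascent on E[R]
    return softmax(logits)

# Hypothetical (activity, selectivity, synthetic-accessibility penalty) per candidate
scores = [(0.9, 0.8, 0.2), (0.5, 0.9, 0.1), (0.7, 0.4, 0.9), (0.2, 0.3, 0.0)]
probs = reinforce(scores)
print([round(p, 3) for p in probs])
```

In the full protocol the same advantage-weighted log-probability gradient is accumulated over every token of each sampled SMILES string rather than over a single categorical choice.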

Visualizations

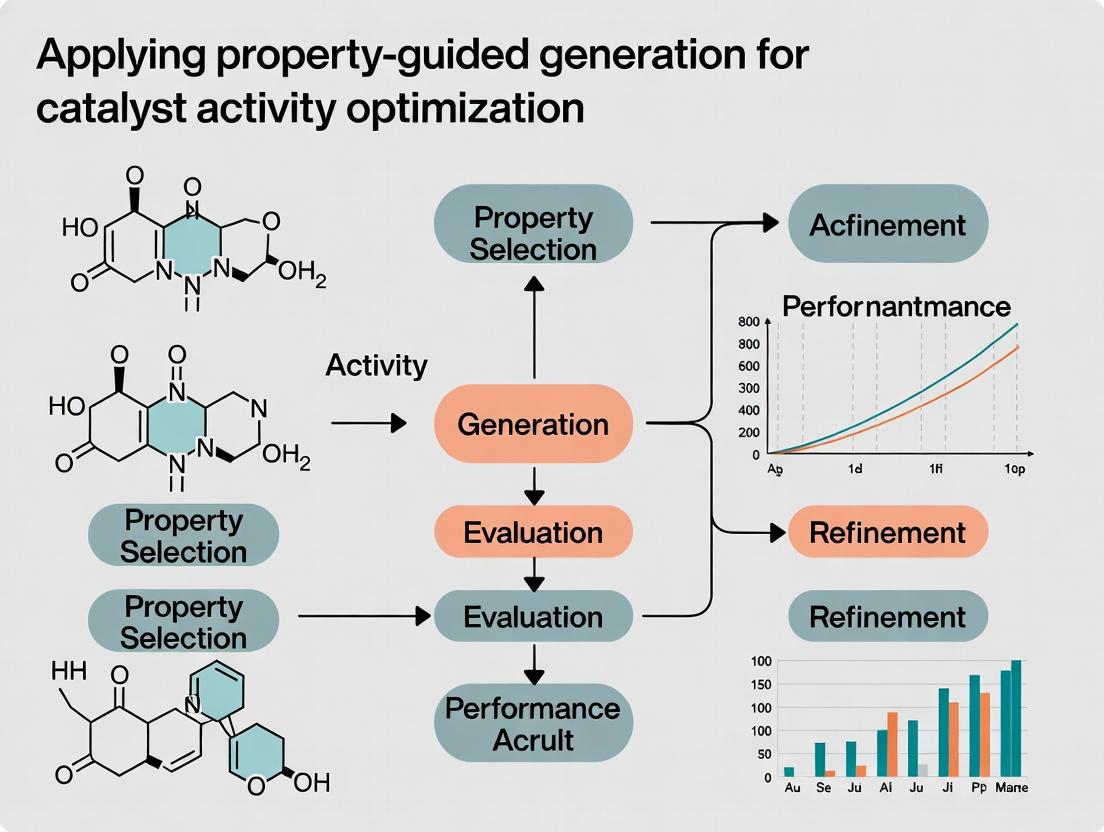

Title: Core PGG Closed-Loop Workflow

Title: RL-Based Property-Guided Generation

The Scientist's Toolkit: Research Reagent Solutions

| Item / Resource | Function in PGG for Catalysis |

|---|---|

| Quantum Chemistry Software (VASP, Gaussian, ORCA) | Provides high-fidelity data (e.g., adsorption energies, transition state energies) for training property predictors and final candidate validation. |

| Machine Learning Libraries (PyTorch, TensorFlow, JAX) | Enables the construction, training, and deployment of generative models and property prediction neural networks. |

| Chemical Libraries (e.g., ZINC, QM9, Materials Project) | Source of foundational chemical/materials structures for pre-training generative models to learn valid chemical rules. |

| Automated Reaction Screening Platforms | Enables medium- to high-throughput experimental validation of top computational candidates, closing the design loop. |

| Differentiable ML Force Fields (e.g., MACE, NequIP) | Allows for gradient-based property guidance with respect to atomic coordinates, crucial for 3D structure optimization. |

| Open Catalyst Dataset (OC20/OC22) | Large-scale dataset of DFT calculations for catalyst surfaces; essential for training robust models in heterogeneous catalysis. |

Within the broader theme of applying property-guided generation to catalyst activity optimization, this application note defines the core catalytic properties (selectivity, turnover frequency (TOF), and stability) that serve as the primary optimization targets. Systematic measurement and improvement of these interlinked properties are critical for the rational design of high-performance catalysts in pharmaceuticals, fine chemicals, and energy applications.

Core Properties: Definitions and Quantitative Benchmarks

Table 1: Key Catalyst Property Metrics and Target Ranges

| Property | Definition | Key Metric(s) | Desirable Range (Heterogeneous Catalysis) | Desirable Range (Homogeneous/Enzymatic) |

|---|---|---|---|---|

| Selectivity | The ability to direct the reaction towards a desired product. | Selectivity (%) = (Moles desired product / Moles total products) x 100 | >95% for fine chemicals | >99% for chiral pharmaceutical intermediates |

| Turnover Frequency (TOF) | The number of reactant molecules a catalyst site converts per unit time. | TOF (h⁻¹ or s⁻¹) = (Moles converted) / (Moles active sites × Time) | 10 - 10⁵ h⁻¹ (highly variable) | 1 - 10⁶ h⁻¹ (enzyme typical: 10²-10⁵ s⁻¹) |

| Stability | The ability to maintain activity and selectivity over time or cycles. | TON (Total Turnover Number) or Lifetime (h); % Initial Activity retained after N cycles/time. | TON > 10⁶; <20% deactivation over 1000h | TON > 10⁵; <10% deactivation over 100 cycles |

Experimental Protocols

Protocol 1: Assessing Intrinsic Activity via Turnover Frequency (TOF)

Objective: To measure the intrinsic activity of a solid metal nanoparticle catalyst for a model hydrogenation reaction.

Materials: Catalyst (e.g., 1 wt% Pt/Al₂O₃), Substrate (e.g., nitrobenzene), Hydrogen gas (H₂), Solvent (e.g., ethanol), High-Pressure Reactor, GC/MS.

Procedure:

- Active Site Counting (Chemisorption): Pre-reduce 100 mg catalyst under H₂ flow (300°C, 2h). Cool to 35°C. Perform pulsed CO chemisorption using a Micromeritics analyzer. Calculate active metal sites assuming a 1:1 CO:Pt stoichiometry.

- Kinetic Reaction: Charge reactor with 50 mg catalyst, 10 mmol substrate in 20 mL solvent. Purge with H₂, pressurize to 10 bar H₂, heat to 80°C with vigorous stirring (1200 rpm) to eliminate external diffusion.

- Initial Rate Measurement: Monitor pressure drop/H₂ consumption or take small aliquots at very low conversion (<10%, typically within first 5 min). Analyze by GC.

- Calculation: TOF = (Moles of substrate converted at t→0) / (Moles of surface active sites from step 1 × Reaction time in hours).
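As a worked example of the calculation step, the short sketch below converts a CO uptake into moles of surface sites and then into a TOF. All numerical inputs (CO uptake, converted moles, sampling time) are hypothetical values chosen only to be consistent with the quantities in the procedure; the function names are illustrative.

```python
def active_sites_mol(co_uptake_umol_per_g, catalyst_mass_g, co_per_metal=1.0):
    """Moles of surface metal sites from pulsed CO chemisorption.

    co_per_metal is the assumed adsorption stoichiometry (1:1 CO:Pt in the protocol).
    """
    return co_uptake_umol_per_g * 1e-6 * catalyst_mass_g / co_per_metal

def tof_per_hour(moles_converted, moles_sites, time_h):
    """TOF (h^-1) = moles converted / (moles of active sites * time in hours)."""
    return moles_converted / (moles_sites * time_h)

# Hypothetical inputs: 20 umol CO per g catalyst, 50 mg catalyst in the reactor,
# 0.5 mmol substrate converted (5% of 10 mmol) after 5 minutes.
sites = active_sites_mol(co_uptake_umol_per_g=20.0, catalyst_mass_g=0.050)
tof = tof_per_hour(moles_converted=0.5e-3, moles_sites=sites, time_h=5 / 60)
print(f"surface sites: {sites:.2e} mol, TOF: {tof:.0f} h^-1 ({tof / 3600:.2f} s^-1)")
```

Because the conversion is kept below 10%, the measured rate approximates the initial rate and the TOF is not distorted by substrate depletion.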

Protocol 2: Evaluating Chemoselectivity in Multi-Functional Substrates

Objective: To determine the chemoselectivity of a heterogeneous catalyst for the hydrogenation of a carbonyl group over an alkene.

Materials: Catalyst (e.g., supported Ru or Pt), Substrate (e.g., cinnamaldehyde), H₂, Reactor, GC/MS.

Procedure:

- Charge reactor with catalyst (substrate/metal molar ratio = 500), 5 mmol cinnamaldehyde in solvent.

- Conduct reaction at mild conditions (e.g., 50°C, 5 bar H₂, 30 min) to achieve partial conversion (20-40%).

- Quench reaction, separate catalyst via filtration.

- Analysis: Quantify remaining cinnamaldehyde, cinnamyl alcohol (desired, from C=O hydrogenation), and hydrocinnamaldehyde (undesired, from C=C hydrogenation) via calibrated GC. Calculate: Carbonyl Hydrogenation Selectivity = [Moles cinnamyl alcohol] / ([Moles cinnamyl alcohol] + [Moles hydrocinnamaldehyde]) × 100%.

Protocol 3: Accelerated Stability Test (Cycling)

Objective: To assess the recyclability and deactivation of a homogeneous organometallic catalyst.

Materials: Catalyst complex (e.g., Pd/XPhos), Substrate, Base, Solvent, Inert atmosphere glovebox, UPLC.

Procedure:

- Under inert atmosphere, set up a cross-coupling reaction (e.g., Suzuki-Miyaura) with a substrate:catalyst ratio of 100:1.

- After completion (monitored by UPLC), cool reaction mixture.

- Recovery: For homogeneous catalysts, remove solvent under vacuum. Wash residue with a non-coordinating solvent to remove organic by-products. Dry and weigh recovered catalyst. For heterogeneous catalysts, filter, wash, and dry.

- Recycle: Recharge reactor with fresh substrate and solvent. Add the recovered catalyst. Repeat reaction under identical conditions.

- Repeat for 5-10 cycles. Plot % yield or TOF vs. cycle number. Calculate average deactivation rate per cycle.
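The final step reduces to simple arithmetic on the per-cycle results. The sketch below, using hypothetical yields, computes the average loss per recycle and the fraction of initial activity retained; the function names are illustrative.

```python
def deactivation_per_cycle(yields):
    """Average drop in yield (percentage points) per recycle step."""
    if len(yields) < 2:
        raise ValueError("need results from at least two cycles")
    return (yields[0] - yields[-1]) / (len(yields) - 1)

def activity_retained(yields):
    """Percent of the first-cycle yield still obtained in the last cycle."""
    return 100.0 * yields[-1] / yields[0]

yields = [95.0, 93.0, 90.0, 88.0, 85.0]  # hypothetical % yield for cycles 1-5
print(f"average loss: {deactivation_per_cycle(yields):.2f} points per cycle")
print(f"activity retained after {len(yields)} cycles: {activity_retained(yields):.1f}%")
```

The same functions apply unchanged if TOF values are tracked per cycle instead of yields.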

Visualization of Property-Guided Catalyst Optimization

Diagram Title: Workflow for Property-Guided Catalyst Generation & Optimization

Diagram Title: Interdependence of Key Catalyst Properties on Material Traits

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Catalyst Property Evaluation

| Item/Reagent | Primary Function | Example & Rationale |

|---|---|---|

| Chemisorption Analyzer | Quantifies active metal surface sites for accurate TOF calculation. | Micromeritics AutoChem II: For pulsed CO/H₂ chemisorption to count surface atoms. |

| Standard Catalytic Test Materials | Provides benchmarked reactions for comparing intrinsic properties. | EUROPT-1 (Pt/SiO₂), NIST Pd/Al₂O₃: Certified reference catalysts for hydrogenation. |

| Chiral Ligand Kits | Enables rapid screening for enantioselectivity optimization. | Sigma-Aldrich Chiral Ligand Toolkit: Array of phosphines and N-heterocyclic carbenes for asymmetric synthesis. |

| Leaching Test Kits | Distinguishes homogeneous vs. heterogeneous catalysis and assesses stability. | Hot Filtration Test Setup; ICP-MS Sample Vials: To detect and quantify metal leaching. |

| Accelerated Aging Chambers | Simulates long-term deactivation mechanisms (sintering, coking) in compressed time. | Anton Paar High-Pressure Reactor with in-situ spectroscopy ports: For operando stability studies under harsh conditions. |

| Computational Descriptor Databases | Provides input features for ML-based property-guided generation. | Catalysis-Hub.org, NOMAD Repository: DFT-calculated adsorption energies and reaction pathways for thousands of materials. |

The Role of Molecular Descriptors and Quantum Chemical Features

Application Notes

When property-guided generation is applied to catalyst activity optimization, molecular descriptors and quantum chemical features serve as the foundational numerical representation of molecular systems. They translate complex molecular and electronic structures into quantitative data that can be processed by machine learning (ML) models to predict catalytic activity, selectivity, and stability, thereby guiding the in silico generation of novel catalyst candidates.

Molecular Descriptors (e.g., molecular weight, number of rotatable bonds, topological indices, structural fingerprints) provide information on the physical, topological, and substructural characteristics of a molecule or catalyst complex. They are computationally inexpensive to calculate and are crucial for establishing initial structure-property relationships (SPR).

Quantum Chemical Features are derived from electronic structure calculations (e.g., Density Functional Theory - DFT). They encode the electronic environment governing catalytic mechanisms, such as:

- Frontier Molecular Orbital Energies: HOMO (Highest Occupied Molecular Orbital) and LUMO (Lowest Unoccupied Molecular Orbital) energies, governing electron donation/acceptance.

- Partial Atomic Charges & Spin Densities: Electron distribution, critical for understanding reaction sites.

- Reaction Energies & Barrier Heights: Key thermodynamic and kinetic descriptors for activity prediction.

- Vibrational Frequencies: Insights into stability and intermediate species.

The integration of both descriptor classes into ML-driven workflows enables property-guided generation. Generative models (e.g., VAEs, GANs, Diffusion Models) use these features as conditioning parameters or as targets for predictive models to score and iteratively refine generated molecular structures toward optimal catalytic profiles.

Experimental Protocols

Protocol 2.1: Calculation of Standard Molecular Descriptor Sets for Organometallic Complexes

Objective: To generate a consistent set of 2D/3D molecular descriptors for a library of transition metal catalyst candidates.

- Structure Preparation: Optimize ligand and catalyst complex geometries using molecular mechanics in software like RDKit or Open Babel. MMFF94 is parameterized mainly for organic molecules, so it suits free ligands; UFF is the usual choice for metal-containing complexes. Ensure correct protonation states and stereochemistry.

- Descriptor Calculation: Use the RDKit (Python) or PaDEL-Descriptor software to compute a comprehensive set.

- 2D Descriptors: Constitutional (atom counts, molecular weight), topological (Balaban J, connectivity indices), electrostatic (partial charge descriptors), and functional group fingerprints.

- 3D Descriptors: Principal Moments of Inertia, Radius of Gyration, 3D-MoRSE descriptors, WHIM descriptors.

- Data Curation: Remove descriptors with zero variance or high correlation (>0.95). Normalize remaining descriptors (e.g., Min-Max scaling).

- Output: A structured `.csv` file with rows as compounds and columns as normalized descriptor values.
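The data curation step (dropping zero-variance and highly correlated descriptors, then Min-Max scaling) can be sketched as follows. This is a standard-library illustration on a tiny made-up table; in practice the same logic is usually written with pandas or scikit-learn. The descriptor names and values are hypothetical, and the greedy "keep the first of a correlated pair" rule is one common convention, assumed here.

```python
import math

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length, non-constant columns."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)

def curate(table, corr_cutoff=0.95):
    """table maps descriptor name -> list of values (one per compound)."""
    kept = {}
    for name, col in table.items():
        if max(col) == min(col):
            continue  # zero variance: carries no information
        if any(abs(pearson(col, prev)) > corr_cutoff for prev in kept.values()):
            continue  # redundant with a descriptor that was already kept
        kept[name] = col
    scaled = {}
    for name, col in kept.items():
        lo, hi = min(col), max(col)
        scaled[name] = [(x - lo) / (hi - lo) for x in col]  # Min-Max scaling to [0, 1]
    return scaled

# Hypothetical descriptor table for four catalyst candidates
table = {
    "mol_weight": [300.0, 350.0, 420.0, 500.0],
    "heavy_atoms": [20.0, 24.0, 29.0, 35.0],   # nearly collinear with mol_weight
    "n_metal": [1.0, 1.0, 1.0, 1.0],           # constant column
    "balaban_j": [1.8, 2.4, 1.5, 2.1],
}
curated = curate(table)
print(sorted(curated))
```

Here `heavy_atoms` is dropped for being almost perfectly correlated with `mol_weight`, and `n_metal` is dropped for having no variance.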

Protocol 2.2: Computation of Quantum Chemical Features via DFT

Objective: To calculate electronic structure features for catalyst activity prediction, focusing on a key catalytic intermediate.

- Initial Geometry: Start with a pre-optimized molecular structure (from Protocol 2.1).

- Software & Method Selection: Use Gaussian 16, ORCA, or PySCF. Select a functional appropriate for organometallics (e.g., B3LYP-D3, ωB97X-D) and a basis set (e.g., def2-SVP for geometry, def2-TZVP for single-point energy).

- Geometry Optimization: Fully optimize the structure to the energy minimum, confirming no imaginary frequencies.

- Single-Point Energy Calculation: Perform a higher-accuracy calculation on the optimized geometry to obtain precise electronic energies.

- Feature Extraction: Use the output to calculate:

  - `HOMO_Energy`, `LUMO_Energy`, `HOMO-LUMO_Gap`
  - `Fukui_Indices` (for electrophilic/nucleophilic attack)
  - `Mulliken_or_NBO_Charges` on the metal center and key ligand atoms
  - `Binding_Energy` of substrate to catalyst (if applicable): E(complex) - E(catalyst) - E(substrate)

- Validation: Compare calculated values for a known benchmark system (e.g., [Fe]-hydrogenase model) with literature values to ensure methodological accuracy.
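Two of the extracted features are simple differences of computed energies, which the sketch below makes explicit. The total energies are hypothetical placeholders (in hartree), not results from any calculation, and the function names are illustrative.

```python
HARTREE_TO_KCAL_PER_MOL = 627.5095  # standard conversion factor

def homo_lumo_gap(homo_ev, lumo_ev):
    """HOMO-LUMO gap in eV from frontier orbital energies in eV."""
    return lumo_ev - homo_ev

def binding_energy(e_complex, e_catalyst, e_substrate):
    """E(complex) - E(catalyst) - E(substrate); negative values indicate favorable binding."""
    return e_complex - e_catalyst - e_substrate

gap = homo_lumo_gap(homo_ev=-6.12, lumo_ev=-2.05)

# Hypothetical single-point total energies in hartree
e_bind_ha = binding_energy(e_complex=-1250.532, e_catalyst=-1100.310, e_substrate=-150.200)
e_bind_kcal = e_bind_ha * HARTREE_TO_KCAL_PER_MOL
print(f"gap = {gap:.2f} eV, binding energy = {e_bind_kcal:.1f} kcal/mol")
```

All three energies must come from the same functional and basis set for the difference to be meaningful; corrections such as basis set superposition error are outside the scope of this sketch.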

Protocol 2.3: Training a Property-Guided Generative Model for Catalysts

Objective: To train a conditional generative model that proposes new catalyst structures based on desired quantum chemical property targets.

- Data Assembly: Create a unified dataset combining the molecular descriptors (Protocol 2.1) and quantum features (Protocol 2.2) for a training library of known catalysts.

- Model Architecture: Implement a Conditional Variational Autoencoder (CVAE). The condition vector (`c`) is the set of target properties (e.g., high HOMO energy, low ΔE‡).

- Representation: Encode molecular structures as SMILES strings and convert to a one-hot encoded or learned tensor representation.

- Training: Train the CVAE to reconstruct input molecules while aligning the latent space distribution with a prior (e.g., Gaussian) and respecting the condition vector `c`.

- Generation & Screening: Sample from the latent space under a new condition `c*` (desired activity profile). Decode samples to generate novel SMILES. Filter out syntactically invalid structures.

- Validation: Pass generated candidates through a pre-trained predictive model (trained on the same data) to estimate their target properties. Select top candidates for further in silico or experimental validation.

Data Presentation

Table 1: Comparison of Key Molecular Descriptor and Quantum Feature Categories

| Category | Specific Examples | Calculation Speed | Information Captured | Primary Role in Catalyst Optimization |

|---|---|---|---|---|

| Constitutional Descriptors | Molecular Weight, Heavy Atom Count | Very Fast | Bulk physical properties | Initial filtering for drug-likeness or ligand sterics. |

| Topological Descriptors | Balaban J, Zagreb Index | Very Fast | Molecular connectivity/branching | Correlate with ligand backbone flexibility and accessibility. |

| Geometric Descriptors | Radius of Gyration, Principal Moments | Fast (req. 3D struct.) | Overall molecular shape & size | Relate to steric bulk and binding pocket fit. |

| Quantum Chemical Features | HOMO/LUMO Energy, Fukui Indices | Slow (DFT required) | Electronic structure & reactivity | Directly predict catalytic activity/selectivity; guide generative models. |

| Chemical Fragments | MACCS Keys, ECFP4 Fingerprints | Fast | Presence of functional groups | Ensure key catalytic moieties (e.g., phosphine, N-heterocyclic carbene) are retained. |

Table 2: Example DFT-Calculated Quantum Features for Hypothetical Ruthenium Olefin Metathesis Catalysts

| Catalyst ID | SMILES Representation | HOMO (eV) | LUMO (eV) | Gap (eV) | NBO Charge (Ru) | Predicted ΔG‡ (kcal/mol) |

|---|---|---|---|---|---|---|

| Cat_Ref | Ru(Cl)(PH3)([H]C1C=CC=C1) | -6.12 | -2.05 | 4.07 | +0.31 | 12.5 (Lit. 12.1) |

| CatGen1 | Ru(Cl)(N(C)(C))(C1=NC=CC=C1) | -5.87 | -1.92 | 3.95 | +0.28 | 10.8 |

| CatGen2 | Ru(I)([H]C1C=CC=C1)(SC(C)C) | -6.45 | -2.33 | 4.12 | +0.35 | 14.2 |

Visualizations

Property-Guided Catalyst Generation & Optimization Workflow

Logical Relationship Between Descriptors, Models, and Design

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions & Computational Tools

| Item / Software | Category | Primary Function in Research |

|---|---|---|

| RDKit | Open-Source Cheminformatics | Calculates 2D/3D molecular descriptors, handles SMILES I/O, and provides core cheminformatics functions. |

| Gaussian 16 / ORCA | Quantum Chemistry Software | Performs DFT calculations to compute quantum chemical features (HOMO, LUMO, charges, energies). |

| PySCF | Python-based QM Framework | Enables automated, high-throughput quantum feature calculation for large virtual libraries. |

| PyTorch / TensorFlow | Deep Learning Framework | Builds and trains predictive ML models and conditional generative models (VAEs, GANs). |

| conda (conda-forge channel) | Package/Environment Manager | Manages isolated software environments with pinned versions of chemistry and ML libraries. |

| Def2 Basis Sets | Computational Chemistry | Balanced, accurate basis sets for DFT calculations on transition metals and organic ligands. |

| Cambridge Structural Database (CSD) | Experimental Data Repository | Provides reference crystallographic geometries for catalyst complexes and ligands. |

| Jupyter Notebook / Lab | Interactive Computing | Platform for exploratory data analysis, model prototyping, and result visualization. |

Application Notes: Property-Guided Catalyst Generation

Generative models are revolutionizing the discovery of novel catalytic materials by enabling the exploration of vast chemical spaces under targeted property constraints. Within catalyst activity optimization research, these models learn from known catalyst structures and their associated performance data to propose new candidates with enhanced predicted properties, such as activity, selectivity, and stability.

1.1 Variational Autoencoders (VAEs) in Catalyst Design

VAEs provide a probabilistic framework for encoding molecular or crystal structures into a continuous, low-dimensional latent space. This allows for smooth interpolation and targeted sampling of structures with desired properties. In catalyst research, conditional VAEs are trained on datasets like the Open Quantum Materials Database (OQMD) or Catalysis-Hub.org, using properties like adsorption energies of key intermediates (e.g., *H, *O, *CO) as conditions. This enables the generation of new bulk or surface structures predicted to have optimal binding energies.

1.2 Generative Adversarial Networks (GANs) for Surface Structure

GANs, through their adversarial training, can generate high-fidelity and novel atomic configurations. They are particularly useful for generating realistic surface atom arrangements or nanoparticle morphologies. A common application is the generation of potential bimetallic alloy surfaces, where the generator creates candidate atomic coordinate sets, and the discriminator evaluates their plausibility against known stable surfaces from computational databases.

1.3 Graph Neural Networks (GNNs) for Molecular and Solid-State Catalysts

GNNs natively operate on graph-structured data, making them ideal for representing molecules and materials where atoms are nodes and bonds are edges. Generative GNNs, such as GraphVAE or MolGAN, can construct molecules atom-by-atom. For periodic solid catalysts, GNNs that account for 3D periodic boundary conditions can generate novel crystal graphs. These models are guided by target properties like formation energy, band gap, or activity descriptors calculated via Density Functional Theory (DFT).

Table 1: Comparative Summary of Generative Models for Catalyst Design

| Model Type | Key Mechanism | Typical Catalyst Input | Generated Output | Primary Guidance Property | Key Advantage | Key Challenge |

|---|---|---|---|---|---|---|

| VAE | Encoder-Decoder with Latent Space Regularization | SMILES strings, Crystal Graphs | Continuous latent space, decoded to structures | Adsorption energy, Formation Energy | Smooth, explorable latent space | Can generate invalid/implausible structures |

| GAN | Adversarial Training (Generator vs. Discriminator) | Atomic coordinate matrices, 2D/3D voxel grids | New coordinate sets or voxel maps | Stability score (from discriminator), Activity | High-fidelity, novel samples | Training instability, Mode collapse |

| Graph Neural Network (Generative) | Message Passing & Graph Construction | Molecular Graphs, Crystal Graphs | New graphs (atoms & bonds) | Target DFT-calculated descriptor (e.g., d-band center) | Native representation of relational structure | Complexity in enforcing valency and periodicity |

Experimental Protocols

Protocol 2.1: Training a Conditional VAE for Transition Metal Oxide Catalyst Generation

Objective: To generate novel ternary metal oxide structures with predicted low overpotential for the Oxygen Evolution Reaction (OER).

- Data Curation: Assemble a dataset from the Inorganic Crystal Structure Database (ICSD). Filter for ternary metal oxides (A_xB_yO_z). Compute the OER activity descriptor (e.g., theoretical overpotential via DFT-calculated *O and *OOH adsorption energies) for each stable compound.

- Representation: Convert each crystal structure into a standardized representation: a) Compositional vector, and b) Unit cell and fractional coordinates normalized via Wyckoff positions.

- Model Architecture: Implement an encoder (3 fully connected layers) that maps the input representation to a mean (μ) and log-variance (log σ²) vector defining a 128-dimensional latent distribution. The decoder (3 fully connected layers) reconstructs the input from a latent vector z, sampled via z = μ + exp(log σ²/2) * ε, where ε ~ N(0, I). Condition the model by concatenating the target overpotential value to the encoder input and the latent vector before decoding.

- Training: Use a loss function L = L_reconstruction + β * L_KL, where L_KL is the Kullback-Leibler divergence between the learned distribution and N(0, I). Train for 500 epochs with the Adam optimizer (lr=1e-4). Use a batch size of 64.

- Generation & Validation: Sample latent vectors from N(0, I) and concatenate with a desired target overpotential (e.g., 0.3 V). Decode to generate candidate structures. Validate candidate stability via DFT-based convex hull analysis and recalculate the OER descriptor.
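The reparameterization, conditioning, and loss terms described in this protocol can be written out explicitly. The sketch below uses plain Python lists instead of tensors so it runs without a deep learning framework; the mean-squared-error reconstruction term and the helper names are assumptions, since the protocol does not specify the form of the reconstruction loss.

```python
import math

def reparameterize(mu, log_var, eps):
    """z = mu + exp(log_var / 2) * eps, with eps drawn from N(0, I) by the caller."""
    return [m + math.exp(lv / 2.0) * e for m, lv, e in zip(mu, log_var, eps)]

def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

def vae_loss(x, x_recon, mu, log_var, beta=1.0):
    """L = L_reconstruction + beta * L_KL (reconstruction assumed to be MSE here)."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon)) / len(x)
    return recon + beta * kl_divergence(mu, log_var)

def condition(vector, target_overpotential):
    """Concatenate the target property to an encoder input or latent vector."""
    return list(vector) + [target_overpotential]

mu, log_var = [0.2, -0.1], [0.0, -0.5]
z = reparameterize(mu, log_var, eps=[0.3, -1.2])
z_cond = condition(z, target_overpotential=0.3)  # input to the decoder
print(z_cond, kl_divergence(mu, log_var))
```

Passing `eps` in explicitly keeps the sampling step differentiable with respect to `mu` and `log_var` in a framework implementation, which is the purpose of the reparameterization trick.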

Protocol 2.2: Adversarial Training of a GAN for Bimetallic Nanoparticle Generation

Objective: To generate stable 55-atom (LJ55 motif) bimetallic nanoparticle configurations for catalytic hydrogenation.

- Data Preparation: Generate a dataset of 10,000+ relaxed 55-atom nanoparticle structures (e.g., core-shell, random alloy, ordered phases) via classical molecular dynamics or Monte Carlo simulations. Represent each nanoparticle as a 55x3 matrix of atomic coordinates centered on the center of mass.

- Network Design: Build a generator (G) as a series of 1D transposed convolutional layers that maps a 100-dimensional noise vector to a 55x3 matrix. Build a discriminator (D) as 1D convolutional layers that scores how closely an input matrix resembles the real dataset. Under the WGAN-GP objective used for training, D acts as a critic and outputs an unbounded scalar score rather than a probability.

- Adversarial Training: Train using the Wasserstein GAN with Gradient Penalty (WGAN-GP) objective for stability. Update D 5 times per update of G. Use the Adam optimizer (lr=5e-5) for both networks.

- Property Filtering: Pass generated nanoparticles through a pre-trained Graph Neural Network surrogate model to predict the hydrogen (H*) adsorption energy. Filter and select candidates with adsorption energies in the optimal range (approximately -0.2 to 0 eV).

- DFT Verification: Perform full DFT relaxation and energy calculation on the top 20 filtered candidates to confirm stability and predicted activity.

Protocol 2.3: Property-Optimized Generation with a Graph Neural Network

Objective: To generate novel organic ligand molecules for metal-organic framework (MOF) catalysts with target electronic properties.

- Graph Representation: Represent each organic linker molecule (e.g., from a curated MOF database) as a graph G = (V, E), where nodes V are atoms (featurized by atomic number, hybridization) and edges E are bonds (featurized by bond type).

- Model Training: Train a Property-Guided GraphVAE. The encoder is a 4-layer Graph Convolutional Network (GCN) that outputs latent vectors μ and σ for each graph. The decoder is a multi-layer perceptron that predicts an adjacency matrix and node feature matrix. A separate property prediction head (2 fully connected layers) is attached to the latent vector to predict the target property (e.g., HOMO-LUMO gap, computed via DFT).

- Latent Space Optimization: After training, use Bayesian Optimization (BO) in the continuous latent space. The acquisition function is maximized to find latent points z that, when decoded, are predicted to yield molecules with an optimal HOMO-LUMO gap (e.g., 2.5 ± 0.2 eV).

- Candidate Validation: Decode the top BO-proposed z vectors into molecular graphs. Check for chemical validity using valence rules. Perform DFT geometry optimization and electronic structure calculation on valid candidates to verify properties.
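The valence-rule check on decoded graphs can be sketched as a simple sum of bond orders per atom. This is a simplified illustration: the valence table covers only a few neutral elements, hydrogens are treated as implicit, and charged or hypervalent species are ignored. In practice a cheminformatics toolkit's sanitization routine would normally be used instead.

```python
# Simplified maximum valences for neutral atoms (assumption for illustration)
MAX_VALENCE = {"H": 1, "C": 4, "N": 3, "O": 2, "F": 1}

def is_valid(atoms, bonds):
    """Check that no atom exceeds its maximum valence.

    atoms: list of element symbols (graph nodes)
    bonds: list of (i, j, order) tuples (graph edges with bond order)
    """
    used = [0] * len(atoms)
    for i, j, order in bonds:
        used[i] += order
        used[j] += order
    return all(u <= MAX_VALENCE[a] for a, u in zip(atoms, used))

# Acetic acid heavy-atom graph: C-C(=O)-O, implicit hydrogens fill remaining valence
ok = is_valid(["C", "C", "O", "O"], [(0, 1, 1), (1, 2, 2), (1, 3, 1)])
# Decoder artifact: an oxygen with three single bonds
bad = is_valid(["O", "C", "C", "C"], [(0, 1, 1), (0, 2, 1), (0, 3, 1)])
print(ok, bad)
```

Graphs that fail this check are discarded before any DFT resources are spent on them.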

Visualizations

Title: VAE Training & Generation Workflow

Title: GAN Adversarial Training Loop

Title: Property-Guided Graph Generation via GNN & BO

The Scientist's Toolkit: Key Reagent Solutions & Materials

Table 2: Essential Resources for Computational Catalyst Generation Research

| Item / Reagent Solution | Function / Purpose | Example / Provider |

|---|---|---|

| Structured Catalyst Databases | Source of training data (structures, properties). Provides ground-truth for model training and validation. | ICSD, OQMD, Materials Project, Catalysis-Hub.org, NOMAD. |

| Density Functional Theory (DFT) Code | First-principles calculation of catalyst properties (energies, electronic structure). Used for data generation and candidate validation. | VASP, Quantum ESPRESSO, Gaussian, CP2K. |

| High-Performance Computing (HPC) Cluster | Provides computational resources for large-scale DFT calculations and training of large generative models. | Local university clusters, NSF ACCESS (formerly XSEDE), DOE NERSC, cloud computing (AWS, GCP). |

| Machine Learning Frameworks | Platform for building, training, and deploying generative models (VAEs, GANs, GNNs). | PyTorch, TensorFlow, JAX. With libraries like PyTorch Geometric (PyG) or Deep Graph Library (DGL) for GNNs. |

| Chemical/Materials Informatics Libraries | Handles conversion between chemical representations (SMILES, CIF files) and model-readable formats (graphs, descriptors). | RDKit (molecules), pymatgen (materials), ASE (atomic simulations). |

| Latent Space Optimization Toolkit | Enables search and optimization in the continuous latent space of generative models to meet target property criteria. | Bayesian Optimization (scikit-optimize, BoTorch), Genetic Algorithms. |

| Automated Workflow Managers | Automates the pipeline from candidate generation to DFT validation, enabling high-throughput screening. | AiiDA, FireWorks, Atomate. |

| Visualization & Analysis Software | For analyzing generated structures, visualizing latent spaces, and interpreting model decisions. | VESTA, Ovito, matplotlib, seaborn, tensorboard. |

Application Notes

Catalytic datasets underpin modern catalyst discovery. When property-guided generation is applied to catalyst activity optimization, curated data is what makes accurate machine learning (ML) model training possible. The primary challenges include data heterogeneity, inconsistent reporting, and lack of standardized descriptors. High-quality datasets must encompass catalyst structure (e.g., molecular SMILES, crystal structures), reaction conditions, and measured activity/selectivity metrics. Current initiatives emphasize FAIR (Findable, Accessible, Interoperable, Reusable) data principles, with repositories like CatHub and the Catalysis Research Benchmark (CRB) providing structured datasets. Recent studies highlight that dataset size and variance are critical for generalizable models; for heterogeneous catalysis, datasets of >10,000 data points are now considered a robust foundation for activity prediction.

| Dataset Name | Size (Entries) | Catalyst Type | Key Properties Measured | Public Access |

|---|---|---|---|---|

| CatHub | ~15,000 | Heterogeneous (Metals, Oxides) | TOF, Selectivity, Conversion | Yes (API) |

| CRB 2.0 | ~8,500 | Heterogeneous (Supported Metals) | Turnover Number, Activation Energy | Yes (Download) |

| Open Catalysis 2023 | ~25,000 | Mixed (Thermo- & Electro-) | Current Density, Overpotential, Yield | Yes (CC-BY) |

| NREL Catalysis Database | ~5,000 | Molecular (Organometallic) | Yield, TON, Deactivation Time | Partial |

A central issue is the representation of catalysts. For ML, common descriptors include composition features, orbital-centered features (e.g., d-band center for metals), and geometric descriptors (coordination number). Recent protocols advocate for multi-fidelity data integration, combining high-accuracy computational results (DFT) with medium-throughput experimental screening data to maximize information density.

Diagram Title: Data Curation & ML Training Workflow

Experimental Protocols

Protocol 1: Extracting and Standardizing Catalytic Data from Literature

This protocol details the extraction of heterogeneous hydrogenation data from published literature into a structured format.

- Define Data Schema: Establish a standardized schema using a .csv or .json template. Essential fields include: Catalyst_Composition (precise elemental makeup), Support_Material (if any), Synthesis_Method, Substrate, Temperature (K), Pressure (bar), Solvent, Turnover_Frequency (TOF in s⁻¹), Conversion (%), Selectivity (%), and a Reference DOI.

- Literature Search: Use APIs (e.g., Crossref, PubMed) with specific queries (e.g., "CO2 hydrogenation TOF supported Ni catalyst").

- Data Extraction: For each relevant paper, extract data from tables, text, and Supplementary Information. Convert all units to the standard schema (e.g., convert TOF reported in h⁻¹ to s⁻¹).

- Annotation & Validation: Flag entries with missing critical data (e.g., missing temperature). Cross-check extracted values against figures using digitization software (e.g., WebPlotDigitizer). Perform basic thermodynamic feasibility checks.

- Deposition: Format the validated data according to the schema and upload to an internal or public repository with a unique dataset identifier.
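The schema and unit-standardization steps above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the class name `CatalystRecord`, the snake_case field names, and the choice of which fields count as "critical" are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

SECONDS_PER_HOUR = 3600.0

@dataclass
class CatalystRecord:
    """One literature data point, following the fields listed in the schema step."""
    catalyst_composition: str
    substrate: str
    temperature_k: float
    pressure_bar: float
    tof_per_s: float
    conversion_pct: float
    selectivity_pct: float
    reference_doi: str
    support_material: Optional[str] = None
    synthesis_method: Optional[str] = None
    solvent: Optional[str] = None

def celsius_to_kelvin(temp_c: float) -> float:
    """Convert a reported temperature in degrees Celsius to kelvin."""
    return temp_c + 273.15

def tof_per_hour_to_per_second(tof_per_h: float) -> float:
    """Convert a TOF reported in h^-1 to the schema unit s^-1."""
    return tof_per_h / SECONDS_PER_HOUR

# Hypothetical choice of fields that must be present before deposition.
CRITICAL_FIELDS = ("catalyst_composition", "temperature_k", "tof_per_s", "reference_doi")

def missing_critical_fields(raw: dict) -> list:
    """Return the critical fields that are absent or empty in a raw extracted entry."""
    return [name for name in CRITICAL_FIELDS if raw.get(name) in (None, "")]
```

Entries for which `missing_critical_fields` returns a non-empty list would be flagged during the annotation step rather than silently dropped.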

Protocol 2: High-Throughput Experimental Screening for Dataset Augmentation

This protocol outlines parallelized screening to generate consistent catalytic activity data, using CO oxidation as a model reaction.

- Material Library Preparation: Prepare a library of catalyst candidates (e.g., 96 distinct bimetallic compositions on alumina) using an automated liquid handling system for incipient wetness impregnation.

- Reactor Setup: Utilize a parallel, fixed-bed reactor system (e.g., 16-channel) with individual mass flow controllers and downstream gas chromatography (GC) or mass spectrometry (MS) analysis.

- Standardized Pretreatment: Subject all catalysts to an identical pretreatment: heat to 300°C under 5% H2/Ar (50 mL/min) for 2 hours.

- Activity Testing: For each catalyst, under steady-state flow (1% CO, 10% O2, balance He), measure CO conversion at a series of isothermal plateaus (e.g., 100, 150, 200, 250°C). Use an internal standard for GC calibration.

- Data Capture: Automatically record reactor temperature, pressure, gas flows, and GC peak areas for each channel. Calculate conversion and specific rate (per gram catalyst).

- Data Processing: Apply consistent baseline subtraction and calibration curves. Compile all data (composition, conditions, rate) into the project's master database.
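As a rough sketch of the conversion and specific-rate calculation in the data-capture step, the functions below assume that gas flows are reported at 273.15 K and 1 atm (ideal-gas molar volume of 22,414 mL/mol) and that calibrated CO signals are proportional to concentration. Both assumptions, and the function names, are illustrative.

```python
# Ideal-gas molar volume at 273.15 K and 1 atm; assumes flows are referenced to these conditions.
MOLAR_VOLUME_ML_PER_MOL = 22414.0

def co_conversion(co_in: float, co_out: float) -> float:
    """Fractional CO conversion from calibrated inlet and outlet CO signals."""
    if co_in <= 0:
        raise ValueError("inlet CO signal must be positive")
    return (co_in - co_out) / co_in

def specific_rate(total_flow_ml_min: float, co_fraction: float,
                  conversion: float, catalyst_mass_g: float) -> float:
    """CO consumption rate in mol CO per gram catalyst per second."""
    co_mol_per_s = total_flow_ml_min * co_fraction / MOLAR_VOLUME_ML_PER_MOL / 60.0
    return co_mol_per_s * conversion / catalyst_mass_g

# Example: 50 mL/min total flow with 1% CO, 75% conversion, 0.1 g of catalyst.
x = co_conversion(co_in=1.0, co_out=0.25)
rate = specific_rate(50.0, 0.01, x, 0.1)
```

Rates are only comparable across channels when conversions are kept well below 100%, so the lower-temperature plateaus are usually the ones worth feeding into the database.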

Diagram Title: Catalyst Screening & Data Flow

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Explanation |

|---|---|

| Automated Liquid Handler (e.g., Hamilton STAR) | Precise, high-throughput dispensing of catalyst precursor solutions for reproducible library synthesis. |

| Parallel Fixed-Bed Reactor System (e.g., PID Microactivity Effi) | Enables simultaneous testing of up to 16 catalyst samples under identical or varied conditions, accelerating data generation. |

| Multi-Channel Mass Spectrometer (e.g., Hiden QGA) | Provides real-time, quantitative analysis of gas-phase products from multiple reactor streams, essential for kinetic profiling. |

| WebPlotDigitizer Software | Critical tool for extracting numerical data from published graphs and figures in legacy literature, enabling data digitization. |

| Catalysis-Specific Descriptor Packages (e.g., CatLearn, pymatgen) | Python libraries for computing standardized catalyst descriptors (structural, electronic) from input structures for ML readiness. |

| FAIR Data Management Platform (e.g., CKAN, Figshare) | Provides a structured repository for curated datasets, ensuring persistent identifiers, metadata, and accessibility per FAIR guidelines. |

A Step-by-Step Guide to Implementing Property-Guided Generation Workflows

This document details application notes and protocols for a property-guided generative pipeline, framed within a broader thesis on catalyst activity optimization. The core challenge is to inverse-design novel molecular structures with optimized catalytic properties by integrating predictive models with generative AI. The pipeline moves from establishing a predictive relationship between structure and activity to sampling novel, conditionally-valid structures from the learned chemical space.

Table 1: Performance Benchmarks of Property Prediction Models for Catalytic Properties

| Model Architecture | Dataset (Catalyst Type) | Target Property | MAE | R² | Key Reference/Codebase |

|---|---|---|---|---|---|

| Graph Neural Network (GNN) | Organometallic Complexes (QM9-derived) | HOMO-LUMO Gap (eV) | 0.15 eV | 0.91 | Jørgensen et al., Chem. Sci., 2020 |

| Transformer (SMILES-based) | Heterogeneous Catalysts (OC20) | Adsorption Energy (eV) | 0.28 eV | 0.85 | Chanussot et al., ACS Catal., 2021 |

| 3D-CNN (Voxelized) | Solid Surfaces (Materials Project) | Formation Energy (eV/atom) | 0.04 eV | 0.98 | MatDeepLearn Library |

| Directed Message Passing NN | Homogeneous Catalysts (Quantum Calc.) | Turnover Frequency (logTOF) | 0.38 log units | 0.79 | PyTorch Geometric |

Table 2: Conditional Generative Model Output Metrics

| Generative Model | Conditioned Property | Validity (%) | Uniqueness (%) | Novelty (%) | Property Target Hit Rate (%) |

|---|---|---|---|---|---|

| Conditional VAE (cVAE) | Adsorption Energy | 87.2 | 95.1 | 99.8 | 65.3 |

| Generative Adversarial Network (cGAN) | HOMO-LUMO Gap | 92.7 | 89.4 | 100 | 71.8 |

| Flow-based Model (Conditional) | Formation Energy | 98.5 | 97.3 | 99.5 | 82.1 |

| Diffusion Model | logTOF | 96.2 | 99.0 | 100 | 88.7 |
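The column headers in Table 2 are not defined in the text. One common convention, assumed here, is: validity is the share of generated structures that pass a chemical validity check, uniqueness is the share of valid structures that are distinct, novelty is the share of distinct structures absent from the training set, and hit rate is the share of candidates whose predicted property falls within a tolerance of the target. The sketch below takes the validity check as a caller-supplied function (for example an RDKit-based parser) and assumes structures are already canonicalized strings.

```python
def generation_metrics(generated, training_set, is_valid):
    """Validity, uniqueness and novelty in percent, using the convention stated above."""
    valid = [s for s in generated if is_valid(s)]
    unique = set(valid)
    novel = unique - set(training_set)
    return {
        "validity": 100.0 * len(valid) / len(generated) if generated else 0.0,
        "uniqueness": 100.0 * len(unique) / len(valid) if valid else 0.0,
        "novelty": 100.0 * len(novel) / len(unique) if unique else 0.0,
    }

def hit_rate(predicted_values, target, tolerance):
    """Percentage of predictions within +/- tolerance of the target property value."""
    if not predicted_values:
        return 0.0
    hits = sum(1 for p in predicted_values if abs(p - target) <= tolerance)
    return 100.0 * hits / len(predicted_values)

# Toy usage with a placeholder validity rule (illustration only, not real chemistry).
metrics = generation_metrics(
    generated=["CCO", "CCO", "C1CC", "CCN"],
    training_set={"CCN"},
    is_valid=lambda s: s != "C1CC",
)
```

Because different papers use different denominators for uniqueness and novelty, numbers from separate sources are only comparable once the convention is checked.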

Experimental Protocols

Protocol 3.1: Training a Robust Property Predictor

Objective: Train a GNN to accurately predict catalytic turnover frequency (TOF) from a molecular graph representation.

Materials: See "Scientist's Toolkit" (Section 6).

Procedure:

- Data Curation: Assemble a dataset of catalyst structures (e.g., as SMILES strings or 3D coordinates) with experimentally measured or DFT-calculated logTOF values. Apply rigorous train/validation/test splits (e.g., 80/10/10) ensuring no data leakage.

- Featurization: Convert each molecular structure into a graph representation. Nodes (atoms) are featurized with atomic number, hybridization, formal charge. Edges (bonds) are featurized with bond type, conjugation, and ring membership.

- Model Training: Implement a Message Passing Neural Network (MPNN) using PyTorch Geometric. Configuration: 4 message-passing layers, hidden dimension 256, ReLU activation. Use a combined loss: L = α * MSE(property) + β * ContrastiveLoss(representations), where α=1.0, β=0.1.

- Validation: Monitor MSE and R² on the validation set. Employ early stopping with a patience of 50 epochs.

- Deployment: Save the final model weights for integration into the generative pipeline as a conditioning module.
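The combined loss and the early-stopping rule from the steps above are framework-independent, so they can be sketched without committing to a particular PyTorch Geometric model. The class and function names below are illustrative, and the loss values are assumed to be plain floats computed elsewhere in the training loop.

```python
def combined_loss(mse_property: float, contrastive: float,
                  alpha: float = 1.0, beta: float = 0.1) -> float:
    """L = alpha * MSE(property) + beta * ContrastiveLoss(representations)."""
    return alpha * mse_property + beta * contrastive

class EarlyStopping:
    """Signal a stop once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 50, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Usage inside a training loop (validation losses here are made-up numbers).
stopper = EarlyStopping(patience=2)
history = [1.00, 0.90, 0.95, 0.96, 0.80]
stopped_at = next((i for i, v in enumerate(history) if stopper.step(v)), None)
```

In practice the model weights from the epoch with the best validation loss, not the last epoch, are the ones saved for deployment.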

Protocol 3.2: Conditional Generation via Guided Diffusion

Objective: Generate novel, valid catalyst structures conditioned on a target logTOF value.

Materials: Pre-trained property predictor (Protocol 3.1), diffusion model backbone (e.g., EDM architecture).

Procedure:

- Model Architecture: Implement a denoising diffusion probabilistic model (DDPM) where the denoising network is a GNN. Concatenate the target property condition (normalized logTOF) to the node features at each denoising step.

- Training: Train the diffusion model to learn the distribution of catalyst structures in the training set. The condition is provided as an input during training. Use 1000 diffusion steps and a linear noise schedule.

- Conditional Sampling: a. Sample random noise in the shape of a latent graph (defined by expected max atoms). b. For each denoising step t, input the noisy graph and the target property condition into the trained denoiser. c. (Critical Step - Guidance): Before the denoiser's final output, compute the gradient of the pre-trained property predictor's output with respect to the noisy graph. Scale this gradient by a guidance strength factor s (empirically tuned, start at 2.0) and add it to the denoising direction. This steers generation towards the desired property. d. Iterate until step t=0 to obtain a clean, generated molecular graph.

- Post-Processing: Convert the final graph to a SMILES string. Validate chemical correctness with RDKit. Filter duplicates.
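The guidance step is easier to see on a toy problem. In the sketch below the "graph" is just a short list of continuous features, and the trained denoiser and property predictor are replaced by hypothetical stand-ins (a pull toward zero and the feature mean). Two further simplifications are assumptions of this example: the guidance term is the gradient of the negative squared error to the target, which steers toward a specific value rather than simply increasing the prediction, and the stochastic noise of a real reverse-diffusion step is omitted so the result is deterministic.

```python
import random

def property_predictor(x):
    """Toy stand-in for the trained property predictor: mean of the features."""
    return sum(x) / len(x)

def denoise_direction(x):
    """Toy stand-in for the trained denoiser: pull every feature toward 0."""
    return [-xi for xi in x]

def guidance_gradient(x, target, h=1e-4):
    """Central-difference gradient of -(predictor(x) - target)^2 with respect to x."""
    def score(v):
        return -(property_predictor(v) - target) ** 2
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + h] + x[i + 1:]
        down = x[:i] + [x[i] - h] + x[i + 1:]
        grad.append((score(up) - score(down)) / (2 * h))
    return grad

def guided_sample(target, s=2.0, n_features=4, steps=200, step_size=0.1, seed=0):
    """Start from Gaussian noise and iterate: x <- x + step * (denoise + s * guidance)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n_features)]
    for _ in range(steps):
        d = denoise_direction(x)
        g = guidance_gradient(x, target)
        x = [xi + step_size * (di + s * gi) for xi, di, gi in zip(x, d, g)]
    return x

guided = property_predictor(guided_sample(target=1.0, s=2.0))
unguided = property_predictor(guided_sample(target=1.0, s=0.0))
```

With `s=0` the sample collapses to the denoiser's preferred region (predicted property near 0), while `s=2.0` shifts it toward the target; the guided result settles between the two, which illustrates why the guidance strength has to be tuned against sample quality.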

Visualization of the Integrated Pipeline

Diagram Title: Property-Guided Catalyst Generation Pipeline

Detailed Signaling/Workflow Diagram for Conditional Sampling

Diagram Title: Guided Diffusion Sampling Loop

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Software for Pipeline Implementation

| Item Name | Type (Software/Data/Service) | Function in Pipeline | Example Source/Link |

|---|---|---|---|

| PyTorch Geometric (PyG) | Software Library | Provides core data structures and models for Graph Neural Networks (GNNs) on catalyst graphs. | https://pytorch-geometric.readthedocs.io |

| RDKit | Software Library | Handles cheminformatics tasks: SMILES parsing, molecular validation, descriptor calculation, and 2D rendering. | https://www.rdkit.org |

| Open Catalyst Project (OC20) Dataset | Dataset | A large-scale dataset of relaxations and energies for catalyst-adsorbate systems, useful for training property predictors. | https://opencatalystproject.org |

| MatDeepLearn Library | Software Library | A framework for building and benchmarking GNNs for materials property prediction, includes pre-trained models. | https://github.com/vxfung/MatDeepLearn |

| Guided Diffusion for Molecular Design (Code) | Code Repository | Reference implementation for property-guided graph diffusion models, a key method for conditional generation. | https://github.com/MinkaiXu/ConfGF |

| Google Cloud TPU / NVIDIA A100 GPU | Hardware/Service | Accelerates the training of large generative models (diffusion, transformers) which is computationally intensive. | Major Cloud Providers |

| Gaussian 16 or ORCA | Quantum Chemistry Software | Used for final-stage validation of generated catalysts via Density Functional Theory (DFT) calculations of target properties. | Commercial/Open-Source |

| MolGX / AFLOW-ML | Web Service | Platforms for cloud-based, high-throughput screening of generated materials/catalysts using ML potentials. | https://molgx.aics.riken.jp, http://aflow.org/aflow-ml |

1. Introduction & Context

Within the thesis "Applying property-guided generation for catalyst activity optimization," a core methodological challenge is the creation of a unified, continuous representation that encodes both molecular structure and its associated functional properties (e.g., catalytic activity, selectivity). This document details application notes and protocols for training Joint Latent Space Models (JLSMs), a class of deep learning models designed to solve this problem. Effective training strategies are critical for ensuring the latent space is well-structured, interpretable, and enables accurate inverse design: the generation of novel structures predicted to possess target properties.

2. Core Training Paradigms & Comparative Data

JLSMs are typically trained under three primary paradigms, each with distinct advantages and data requirements.

Table 1: Quantitative Comparison of JLSM Training Paradigms

| Training Paradigm | Key Architecture | Primary Loss Components | Optimal Data Scenario | Reported Property Prediction R² (Catalysis Range) |

|---|---|---|---|---|

| Supervised Joint Training | Dual-encoder (Structure & Property) to shared latent (z), coupled decoders. | Reconstruction Loss (Structure) + Prediction Loss (Property). | Large datasets (>10k samples) with high-quality, consistent property labels. | 0.70 – 0.89 |

| Sequential Pretraining & Fine-tuning | 1) Pretrain VAE on structure only. 2) Fine-tune with property predictor. | Phase 1: Reconstruction. Phase 2: Prediction + Latent regularization. | Moderate datasets (1k-10k samples) where property data is limited or noisy. | 0.65 – 0.82 |

| Adversarial Alignment | Separate structure and property encoders, aligned via adversarial discriminator. | Reconstruction Loss + Adversarial Loss (aligns distributions) + Prediction Loss. | Multi-fidelity data or integrating data from disparate sources (e.g., computational + experimental). | 0.60 – 0.78 |

3. Detailed Experimental Protocol: Supervised Joint Training

This protocol is designed for training a JLSM using a dataset of catalyst molecules and their associated turnover frequency (TOF) values.

A. Materials & Input Preparation

- Structure Data: SMILES strings of catalyst molecules. Standardize using RDKit (canonicalization, removal of salts).

- Property Data: Scalar TOF values (log-scaled and normalized to zero mean, unit variance).

- Dataset Split: 70% training, 15% validation, 15% test. Ensure stratified splitting based on property value bins if distribution is non-uniform.

B. Model Architecture Setup

- Structure Encoder: A graph neural network (GNN) using RGCN or GAT layers to process molecular graphs. Outputs a mean (μs) and log-variance (log(σs²)) vector.

- Property Encoder: A simple feed-forward network (FFN) processing the scalar property value to vectors μp and log(σp²).

- Latent Fusion & Sampling: Fuse the encoder outputs by averaging means and variances: μ = (μs + μp)/2; σ² = (exp(log(σs²)) + exp(log(σp²)))/2. Sample the latent vector z using the reparameterization trick: z = μ + σ * ε, where σ = sqrt(σ²) and ε ~ N(0,I).

- Decoders: A graph decoder (e.g., MLP) to reconstruct the molecular graph features, and an FFN property decoder to reconstruct the input property.
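A minimal numerical sketch of the fusion and sampling step follows. It treats the averaged exp(log-variance) terms as a variance, so the standard deviation used in the reparameterization is its square root; that reading, and the function name, are assumptions of this example. Plain Python lists stand in for tensors.

```python
import math
import random

def fuse_and_sample(mu_s, logvar_s, mu_p, logvar_p, rng):
    """Average the two encoders' means and variances, then sample z = mu + sigma * eps."""
    mu = [(a + b) / 2.0 for a, b in zip(mu_s, mu_p)]
    var = [(math.exp(a) + math.exp(b)) / 2.0 for a, b in zip(logvar_s, logvar_p)]
    eps = [rng.gauss(0.0, 1.0) for _ in mu]          # eps ~ N(0, I)
    z = [m + math.sqrt(v) * e for m, v, e in zip(mu, var, eps)]
    return z, mu, var

rng = random.Random(0)
z, mu, var = fuse_and_sample(
    mu_s=[1.0, 3.0], logvar_s=[0.0, 0.0],   # structure encoder output
    mu_p=[3.0, 5.0], logvar_p=[0.0, 0.0],   # property encoder output
    rng=rng,
)
```

Because the randomness enters only through `eps`, gradients can flow through `mu` and `var` in an autodiff framework, which is the point of the reparameterization trick.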

C. Training Procedure

- Loss Function Calculation:

- Structure Reconstruction Loss (L_rec): Binary cross-entropy for node/edge reconstruction.

- Property Prediction Loss (L_pred): Mean squared error (MSE) between input and decoded property.

- Kullback-Leibler Divergence (L_KL): KL divergence between the latent distribution and N(0,I).

- Total Loss: L_total = α * L_rec + β * L_pred + γ * L_KL (Typical starting weights: α=1, β=10, γ=0.01).

- Optimization: Use Adam optimizer with an initial learning rate of 0.001. Implement a learning rate scheduler that reduces LR on validation loss plateau.

- Validation & Early Stopping: Monitor validation loss (L_total) and property prediction RMSE. Stop training if no improvement for 50 epochs.
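For a diagonal Gaussian posterior, the KL term has the closed form 0.5 * Σ(μ² + σ² − 1 − log σ²). The sketch below evaluates it together with the weighted total loss using the starting weights quoted above; the reconstruction and prediction losses are assumed to be computed elsewhere and passed in as floats, and the function names are illustrative.

```python
import math

def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) in closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

def total_loss(l_rec, l_pred, l_kl, alpha=1.0, beta=10.0, gamma=0.01):
    """Weighted sum of reconstruction, property-prediction and KL losses."""
    return alpha * l_rec + beta * l_pred + gamma * l_kl

kl = kl_to_standard_normal(mu=[1.0, 0.0], logvar=[0.0, 0.0])
loss = total_loss(l_rec=1.0, l_pred=0.1, l_kl=kl)
```

The small γ keeps the KL term from overwhelming reconstruction early in training; some workflows increase it gradually, which would be a change to the schedule rather than to the formula.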

4. Visualization of Workflows and Model Logic

Diagram 1: JLSM Training Workflow

Diagram 2: Supervised Joint Training Architecture

5. The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Computational Tools & Resources for JLSM Development

| Tool/Resource Name | Category | Primary Function in JLSM Research |

|---|---|---|

| RDKit | Cheminformatics Library | Standardizes molecular inputs (SMILES), generates molecular descriptors, and handles basic graph operations. |

| PyTorch Geometric (PyG) | Deep Learning Library | Provides efficient implementations of Graph Neural Networks (GNNs) critical for the structure encoder/decoder. |

| DeepChem | ML for Chemistry | Offers high-level APIs for building molecular property prediction models and managing chemical datasets. |

| TensorBoard / Weights & Biases | Experiment Tracking | Visualizes training progress, latent space projections (via PCA/t-SNE), and compares hyperparameter runs. |

| QM9 / CatHub | Benchmark Datasets | QM9 provides small organic molecule properties for pretraining. CatHub offers curated catalysis data for fine-tuning. |

| Open Catalyst Project (OC) Datasets | Large-scale Dataset | Provides DFT-calculated adsorption energies and structures for catalyst-adsorbate systems, enabling scale-up. |

This application note details protocols for conditioning generative models on target catalytic properties, a core methodology within the broader thesis "Applying property-guided generation for catalyst activity optimization research." The objective is to enable the de novo generation or virtual screening of molecular catalysts constrained by pre-defined activity (e.g., turnover frequency, TOF) and selectivity (e.g., enantiomeric excess, ee) ranges. This shifts the paradigm from retrospective analysis to prospective, goal-directed molecular design.

Key Concepts & Data Landscape

Conditioning requires robust quantitative structure-property relationship (QSPR) models or physics-based simulations to predict target properties from candidate structures. Current literature emphasizes hybrid models combining graph neural networks (GNNs) with Gaussian Processes for uncertainty quantification.

Table 1: Representative Target Property Ranges for Catalytic Optimization

| Catalyst Class | Primary Activity Metric | Typical Target Range | Selectivity Metric | Typical Target Range | Key Reference System |

|---|---|---|---|---|---|

| Asymmetric Organocatalysts | ΔΔG‡ (kcal/mol) | -2.5 to -4.0 | Enantiomeric Excess (% ee) | 90% to >99% | Proline-catalyzed aldol |

| Transition Metal Complexes | Turnover Frequency (TOF, h⁻¹) | 10³ to 10⁵ | Chemoselectivity (%) | >95% | Pd-catalyzed cross-coupling |

| Heterogeneous Metals | Turnover Number (TON) | 10⁴ to 10⁶ | Product Distribution Ratio | >20:1 | CO₂ hydrogenation to methanol |

| Enzymes (Engineered) | kcat / KM (M⁻¹s⁻¹) | 10⁵ to 10⁷ | Stereoselectivity (E value) | >100 | Ketoreductase reactions |

Core Protocol: Conditioning a Generative Model

Materials & Computational Setup

The Scientist's Toolkit: Key Research Reagent Solutions

| Item Name | Function & Explanation |

|---|---|

| Conditional VAE or GFlowNet Framework | Generative model architecture (e.g., in PyTorch) that accepts property vectors as conditional input during training and inference. |

| Curated Catalyst Dataset | Structured dataset (e.g., from CatHub, ASKCOS) containing molecular structures (SMILES/SELFIES) and associated experimental activity and selectivity values. |

| Property Predictor Models | Pre-trained QSPR models (e.g., GNNs) that output predicted activity and selectivity scores for any input molecular structure. Serves as the conditioning signal source. |

| Molecular Featurizer | Tool (e.g., RDKit, Mordred) to convert SMILES into numerical descriptors or graph representations for the predictor models. |

| Oracle Simulation Environment | High-fidelity computational chemistry software (e.g., DFT, microkinetic modeling suite) for in silico validation of top-generated candidates. |

Step-by-Step Workflow Protocol

Protocol Title: Training a Property-Conditioned Catalyst Generator

Data Curation & Preprocessing:

- Source a dataset of homogeneous catalysts with reported TOF and selectivity values.

- Clean data: Standardize SMILES, remove duplicates, handle missing values via imputation or removal.

- Define property ranges. Normalize all property values to a [0, 1] scale.
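A small sketch of the normalization step is shown below. It applies min-max scaling to build a two-component condition vector; taking log10 of TOF before scaling, the default range bounds, and clipping out-of-range values to [0, 1] are all assumptions of this example rather than requirements of the protocol.

```python
import math

def min_max(value, lo, hi):
    """Scale value from [lo, hi] to [0, 1], clipping anything outside the range."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))

def condition_vector(tof_per_h, selectivity_pct,
                     tof_range=(1e1, 1e5), selectivity_range=(0.0, 100.0)):
    """Return [norm(TOF), norm(selectivity)] with TOF scaled on a log10 axis."""
    log_lo, log_hi = math.log10(tof_range[0]), math.log10(tof_range[1])
    return [
        min_max(math.log10(tof_per_h), log_lo, log_hi),
        min_max(selectivity_pct, *selectivity_range),
    ]

c = condition_vector(tof_per_h=1e3, selectivity_pct=90.0)
```

The same range bounds must be stored with the model, since a target such as `[0.8, 0.9]` at generation time only has meaning relative to the scaling used during training.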

Training the Joint Model:

- Architecture: Implement a Conditional Graph Variational Autoencoder (C-GVAE).

- Input: Molecular graph + a condition vector c = [norm(TOF_target), norm(Selectivity_target)].

- Process: The encoder E learns a latent representation z of the graph. The decoder D reconstructs the graph from z and the condition c.

- Loss Function: L_total = L_reconstruction + β * KL_divergence(q(z | graph) || N(0, I)) + λ * (Predictor(D(z, c)) - c)², where q(z | graph) is the posterior produced by the encoder E. The final term forces generation towards the conditioned properties.

Conditioned Generation/Screening:

- Inference: Sample a latent vector z from the prior distribution. Concatenate with a user-defined target condition vector c_target (e.g., [0.8, 0.9] for high TOF and high selectivity).

- Pass [z, c_target] through the trained decoder to generate novel molecular graph structures.

- Validation: Pass generated candidates through the high-fidelity predictor model or oracle simulation to verify property alignment.

Iterative Optimization Loop:

- Experimentally validate top in silico candidates.

- Add new experimental data (structure, TOF, selectivity) to the training set.

- Fine-tune the generative model on the expanded dataset to improve its predictive accuracy and generation quality in the target property region.

Visualization of Workflows

Diagram 1: Conditioning on Target Properties

(Title: Conditioning on Target Properties Workflow)

Diagram 2: Model Training Architecture

(Title: Conditional Generative Model Training)

Experimental Validation Protocol

Protocol Title: Validating Generated Catalysts for Asymmetric Hydrogenation

Objective: To experimentally test catalyst candidates generated for high enantioselectivity (>95% ee) in the hydrogenation of methyl benzoylformate.

Materials:

- Generated Catalysts: 3-5 Ru-BINAP derivative complexes from the generative model.

- Substrate: Methyl benzoylformate.

- Standard: Racemic methyl mandelate for GC calibration.

- Analytical: Chiral GC column (e.g., Cyclosil-B).

Procedure:

- Catalyst Preparation: Synthesize or procure generated Ru complexes (e.g., via ligand synthesis followed by metalation with Ru(cymene)Cl₂).

- Hydrogenation Reaction:

- In a glovebox, charge a Schlenk tube with catalyst (0.01 mmol, S/C=100) and methyl benzoylformate (1.0 mmol).

- Add degassed solvent (5 mL iPrOH).

- Seal the tube, remove from glovebox, and connect to a H₂ balloon at atmospheric pressure.

- Stir the reaction at 25°C for 12 hours.

- Analysis:

- Quench the reaction with triethylamine.

- Dilute an aliquot and analyze by Chiral Gas Chromatography (GC).

- Calculate conversion from substrate peak area.

- Calculate enantiomeric excess (ee%) using the formula:

ee% = |[R] - [S]| / ([R] + [S]) * 100, determined from integrated peak areas of the enantiomers.

- Data Integration: Report experimental TOF (calculated from conversion, time, and catalyst loading) and ee% back to the dataset for model refinement.
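The two quantities reported back to the dataset can be computed as sketched below, using the ee formula given above and an average TOF defined as moles of substrate converted per mole of catalyst per hour. The function names are illustrative, and the example inputs are made-up peak areas combined with the protocol's quantities (1.0 mmol substrate, 0.01 mmol catalyst, 12 h).

```python
def enantiomeric_excess(area_r: float, area_s: float) -> float:
    """ee% = |R - S| / (R + S) * 100, from integrated chiral GC peak areas."""
    total = area_r + area_s
    if total <= 0:
        raise ValueError("peak areas must sum to a positive value")
    return abs(area_r - area_s) / total * 100.0

def average_tof_per_hour(conversion_frac: float, substrate_mmol: float,
                         catalyst_mmol: float, time_h: float) -> float:
    """Average TOF over the run: mol substrate converted per mol catalyst per hour."""
    return conversion_frac * substrate_mmol / catalyst_mmol / time_h

ee = enantiomeric_excess(area_r=97.5, area_s=2.5)
tof = average_tof_per_hour(conversion_frac=0.96, substrate_mmol=1.0,
                           catalyst_mmol=0.01, time_h=12.0)
```

An average TOF computed this way cannot exceed the substrate-to-catalyst ratio divided by the reaction time, so comparing catalysts on initial-rate TOF requires sampling at earlier time points or running at a higher S/C ratio.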

Table 2: Example Validation Results for Generated Catalysts

| Catalyst ID | Predicted ee% | Experimental ee% | Predicted TOF (h⁻¹) | Experimental TOF (h⁻¹) | Target Met? |

|---|---|---|---|---|---|

| Gen-Ru-01 | 97 | 95 | 1200 | 980 | Yes |

| Gen-Ru-02 | 99 | 99 | 800 | 1100 | Yes |

| Gen-Ru-03 | 88 | 75 | 2000 | 2100 | No (ee low) |

This application note, framed within a thesis on applying property-guided generation to catalyst activity optimization, details protocols for the rational design, high-throughput screening, and optimization of homogeneous palladium catalysts for Suzuki-Miyaura cross-coupling, a critical reaction in pharmaceutical development.

The optimization of phosphine ligand scaffolds in homogeneous Pd catalysts is paramount for achieving high activity and selectivity in cross-coupling, particularly for challenging substrates like sterically hindered or heteroaromatic partners. Traditional optimization is resource-intensive. This protocol integrates computational property prediction (e.g., %Vbur, Sterimol parameters) with high-throughput experimentation (HTE) to accelerate the discovery of optimal catalysts.

Key Quantitative Metrics & Data

Table 1: Key Descriptor Ranges for High-Performance Pd-PR₃ Catalysts in Suzuki-Miyaura Coupling

| Descriptor | Optimal Range for Aryl Halides | Role in Catalyst Performance | Measurement Method |

|---|---|---|---|

| Ligand Steric Bulk (%Vbur) | 35-55% | Facilitates reductive elimination; prevents Pd(0) dimerization. | Computational (buried volume, e.g., SambVca) |

| Electronic Parameter (νCO / cm⁻¹) | 2040-2065 | Moderate π-acceptance stabilizes LPd(0) intermediate. | IR Spectroscopy of LNi(CO)₃ (Tolman electronic parameter) |

| Bite Angle (θ / °) | 85-105 (for bidentate) | Influences geometry & stability of transition states. | X-ray / Computational |

| Pd/PR₃ Stoichiometry | 1:1 to 1:2 | Balances catalyst stability vs. active site availability. | Reaction Calorimetry |

| Turnover Number (TON) | > 10,000 (Target) | Primary activity metric for cost-effectiveness. | GC/HPLC Analysis |

Table 2: HTE Screening Results for Model Reaction: 2-Chloropyridine + Aryl Boronic Acid

| Ligand Code | %Vbur | νCO (cm⁻¹) | Yield (%) at 0.1 mol% Pd | Yield (%) at 0.01 mol% Pd | TON |

|---|---|---|---|---|---|

| SPhos | 41.2 | 2051.2 | 99 | 85 | 8,500 |

| XPhos | 45.8 | 2054.7 | 99 | 92 | 9,200 |

| BrettPhos | 52.3 | 2058.1 | 98 | 94 | 9,400 |

| t-BuXPhos | 58.9 | 2062.5 | 95 | 65 | 6,500 |

| PPh₃ | 30.5 | 2068.9 | 45 | 5 | 500 |
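The TON column in Table 2 follows directly from the yield at the lower catalyst loading: TON = (yield fraction) / (Pd loading as a mole fraction), which simplifies to yield (%) divided by loading (mol%). The short sketch below reproduces the column from the tabulated yields at 0.01 mol% Pd; the function name is illustrative.

```python
def turnover_number(yield_pct: float, pd_loading_mol_pct: float) -> float:
    """TON = mol product per mol Pd = yield (%) / Pd loading (mol%)."""
    if pd_loading_mol_pct <= 0:
        raise ValueError("Pd loading must be positive")
    return yield_pct / pd_loading_mol_pct

# Yields (%) at 0.01 mol% Pd, taken from Table 2.
yields_at_low_loading = {
    "SPhos": 85, "XPhos": 92, "BrettPhos": 94, "t-BuXPhos": 65, "PPh3": 5,
}
tons = {ligand: round(turnover_number(y, 0.01))
        for ligand, y in yields_at_low_loading.items()}
```

By this definition the TON at a given loading is capped at 100 / loading (10,000 at 0.01 mol% Pd), so exceeding the 10,000 target in Table 1 would require screening at still lower loadings.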

Experimental Protocols

Protocol 1: Property-Guided Ligand Library Generation

- Define Property Space: Using a database (e.g., CSD), calculate steric (%Vbur, B1, B5) and electronic (HOMO/LUMO energy, Natural Charge on P) descriptors for known phosphines.

- Modeling: Train a QSAR model (e.g., Random Forest, GPR) correlating descriptors to catalytic turnover frequency (TOF) from historical data.

- In Silico Generation: Use a genetic algorithm to generate novel ligand structures within a defined synthetic accessibility (SA) score.

- Prediction & Filtering: Predict TOF for generated ligands using the QSAR model. Select top 50 candidates with high predicted activity and diverse descriptor coverage for synthesis/HTE.

Protocol 2: High-Throughput Screening of Pd/PR₃ Catalysts

Materials: Pre-weighed ligand library in 96-well plates, Pd source (e.g., Pd(OAc)₂, Pd₂(dba)₃), substrates (aryl halide & boronic acid), base (K₃PO₄, Cs₂CO₃), solvent (toluene/water 4:1 or dioxane).

Workflow:

- Plate Preparation: In a nitrogen-filled glovebox, dispense ligands (0.022 µmol) and Pd(OAc)₂ (0.01 µmol) into wells to form in situ catalysts (L:Pd = 2.2:1).

- Reaction Initiation: Using an automated liquid handler, add substrate stock solution (aryl halide, 10 µmol; boronic acid, 12 µmol; base, 20 µmol in 100 µL solvent).

- Reaction Conditions: Seal plate, transfer to a pre-heated orbital shaker, and agitate at 80°C for 18 hours.

- Quenching & Analysis: Cool plate, add 100 µL of acetonitrile with internal standard (dodecane). Mix thoroughly. Analyze yields via UPLC-MS with a 3-minute fast gradient method.

Protocol 3: Kinetic Profiling for Optimal Catalyst

Procedure:

- Prepare a 10 mL reaction flask with magnetic stirrer under N₂. Charge with Pd/ligand complex (1 µmol), aryl halide (1 mmol), boronic acid (1.2 mmol), base (2 mmol), and solvent (5 mL).

- Immerse flask in pre-heated oil bath (desired T, e.g., 50°C). Start timer.

- At defined time intervals (1, 3, 5, 10, 15, 30, 60, 120 min), withdraw 50 µL aliquots via syringe.

- Immediately quench aliquots in 450 µL of chilled acetonitrile with internal standard.

- Analyze aliquot composition by GC-FID or HPLC. Plot [Product] vs. time to determine initial rate and TOF.
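One simple way to turn the [Product] vs. time data into an initial rate is a least-squares line through the earliest time points, where the profile is still approximately linear. The sketch below assumes that approach; the number of points used, the function names, and the example concentrations are illustrative. The catalyst concentration of 0.2 mM corresponds to the protocol's 1 µmol of Pd complex in 5 mL of solvent.

```python
def least_squares_slope(x, y):
    """Slope of the ordinary least-squares line through the points (x, y)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("need at least two distinct time points")
    return sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx

def initial_tof_per_hour(times_min, product_mM, catalyst_mM, n_points=3):
    """Initial rate (mM/min) from the first n_points, converted to TOF in h^-1."""
    rate_mM_per_min = least_squares_slope(times_min[:n_points], product_mM[:n_points])
    return rate_mM_per_min * 60.0 / catalyst_mM

# Made-up product concentrations (mM) at the first sampling times (min).
tof = initial_tof_per_hour(times_min=[1, 3, 5, 10], product_mM=[2.0, 6.0, 10.0, 15.0],
                           catalyst_mM=0.2)
```

If the early points show an induction period, for example while Pd(II) is reduced to Pd(0), the fitting window should be shifted to the linear region after it.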

Diagrams

Title: Property-Guided Catalyst Optimization Workflow

Title: Key Steps in Pd-Catalyzed Suzuki-Miyaura Coupling

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Catalyst Optimization

| Item | Function & Rationale | Example/Specification |

|---|---|---|

| Palladium Precursors | Source of active Pd(0). Choice affects initiation kinetics. | Pd(OAc)₂ (air-stable), Pd₂(dba)₃ (highly reactive), G3 XPhos Pd pre-catalyst. |

| Phosphine Ligand Library | Modular tunability of sterics/electronics. Core to optimization. | Buchwald biarylphosphines (SPhos, XPhos), N-heterocyclic carbenes (IMes·HCl). |

| Inert Atmosphere Equipment | Prevents oxidation of air-sensitive Pd(0) and phosphine ligands. | Glovebox (N₂, <0.1 ppm O₂) or Schlenk line with freeze-pump-thaw degassing. |

| HTE Reaction Blocks | Enables parallel synthesis for rapid empirical screening. | 96-well glass-coated or polymer blocks, with sealing pierceable lids. |

| Automated Liquid Handler | Ensures precision and reproducibility in reagent dispensing for HTE. | Positive displacement or syringe-based systems for µL-scale volumes. |

| Rapid Analysis System | High-throughput quantification of reaction yields. | UPLC-MS with autosampler and <3 min run methods, or GC with plate sampler. |

| Computational Software | Calculates molecular descriptors and runs property-guided generation. | Python with RDKit, Spartan or Gaussian for DFT, QSAR modeling libraries. |

| Deuterated Solvents for NMR | For detailed mechanistic studies and reaction monitoring. | Toluene-d₈, THF-d₈, with NMR tubes fitted with J. Young valves. |

This work presents a case study within a broader thesis on Applying Property-Guided Generation for Catalyst Activity Optimization. Traditional biocatalysis using native enzymes for synthesizing drug metabolites often faces limitations in stability, substrate scope, and cost. This study explores the de novo design and optimization of synthetic enzyme mimics—specifically, helical peptoid-based catalysts—for the oxidative metabolism of a model drug, Diclofenac. We employ a computational property-guided generation framework to design catalyst libraries predicted to enhance the yield of the primary 4'-hydroxylated metabolite.

Table 1: Performance Metrics of Top-Generated Peptoid Catalysts vs. Control

| Catalyst ID | Generation Cycle | Predicted Binding Affinity (ΔG, kcal/mol) | Experimental Conversion (%) | 4'-OH Selectivity (%) | Turnover Frequency (h⁻¹) |

|---|---|---|---|---|---|

| P450-BM3 (Wild-Type) | N/A | -8.2 | 92 | 85 | 280 |

| Peptoid-Control (P-C1) | 0 (Baseline) | -5.1 | 15 | 62 | 12 |

| Peptoid-Opt-24 | 3 | -9.5 | 88 | 94 | 210 |

| Peptoid-Opt-17 | 3 | -8.9 | 79 | 89 | 165 |

| Fe-Porphyrin (Heme Mimic) | N/A | N/A | 45 | 70 | 95 |

Table 2: Property-Guided Generation Optimization Parameters

| Parameter | Value/Range | Optimization Target |

|---|---|---|

| Generation Algorithm | VAE + Property Predictor | N/A |

| Guided Property 1 | Docking Score (ΔG) | Minimize (< -9.0 kcal/mol) |

| Guided Property 2 | Heme-Iron Coordination Geometry | Square Planar |

| Guided Property 3 | LogP (Peptoid Core) | 2.0 - 4.0 |

| Library Size per Generation | 500 designs | N/A |

| Experimental Validation Batch | Top 5 designs per cycle | N/A |

Experimental Protocols

Protocol 3.1: Computational Generation & Screening of Peptoid Catalysts

Objective: To generate and virtually screen peptoid sequences for optimal Diclofenac binding and reaction geometry.

Materials: Property-guided generative model (software), molecular docking suite, peptoid building block library.

Procedure:

- Initialization: Train a Variational Autoencoder (VAE) on a dataset of 10,000 known functional peptoid structures.

- Property Guidance: Integrate a multilayer perceptron (MLP) predictor trained to estimate binding ΔG from peptoid sequence and 3D conformation.

- Latent Space Sampling: Sample the VAE latent space, biasing sampling towards regions where the property predictor outputs ΔG < -8.5 kcal/mol.

- Sequence Decoding: Decode sampled latent vectors into novel peptoid sequences (length: 12 residues).

- Docking & Filtering: Dock each generated peptoid, modeled around a central Fe(III)-porphyrin cofactor, to Diclofenac. Filter for poses that position the 4'-carbon within 3.5 Å of the heme iron-oxo species.
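The biased sampling and distance filter can be illustrated with a simple rejection-sampling sketch. This is only one of several ways to bias latent sampling. The predictor here is a hypothetical linear stand-in for the trained MLP, the latent dimension is arbitrary, and the coordinates in the distance check are made-up values.

```python
import math
import random

def sample_biased_latents(predict_dg, n_keep, dim, threshold=-8.5,
                          max_tries=100_000, seed=0):
    """Draw z ~ N(0, I) and keep only samples whose predicted dG is below the threshold."""
    rng = random.Random(seed)
    kept = []
    for _ in range(max_tries):
        z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        if predict_dg(z) < threshold:
            kept.append(z)
            if len(kept) == n_keep:
                break
    return kept

def within_reach(carbon_xyz, iron_oxo_xyz, cutoff_angstrom=3.5):
    """True if the substrate 4'-carbon lies within the cutoff of the iron-oxo position."""
    return math.dist(carbon_xyz, iron_oxo_xyz) <= cutoff_angstrom

def toy_predictor(z):
    """Hypothetical stand-in for the MLP: dG in kcal/mol as a linear function of z[0]."""
    return -7.0 - 1.5 * z[0]

latents = sample_biased_latents(toy_predictor, n_keep=10, dim=8)
ok_pose = within_reach((0.0, 0.0, 3.0), (0.0, 0.0, 0.0))
```

Rejection sampling becomes inefficient when the target region is rare under the prior; gradient-based or Bayesian optimization in latent space are common alternatives in that case.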

Protocol 3.2: Solid-Phase Synthesis of Peptoid Catalysts

Objective: To synthesize the top-ranked peptoid catalysts identified from computational screening.

Materials: Rink Amide resin, Bromoacetic acid, N,N'-Diisopropylcarbodiimide (DIC), Diverse primary amines, Dichloromethane (DCM), Dimethylformamide (DMF), Piperidine, Trifluoroacetic acid (TFA).

Procedure:

- Resin Preparation: Swell 100 mg of Rink Amide resin (0.1 mmol) in DCM for 30 minutes.

- Deprotection: Remove Fmoc group with 20% piperidine in DMF (2 x 5 min).

- Bromoacetylation: Couple bromoacetic acid (5 eq) using DIC (5 eq) in DMF for 45 min.

- Amination: Displace bromide by adding a selected primary amine (5 eq) in DMF. React for 60 min.

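- Iteration: Repeat the bromoacetylation and amination steps (steps 3-4) for each of the 12 designed residues. Fmoc deprotection (step 2) is needed only once, on the starting resin, because the submonomer cycle does not introduce a new Fmoc group.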

- Cleavage & Purification: Cleave peptoid from resin with 95% TFA/2.5% H₂O/2.5% Triisopropylsilane for 2 hours. Precipitate in cold diethyl ether, purify via reverse-phase HPLC, and confirm by LC-MS.

Protocol 3.3: Catalytic Activity Assay for Diclofenac Hydroxylation

Objective: To experimentally test the hydroxylation activity and selectivity of synthesized peptoid catalysts.

Materials: Synthesized peptoid catalyst (5 µM), Diclofenac sodium salt (100 µM), Fe(III)-protoporphyrin IX (5 µM), Sodium dithionite (1 mM), H₂O₂ (0.5 mM), Phosphate buffer (50 mM, pH 7.4), Acetonitrile (HPLC grade).

Procedure:

- Assembly: In a 1 mL reaction vial, mix peptoid catalyst and Fe(III)-protoporphyrin IX in buffer. Incubate 15 min to allow cofactor incorporation.

- Reduction: Add sodium dithionite (from fresh stock) and incubate for 2 min under N₂ to reduce Fe(III) to Fe(II).

- Initiation: Add Diclofenac substrate, followed by H₂O₂ to initiate the reaction. Final volume: 500 µL.

- Quenching: After 30 min at 37°C, quench the reaction with 500 µL of ice-cold acetonitrile.

- Analysis: Centrifuge at 14,000 rpm for 10 min. Analyze supernatant via UPLC-MS/MS (C18 column, gradient elution with water/acetonitrile + 0.1% formic acid). Quantify Diclofenac consumption and 4'-OH-Diclofenac formation using standard curves.
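The quantities measured in the analysis step can be turned into conversion, selectivity, and turnover figures along the following lines. The protocol does not state how these values are calculated, so the definitions below are common end-point conventions and should be read as assumptions. The input concentrations are hypothetical and are not taken from the results table.

```python
def assay_metrics(s0_uM, s_final_uM, product_uM, catalyst_uM, time_h):
    """End-point metrics from standard-curve concentrations (all in µM).

    Assumed definitions (not given in the protocol):
      conversion  = substrate consumed / initial substrate
      selectivity = 4'-OH product formed / substrate consumed
      TON         = product formed / catalyst
      TOF         = TON / reaction time (an average, not an initial rate)
    """
    consumed = s0_uM - s_final_uM
    conversion = 100.0 * consumed / s0_uM
    selectivity = 100.0 * product_uM / consumed if consumed > 0 else 0.0
    ton = product_uM / catalyst_uM
    return {
        "conversion_pct": conversion,
        "selectivity_pct": selectivity,
        "ton": ton,
        "tof_per_h": ton / time_h,
    }


# Hypothetical run: 100 µM substrate, 40 µM left, 45 µM 4'-OH product,
# 5 µM catalyst, 30 min reaction time.
metrics = assay_metrics(100.0, 40.0, 45.0, 5.0, 0.5)
```

An end-point TOF averages over the whole reaction and usually underestimates the initial rate. Reporting an initial-rate TOF would require time-course sampling, which this protocol does not describe.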

Visualizations

Title: Property-Guided Optimization Cycle for Enzyme Mimics

Title: Solid-Phase Peptoid Synthesis Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Enzyme Mimic Synthesis & Assay

| Item | Function/Benefit | Example/Catalog Note |
|---|---|---|
| Fe(III)-Protoporphyrin IX | Core heme-mimetic cofactor; provides reactive iron-oxo center for O-atom transfer. | Sigma-Aldrich, 08544. Must be stored dark, -20°C. |
| Diverse Primary Amine Library | Building blocks for peptoid side chains; determines substrate binding pocket shape and hydrophobicity. | Commercially available sets (e.g., Sigma-Aldrich 743487). |
| Rink Amide Resin | Solid support for iterative peptoid synthesis; enables facile filtration and washing steps. | 100-200 mesh, loading 0.1-0.8 mmol/g. |
| Bromoacetic Acid & DIC | Activation/coupling reagents for the 'submonomer' peptoid synthesis method. | High purity (>99%) required for efficient coupling. |
| Sodium Dithionite | Reducing agent to generate active Fe(II) state of the catalyst prior to reaction with oxidant. | Prepare fresh solution in degassed buffer for each use. |
| Diclofenac Sodium Salt | Model drug substrate for cytochrome P450-like C-H hydroxylation reactions. | Widely available. Prepare stock in methanol or buffer. |
| UPLC-MS/MS System w/ C18 Column | Essential analytical tool for quantifying substrate conversion and metabolite selectivity with high sensitivity. | e.g., Waters ACQUITY UPLC with Xevo TQ-S. |

Overcoming Practical Hurdles in AI-Driven Catalyst Discovery

Addressing Mode Collapse and Low Diversity in Generated Candidates

A critical challenge in applying property-guided generation to catalyst activity optimization is mode collapse: the generative model fails to explore the full chemical space and instead produces a limited set of similar candidates. This severely limits the discovery of novel, high-performance catalysts. These Application Notes provide protocols to diagnose and mitigate mode collapse and to evaluate the resulting candidates, so that generation yields diverse, optimized structures.

Diagnosis & Quantitative Assessment

Effective intervention requires robust metrics to quantify diversity and mode collapse. The following table summarizes key diagnostic metrics.

Table 1: Quantitative Metrics for Assessing Mode Collapse and Diversity

| Metric | Formula / Description | Ideal Range | Interpretation in Catalyst Context |
|---|---|---|---|
| Internal Diversity | (1 / (N(N-1))) Σ over all pairs i ≠ j of (1 - Tanimoto(FPᵢ, FPⱼ)) | >0.3 (FP dependent) | Measures pairwise structural dissimilarity within a generated set. Low values indicate clustering. |
| Uniqueness Rate | (Number of Unique Structures / Total Generated) * 100% | ~100% | Percentage of non-duplicate molecules. Collapsed modes yield low rates. |
| Nearest Neighbor Tanimoto (NN-T) | Mean max Tanimoto similarity of each generated molecule to a reference set (e.g., training data). | <0.4 (for novelty) | High mean NN-T suggests replication of training data, not exploration. |
| Property Distribution Divergence | KL-divergence or Wasserstein distance between property distributions (e.g., MW, logP) of generated vs. training set. | ~0 (Matched) | Significant divergence may indicate failure to model all property modes. |
| Fréchet ChemNet Distance (FCD) | Distance between multivariate Gaussian fits of penultimate layer activations of ChemNet for generated and reference sets. | Lower is better | A comprehensive metric for both diversity and quality of biological activity profiles. |

Experimental Protocols

Protocol 1: Diagnostic Workflow for Mode Collapse

Objective: Systematically evaluate a generative model's output for signs of mode collapse and low diversity.

- Model Output Generation: Generate a large set of candidates (e.g., N=10,000) using the trained generative model.

- Standardization & Deduplication: Standardize and canonicalize the SMILES, record the number of structures before deduplication (needed for the Uniqueness Rate), then remove duplicates.

- Descriptor Calculation: Compute molecular fingerprints (e.g., ECFP4) and key physicochemical properties (Molecular Weight, LogP, Number of Rotatable Bonds) for the unique set.

- Metric Computation:

  - Calculate Internal Diversity (Table 1) on the generated set.

  - Calculate Uniqueness Rate.

  - Using a reference set (e.g., the training data), compute NN-T and Property Distribution Divergence (Wasserstein distance for MW, LogP).

  - Compute FCD score against a broad bioactive molecule database (e.g., GuacaMol benchmark set).

- Visualization: Plot kernel density estimates for key properties (generated vs. training). A multi-peaked training distribution reduced to a single peak in generated data is a clear indicator of mode collapse.
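The metric computations in Protocol 1 can be sketched in a few lines. To keep the example dependency-free, fingerprints are represented as plain Python sets of on-bit indices and the SMILES strings are assumed to be canonical already. In a real workflow a cheminformatics toolkit such as RDKit would supply both. The function names are illustrative, and FCD is omitted because it requires the pretrained ChemNet model.

```python
from itertools import combinations


def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def internal_diversity(fps):
    """Mean (1 - Tanimoto) over all distinct pairs in the generated set."""
    pairs = list(combinations(fps, 2))
    if not pairs:
        return 0.0
    return sum(1.0 - tanimoto(a, b) for a, b in pairs) / len(pairs)


def uniqueness_rate(canonical_smiles):
    """Percentage of non-duplicate structures; expects canonical SMILES."""
    return 100.0 * len(set(canonical_smiles)) / len(canonical_smiles)


def mean_nn_tanimoto(generated_fps, reference_fps):
    """Mean of each generated molecule's highest similarity to the reference set."""
    best = [max(tanimoto(g, r) for r in reference_fps) for g in generated_fps]
    return sum(best) / len(best)


def wasserstein_1d(xs, ys):
    """1-D Wasserstein-1 distance for two samples of equal size."""
    if len(xs) != len(ys):
        raise ValueError("this sketch only handles equally sized samples")
    return sum(abs(x - y) for x, y in zip(sorted(xs), sorted(ys))) / len(xs)


# Toy data: two identical fingerprints and one unrelated fingerprint.
generated = [{1, 2, 3}, {1, 2, 3}, {4, 5, 6}]
diversity = internal_diversity(generated)
```

Averaging over unordered pairs gives the same value as the double sum over i ≠ j in Table 1, because Tanimoto similarity is symmetric.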

Protocol 2: Mitigation via Property-Guided Reinforcement Learning (RL)

Objective: Use predicted catalyst activity (property) as a reward to guide exploration and escape collapsed modes.

- Reward Model Training: Train a separate, accurate regressor to predict the target catalytic activity (e.g., turnover frequency, TOF) from molecular structure.

- RL Fine-Tuning Setup:

  - Agent: The pre-trained generative model (e.g., RNN, GPT).

  - Action: Selecting the next token in a SMILES sequence.

  - State: The current sequence of tokens.

  - Reward: Computed upon generating a valid, complete SMILES. The reward is a weighted sum:

    R = w₁ * Activity_Prediction + w₂ * Diversity_Penalty

  - Diversity Penalty: Negative reward proportional to the Tanimoto similarity of the new molecule to the top-N molecules generated in the current batch.

- Training Loop: Employ a policy gradient method (e.g., REINFORCE, PPO) to update the generative model to maximize the expected reward, encouraging high-activity and diverse structures.

- Output Sampling: Use high-temperature sampling or nucleus sampling during and after fine-tuning to encourage broader exploration of the chemical space.
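The reward used in Protocol 2 can be sketched as follows. Several details are assumptions, because the protocol leaves them open: the penalty uses the maximum similarity to the top-N set (a mean would also fit the description), invalid SMILES receive a reward of zero, and the weights are placeholders. Fingerprints are plain sets of on-bit indices so the example runs without a cheminformatics toolkit.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def diversity_penalty(fp, top_fps):
    """Negative of the highest similarity to the current top-N molecules.

    Assumption: the maximum is used as the aggregate.
    """
    if not top_fps:
        return 0.0
    return -max(tanimoto(fp, t) for t in top_fps)


def reward(fp, predicted_activity, top_fps, w1=1.0, w2=0.5, is_valid=True):
    """R = w1 * Activity_Prediction + w2 * Diversity_Penalty.

    Invalid or incomplete SMILES get zero reward (an assumption; the
    protocol only says the reward is computed for valid, complete SMILES).
    """
    if not is_valid:
        return 0.0
    return w1 * predicted_activity + w2 * diversity_penalty(fp, top_fps)


top_n = [{1, 2, 3, 4}]
r_duplicate = reward({1, 2, 3, 4}, predicted_activity=0.8, top_fps=top_n)
r_novel = reward({7, 8}, predicted_activity=0.8, top_fps=top_n)
```

With equal predicted activity, the structurally novel molecule receives the higher reward, which is the behaviour the diversity term is meant to encourage. The ratio w₂/w₁ controls how strongly diversity is traded against predicted activity and generally needs tuning.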

Protocol 3: Evaluation of Optimized Candidates

Objective: Validate the performance and diversity of the final generated catalyst candidates.

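- Virtual Screening Filter: Apply relevant chemical filters (e.g., medicinal chemistry filters and a synthetic accessibility cutoff of 3.0) to the RL-optimized set. On the standard SAscore scale of 1 (easy) to 10 (hard), lower values indicate easier synthesis, so the cutoff acts as an upper bound unless the score has been inverted or rescaled.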

- Clustering: Cluster the filtered candidates using Butina clustering based on fingerprint similarity to select representative molecules from distinct structural classes.

- Multi-Objective Ranking: Rank candidates within each cluster by a composite score balancing predicted activity, novelty (1 - NN-T), and desirable ADMET properties.

- Experimental Prioritization: Select the top 3-5 candidates from 3-5 different clusters for in silico docking with the catalyst-substrate complex (if a structure is available), followed by experimental synthesis and testing.
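The clustering and per-cluster selection steps of Protocol 3 can be sketched as below. This is a simplified, pure-Python variant of Taylor-Butina clustering written for illustration: it recomputes neighbour counts after each cluster is removed and takes a similarity cutoff, whereas toolkit implementations typically take a distance cutoff. The cutoff value, the fingerprints (sets of on-bit indices), and the composite scores are all hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def butina_clusters(fps, cutoff=0.55):
    """Greedy clustering: repeatedly take the molecule with the most
    unassigned neighbours (similarity >= cutoff) as a cluster centroid."""
    n = len(fps)
    neighbours = [
        {j for j in range(n) if j != i and tanimoto(fps[i], fps[j]) >= cutoff}
        for i in range(n)
    ]
    unassigned = set(range(n))
    clusters = []
    while unassigned:
        # Ties are broken in favour of the lowest index.
        centroid = max(sorted(unassigned),
                       key=lambda i: len(neighbours[i] & unassigned))
        members = [centroid] + sorted(neighbours[centroid] & unassigned)
        clusters.append(members)
        unassigned -= set(members)
    return clusters


def pick_representatives(clusters, scores, per_cluster=1):
    """Return the highest-scoring member indices from each cluster."""
    return [
        sorted(cluster, key=lambda i: scores[i], reverse=True)[:per_cluster]
        for cluster in clusters
    ]


fps = [{1, 2, 3, 4}, {1, 2, 3, 5}, {1, 2, 3, 6}, {10, 11, 12}, {10, 11, 12, 13}]
composite_scores = [0.2, 0.9, 0.5, 0.4, 0.7]  # hypothetical composite scores
clusters = butina_clusters(fps)
chosen = pick_representatives(clusters, composite_scores)
```

Selecting within clusters rather than from a single global ranking keeps structurally distinct scaffolds in the experimental batch even when one scaffold dominates the top of the score list.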

Visualizations

Diagnosis and Mitigation Workflow for Mode Collapse

Property-Guided RL Loop for Diversity

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Property-Guided Generation

| Item / Solution | Function in Catalyst Optimization | Example Vendor/Resource |
|---|---|---|
| GuacaMol Benchmark Suite | Provides standardized metrics (incl. FCD, uniqueness) and benchmarks to evaluate generative model performance and diversity. | BenevolentAI (GitHub: "BenevolentAI/guacamol") |
| RDKit | Open-source cheminformatics toolkit for fingerprint generation, molecular descriptors, standardization, and clustering. | RDKit.org |
| Junction Tree VAE (JT-VAE) | A generative model architecture specifically designed for molecules, often less prone to invalid structure generation. | Open-Source (GitHub) |
| DeepChem | Library providing hyperparameter-optimized molecular property prediction models for use as reward functions. | DeepChem.io |
| Proximal Policy Optimization (PPO) | A stable RL algorithm implementation suitable for fine-tuning sequence-based generative models. | OpenAI / Stable-Baselines3 |
| MOSES Benchmarking Platform | Provides datasets, metrics, and baselines specifically for molecular generation, including diversity assessments. | GitHub: "molecularsets/moses" |
| Synthetic Accessibility Score (SAscore) | A score (1 = easy to 10 = hard to synthesize) used to filter out unrealistically complex molecules, ensuring generated candidates are synthetically feasible. | RDKit Contrib (SA_Score) |

Balancing Exploration vs. Exploitation in the Chemical Space Search

In property-guided generation for catalyst activity optimization, the strategic balance between exploring novel chemical regions and exploiting known high-performing areas is a central computational challenge. This section provides application notes and protocols for implementing this balance in virtual screening and generative model workflows for catalyst design.

Core Concepts & Quantitative Framework

The trade-off is often quantified using metrics from multi-armed bandit algorithms and molecular property distributions.

Table 1: Quantitative Metrics for Balancing Strategies

| Metric | Formula/Description | Interpretation in Chemical Search |
|---|---|---|
| Upper Confidence Bound (UCB) | Score = μᵢ + c * √(ln N / nᵢ) | μᵢ: mean property of region i; N: total iterations; nᵢ: samples from region i; c: exploration weight. |
| Thompson Sampling | Draw from posterior p(μᵢ \| Data), select max. | Bayesian; balances based on uncertainty. |
| Diversity Score | 1 - (Avg. pairwise Tanimoto similarity) | High score = high exploration of diverse scaffolds. |
| Exploitation Ratio | (Iterations on top-5% scaffolds) / (Total iterations) | >0.7 indicates heavy exploitation; <0.3 indicates heavy exploration. |
| Expected Improvement (EI) | E[max(0, P_new - P_best)] | Used in Bayesian optimization; guides exploitation of promising leads. |
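Two of the scores in Table 1 can be written out directly. The UCB function follows the tabulated formula, with unsampled regions given an infinite score so that each is visited at least once (a common convention, assumed here). The Expected Improvement function uses the closed form for a Gaussian predictive distribution. That distributional assumption is not stated in the table, and the function names are illustrative.

```python
import math


def ucb_scores(means, counts, c=1.0):
    """Score_i = mu_i + c * sqrt(ln N / n_i), with N the total sample count.

    Regions with n_i == 0 get +inf (assumed convention).
    """
    total = sum(counts)
    return [
        float("inf") if n == 0 else mu + c * math.sqrt(math.log(total) / n)
        for mu, n in zip(means, counts)
    ]


def expected_improvement(mu, sigma, best):
    """E[max(0, P_new - P_best)] for P_new ~ Normal(mu, sigma^2)."""
    if sigma <= 0:
        return max(0.0, mu - best)
    z = (mu - best) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (mu - best) * cdf + sigma * pdf


# Region 0 has the best mean but is heavily sampled; region 1 is
# under-sampled, so its exploration bonus is larger.
scores = ucb_scores(means=[0.8, 0.5], counts=[50, 5], c=1.0)
next_region = scores.index(max(scores))
```

Setting `c = 0` reduces UCB to pure exploitation of the best observed mean, while larger values shift sampling toward sparsely visited regions.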

Application Notes

Note 1: Adaptive Strategy in Generative Models

- Context: Using a recurrent neural network (RNN) or variational autoencoder (VAE) for molecular generation.

- Protocol: The probability of sampling from a "novel" region (exploration) versus a "refinement" region (exploitation) is adjusted every 1000 generations based on the improvement rate of the objective property (e.g., predicted catalytic turnover frequency).

- Implementation: If the 100-generation moving average of property improvement falls below 2%, the exploration weight c in UCB is increased by 20%.
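The adaptive rule in Note 1 can be sketched as a small scheduler. The window (100 generations), check interval (1000 generations), 2% threshold, and 20% increase come from the note. The class name and the reading of "improvement" as a per-generation relative improvement of the objective expressed as a fraction are assumptions.

```python
from collections import deque


class AdaptiveExploration:
    """Raises the UCB exploration weight c when progress stalls."""

    def __init__(self, c=1.0, window=100, check_every=1000,
                 threshold=0.02, boost=1.2):
        self.c = c
        self.check_every = check_every
        self.threshold = threshold
        self.boost = boost
        self.recent = deque(maxlen=window)  # last `window` improvements
        self.generation = 0

    def record(self, improvement):
        """Log one generation's improvement and return the current c."""
        self.recent.append(improvement)
        self.generation += 1
        if self.generation % self.check_every == 0:
            moving_avg = sum(self.recent) / len(self.recent)
            if moving_avg < self.threshold:
                self.c *= self.boost  # increase exploration by 20%
        return self.c


schedule = AdaptiveExploration()
for _ in range(1000):
    schedule.record(0.01)  # stalled: 1% average improvement
# schedule.c has now been raised once, from 1.0 to about 1.2
```

The note specifies only when c is increased. Without a matching decay rule or an upper bound, c can only grow over a long run, so a practical implementation would probably add one of the two.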

Note 2: Hierarchical Search for Catalyst Space