Revolutionizing Catalyst Design: How Reaction-Conditioned Generative Models Are Accelerating Drug Discovery

This article explores the transformative impact of reaction-conditioned generative models on catalyst design, a critical field for pharmaceutical development.

Abstract

This article explores the transformative impact of reaction-conditioned generative models on catalyst design, a critical field for pharmaceutical development. It provides a comprehensive overview for researchers and drug development professionals, covering the foundational principles of these AI models, their specific methodologies and applications in molecular catalysis, strategies for troubleshooting and optimizing model performance, and rigorous validation through case studies and comparative analyses. By synthesizing the latest advancements, this review aims to equip scientists with the knowledge to leverage these powerful tools for designing more efficient and selective catalysts, ultimately accelerating the discovery and optimization of therapeutic compounds.

The New Paradigm: Foundations of AI-Driven Catalyst Design

The development of high-performance catalysts is crucial for advancing chemical synthesis and pharmaceutical development. Traditional catalyst design relies on empirical trial-and-error and on computationally intensive quantum chemical calculations, which makes it a significant bottleneck in discovery timelines [1] [2]. Artificial intelligence (AI), and reaction-conditioned generative models in particular, is changing this by supporting data-driven exploration of catalytic chemical space. These models enable inverse design: catalyst structures are generated from desired reaction conditions and performance metrics, moving beyond the limitations of traditional forward design [3]. This Application Note details the implementation of reaction-conditioned generative models for catalyst design, providing structured protocols, performance data, and essential resource guidance for research scientists.

Reaction-conditioned generative models represent a specialized class of AI architectures that learn the complex relationships between catalyst structures, reaction components (reactants, reagents, products), and reaction outcomes. By conditioning the generation process on specific reaction contexts, these models can propose novel catalyst candidates tailored for a particular chemical transformation.

The core architecture employed in frameworks like CatDRX is a Conditional Variational Autoencoder (CVAE) [1] [3]. This model jointly learns structural representations of catalysts and associated reaction components to capture their influence on catalytic performance. The architecture consists of three primary modules:

- Catalyst Embedding Module: Processes the catalyst molecular structure (e.g., via graph neural networks) to create a numerical representation.

- Condition Embedding Module: Encodes other reaction components, including reactants, reagents, products, and reaction properties (e.g., time) into a condition vector.

- Autoencoder Module: Combines the catalyst and condition embeddings to map the input into a latent space. A sampled latent vector, concatenated with the condition embedding, guides the decoder in reconstructing (or generating) catalyst molecules and informs a predictor for performance estimation (e.g., yield) [1].

This architecture is typically pre-trained on broad reaction databases, such as the Open Reaction Database (ORD), and subsequently fine-tuned for specific downstream catalytic applications [1].
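The data flow through the three modules can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the CatDRX implementation: the embedding sizes, the single dense layers, and all function names are hypothetical, the weights are random, and the "decoder" emits a continuous feature vector rather than a molecule.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
CAT_DIM, COND_DIM, LATENT_DIM, OUT_DIM = 16, 8, 4, 16


def linear(in_dim, out_dim):
    """Return randomly initialised weights and a zero bias for one dense layer."""
    return rng.normal(0.0, 0.1, (in_dim, out_dim)), np.zeros(out_dim)


W_mu, b_mu = linear(CAT_DIM + COND_DIM, LATENT_DIM)
W_lv, b_lv = linear(CAT_DIM + COND_DIM, LATENT_DIM)
W_dec, b_dec = linear(LATENT_DIM + COND_DIM, OUT_DIM)
W_pred, b_pred = linear(LATENT_DIM + COND_DIM, 1)


def encode(cat_emb, cond_emb):
    """Map the concatenated catalyst and condition embeddings to (mu, log-variance)."""
    h = np.concatenate([cat_emb, cond_emb], axis=-1)
    return h @ W_mu + b_mu, h @ W_lv + b_lv


def reparameterize(mu, logvar):
    """Sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


def decode_and_predict(z, cond_emb):
    """Decoder and predictor both see the latent vector joined with the condition."""
    zc = np.concatenate([z, cond_emb], axis=-1)
    recon = zc @ W_dec + b_dec
    pred = (zc @ W_pred + b_pred).squeeze(-1)
    return recon, pred


# Training-style pass: encode a known catalyst under its reaction condition.
cat_emb = rng.standard_normal((2, CAT_DIM))
cond_emb = rng.standard_normal((2, COND_DIM))
mu, logvar = encode(cat_emb, cond_emb)
z = reparameterize(mu, logvar)
recon, pred = decode_and_predict(z, cond_emb)

# Generation-style pass: no catalyst input, z is drawn from the prior.
z_prior = rng.standard_normal((2, LATENT_DIM))
generated, predicted = decode_and_predict(z_prior, cond_emb)
```

The point of the sketch is the wiring: the condition embedding enters twice, once before the encoder and once before the decoder and predictor, which is what lets the same network generate for a new reaction at inference time.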

Application Protocols

Protocol: Implementing a Catalyst Generation Workflow Using CatDRX

This protocol outlines the steps for employing a reaction-conditioned generative model for the discovery of novel catalysts, using the CatDRX framework as a representative example [1].

Purpose: To generate novel, valid catalyst candidates with desired properties for a specific chemical reaction by leveraging a pre-trained and fine-tuned conditional variational autoencoder.

Reagents and Equipment

- Hardware: Workstation with GPU (e.g., NVIDIA A100 or RTX 3090) for model training and inference.

- Software: Python 3.8+, a deep learning framework (PyTorch or TensorFlow, as required by the model implementation), and RDKit.

- Data: Pre-training dataset (e.g., Open Reaction Database), Target fine-tuning dataset (specific catalytic reactions with yield/activity data).

Procedure

Data Preprocessing and Conditioning

- Input Representation: Represent catalyst molecules as SMILES strings or molecular graphs. Represent reaction components (reactants, reagents, products) as SMILES strings or reaction fingerprints (RXNFPs) [1].

- Feature Encoding: Encode catalyst structures using atom/bond features and adjacency matrices. Encode reaction conditions into a continuous vector using dedicated neural network encoders.

- Data Splitting: Split the fine-tuning dataset into training, validation, and test sets (e.g., 80/10/10).

Model Pre-training and Fine-Tuning

- Load Pre-trained Weights: Initialize the CatDRX model with weights pre-trained on a broad reaction database (e.g., ORD) [1].

- Fine-tuning: Further train the model on the target catalytic reaction dataset. Jointly optimize the encoder, decoder, and predictor modules using a combined loss function (reconstruction loss + prediction loss).

Catalyst Generation and Optimization

- Conditional Generation: Sample a latent vector and concatenate it with the embedding of the target reaction condition.

- Decoder Inference: Pass the combined vector through the decoder to generate novel catalyst structures in the desired chemical space.

- Latent Space Optimization: Apply optimization techniques (e.g., Bayesian optimization) within the model's latent space, guided by the property predictor, to steer generation toward catalysts with high predicted performance.

Validation and Filtering

- Chemical Validity: Use RDKit to validate the chemical structures of generated catalysts.

- Knowledge Filtering: Apply rules based on reaction mechanisms and expert knowledge to filter implausible candidates [1].

- Computational Validation: Employ Density Functional Theory (DFT) or machine learning interatomic potentials (MLIPs) to validate the catalytic performance and stability of top-ranked candidates [3].

Troubleshooting

- Poor Generation Quality: This may indicate a domain gap. Ensure the fine-tuning dataset is sufficiently large and relevant. Consider data augmentation or adjusting the balance between reconstruction and prediction losses.

- High Prediction Error: Verify the quality and consistency of the target property data (e.g., yield). Perform domain applicability analysis to check the overlap between pre-training and fine-tuning chemical spaces [1].
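One simple way to approach the domain applicability check mentioned above is to measure, for each fine-tuning example, its Tanimoto similarity to the nearest pre-training example. The sketch below assumes fingerprints are available as sets of on-bit indices (for instance from ECFP-style fingerprints); the function names and toy fingerprints are hypothetical, and this is not necessarily the analysis used in [1].

```python
def tanimoto(a, b):
    """Tanimoto similarity between two fingerprints given as sets of on-bit indices."""
    union = a | b
    if not union:
        return 0.0  # two empty fingerprints: treated as dissimilar by convention here
    return len(a & b) / len(union)


def nearest_neighbor_similarity(query_fps, reference_fps):
    """For each query fingerprint, return its highest similarity to any reference."""
    return [max(tanimoto(q, r) for r in reference_fps) for q in query_fps]


# Toy fingerprints (invented bit indices).
pretrain = [{1, 5, 9, 12}, {2, 5, 7}, {3, 4, 9}]
finetune = [{1, 5, 9}, {20, 21, 22}]

sims = nearest_neighbor_similarity(finetune, pretrain)
print(sims)  # [0.75, 0.0]
```

A fine-tuning set whose nearest-neighbor similarities cluster near zero sits largely outside the pre-training chemical space, which is one plausible explanation for high prediction error.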

Protocol: Validating Generated Catalysts Using Computational Chemistry

Purpose: To computationally validate the activity and stability of AI-generated catalyst candidates prior to experimental synthesis.

Procedure

- Structure Optimization: Use DFT to optimize the geometry of the generated catalyst molecule or surface structure.

- Property Calculation:

- Calculate key catalytic descriptors, such as adsorption energies of key reaction intermediates.

- Compute reaction free energy profiles and activation barriers (ΔG‡) for critical steps; for enantioselective reactions, also compute the difference in barriers between the competing stereoisomeric pathways (ΔΔG‡) [1].

- Stability Assessment: Evaluate the thermodynamic stability of the catalyst under reaction conditions. For surfaces, calculate surface energies; for molecular catalysts, assess decomposition pathways.
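For enantioselective reactions, a computed ΔΔG‡ can be translated into an expected enantiomeric excess. The sketch below uses the standard transition-state-theory relation ee = tanh(ΔΔG‡ / 2RT), which assumes the product ratio is set purely by the two competing barriers (kinetic control, no background reaction). The function name is hypothetical.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal mol^-1 K^-1


def enantiomeric_excess(ddg_kcal, temperature_k=298.15):
    """Predicted ee (as a fraction) from the barrier difference ΔΔG‡ in kcal/mol.

    The enantiomer ratio is exp(ΔΔG‡ / RT), so ee = (er - 1) / (er + 1),
    which simplifies to tanh(ΔΔG‡ / (2RT)).
    """
    return math.tanh(ddg_kcal / (2.0 * R_KCAL * temperature_k))


for ddg in (0.5, 1.0, 2.0, 3.0):
    print(f"ddG = {ddg:.1f} kcal/mol -> ee = {100 * enantiomeric_excess(ddg):.1f}%")
```

Because the relation is exponential, errors of a few tenths of a kcal/mol in the computed barriers shift the predicted ee noticeably, which is worth keeping in mind when ranking candidates by DFT.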

Performance Data

The following tables summarize the quantitative performance of the CatDRX model and other generative approaches in key catalyst design tasks.

Table 1: Predictive Performance of CatDRX on Catalytic Activity and Yield [1]

| Dataset | Task Type | RMSE | MAE | Key Performance Insight |

|---|---|---|---|---|

| BH | Yield Prediction | ~0.15 | ~0.10 | Competitive performance, benefits from pre-training data overlap. |

| SM | Yield Prediction | ~0.18 | ~0.12 | Superior performance in yield prediction. |

| AH | Catalytic Activity | ~0.25 | ~0.18 | Competitive performance despite complex chirality; model does not explicitly encode chirality. |

| CC | Catalytic Activity | >0.40 | >0.30 | Reduced performance due to significant domain shift from pre-training data and limited reaction diversity. |
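For readers comparing their own models against Table 1, the two error metrics are computed as follows. The example values are invented for illustration and are not taken from [1].

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: penalises large individual errors more heavily."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error: the average magnitude of the errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Invented yields on a 0-1 scale.
y_true = [0.80, 0.55, 0.10, 0.95]
y_pred = [0.70, 0.60, 0.30, 0.90]

print(f"RMSE = {rmse(y_true, y_pred):.3f}, MAE = {mae(y_true, y_pred):.3f}")
```

RMSE is never smaller than MAE on the same data, and a large gap between the two usually points to a few badly mispredicted reactions rather than uniform error.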

Table 2: Comparison of Generative Model Architectures for Catalyst Design [3]

| Model Type | Complexity | Applications | Key Advantages |

|---|---|---|---|

| Variational Autoencoder (VAE) | Stable to train | CO2RR on alloy catalysts [3] | Good interpretability, efficient latent sampling, property-guided optimization. |

| Generative Adversarial Network (GAN) | Difficult to train | Ammonia synthesis with alloy catalysts [3] | Capable of high-resolution structure generation. |

| Diffusion Model | Computationally expensive but stable | General surface structure generation [3] | Strong exploration capability, accurate generation of realistic structures. |

| Transformer | Scales with sequence length | Two-electron oxygen reduction reaction, 2e⁻ ORR (CatGPT) [3] | Conditional and multi-modal generation, excels with discrete data representations. |

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for AI-Driven Catalyst Design

| Item Name | Function/Application | Example/Note |

|---|---|---|

| Open Reaction Database (ORD) | Large-scale, public repository of reaction data for pre-training generative models. | Provides diverse reaction data crucial for developing robust, generalizable models [1]. |

| Reaction Fingerprints (RXNFPs) | Numerical representation of chemical reactions to analyze and compare reaction spaces. | 256-dimensional embeddings used to assess domain applicability and model transferability [1]. |

| Extended Connectivity Fingerprints (ECFP) | Molecular representation for quantifying catalyst similarity and chemical space coverage. | 2048-bit ECFP4 fingerprints used to analyze the catalyst space of fine-tuning datasets [1]. |

| Density Functional Theory (DFT) | Computational method for validating generated catalysts by calculating energies and properties. | Used as a final validation step; can be accelerated by Machine Learning Interatomic Potentials (MLIPs) [1] [3]. |

| Bird Swarm Optimization Algorithm | Global optimization algorithm used in conjunction with generative models for property-guided search. | Combined with CDVAE to generate over 250,000 candidate structures for CO2RR [3]. |

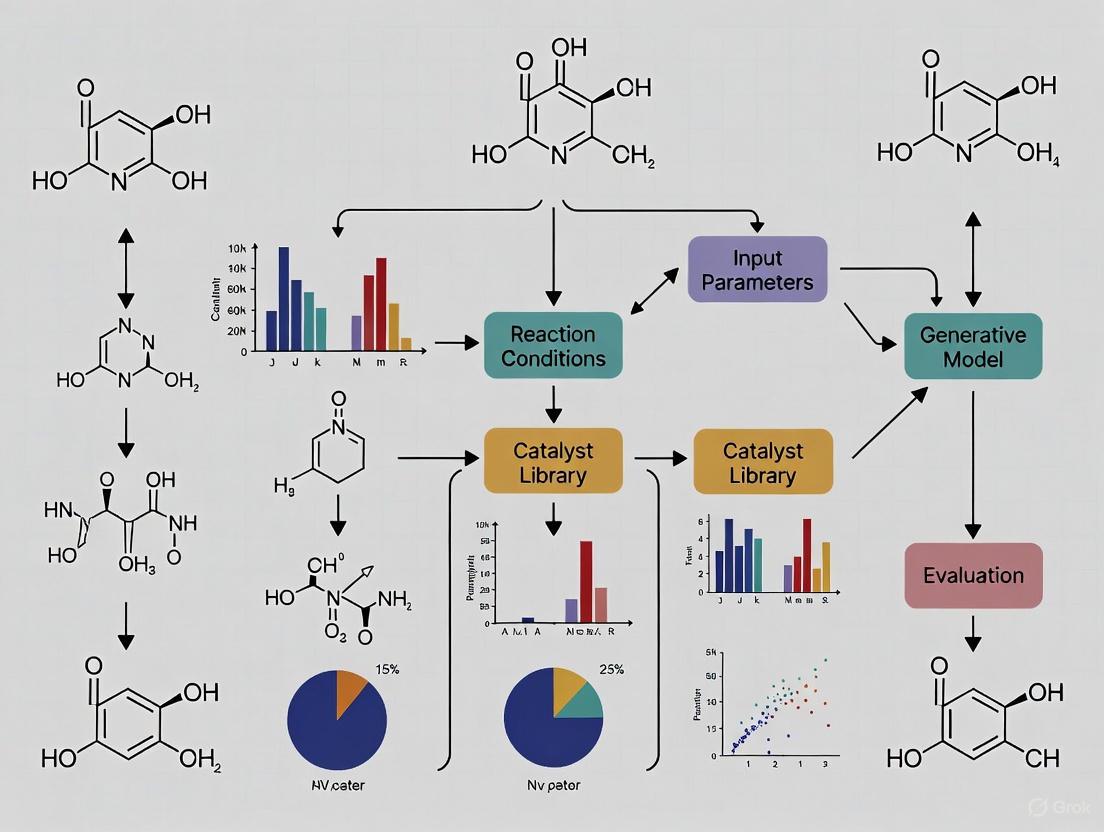

Workflow Integration Diagram

The complete catalyst discovery pipeline, from data preparation to final candidate selection, integrates the generative model with optimization and validation cycles.

The design and discovery of novel catalysts are pivotal for advancing chemical synthesis and pharmaceutical development. Traditional methods, which often rely on trial-and-error or computationally intensive quantum mechanics calculations, are increasingly being supplanted by artificial intelligence (AI)-driven approaches [3] [1]. Among these, generative models have emerged as transformative tools for the inverse design of catalytic materials, enabling researchers to directly generate candidate structures with desired properties [3] [4]. This document details the core architectures—Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Transformers, and Diffusion Models—framed within the context of reaction-conditioned generative models for catalyst design. It provides application notes, experimental protocols, and resource toolkits tailored for research scientists and drug development professionals.

Core Architectures in Catalyst Design

The following table summarizes the core attributes, applications, and challenges of the four primary generative model architectures in catalyst design.

Table 1: Comparative Analysis of Core Generative Architectures for Catalyst Design

| Architecture | Core Principle | Applications in Catalyst Design | Advantages | Challenges |

|---|---|---|---|---|

| Variational Autoencoder (VAE) | Learns a probabilistic latent representation of input data and decodes to generate new data [4] [5]. | Reaction-conditioned catalyst generation (e.g., CatDRX) [1]; prediction of catalytic performance (yield) [1]; exploring catalyst chemical space [3]. | Stable training process [3]; enables efficient latent space sampling and optimization [3]; good interpretability of the latent space [3]. | Can produce blurry or unrealistic outputs [5]; may struggle with complex, high-fidelity data distributions [4]. |

| Generative Adversarial Network (GAN) | Two neural networks (Generator and Discriminator) compete adversarially to produce realistic data [4] [5]. | Design of alloy catalysts for specific reactions (e.g., ammonia synthesis) [3]; high-resolution molecular generation. | Capable of high-resolution and perceptually sharp generation [3] [6]. | Training can be unstable and suffer from mode collapse [4] [5]; requires careful balancing of generator and discriminator [5]. |

| Transformer | Uses self-attention mechanisms to model long-range dependencies and contextual relationships in sequential data [4] [5]. | Conditional and multi-modal generation for reactions (e.g., CatGPT for ORR) [3]; product prediction and retrosynthesis [7]; tokenization of crystal structures for generation [3]. | Excellent at modeling complex, conditional relationships [3]; highly flexible and scalable architecture [4]. | Computationally intensive for long sequences [5]; requires large amounts of training data [4]. |

| Diffusion Model | Iteratively denoises a random signal to generate data, learning to reverse a forward noising process [4] [5]. | Surface structure and adsorption geometry generation [3]; generating complex transition-state structures [3]; high-quality, diverse molecular and material generation. | Strong exploration capability in chemical space [3]; high-quality and diverse output generation [5]; training stability [3]. | Computationally expensive during inference (sampling) [3] [5]; can be slower than other generative approaches [4]. |

Application Notes for Reaction-Conditioned Generation

The paradigm of reaction-conditioned generation represents a significant advancement, moving beyond generating catalysts in isolation to designing them within a specific reactive context. This approach conditions the generative process on key reaction components such as reactants, reagents, products, and reaction time, thereby capturing the complex relationship between a catalyst's structure and its performance in a given chemical transformation [1].

- VAEs for Conditional Design: The CatDRX framework exemplifies this approach. It employs a joint Conditional VAE (CVAE) that simultaneously learns structural representations of catalysts and their associated reaction components. The model is pre-trained on a broad reaction database (e.g., the Open Reaction Database) and can be fine-tuned for specific downstream catalytic tasks. This allows for the simultaneous generation of novel catalysts and prediction of their performance (e.g., yield) when provided with a target reaction's conditions [1] [8].

- Diffusion Models for Surface Exploration: For heterogeneous catalysis, diffusion models have been trained on custom datasets of surface structures to generate diverse and stable atomic-scale configurations. These models can be guided by learned forces to produce realistic adsorption sites and thin-film structures, which is crucial for identifying active sites and understanding reaction mechanisms [3].

- Transformers for Multi-Modal Tasks: Transformer-based models like CatGPT [3] and ReactionT5 [7] leverage their sequence-to-sequence architecture and attention mechanisms to handle multi-modal tasks in catalysis, such as predicting reaction products and conditions from textual or graph-based representations of reactants and catalysts.

Experimental Protocols

Protocol 1: Implementing a Reaction-Conditioned VAE (CatDRX)

This protocol outlines the steps for developing and training a reaction-conditioned VAE for catalyst design, based on the CatDRX framework [1].

Objective: To train a generative model that can design novel catalyst molecules and predict their performance (e.g., reaction yield) under specified reaction conditions.

Materials and Reagents:

- Hardware: High-performance computing cluster with multiple GPUs (e.g., NVIDIA A100 or H100).

- Software: Python (>=3.8), PyTorch or TensorFlow, RDKit, DeepChem.

- Data: Open Reaction Database (ORD) [1] for pre-training. Domain-specific catalyst performance datasets (e.g., for C-N coupling, hydrogenation) for fine-tuning.

Procedure:

Data Preprocessing:

- Catalyst Representation: Encode catalyst molecules as graphs (using atom and bond features with an adjacency matrix) or as SMILES/SELFIES strings.

- Condition Representation: Encode reaction components (reactants, reagents, products) as SMILES strings or reaction fingerprints (e.g., RXNFPs). Scalar conditions (e.g., time, temperature) can be normalized.

- Data Splitting: Split the dataset into training, validation, and test sets (e.g., 80/10/10) using stratified sampling to ensure representative coverage of reaction classes.
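The stratified 80/10/10 split can be sketched with the standard library alone. The record layout, the `reaction_class` field, and the function name are assumptions made for illustration; a real pipeline might stratify on a different label or use a scaffold-based split instead.

```python
import random
from collections import defaultdict


def stratified_split(records, key, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split records into train/val/test while preserving class proportions.

    `key` maps a record to its stratum (here, the reaction class).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for rec in records:
        by_class[key(rec)].append(rec)

    train, val, test = [], [], []
    for items in by_class.values():
        rng.shuffle(items)
        n_train = int(round(fractions[0] * len(items)))
        n_val = int(round(fractions[1] * len(items)))
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Toy dataset: 20 records in each of two hypothetical reaction classes.
records = [
    {"id": i, "reaction_class": "C-N coupling" if i % 2 == 0 else "hydrogenation"}
    for i in range(40)
]
train, val, test = stratified_split(records, key=lambda r: r["reaction_class"])
print(len(train), len(val), len(test))  # 32 4 4
```

Very small classes may end up with no validation or test examples under this rounding scheme, so it is worth checking per-class counts after splitting.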

Model Architecture and Training:

- Modules: Construct three core modules:

- Catalyst Embedding Module: A graph neural network (GNN) or RNN that processes the catalyst input.

- Condition Embedding Module: A feed-forward network or transformer that processes the reaction condition inputs.

- Autoencoder Module: An encoder that maps the concatenated catalyst and condition embedding to a latent vector z, a decoder that reconstructs the catalyst from z and the condition, and a predictor (feed-forward network) that estimates catalytic performance from the same inputs.

- Pre-training: Train the entire model on the large and diverse ORD to learn general representations of catalysis. The loss function is a combination of reconstruction loss (for the catalyst), Kullback–Leibler divergence loss (for the latent space), and prediction loss (e.g., Mean Squared Error for yield).

- Fine-tuning: Transfer the pre-trained model and further train it on a smaller, target-specific dataset to specialize its knowledge for a particular reaction class or property.
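The three-term objective described for pre-training can be written out as below. This is a sketch under assumptions: squared error stands in for the reconstruction term (a real molecular decoder would more likely use a cross-entropy over atoms, bonds, or tokens), and the weights `beta` and `gamma` are hypothetical knobs for balancing the terms, not values from [1].

```python
import numpy as np


def cvae_loss(recon, target, mu, logvar, y_pred, y_true, beta=1.0, gamma=1.0):
    """Combined CVAE objective: reconstruction + beta * KL + gamma * prediction."""
    # Reconstruction term (squared error used here as a simple stand-in).
    recon_loss = np.mean(np.sum((recon - target) ** 2, axis=-1))
    # KL divergence between N(mu, exp(logvar)) and the standard normal prior.
    kl = np.mean(-0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar), axis=-1))
    # Property prediction term (mean squared error, e.g. on yield).
    pred_loss = np.mean((y_pred - y_true) ** 2)
    total = recon_loss + beta * kl + gamma * pred_loss
    return total, (recon_loss, kl, pred_loss)


# Toy batch of two examples.
target = np.ones((2, 3))
recon = target.copy()                # perfect reconstruction
mu = np.zeros((2, 4))
logvar = np.zeros((2, 4))            # posterior equals the prior, so KL is zero
y_true = np.array([0.6, 0.7])
y_pred = np.array([0.5, 0.7])

total, parts = cvae_loss(recon, target, mu, logvar, y_pred, y_true)
print(total, parts)
```

During fine-tuning the same function applies; shifting weight between the reconstruction and prediction terms is one way to trade generation quality against predictive accuracy.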

Catalyst Generation and Validation:

- Generation: Sample a latent vector z from the prior distribution and concatenate it with the embedding of the target reaction condition. Pass this to the decoder to generate new catalyst structures.

- Validation:

- Computational: Use the integrated predictor to screen generated catalysts for high performance. Employ density functional theory (DFT) or machine learning interatomic potentials (MLIPs) to validate the stability and predicted activity of top candidates [3].

- Experimental: Synthesize and experimentally test the most promising catalysts in the target reaction to confirm model predictions [1].

Protocol 2: Exploring Catalyst Surfaces with a Diffusion Model

This protocol describes using a diffusion model to generate plausible surface structures for heterogeneous catalysis [3].

Objective: To generate stable and diverse surface structures and adsorbate configurations to identify novel active sites.

Materials and Reagents:

- Hardware: GPU cluster.

- Software: Atomistic simulation environment (e.g., ASE), MLIP library (e.g., MACE), and a diffusion model implementation (e.g., written in JAX or PyTorch).

- Data: A curated dataset of surface structures and adsorbate configurations, typically generated via ab initio global structure search algorithms [3].

Procedure:

- Dataset Curation: Use global optimization methods (e.g., genetic algorithms, basin hopping) combined with DFT to create a dataset of low-energy surface and adsorbate configurations.

- Model Training:

- Forward Process: Define a Markov chain that gradually adds Gaussian noise to the atomic coordinates of the input structures over a series of timesteps.

- Reverse Process: Train a neural network (e.g., a GNN) to predict the noise that was added, effectively learning the gradient of the data distribution. This allows the model to iteratively denoise a random initial structure into a coherent surface.

- Sampling and Optimization:

- Generation: Sample new structures by starting from pure noise and applying the learned reverse denoising process.

- Guided Generation: Condition the generation process on desired properties (e.g., low adsorption energy) by incorporating guidance from a property predictor during the reverse diffusion steps.

- Relaxation and Validation: Relax the generated structures using MLIPs or DFT to find the nearest local minimum and verify their thermodynamic stability and electronic properties.
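The forward noising process and the noise-prediction training target can be sketched as follows, using the standard denoising diffusion formulation on raw Cartesian coordinates. The linear noise schedule, the number of timesteps, and the placeholder "network" are assumptions for illustration; a practical surface model would also need to handle periodic boundary conditions and atom types, which are ignored here.

```python
import numpy as np

rng = np.random.default_rng(0)

T = 1000
betas = np.linspace(1e-4, 0.02, T)     # linear noise schedule (a common choice, assumed here)
alpha_bars = np.cumprod(1.0 - betas)   # cumulative signal retention at each timestep


def q_sample(x0, t, noise):
    """Closed-form forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise


def denoising_loss(predict_noise, x0, t):
    """Training target: the network should recover the noise that was added."""
    noise = rng.standard_normal(x0.shape)
    x_t = q_sample(x0, t, noise)
    return np.mean((predict_noise(x_t, t) - noise) ** 2)


# Toy "structure": 8 atoms with 3 Cartesian coordinates each (arbitrary units).
x0 = rng.uniform(0.0, 5.0, size=(8, 3))

# Placeholder for a trained GNN: always predicts zero noise.
loss = denoising_loss(lambda x_t, t: np.zeros_like(x_t), x0, t=500)
print(f"loss of the zero predictor at t=500: {loss:.3f}")
```

Sampling then runs the learned denoiser backwards from pure noise, and property guidance adds a predictor-derived term at each reverse step; both are omitted to keep the sketch short.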

Table 2: Essential Computational Tools for Generative Catalyst Design

| Resource Name | Type | Primary Function | Relevance to Catalyst Design |

|---|---|---|---|

| Open Reaction Database (ORD) [1] | Database | A large, open-access repository of chemical reaction data. | Serves as a primary source for pre-training reaction-conditioned models on a broad chemical space. |

| RDKit | Software Library | Cheminformatics and molecular manipulation. | Used for processing molecular representations (SMILES, graphs), calculating descriptors, and validating generated structures. |

| Density Functional Theory (DFT) | Computational Method | Quantum mechanical calculation of electronic structure. | The "gold standard" for validating the stability and catalytic properties (e.g., adsorption energy) of generated materials. |

| Machine Learning Interatomic Potentials (MLIPs) [3] | Surrogate Model | Fast, near-DFT accuracy energy and force calculations. | Accelerates the evaluation and relaxation of generated structures, making high-throughput screening feasible. |

| CatDRX Model [1] | Generative Model | Reaction-conditioned VAE for catalyst generation and yield prediction. | A state-of-the-art framework for the inverse design of molecular catalysts. |

| CDVAE (Crystal Diffusion VAE) [3] | Generative Model | Diffusion-based model for crystal structure generation. | Adapted for generating bulk and surface structures of crystalline catalysts. |

The design of novel catalysts is a pivotal process for enhancing the efficiency of industrial chemical reactions, minimizing waste, and building a more sustainable society. However, traditional catalyst development is a multi-step endeavor that can span several years, from initial screening to industrial application, requiring tremendous resources to navigate complex chemical spaces [1]. Conventional computational methods, while valuable, often demand substantial resources and lack transferability across different systems.

The emergence of artificial intelligence (AI) has introduced new paradigms for tackling this challenge. Among these, generative models have shown significant promise in the inverse design of molecules, including catalysts, by learning to create structures with desired properties. Early generative approaches, however, were often constrained, developed for specific reaction classes or predefined fragment categories without fully considering the broader reaction context. This limitation restricted their ability to explore novel catalysts across the full reaction space [1].

This application note explores the transformative potential of reaction-conditioned generative models, a sophisticated AI framework that integrates the full context of a chemical reaction—including reactants, reagents, products, and conditions—to guide the targeted generation of catalyst candidates. By conditioning the generation process on this rich contextual information, these models enable a more precise, efficient, and intelligent exploration of catalytic chemical space, thereby accelerating the discovery pipeline for chemical and pharmaceutical industries.

Core Architectures and Mechanisms

Reaction-conditioned generative models are built upon deep learning architectures capable of learning the complex relationships between catalyst structures, reaction components, and reaction outcomes. The core principle is to use the reaction context as a conditioning input to the model, steering the generative process toward candidates that are effective for a specific chemical transformation.

The Conditional Variational Autoencoder (CVAE) Framework

The Conditional Variational Autoencoder (CVAE) has proven to be a powerful architecture for this task, as exemplified by the CatDRX framework for catalyst discovery [1]. Its mechanism can be broken down into three main modules:

- Catalyst Embedding Module: This module processes the molecular structure of a catalyst (often represented as a graph or matrix of atoms and bonds) through a series of neural networks to create a numerical representation, or embedding, that captures its essential structural features.

- Condition Embedding Module: This module simultaneously processes the other reaction components, such as reactants, reagents, products, and properties like reaction time, into a separate conditioning embedding.

- Autoencoder Module: The catalyst and condition embeddings are concatenated into a unified "catalytic reaction embedding." The encoder part of the autoencoder maps this combined input into a probabilistic latent space. A latent vector is sampled from this space and, crucially, is concatenated with the condition embedding to guide the decoder in reconstructing the catalyst molecule. A parallel predictor network uses the same latent and condition vectors to estimate catalytic performance, such as reaction yield [1].

This joint training forces the model's latent space to organize itself such that proximity in the space reflects similarity in both catalyst structure and catalytic function under given conditions.

Comparison of Generative Model Architectures

While CVAE is a prominent choice, other generative architectures are being adapted for catalyst design, each with distinct strengths and complexities. The table below summarizes key models applied in this domain.

Table 1: Comparison of Generative Model Architectures for Catalyst Design

| Model | Modeling Principle | Complexity | Typical Applications | Key Advantages |

|---|---|---|---|---|

| Variational Autoencoder (VAE) | Learns a compressed latent space distribution of the data [3]. | Stable to train [3]. | Generating catalyst ligands for CO2 reduction [3]. | Good interpretability and efficient latent sampling [3]. |

| Generative Adversarial Network (GAN) | Uses a generator and discriminator in an adversarial game to learn realistic data distributions [9]. | Difficult to train, can be unstable [3]. | Generating surface structures for ammonia synthesis catalysts [3]. | Capable of high-resolution, realistic generation [3]. |

| Diffusion Model | Iteratively denoises a random structure to generate data, following a reverse-time process [3]. | Computationally expensive but stable training [3]. | Generating atomic-scale surface and adsorbate structures [3]. | Strong exploration capability and high accuracy [3]. |

| Transformer Model | Models probabilistic dependencies between tokens in a sequence using attention mechanisms [3]. | Requires large datasets for training. | Conditional generation of catalyst structures for specific reactions [3]. | Excellent for multi-modal and conditional generation [3]. |

Application Notes: Protocol for Reaction-Conditioned Catalyst Generation

The following protocol outlines the key steps for implementing a reaction-conditioned generative model, based on the CatDRX framework [1], for the design and optimization of homogeneous catalysts.

Protocol: Catalyst Generation with a Pre-trained CVAE Model

Objective: To generate novel, valid catalyst candidates for a specific chemical reaction (e.g., a Suzuki-Miyaura cross-coupling) and predict their performance (e.g., reaction yield).

Primary Model: A CVAE pre-trained on a broad reaction database (e.g., the Open Reaction Database) and fine-tuned on relevant catalytic reaction data [1].

Step-by-Step Procedure:

Input Preparation:

- Define the target reaction using a SMILES string or a similar representation, explicitly specifying the core reactants and products [1].

- Specify relevant reaction conditions (e.g., solvent, temperature, time) to be used as conditioning inputs. If specific reagents are fixed, they should be included in the reaction definition [1].

Model Conditioning:

- Feed the reaction and condition information into the model's condition embedding module. This module processes the inputs to create a fixed-length numerical vector that semantically represents the reaction context [1].

Latent Space Sampling and Optimization:

- To generate novel catalysts, sample a latent vector z from the prior distribution (e.g., a standard Gaussian) of the model's latent space.

- For property-guided generation, perform optimization within the latent space. This involves:

a. Using a predictor network that estimates a target property (e.g., binding energy, yield) from the latent vector z and the condition embedding [10].

b. Employing an optimization algorithm (e.g., gradient ascent/descent, bird swarm algorithm) to iteratively adjust z to maximize or minimize the predicted property [3] [10].

- Concatenate the optimized (or sampled) latent vector z with the condition embedding from Step 2.
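Property-guided search in the latent space can be illustrated with plain gradient ascent. Everything below is a toy under stated assumptions: the predictor is a hypothetical logistic model with random weights standing in for a trained network, its gradient is written out by hand, and the small penalty on the norm of z is an added regulariser (not from the cited works) that keeps the search near the prior, where the decoder is more likely to behave well.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, COND_DIM = 4, 3

# Hypothetical predictor weights; a real predictor would be a trained network.
w_z = rng.standard_normal(LATENT_DIM)
w_c = rng.standard_normal(COND_DIM)


def predict(z, cond):
    """Toy property predictor returning a value in (0, 1), e.g. a normalised yield."""
    return 1.0 / (1.0 + np.exp(-(z @ w_z + cond @ w_c)))


def objective(z, cond, lam):
    """Predicted property minus a penalty that discourages leaving the prior region."""
    return predict(z, cond) - 0.5 * lam * (z @ z)


def grad_objective(z, cond, lam):
    """Analytic gradient of the objective with respect to z."""
    p = predict(z, cond)
    return p * (1.0 - p) * w_z - lam * z


def optimize_latent(z0, cond, steps=200, lr=0.1, lam=0.05):
    """Gradient ascent on z with the condition embedding held fixed."""
    z = z0.copy()
    for _ in range(steps):
        z = z + lr * grad_objective(z, cond, lam)
    return z


cond = rng.standard_normal(COND_DIM)   # stands in for the condition embedding
z0 = rng.standard_normal(LATENT_DIM)   # sample from the standard Gaussian prior
z_opt = optimize_latent(z0, cond)

# The optimised latent vector is joined with the condition before decoding.
decoder_input = np.concatenate([z_opt, cond])
print(objective(z0, cond, 0.05), objective(z_opt, cond, 0.05))
```

Gradient-free optimisers such as swarm-based methods follow the same pattern: only the update rule for z changes, while the predictor and the condition embedding stay fixed.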

Catalyst Decoding and Validation:

- Pass the combined vector to the model's decoder to generate a new catalyst structure, typically in a string format like SELFIES, which guarantees molecular validity [10].

- Validate the generated catalyst for chemical correctness and synthetic accessibility. Filter candidates using background knowledge (e.g., known catalyst motifs, stability criteria) [1].

Performance Prediction and Selection:

- Utilize the integrated predictor network to estimate the performance of the generated catalyst for the conditioned reaction, providing a rapid virtual screening metric [1].

- Select top-ranking candidates for further validation via computational chemistry (e.g., DFT calculations) [1] or high-throughput experimentation.
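The final selection step amounts to deduplicating the generated structures, dropping those already known, and keeping the highest-scoring remainder. The sketch below assumes candidates arrive as (canonical SMILES, predicted yield) pairs; the function name, the example ligands, and the scores are invented for illustration.

```python
import heapq


def select_top_candidates(candidates, k=3, exclude=frozenset()):
    """Keep the k best-scoring unique candidates that are not in `exclude`.

    `candidates` is an iterable of (smiles, predicted_score) pairs. SMILES are
    assumed to be canonicalised already, so string equality means same molecule.
    """
    best = {}
    for smiles, score in candidates:
        if smiles in exclude:
            continue  # already in the training set, so not a novel proposal
        if score > best.get(smiles, float("-inf")):
            best[smiles] = score  # keep the best score seen for each structure
    return heapq.nlargest(k, best.items(), key=lambda item: item[1])


# Invented example: three phosphine ligands with made-up predicted yields.
known = {"CP(C)C"}
generated = [
    ("CP(C)C", 0.90),
    ("c1ccc(P(c2ccccc2)c2ccccc2)cc1", 0.81),
    ("CC(C)(C)P(C(C)(C)C)C(C)(C)C", 0.70),
    ("CC(C)(C)P(C(C)(C)C)C(C)(C)C", 0.77),
]

top = select_top_candidates(generated, k=2, exclude=known)
print(top)
```

The shortlist produced this way is only as reliable as the predictor, which is why the protocol sends it on to DFT or experimental validation rather than treating the ranking as final.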

Quantitative Performance Metrics

In benchmark studies, reaction-conditioned models have demonstrated strong performance in both generative and predictive tasks. The following table summarizes quantitative results from relevant studies.

Table 2: Performance Metrics of Reaction-Conditioned Generative Models in Catalyst Design

| Model / Study | Application / Dataset | Key Performance Metrics | Experimental Outcome / Validation |

|---|---|---|---|

| CatDRX (CVAE) [1] | Yield prediction across multiple reaction classes | Competitive or superior RMSE/MAE in yield prediction vs. baselines. Performance varies with dataset domain overlap. | Effective generation of novel catalysts validated by reaction mechanisms and chemical knowledge. |

| VAE with Predictor [10] | Suzuki cross-coupling catalyst design | MAE for binding energy prediction: 2.42 kcal mol⁻¹. Ability to generate 84% valid and novel catalysts. | Identified catalysts with binding energies within the optimal Sabatier principle range. |

| Diffusion Model [3] | Surface structure generation for CO₂RR | Generated >250,000 candidate structures; 35% predicted high activity. | Five alloy compositions synthesized; two achieved ~90% Faradaic efficiency for CO₂ reduction. |

| GAN with Fine-Tuning [11] | (For reference: Facial expression synthesis) | Precision for "anger" emotion increased from 85.7% to 89.1%; False negatives reduced from 16 to 10. | (Illustrates the impact of architectural fine-tuning on model output fidelity.) |

The Scientist's Toolkit: Research Reagent Solutions

Successful implementation of these advanced models relies on a suite of computational "reagents" and resources.

Table 3: Essential Research Reagents and Resources for Reaction-Conditioned Generative Modeling

| Item / Resource | Function / Description | Relevance to the Protocol |

|---|---|---|

| Open Reaction Database (ORD) [1] | A large, publicly available database of chemical reactions. | Serves as a primary source for pre-training the generative model on a broad range of chemical transformations, improving generalizability [1]. |

| SELFIES Representation [10] | A string-based molecular representation that guarantees 100% syntactic and molecular validity. | Used to represent catalysts for the VAE, overcoming limitations of SMILES for organometallic complexes and ensuring generated structures are valid [10]. |

| Density Functional Theory (DFT) [1] [10] | A computational quantum mechanical method used to calculate electronic structure. | Generates high-fidelity training data (e.g., binding energies, activation barriers) for the predictor network and validates final candidate structures [1] [10]. |

| Bird Swarm Optimization (BSO) [3] | A nature-inspired global optimization algorithm. | Used for efficient property-guided optimization within the continuous latent space of a VAE to find structures with desired catalytic properties [3]. |

| Machine Learning Interatomic Potentials (MLIPs) [3] | Surrogate models trained on DFT data that provide accurate energy and force predictions at lower computational cost. | Accelerates the evaluation of generated surface structures and adsorption geometries during the validation step [3]. |

Reaction-conditioned generative models represent a paradigm shift in computational catalyst design. By moving beyond the generation of structures in isolation to the targeted creation of catalysts within a specific reaction context, these models offer a powerful and efficient strategy for exploring vast chemical spaces. The integration of conditioning, predictive performance, and optimization into a unified framework, as detailed in these application notes and protocols, provides researchers with a robust toolkit for accelerating the discovery of next-generation catalysts, ultimately contributing to the advancement of more sustainable and efficient chemical processes.

The paradigm of materials discovery is shifting from traditional trial-and-error approaches towards a targeted, inverse design methodology. In the context of catalyst design, this involves specifying desired catalytic properties—such as high yield, selectivity, or stability—and computationally generating candidate catalyst structures that fulfill these criteria [12]. This property-to-structure approach relies on two interconnected pillars: the intelligent navigation of a compressed latent space and the practical assessment of candidate synthetic accessibility (SA) to ensure proposed structures can be realistically synthesized in the laboratory [12] [13].

Reaction-conditioned generative models represent a state-of-the-art framework within this paradigm. These models learn the complex relationships between catalyst structures, reaction conditions (e.g., reactants, reagents, temperature), and reaction outcomes. Once trained, they can generate novel, optimal catalyst structures conditioned on specific, user-defined reaction parameters, thereby enabling the inverse design of catalysts tailored for a particular chemical transformation [1].

Quantitative Performance Data

The efficacy of generative models in catalyst design is demonstrated by their performance on predictive and generative tasks. The following tables summarize key quantitative metrics reported in recent studies.

Table 1: Predictive Performance of Generative Models on Catalytic Property Estimation

| Model / Framework | Application / Dataset | Key Performance Metric(s) | Citation |

|---|---|---|---|

| PGH-VAEs (Topology-based VAE) | *OH adsorption energy on High-Entropy Alloys (HEAs) | Mean Absolute Error (MAE): 0.045 eV | [14] |

| CatDRX (Reaction-conditioned VAE) | Yield prediction across multiple reaction classes | Competitive/Superior performance in Root Mean Squared Error (RMSE) and MAE vs. baselines | [1] |

| Inverse Ligand Design Model (Transformer) | Vanadyl-based epoxidation catalyst ligands | Validity: 64.7%, Uniqueness: 89.6% | [15] |

Table 2: Synthetic Accessibility and Generation Metrics

| Model / Framework | SAscore / Feasibility Assessment | Other Generation Metrics | Citation |

|---|---|---|---|

| SAscore Methodology (Rule-based & fragment contributions) | Agreement with medicinal chemists: r² = 0.89 | Validated on 40 molecules assessed by experts | [13] |

| Inverse Ligand Design Model | High Synthetic Accessibility Scores | RDKit Similarity: 91.8% | [15] |

| CatDRX | Validation via reaction mechanisms & chemical knowledge | Effective generation using different sampling strategies | [1] |

Experimental Protocols

This section provides detailed methodologies for implementing and validating a reaction-conditioned generative model for catalyst inverse design, drawing from established frameworks like CatDRX [1] and PGH-VAEs [14].

Protocol: Pre-training a Reaction-Conditioned Variational Autoencoder (VAE)

Objective: To create a foundational model that learns a latent representation of catalysts and their relationship with reaction components and outcomes.

Materials & Reagents:

- Hardware: High-performance computing node with modern GPUs (e.g., NVIDIA A100/A6000, H100).

- Software: Python 3.8+, PyTorch or TensorFlow, RDKit, Deep Graph Library (DGL) or PyTorch Geometric.

- Data: Broad reaction database (e.g., Open Reaction Database - ORD) containing reaction SMILES, catalysts, reagents, products, and yields [1].

Procedure:

- Data Preprocessing:

- Extract and standardize reaction components: Reactants, Reagents, Products, Catalyst, and Yield.

- Featurize the catalyst molecule as a molecular graph using an adjacency matrix and atom/bond feature matrices.

- Encode other reaction components (reactants, reagents, products) and scalar conditions (e.g., reaction time) into a condition embedding vector using separate neural network modules.

Model Architecture Setup:

- Catalyst Embedding Module: Implement a Graph Neural Network (GNN) to process the catalyst graph into a fixed-dimensional embedding vector.

- Condition Embedding Module: Implement feed-forward networks or other suitable architectures to encode the reaction context into a condition vector.

- Encoder: Design a network that takes the concatenated catalyst and condition embeddings and maps them to the parameters of a latent distribution (mean μ and log-variance log σ²).

- Sampler: Sample a latent vector z using the reparameterization trick: z = μ + ε * exp(0.5 * log σ²), where ε ~ N(0, I).

- Decoder: Design a network that takes the sampled latent vector z and the condition embedding, and reconstructs the catalyst's molecular graph.

- Predictor (Optional): Add a feed-forward network that uses z and the condition embedding to predict the reaction yield.
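The sampler step can be illustrated with a dependency-free sketch. It only shows the reparameterization arithmetic on plain Python lists; the function name `reparameterize` and the example values are assumptions for illustration, and a real model would apply the same formula to framework tensors so that gradients flow through μ and log σ².

```python
import math
import random

def reparameterize(mu, log_var, rng=random):
    """Draw z = mu + eps * exp(0.5 * log_var) element-wise, with eps ~ N(0, 1)."""
    if len(mu) != len(log_var):
        raise ValueError("mu and log_var must have the same length")
    return [
        m + rng.gauss(0.0, 1.0) * math.exp(0.5 * lv)
        for m, lv in zip(mu, log_var)
    ]

# Hypothetical 2-dimensional latent parameters, seeded for reproducibility.
rng = random.Random(0)
z = reparameterize([0.5, -1.0], [0.0, -2.0], rng)
```

Because the randomness enters only through ε, the sample stays a deterministic function of μ and log σ², which is what makes the encoder trainable by backpropagation.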

Model Training:

- Loss Function: Minimize a combined loss function L_total = L_reconstruction + β * L_KL + γ * L_prediction, where β and γ are weighting hyperparameters and:

- L_reconstruction: Cross-entropy loss for graph reconstruction.

- L_KL: Kullback-Leibler divergence loss to regularize the latent space towards a standard normal distribution.

- L_prediction: Mean Squared Error (MSE) for yield prediction.

- Training Regime: Use the Adam optimizer with early stopping on a validation split of the pre-training data.
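As a minimal sketch of how the three loss terms combine, the snippet below evaluates L_total for a single example using plain Python. It assumes a diagonal Gaussian posterior, for which the KL term to N(0, I) has the standard closed form; the reconstruction loss is passed in as a precomputed number because its exact form depends on the graph decoder. Function names and default weights are illustrative assumptions.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )

def total_loss(l_reconstruction, mu, log_var, y_pred, y_true, beta=1.0, gamma=1.0):
    """L_total = L_reconstruction + beta * L_KL + gamma * L_prediction (MSE)."""
    l_kl = kl_to_standard_normal(mu, log_var)
    l_prediction = sum((p - t) ** 2 for p, t in zip(y_pred, y_true)) / len(y_true)
    return l_reconstruction + beta * l_kl + gamma * l_prediction

# Hypothetical values: reconstruction loss 1.0, one latent dimension, one yield.
loss = total_loss(1.0, mu=[0.0], log_var=[0.0], y_pred=[0.5], y_true=[1.0],
                  beta=2.0, gamma=4.0)
```

Raising β pulls the latent space closer to the prior at the cost of reconstruction fidelity, while γ controls how strongly yield prediction shapes the latent representation.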

Protocol: Fine-Tuning for Downstream Catalytic Activity Prediction

Objective: To adapt the pre-trained model to a specific, smaller dataset targeting a particular catalytic reaction or property.

Materials & Reagents:

- Hardware: Same as Protocol 3.1 (the pre-training protocol above).

- Software: Same as Protocol 3.1 (the pre-training protocol above).

- Data: A smaller, specialized dataset for the target reaction (e.g., asymmetric hydrogenation, cross-coupling) with catalytic activity data (e.g., yield, enantioselectivity, turnover frequency) [1].

Procedure:

- Data Alignment: Preprocess the downstream dataset to align with the featurization scheme used during pre-training.

- Model Initialization: Load the weights from the pre-trained model (Encoder, Decoder, Predictor).

- Transfer Learning:

- Re-train the entire model on the downstream dataset with a reduced learning rate.

- Alternatively, freeze the weights of the encoder and decoder and only re-train the predictor module if the primary goal is accurate property prediction.

Protocol: Inverse Design Cycle with SAscore Filtering

Objective: To generate novel, high-performing catalyst candidates for a given reaction and filter them based on synthetic feasibility.

Materials & Reagents:

- Software: Trained generative model from Protocol 3.1/3.2 (the pre-training and fine-tuning protocols above), SAscore calculation package (e.g., as implemented in RDKit or based on [13]).

- Data: Target reaction conditions (reactants, reagents, desired property value).

Procedure:

- Conditioned Generation:

- Encode the target reaction conditions into the condition embedding vector.

- Sample a latent vector z from the prior distribution (e.g., N(0, I)) or from a region of the latent space associated with high performance.

- Pass the condition embedding and z to the decoder to generate a novel catalyst structure.

Validation and Filtering:

- Validity Check: Ensure the generated molecular graph is chemically valid (e.g., correct valences).

- SAscore Calculation: Pass the valid generated structures through an SAscore function [13]. The score is a combination of:

- FragmentScore: Sum of contributions of all extended connectivity fragments (ECFC_4) in the molecule, derived from historical synthetic knowledge in databases like PubChem.

- Complexity Penalty: Accounts for non-standard structural features like large rings, high stereocomplexity, and unusual ring fusions.

- Threshold Filtering: Retain candidates with an SAscore below a predetermined threshold (e.g., <4.5, where 1=easy, 10=very difficult to synthesize) [13] [15].
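The threshold-filtering step can be sketched as follows. The scoring function is injected as a callable so the example stays self-contained; in practice it could wrap the `sascorer.calculateScore` function shipped in RDKit's Contrib/SA_Score directory. The candidate identifiers and scores below are placeholders for illustration, not computed values.

```python
from typing import Callable, Iterable, List, Tuple

def filter_by_sa_score(
    candidates: Iterable[str],
    score_fn: Callable[[str], float],
    threshold: float = 4.5,
) -> List[Tuple[str, float]]:
    """Keep candidates whose SAscore is below the threshold, easiest first."""
    scored = [(candidate, score_fn(candidate)) for candidate in candidates]
    kept = [(candidate, score) for candidate, score in scored if score < threshold]
    return sorted(kept, key=lambda pair: pair[1])

# Placeholder scores keyed by hypothetical candidate IDs (SMILES in practice).
toy_scores = {"cand_A": 2.1, "cand_B": 5.2, "cand_C": 1.8, "cand_D": 4.5}
selected = filter_by_sa_score(toy_scores, toy_scores.__getitem__)
```

With the strict inequality used here, a candidate scoring exactly 4.5 is rejected; whether the boundary should be inclusive is a project-level choice.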

Iterative Optimization: Use the generated and filtered candidates to iteratively refine the search in the latent space (e.g., via Bayesian optimization or active learning) towards the target properties.

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Computational Tools and Datasets for Inverse Catalyst Design

| Item Name | Function / Application | Specification / Notes |

|---|---|---|

| Open Reaction Database (ORD) | Pre-training data source for broad, general-purpose chemical knowledge. | Contains a vast array of chemical reactions with detailed context [1]. |

| High-Throughput DFT Data | Source of accurate, labeled data for adsorption energies and reaction barriers. | Critical for training accurate surrogate models, especially for surface catalysis [14]. |

| RDKit | Open-source cheminformatics toolkit. | Used for molecule manipulation, featurization, fingerprint generation, and SAscore calculation [13] [15]. |

| Graph Neural Network (GNN) Library | Core architecture for molecule representation learning. | Libraries like DGL or PyTorch Geometric implement GNNs for processing molecular graphs [1]. |

| Synthetic Accessibility (SAscore) | Computational filter for practical feasibility. | A score between 1 (easy) and 10 (very difficult) based on molecular complexity and fragment contributions from PubChem [13]. |

| Persistent GLMY Homology (PGH) | Topological descriptor for 3D active sites. | Captures nuanced coordination and ligand effects in surface catalysts, enabling high-resolution representation [14]. |

Workflow and System Diagrams

The following diagrams illustrate the core logical relationships and experimental workflows described in these protocols.

Catalyst Inverse Design Workflow

Conditional VAE Architecture

From Code to Catalyst: Methodologies and Real-World Applications

The design and discovery of novel catalysts are pivotal for advancing chemical synthesis and pharmaceutical development, yet traditionally rely on costly, time-consuming trial-and-error experiments [1]. Reaction-conditioned generative models represent a paradigm shift in computational catalysis, leveraging deep learning to inversely design catalyst structures conditioned on specific reaction environments. Unlike conventional models limited to specific reaction classes or predefined fragments, these frameworks learn the complex relationships between reaction components—such as reactants, reagents, and products—and catalyst performance, enabling targeted exploration of catalytic chemical space [1]. This approach directly addresses the critical "functional property deficit" in catalyst informatics, where a scarcity of real, measured catalytic performance data (e.g., Turnover Number/Frequency) has historically hampered predictive design [16]. By framing catalyst design as an inverse problem—mapping desired reaction outcomes to optimal catalyst structures—these models offer a transformative methodology for accelerating the discovery of efficient, novel catalysts across diverse chemical transformations.

Framework Architecture & Core Components

CatDRX: A Reaction-Conditioned Variational Autoencoder

The CatDRX framework is built upon a conditional variational autoencoder (CVAE) architecture specifically engineered for catalyst discovery. Its core innovation lies in jointly learning structural representations of catalysts and their associated reaction contexts to facilitate both property prediction and targeted generation [1].

The model comprises three principal modules that process and integrate different types of chemical information:

- Catalyst Embedding Module: Processes the catalyst's molecular structure, typically represented as a graph or matrix of atoms and bonds, through a series of neural networks to construct a dense vector embedding that captures essential structural features.

- Condition Embedding Module: Encodes other critical reaction components, including reactants, reagents, products, and operational parameters such as reaction time, into a unified condition representation. This allows the model to learn how these factors influence catalytic performance.

- Autoencoder Module: Integrates the catalyst and condition embeddings through a CVAE architecture. The encoder maps the input into a probabilistic latent space, while the decoder samples from this space and uses the condition embedding to reconstruct catalyst molecules. A parallel predictor module estimates catalytic performance (e.g., yield) from the same latent representation [1].

This architecture is first pre-trained on broad reaction databases like the Open Reaction Database (ORD) to learn generalizable relationships, then fine-tuned on specific downstream reactions, enabling competitive performance across diverse catalytic applications [1].

Growing and Linking Optimizers: Synthesis-Driven Design

In parallel, the Growing Optimizer (GO) and Linking Optimizer (LO) frameworks adopt a fundamentally different approach inspired by synthetic practicality. Rather than generating molecular structures in isolation, these models emulate real-world chemical synthesis by sequentially selecting commercially available building blocks and simulating feasible reactions between them to form new compounds [17].

This approach offers several distinct advantages:

- Synthetic Accessibility: By construction, generated molecules are guaranteed to be synthesizable from available starting materials using known chemical reactions.

- Chemistry Restriction: The models can be constrained to specific building blocks, reaction types, and synthesis pathways, which is crucial for applications in drug discovery where synthetic feasibility is paramount.

- Reaction-Conditioned Generation: While CatDRX conditions on the entire reaction context, GO and LO explicitly incorporate reaction knowledge at the generation step itself, building molecules through chemically plausible transformations rather than abstract structural assembly [17].

Comparative analysis demonstrates that GO and LO outperform traditional generative models like REINVENT 4 in producing synthetically accessible molecules while maintaining desired molecular properties [17].

Architectural Workflow Visualization

The diagram below illustrates the core architectural workflow and logical relationships of the CatDRX framework:

CatDRX Framework Architecture

Experimental Protocols & Validation

Model Training and Implementation

Pre-training Protocol for CatDRX: The CatDRX model undergoes extensive pre-training on the Open Reaction Database (ORD), which contains diverse reaction data encompassing various catalyst types, substrates, and conditions. The training objective combines both reconstruction loss (for catalyst generation) and prediction loss (for yield estimation). During pre-training, the model learns to map the joint space of catalyst structures and reaction conditions into a structured latent representation, enabling it to capture fundamental relationships between catalyst features, reaction contexts, and performance outcomes [1].

Fine-tuning for Downstream Applications: For specific catalytic applications, the pre-trained model is fine-tuned on specialized datasets. This transfer learning approach involves continuing training with a lower learning rate on task-specific data, allowing the model to adapt its general knowledge to particular reaction classes such as cross-couplings or asymmetric transformations [1].

Implementation of Growing/Linking Optimizers: GO and LO are implemented using reinforcement learning fine-tuning, where the models are optimized to select building blocks and reactions that maximize both desired molecular properties and synthetic feasibility. The action space consists of available chemical reactions and building blocks, with rewards based on predicted properties and synthetic accessibility scores [17].

Performance Benchmarking

Quantitative Evaluation Metrics: Model performance is evaluated using multiple metrics depending on the task. For predictive performance, root mean squared error (RMSE) and mean absolute error (MAE) are used for yield prediction, while for classification tasks, area under the curve (AUC) and accuracy are employed. For generative tasks, validity, uniqueness, and novelty of generated structures are quantified, along with success rates in inverse design objectives [1] [18].
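The regression and generation metrics named above can be computed with a few lines of standard-library Python. This is a sketch under one common set of definitions (validity over all generated samples, uniqueness over valid samples, novelty over unique samples not seen in training); individual studies may define these ratios differently. Invalid structures are represented here as `None`, which is an assumption of this example.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def generation_metrics(generated, training_set):
    """Validity, uniqueness, and novelty of generated structures (None = invalid)."""
    valid = [s for s in generated if s is not None]
    unique = set(valid)
    novel = unique - set(training_set)
    return {
        "validity": len(valid) / len(generated) if generated else 0.0,
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }

# Hypothetical canonical strings; "B" already appears in the training data.
metrics = generation_metrics(["A", "A", "B", None], training_set=["B"])
```

For these metrics to be meaningful, generated structures should be canonicalized before comparison so that the same molecule written two ways is not counted as two unique or novel structures.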

Table 1: Performance Comparison of CatDRX Against Baselines on Yield Prediction

| Dataset | Model | RMSE | MAE | R² |

|---|---|---|---|---|

| BH | CatDRX | 8.21 | 6.45 | 0.81 |

| BH | Baseline A | 9.87 | 7.92 | 0.76 |

| SM | CatDRX | 7.35 | 5.83 | 0.84 |

| SM | Baseline B | 8.94 | 7.12 | 0.79 |

| UM | CatDRX | 10.62 | 8.37 | 0.77 |

| UM | Baseline C | 12.45 | 9.86 | 0.71 |

Note: Adapted from performance metrics reported in CatDRX evaluation [1].

Chemical Space Coverage Analysis: To assess generalization capability, the chemical spaces of both reactions and catalysts are examined using dimensionality reduction techniques. Reaction fingerprints (RXNFPs) and catalyst fingerprints (ECFP4) are projected via t-SNE to visualize overlap between pre-training and fine-tuning datasets. Models demonstrate superior performance on datasets with substantial chemical space overlap (e.g., BH, SM, UM, AH), while performance decreases on out-of-distribution reactions (e.g., CC, PS) [1].

Inverse Design Case Studies

Case Study 1: Cross-Coupling Catalyst Optimization. In one practical application, CatDRX was employed to design novel phosphine ligands for Pd-catalyzed cross-coupling reactions. The model successfully generated catalysts with modified steric and electronic properties that improved yield by 15-20% compared to conventional ligands for challenging substrate pairs, with generated candidates validated through DFT calculations [1].

Case Study 2: Asymmetric Catalysis Design. For an asymmetric hydrogenation reaction, the framework generated novel chiral catalysts with predicted enantioselectivity >90% ee. The model explored structural modifications to established catalyst scaffolds, suggesting non-intuitive substituents that were subsequently validated experimentally to provide high enantioselectivity [1].

Case Study 3: Synthesis-Aware Catalyst Discovery. The Growing and Linking Optimizers were applied to design synthesizable enzyme inhibitors, achieving a 3.5-fold improvement in synthetic accessibility scores compared to REINVENT 4 while maintaining target potency. The models successfully identified novel molecular scaffolds accessible in 3-5 synthetic steps from available building blocks [17].

The Scientist's Toolkit: Research Reagent Solutions

Successful implementation of reaction-conditioned generative models requires both computational tools and chemical knowledge resources. The table below details essential components for researchers developing these frameworks.

Table 2: Essential Research Reagents for Catalyst Generative Modeling

| Resource Category | Specific Examples | Function & Application |

|---|---|---|

| Reaction Databases | Open Reaction Database (ORD) | Pre-training data source containing diverse reaction examples with catalyst, yield, and condition information [1] |

| Catalyst Libraries | BH, SM, UM, AH benchmark datasets | Fine-tuning and validation data for specific catalytic transformations [1] |

| Molecular Representations | SMILES, Molecular Graphs, ECFP4 fingerprints | Encoding chemical structures for model input; ECFP4 used for chemical space analysis [1] [18] |

| Reaction Descriptors | Reaction Fingerprints (RXNFP) | 256-dimensional embeddings representing reaction contexts for condition embedding modules [1] |

| Performance Metrics | TON, TOF, Conversion, Yield, ee | Catalytic activity measurements for model training and validation [16] |

| Validation Tools | DFT calculations, Molecular Dynamics | Computational validation of generated catalyst candidates [1] |

| Optimization Algorithms | Adam, AdamW, AMSGrad, Nadam | Training neural networks; adaptive gradient-based methods show superior convergence [18] |

Technical Implementation Guide

Data Preprocessing Pipeline

Reaction Data Standardization: Raw reaction data from sources like ORD must undergo rigorous standardization before model training. This includes: (1) Reaction atom-mapping to identify corresponding atoms between reactants and products; (2) Catalyst extraction to isolate the catalytic species from other reaction components; (3) Condition normalization to standardize diverse measurement units and representations across datasets; (4) Stereochemistry handling to properly encode chiral centers, which is particularly crucial for asymmetric catalysis [1].

Molecular Featurization Strategies: Catalyst structures can be represented using multiple complementary approaches:

- Graph Representations: Molecular graphs with nodes (atoms) and edges (bonds) incorporating features like atom type, hybridization, and formal charge.

- SMILES Sequences: String-based representations that capture molecular structure through depth-first traversal.

- Molecular Fingerprints: Fixed-length vector representations such as ECFP4 that capture key structural patterns [1] [18].

For reaction condition featurization, extended reaction fingerprints (RXNFP) that incorporate information about reactants, reagents, and products have proven effective for capturing reaction context [1].

Model Optimization Strategies

Optimizer Selection and Configuration: Recent comprehensive analyses demonstrate that optimizer choice significantly impacts model performance in molecular property prediction tasks. Adaptive gradient-based methods generally outperform traditional approaches:

Table 3: Optimizer Performance Comparison for Molecular Property Prediction

| Optimizer | Test Accuracy (%) | Training Stability | Convergence Speed |

|---|---|---|---|

| AdamW | 92.4 ± 0.3 | High | Fast |

| AMSGrad | 91.8 ± 0.4 | High | Medium |

| Adam | 91.2 ± 0.5 | Medium | Fast |

| Nadam | 90.7 ± 0.6 | Medium | Medium |

| RMSprop | 89.3 ± 0.8 | Medium | Medium |

| Adagrad | 85.1 ± 1.2 | Low | Slow |

| SGD with Momentum | 84.6 ± 1.5 | Low | Slow |

| SGD | 82.3 ± 2.1 | Low | Slow |

Note: Performance rankings on molecular classification tasks using Message Passing Neural Networks [18].

Hyperparameter Optimization: Critical hyperparameters include latent space dimensionality (typically 128-512 units), learning rate (1e-4 to 1e-3 with decay schedules), and batch size (32-128, balancing computational efficiency against training stability). The relative weighting of reconstruction loss versus prediction loss in the multi-task learning objective significantly impacts model behavior, with optimal ratios typically determined through ablation studies [1].
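One simple way to explore the ranges quoted above is random search. The sketch below draws configurations from those ranges; the discrete grids for latent dimension and batch size, the log-uniform sampling of the learning rate, and the function name are all assumptions of this example rather than settings reported in [1].

```python
import math
import random

# Ranges follow the text; the discrete grids are an illustrative assumption.
LATENT_DIMS = [128, 256, 512]
BATCH_SIZES = [32, 64, 128]
LR_RANGE = (1e-4, 1e-3)

def sample_hyperparameters(rng: random.Random) -> dict:
    """Draw one configuration; the learning rate is sampled log-uniformly."""
    log_lr = rng.uniform(math.log10(LR_RANGE[0]), math.log10(LR_RANGE[1]))
    return {
        "latent_dim": rng.choice(LATENT_DIMS),
        "learning_rate": 10.0 ** log_lr,
        "batch_size": rng.choice(BATCH_SIZES),
    }

rng = random.Random(42)
trials = [sample_hyperparameters(rng) for _ in range(5)]
```

Each sampled configuration would then be trained and scored on the validation split, with the loss-weight ratio added as a further search dimension if desired.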

Inverse Design Workflow

The diagram below illustrates the complete inverse design workflow for catalyst discovery, integrating generative modeling with experimental validation:

Catalyst Inverse Design Workflow

Future Directions & Challenges

Despite significant advances, several challenges remain in reaction-conditioned generative models for catalyst design. Data scarcity for specific reaction classes continues to limit model generalizability, particularly for emerging catalytic transformations [1] [16]. Incorporating dynamic reaction conditions and transient intermediates would enhance model physical accuracy beyond current static representations. Multimodal approaches that integrate theoretical descriptors (e.g., from DFT calculations) with structural information show promise for improving prediction accuracy, particularly for electronic properties critical in catalysis [3].

The emerging integration of generative models with high-throughput experimentation creates exciting opportunities for closed-loop discovery systems, where models propose candidates that are automatically synthesized and tested, with results fed back to iteratively improve the models [19]. As these frameworks mature, they are poised to significantly accelerate the catalyst development cycle, potentially reducing discovery timelines from years to months while identifying novel catalytic motifs that might otherwise remain unexplored [1] [3].

In the field of artificial intelligence and machine learning, the paradigm of pre-training on broad databases followed by task-specific fine-tuning has emerged as a powerful strategy, particularly in data-constrained domains like catalyst design. This approach involves first training a model on a large, diverse dataset to learn fundamental chemical principles and patterns, then adapting it to specialized tasks with smaller, targeted datasets. For catalyst design research, this methodology enables researchers to leverage the vast chemical knowledge encoded in large public databases while maintaining high performance on specific catalytic reactions or material properties of interest. The transfer of knowledge from general chemical domains to specialized catalytic tasks has proven particularly valuable given the extensive resources required for experimental catalyst testing and the relative scarcity of high-quality catalytic data [1] [20].

The theoretical foundation of this paradigm rests on transfer learning, which allows knowledge gained from solving one problem to be applied to a different but related problem. In the context of reaction-conditioned generative models for catalyst design, this means that models first learn general chemical relationships, reaction patterns, and structure-property correlations from large-scale databases like the Open Reaction Database (ORD) before being specialized for specific catalytic applications through fine-tuning. This approach has demonstrated significant advantages over training models from scratch on limited datasets, which often leads to overfitting and poor generalization [1] [20] [21].

Quantitative Performance of Pre-training and Fine-tuning Strategies

Performance Comparison of Training Approaches

Extensive research has quantified the benefits of pre-training and fine-tuning strategies across various catalyst and material property prediction tasks. Studies systematically comparing models trained with and without pre-training consistently demonstrate the superiority of the pre-training approach, particularly when the target datasets are small.

Table 1: Performance comparison of scratch models versus pre-trained and fine-tuned models on material property prediction tasks

| Target Property | Training Dataset Size | Scratch Model MAE | Pre-trained + Fine-tuned MAE | Relative Improvement |

|---|---|---|---|---|

| Band Gap (BG) | 800 | 0.142 | 0.128 (FE-BG) | 9.9% |

| Band Gap (BG) | 800 | 0.142 | 0.130 (DC-BG) | 8.5% |

| Formation Energy (FE) | 800 | 0.057 (BG-FE500) | 0.048 (BG-FE800) | 15.8% |

| Dielectric Constant (DC) | 800 | 0.920 (R²) | 0.936 (R²) (BG-FE800) | 1.7% (R²) |

The data reveal that pre-training and fine-tuning consistently outperform training from scratch across multiple material properties, with relative improvements in mean absolute error (MAE) ranging from approximately 9% to 16% depending on the specific property and dataset size [20]. The performance advantage is particularly pronounced when the fine-tuning dataset is small, suggesting that pre-training provides a robust foundational chemical understanding that can be efficiently adapted to specialized tasks with limited data.
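The "Relative Improvement" column in Table 1 can be reproduced from the two adjacent columns. The helper below is a small sketch of that arithmetic; for error metrics such as MAE a decrease counts as improvement, while for R² an increase does. The function name and row labels are illustrative.

```python
def relative_improvement(baseline, improved, higher_is_better=False):
    """Percent improvement of `improved` over `baseline`."""
    gain = improved - baseline if higher_is_better else baseline - improved
    return 100.0 * gain / baseline

# (label, scratch value, pre-trained + fine-tuned value, higher_is_better)
rows = [
    ("Band Gap, FE-BG (MAE)", 0.142, 0.128, False),
    ("Band Gap, DC-BG (MAE)", 0.142, 0.130, False),
    ("Formation Energy (MAE)", 0.057, 0.048, False),
    ("Dielectric Constant (R2)", 0.920, 0.936, True),
]
for label, base, new, higher_is_better in rows:
    print(f"{label}: {relative_improvement(base, new, higher_is_better):.1f}%")
```

Run on the table values, this gives 9.9%, 8.5%, 15.8%, and 1.7%, matching the reported column.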

Impact of Dataset Size on Model Performance

The relationship between dataset size and model performance follows characteristic patterns that differ significantly between models trained from scratch and those utilizing pre-training and fine-tuning.

Table 2: Impact of fine-tuning dataset size on model performance metrics

| Fine-tuning Dataset Size | Scratch Model R² (BG) | Pre-trained + Fine-tuned R² (FE-BG) | Scratch Model MAE (BG) | Pre-trained + Fine-tuned MAE (FE-BG) |

|---|---|---|---|---|

| 10 | 0.110 | 0.105 | 0.215 | 0.218 |

| 100 | 0.285 | 0.325 | 0.185 | 0.172 |

| 200 | 0.385 | 0.425 | 0.162 | 0.152 |

| 500 | 0.495 | 0.535 | 0.148 | 0.135 |

| 800 | 0.572 | 0.609 | 0.142 | 0.128 |

The data demonstrate that while both approaches benefit from larger dataset sizes, the pre-training and fine-tuning strategy consistently achieves superior performance across all dataset sizes above minimal thresholds (approximately 100 data points) [20]. This performance advantage is evident in both R² scores, which measure the proportion of variance explained by the model, and MAE values, which quantify the average prediction error. The consistent performance gap highlights how pre-training provides models with fundamental chemical knowledge that reduces the data required for effective fine-tuning to specific catalytic tasks.

Experimental Protocols for Pre-training and Fine-tuning

Protocol 1: Pre-training on Broad Reaction Databases

Objective: To create a foundational model with comprehensive knowledge of chemical reactions and catalytic principles by training on diverse reaction data.

Materials and Data Requirements:

- Primary Data Source: Open Reaction Database (ORD) or similar comprehensive reaction database containing diverse reaction types, conditions, and outcomes [1].

- Data Components: Reaction SMILES, catalysts, reactants, products, reagents, reaction conditions (temperature, time, solvent), and performance metrics (yield, conversion) [1].

- Data Preprocessing: Standardization of chemical representations, handling of missing values, normalization of numerical values, and data augmentation through SMILES enumeration [1].

Model Architecture Setup:

- Base Architecture: Joint Conditional Variational Autoencoder (CVAE) with separate embedding modules for catalysts and reaction conditions [1].

- Catalyst Embedding Module: Graph neural network or transformer-based encoder processing molecular structure as graphs or SMILES strings [1].

- Condition Embedding Module: Neural network processing reaction components (reactants, reagents, products) and additional properties (reaction time) [1].

- Integration Mechanism: Concatenation of catalyst and condition embeddings into a unified catalytic reaction embedding passed to the autoencoder module [1].
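The data flow described above can be sketched in plain Python, with lists standing in for tensors. This is only an illustration of the concatenation and latent-sampling steps: the helper names are hypothetical, and feeding the condition embedding to the decoder alongside the latent vector is an assumption based on how conditional VAEs are usually built, not a detail confirmed by the source.

```python
import math
import random
from typing import List

Vector = List[float]


def concat_embeddings(catalyst_emb: Vector, condition_emb: Vector) -> Vector:
    """Join the two embeddings into one catalytic reaction embedding."""
    return list(catalyst_emb) + list(condition_emb)


def linear(x: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Dense layer: one output value per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def sample_latent(mu: Vector, log_var: Vector, rng: random.Random) -> Vector:
    """Reparameterization trick: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def decoder_input(z: Vector, condition_emb: Vector) -> Vector:
    """Assumed conditioning scheme: the decoder sees the latent vector and the condition."""
    return list(z) + list(condition_emb)
```

In this sketch the encoder would apply `linear` twice to the joint embedding to obtain `mu` and `log_var`, sample `z`, and pass `decoder_input(z, condition_emb)` to the catalyst decoder and the performance predictor.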

Training Procedure:

- Initialize model with appropriate weights and architecture parameters

- Train using combined reconstruction loss (for catalyst generation) and prediction loss (for catalytic performance)

- Optimize using adaptive moment estimation (Adam) or similar optimizer

- Validate on held-out portion of pre-training dataset

- Monitor for overfitting and implement early stopping if necessary

- Save model weights and architecture for fine-tuning phase [1]
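A minimal sketch of the combined objective follows. The source names only the reconstruction and prediction terms; the KL-divergence term and the two weights (`kl_weight`, `pred_weight`) are assumptions added here because a variational autoencoder is normally trained with a KL regularizer on the latent distribution.

```python
import math
from typing import Sequence


def kl_divergence(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL(N(mu, sigma^2) || N(0, 1)) for a diagonal Gaussian, summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def token_cross_entropy(true_token_probs: Sequence[float]) -> float:
    """Reconstruction loss: mean negative log-probability given to the true tokens."""
    return -sum(math.log(p) for p in true_token_probs) / len(true_token_probs)


def combined_loss(
    true_token_probs: Sequence[float],
    mu: Sequence[float],
    log_var: Sequence[float],
    y_true: float,
    y_pred: float,
    kl_weight: float = 1.0,    # hypothetical weighting, tune per task
    pred_weight: float = 1.0,  # hypothetical weighting, tune per task
) -> float:
    """Reconstruction + weighted KL + weighted squared error on the performance target."""
    recon = token_cross_entropy(true_token_probs)
    kl = kl_divergence(mu, log_var)
    pred = (y_true - y_pred) ** 2
    return recon + kl_weight * kl + pred_weight * pred
```

Tracking the three terms separately during training makes it easier to see whether the model is trading reconstruction quality for prediction accuracy or the reverse.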

Quality Control Metrics:

- Reconstruction accuracy of catalyst structures

- Prediction performance on yield and catalytic activity

- Latent space organization and smoothness

- Domain applicability analysis using chemical space visualizations [1]

Protocol 2: Task-Specific Fine-tuning for Catalyst Design

Objective: To adapt a pre-trained model to specific catalytic tasks or reactions using specialized datasets while retaining general chemical knowledge.

Materials and Data Requirements:

- Pre-trained Model: Model trained according to Protocol 1

- Fine-tuning Dataset: Task-specific catalytic data with relevant performance metrics (yield, selectivity, activity, enantioselectivity)

- Data Splitting: Standard split (e.g., 80/10/10) for training, validation, and test sets, ensuring chemical diversity across splits [1] [20]
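The 80/10/10 split mentioned above can be sketched as a seeded random shuffle. This is the simplest possible variant and is an assumption: it does not by itself ensure chemical diversity across splits, which would typically require grouping records by scaffold or reaction class before splitting.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    records: Sequence[T],
    fractions: Tuple[float, float, float] = (0.8, 0.1, 0.1),
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle reproducibly and cut into train, validation, and test lists."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * fractions[0])
    n_val = round(len(shuffled) * fractions[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```

Fixing the seed keeps the test set identical across fine-tuning runs, so that differences in reported metrics reflect the model rather than the split.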

Fine-tuning Strategy Selection:

- Full Fine-tuning: Update all model parameters (requires significant data and computational resources) [22]

- Parameter-Efficient Fine-tuning (PEFT): Update only a subset of parameters, preserving most pre-trained weights (suitable for small datasets) [22]

- Layer-wise Adaptation: Selective updating of specific model layers based on task similarity [20]

Fine-tuning Procedure:

- Load pre-trained model weights and architecture

- Optionally freeze specific layers or components based on fine-tuning strategy

- Train on task-specific data with reduced learning rate (typically 1-10% of pre-training rate)

- Employ gradual unfreezing if using layer-wise adaptation

- Monitor performance on validation set to prevent catastrophic forgetting [20] [22]

- Apply early stopping based on validation performance plateau

- Evaluate final model on held-out test set
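Two pieces of the procedure above, the reduced learning rate and early stopping on a validation plateau, can be sketched without any deep learning framework. The class and function names are hypothetical, and the default `patience` value is an assumption rather than a setting from the cited work.

```python
from typing import Iterable, Optional


def fine_tune_learning_rate(pretrain_lr: float, fraction: float = 0.1) -> float:
    """Scale the pre-training rate down (the text suggests 1-10%, i.e. 0.01 to 0.1)."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return pretrain_lr * fraction


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def stopping_epoch(val_losses: Iterable[float], patience: int = 3) -> Optional[int]:
    """Return the epoch at which training would stop, or None if it never triggers."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(epoch, loss):
            return epoch
    return None
```

After stopping, the weights saved at `best_epoch` (not the final epoch) would normally be the ones evaluated on the held-out test set.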

Hyperparameter Optimization:

- Learning rate: 1e-5 to 1e-4 (typically lower than pre-training rate)

- Batch size: Adjusted based on dataset size and computational constraints

- Number of epochs: Task-dependent, with careful monitoring for overfitting

- Layer freezing strategy: Based on dataset size and similarity to pre-training domain [20]
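One simple way to explore the ranges listed above is random search with the learning rate drawn log-uniformly between 1e-5 and 1e-4. The sketch below is an assumption about how such a search might be set up; the candidate batch sizes are placeholders and do not come from the source.

```python
import math
import random
from typing import Dict, Sequence, Union


def sample_learning_rate(rng: random.Random, low: float = 1e-5, high: float = 1e-4) -> float:
    """Draw a learning rate uniformly in log10 space between `low` and `high`."""
    return 10 ** rng.uniform(math.log10(low), math.log10(high))


def sample_config(
    rng: random.Random,
    dataset_size: int,
    batch_sizes: Sequence[int] = (8, 16, 32, 64),  # placeholder candidates
) -> Dict[str, Union[int, float]]:
    """Sample one fine-tuning configuration, never using a batch larger than the dataset."""
    feasible = [b for b in batch_sizes if b <= dataset_size] or [min(batch_sizes)]
    return {
        "learning_rate": sample_learning_rate(rng),
        "batch_size": rng.choice(feasible),
    }
```

Sampling in log space spreads trials evenly across orders of magnitude, which matters because a change from 1e-5 to 2e-5 is usually as significant as one from 5e-5 to 1e-4.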

Validation and Testing:

- Quantitative metrics: RMSE, MAE, R² for property prediction

- Qualitative assessment: Generated catalyst diversity, novelty, and chemical validity

- Domain applicability: t-SNE visualization to assess chemical space coverage [1]

- Experimental validation: Select top candidates for synthetic testing when possible [23]

Case Studies in Catalyst Design

Case Study 1: CatDRX Framework for Reaction-Conditioned Catalyst Generation

The CatDRX framework exemplifies the effective implementation of the pre-training and fine-tuning paradigm for catalyst design. This approach utilizes a reaction-conditioned variational autoencoder generative model that is first pre-trained on diverse reactions from the Open Reaction Database and subsequently fine-tuned for specific downstream catalytic applications [1].

Pre-training Implementation: The model architecture consists of three core modules: (1) a catalyst embedding module that processes catalyst structures through neural networks, (2) a condition embedding module that learns representations of reaction components (reactants, reagents, products, and additional properties), and (3) an autoencoder module that integrates these embeddings to reconstruct catalysts and predict catalytic performance. During pre-training, the model learns to capture the complex relationships between catalyst structures, reaction conditions, and catalytic outcomes across diverse reaction classes [1].

Fine-tuning and Application: After comprehensive pre-training, the CatDRX model was fine-tuned on various downstream tasks, including yield prediction and catalytic activity estimation for specific reaction classes. The fine-tuned model demonstrated competitive performance in both generative tasks (designing novel catalysts) and predictive tasks (estimating catalytic performance). Importantly, the framework enabled effective generation of potential catalysts conditioned on specific reaction requirements by integrating optimization toward desired properties with validation based on reaction mechanisms and chemical knowledge [1].

Performance Analysis: Evaluation of the chemical spaces covered by the pre-training data and fine-tuning datasets revealed that datasets with substantial overlap with pre-training data (BH, SM, UM, and AH datasets) benefited significantly from transfer learning, while those with minimal overlap (RU, L-SM, CC, and PS datasets) showed reduced performance gains. This analysis highlights the importance of comprehensive pre-training data that spans diverse chemical domains to maximize fine-tuning effectiveness across various applications [1].
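The overlap between a fine-tuning dataset and the pre-training data can also be summarized numerically. The sketch below is not the analysis used in the CatDRX work, which relied on chemical space visualization; it swaps in a nearest-neighbor Tanimoto similarity as a simple proxy, and it assumes that molecular fingerprints are already available as sets of "on" bit indices.

```python
from typing import List, Set


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto (Jaccard) similarity between two fingerprints given as sets of on-bits."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def mean_nearest_similarity(query_fps: List[Set[int]], reference_fps: List[Set[int]]) -> float:
    """Average, over query molecules, of the similarity to the closest reference molecule."""
    if not query_fps or not reference_fps:
        raise ValueError("both fingerprint lists must be non-empty")
    nearest = [max(tanimoto(q, r) for r in reference_fps) for q in query_fps]
    return sum(nearest) / len(nearest)
```

Under this proxy, a fine-tuning set whose molecules each have a close neighbor in the pre-training data scores near 1, while a set drawn from an unrelated region of chemical space scores near 0, which is the situation in which reduced transfer gains would be expected.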

Case Study 2: Multi-property Pre-training for Material Property Prediction

Beyond catalyst-specific applications, research has demonstrated the advantages of multi-property pre-training (MPT) approaches where models are simultaneously pre-trained on multiple material properties before fine-tuning on specific target properties.

Experimental Design: In a comprehensive study exploring optimal pre-training and fine-tuning strategies, graph neural networks were pre-trained on seven diverse curated materials datasets with sizes ranging from 941 to 132,752 data points. The properties included average shear modulus (GV), frequency of the highest optical phonon mode peak (PH), DFT band gap (BG), DFT formation energy (FE), computed piezoelectric modulus (PZ), computed dielectric constant (DC), and experimental band gap (EBG) [20] [21].

Performance Findings: The MPT approach consistently outperformed both models trained from scratch and pair-wise pre-training/fine-tuning models on several datasets. Most significantly, the MPT models demonstrated superior performance on a completely out-of-domain 2D material band gap dataset, highlighting the enhanced generalization capability afforded by multi-property pre-training. This approach creates more robust and generalizable models that capture fundamental materials science principles beyond specific property correlations [20] [21].
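Because most materials have labels for only some of the seven properties, a multi-property objective has to skip missing targets. The sketch below shows one way this could be done with a masked mean absolute error; it is an assumption about the general technique, not the loss used in the cited study, and in practice each property would usually be standardized first so that different units contribute comparably.

```python
from typing import Dict, List, Optional

# Property codes as listed in the study description above.
PROPERTIES = ["GV", "PH", "BG", "FE", "PZ", "DC", "EBG"]


def masked_multitask_mae(
    preds: List[Dict[str, float]],
    targets: List[Dict[str, Optional[float]]],
) -> float:
    """Mean absolute error over every (sample, property) pair that has a label."""
    total, count = 0.0, 0
    for pred, target in zip(preds, targets):
        for prop in PROPERTIES:
            label = target.get(prop)
            if label is None:
                continue  # property not measured for this sample
            total += abs(pred[prop] - label)
            count += 1
    if count == 0:
        raise ValueError("no labeled properties in batch")
    return total / count
```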

Implementation Insights: The study systematically explored the influence of key factors including pre-training dataset size, fine-tuning dataset size, and fine-tuning strategies. The researchers found that pre-training and fine-tuning models consistently outperformed models trained from scratch on target datasets, with the performance advantage being particularly pronounced for smaller fine-tuning datasets. This relationship demonstrates the value of transfer learning in data-constrained scenarios common in materials science and catalysis research [20].

Research Reagent Solutions

Table 3: Essential research reagents and computational resources for pre-training and fine-tuning experiments

| Resource Category | Specific Resource | Function in Pipeline | Key Characteristics |
|---|---|---|---|
| Data Resources | Open Reaction Database (ORD) [1] | Pre-training data source | Diverse reaction classes, reaction conditions, catalytic outcomes |
| | USPTO Dataset [24] | Pre-training and fine-tuning data | Contains 1,000 reaction types with detailed chemical transformations |
| | Task-specific catalytic datasets [23] | Fine-tuning data | Specialized catalytic performance data (yield, selectivity, activity) |
| Model Architectures | Joint Conditional VAE [1] | Core generative model | Handles both catalyst generation and performance prediction |
| | Graph Neural Networks [20] | Material representation | Learns from structural information beyond simple composition |
| | Conditional Transformer [24] | Reaction product prediction | Predicts products from reactants under reaction type constraints |
| Computational Framework | ALIGNN [20] | Graph neural network implementation | Captures atomic interactions through line graph features |
| | Parameter-efficient Fine-tuning (PEFT) [22] | Adaptation strategy | Reduces computational requirements for fine-tuning |
| | Multi-task Learning Framework [20] | Simultaneous property prediction | Enables multi-property pre-training for enhanced generalization |
| Validation Tools | t-SNE Chemical Space Visualization [1] | Domain applicability assessment | Evaluates overlap between pre-training and fine-tuning domains |
| | DFT Calculations [23] | Catalyst performance validation | Provides theoretical validation of catalyst properties and mechanisms |
| | High-throughput Experimentation [23] | Experimental validation | Empirically tests predicted catalyst performance |

Application Note: Generative AI for Inverse Catalyst Design

The integration of artificial intelligence (AI) with catalyst design represents a paradigm shift in chemical research, moving from traditional trial-and-error approaches to data-driven inverse design. This application note explores two complementary machine learning frameworks—inverse ligand design for vanadyl-based epoxidation catalysts and the CatDRX model for cross-coupling reactions—that exemplify the power of reaction-conditioned generative models in modern catalyst development [15] [1].

These models address critical limitations in conventional catalyst discovery by simultaneously considering multiple reaction components, including substrates, reagents, and conditions, thereby enabling the generation of novel catalyst structures optimized for specific transformations. The frameworks demonstrate particular value in pharmaceutical development, where rapid catalyst optimization directly impacts synthetic efficiency and molecular diversity [1] [25].

Case Study 1: Inverse Design of Vanadyl Epoxidation Catalysts

Generative Model Architecture and Performance

A specialized machine learning (ML) model has been developed for the inverse, de novo generative design of vanadyl-based catalyst ligands for epoxidation reactions. This model leverages molecular descriptors calculated using the RDKit library and was trained on a curated dataset of six million structures. Its generation metrics are reported in Table 1 [15]:

Table 1: Performance Metrics of Vanadyl Ligand Generative Model

| Metric | Performance | Significance |
|---|---|---|
| Validity | 64.7% | Percentage of generated structures that are chemically valid |
| Uniqueness | 89.6% | Percentage of novel structures not present in training data |
| RDKit Similarity | 91.8% | Structural consistency with known chemical space |
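Metrics of this kind can be computed from a list of generated SMILES strings as in the sketch below. The exact definitions used in [15] are not given here, so the ones in the code are assumptions based on common usage: validity is the fraction of generated strings that pass a validity check, uniqueness is the fraction of valid strings that are distinct, and novelty is the fraction of distinct valid strings absent from the training set (the table's description of "Uniqueness" is closer to what this sketch calls novelty). The parenthesis check is a toy stand-in for a real parser such as RDKit, and the strings are assumed to be canonicalized already.

```python
from typing import Callable, Dict, Iterable, List


def balanced_parentheses(smiles: str) -> bool:
    """Toy validity check (placeholder for a real SMILES parser)."""
    depth = 0
    for ch in smiles:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0


def generation_metrics(
    generated: List[str],
    training_set: Iterable[str],
    is_valid: Callable[[str], bool] = balanced_parentheses,
) -> Dict[str, float]:
    """Validity, uniqueness, and novelty as fractions between 0 and 1."""
    if not generated:
        raise ValueError("no generated structures")
    valid = [s for s in generated if is_valid(s)]
    unique = set(valid)
    novel = unique - set(training_set)
    return {
        "validity": len(valid) / len(generated),
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }
```

Reporting the three numbers together is useful because each one can be inflated on its own: a model that repeats a single valid training molecule has perfect validity but near-zero uniqueness and novelty.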

The model specifically targets vanadyl catalyst scaffolds—VOSO₄, VO(OiPr)₃, and VO(acac)₂—generating feasible ligands optimized for catalytic performance in alkene and alcohol epoxidation. The generated ligands for VOSO₄ exhibited consistency with high-yield reactions, while VO(OiPr)₃ and VO(acac)₂ scaffolds demonstrated greater structural variability, suggesting broader design possibilities [15].

Integrated Reaction System Co-Design

Unlike conventional generative approaches, this inverse design framework simultaneously optimizes the reaction system, including substrate SMILES representations and reaction conditions. The model architecture investigation identified deep-learning transformers as the most powerful approach, revealing clustering patterns in electronic and structural descriptors correlated with yield predictions [15].

Critical to practical implementation, the generated ligands exhibited high synthetic accessibility scores, confirming their feasibility for laboratory synthesis. This addresses a common limitation in computational catalyst design, where theoretically optimal structures may be synthetically inaccessible [15].

Case Study 2: CatDRX Framework for Cross-Coupling Catalysis

Model Architecture and Training Methodology

The CatDRX framework employs a reaction-conditioned variational autoencoder (VAE) for catalyst generation and performance prediction. This architecture consists of three integrated modules [1]:

- Catalyst Embedding Module: Processes catalyst structural information through neural networks to generate catalyst embeddings.

- Condition Embedding Module: Encodes reaction components (reactants, reagents, products) and conditions (reaction time) into condition embeddings.

- Autoencoder Module: Combines embeddings from both modules to map inputs into a latent chemical space, enabling catalyst reconstruction and property prediction.