The Catalyst AI Dilemma: Mastering Exploration vs. Exploitation for Next-Gen Drug Discovery

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on navigating the critical trade-off between exploration and exploitation within catalyst generative AI. We begin by establishing the foundational concepts and real-world urgency of this balance in molecular discovery. We then delve into the core methodological approaches and practical applications, including specific AI architectures and search algorithms. A dedicated troubleshooting section addresses common pitfalls, data biases, and strategies for optimization. Finally, we examine validation frameworks and comparative analyses of leading methods, equipping teams with the knowledge to rigorously evaluate and deploy these systems. The conclusion synthesizes key strategies and outlines future implications for accelerating biomedical innovation.

The Core Conundrum: Why Balancing Exploration and Exploitation is Critical for Catalyst AI

Technical Support Center

Troubleshooting Guides

Guide 1: Handling a Generative AI Model that Only Produces Known Catalyst Derivatives

Symptoms: The model's output diversity is low. Over 90% of proposed structures are minor variations (e.g., single methyl group changes) of known high-performing catalysts from the training set. Success rate for truly novel scaffolds falls below 2%.

| Potential Cause | Diagnostic Check | Corrective Action |
|---|---|---|
| Exploitation Bias in Training Data | Analyze training set distribution. Calculate Tanimoto similarity between new proposals and the top 50 training set actives. | Rebalance dataset. Augment with diverse, lower-activity compounds. Apply generative model techniques like Activity-Guided Sampling (AGS). |
| Loss Function Over-penalizing Novelty | Review loss function components. Is the reconstruction loss term disproportionately weighted vs. the prediction (activity) reward term? | Adjust loss function weights. Increase reward for predicted high activity in structurally dissimilar regions (e.g., reward × (1 - similarity)). |
| Sampling Temperature Too Low | Check the temperature parameter in the sampling layer (e.g., in a VAE or RNN). A value ≤ 0.7 encourages exploitation. | Gradually increase sampling temperature to 1.0-1.2 during inference to increase stochasticity and exploration. |
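
The temperature row above can be made concrete with a minimal, dependency-free sketch of temperature-scaled softmax sampling. It assumes the decoder exposes raw next-token logits; the logit values and function names are illustrative and not taken from any particular VAE or RNN library. Dividing logits by T < 1 sharpens the distribution toward the top token (exploitation), while T > 1 flattens it (exploration).

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw decoder logits into probabilities scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Hypothetical next-token logits from a SMILES decoder.
logits = [2.0, 1.0, 0.5, 0.1]
for t in (0.7, 1.0, 1.2):
    top_p = softmax_with_temperature(logits, t)[0]
    print(f"T={t}: probability of the most likely token = {top_p:.2f}")
```

The printed probability of the most likely token falls as T rises, which is the mechanism behind the recommended 1.0-1.2 range for exploration.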

Protocol for Diagnostic Similarity Analysis:

- Input: Generated molecule set {G}, training set actives {A}.

- Fingerprint Generation: Compute ECFP4 fingerprints for all molecules in {G} and {A}.

- Similarity Calculation: For each molecule in {G}, find its maximum Tanimoto similarity to any molecule in {A}.

- Distribution Plotting: Plot the histogram of these maximum similarities. An exploitation-heavy model will show a strong peak >0.8.
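
A minimal sketch of this diagnostic protocol, assuming fingerprints have already been computed (e.g., RDKit Morgan fingerprints with radius 2, the usual ECFP4 analogue) and stored as sets of on-bit indices. The helper names are hypothetical, and plotting is replaced by bin counts to keep the example dependency-free.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bit indices."""
    shared = len(fp_a & fp_b)
    union = len(fp_a) + len(fp_b) - shared
    return shared / union if union else 0.0


def max_similarities(generated, actives):
    """For each generated molecule, its highest similarity to any training-set active."""
    return [max(tanimoto(g, a) for a in actives) for g in generated]


def similarity_histogram(values, n_bins=10):
    """Counts per equal-width bin on [0, 1]; a similarity of exactly 1.0 goes in the last bin."""
    counts = [0] * n_bins
    for v in values:
        counts[min(int(v * n_bins), n_bins - 1)] += 1
    return counts


def fraction_above(values, threshold=0.8):
    """Share of generated molecules in the exploitation peak (max similarity > threshold)."""
    return sum(v > threshold for v in values) / len(values)


# Toy fingerprints; real ones would come from ECFP4 bit vectors.
generated = [{1, 2, 3, 4}, {1, 2, 3, 5}, {10, 11, 12}]
actives = [{1, 2, 3, 4}, {20, 21}]
sims = max_similarities(generated, actives)
print(sims, similarity_histogram(sims), fraction_above(sims))
```

A large `fraction_above` value, equivalently a tall last bin or two in the histogram, is the strong peak above 0.8 that the protocol associates with an exploitation-heavy model.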

Guide 2: Generative AI Proposes Chemically Unrealistic or Unsynthesizable Catalysts

Symptoms: Proposed molecules contain forbidden valences, unstable ring systems (e.g., cyclobutadiene cores in transition metal complexes), or require >15 synthetic steps according to retrosynthesis analysis.

| Potential Cause | Diagnostic Check | Corrective Action |
|---|---|---|
| Insufficient Chemical Rule Constraints | Run a valency/ring strain check (e.g., using RDKit's SanitizeMol or a custom metallocene stability filter). | Integrate rule-based post-generation filters. Employ a reinforcement learning agent with a synthesizability penalty (e.g., based on SAScore or SCScore). |
| Training on Non-Experimental (Theoretical) Data | Verify data source. Are all training complexes experimentally reported? Cross-check with ICSD or CSD codes. | Fine-tune the generative model on a smaller, high-quality dataset of experimentally characterized catalysts. Use transfer learning. |
| Decoding Error in Sequence-Based Models | For SMILES-based RNN/Transformers, check for invalid SMILES string generation rates (>5% is problematic). | Implement a Bayesian optimizer for the decoder or switch to a graph-based generative model which inherently respects chemical connectivity. |

Frequently Asked Questions (FAQs)

Q1: Our generative AI model is effective at exploration but its proposals are often low-activity. How can we improve the "hit rate" without sacrificing diversity? A: Implement a multi-objective Bayesian optimization (MOBO) loop. The AI generates a diverse initial set (exploration). These are scored by a surrogate activity model. MOBO then balances the trade-off between predicted activity (exploitation) and uncertainty/novelty (exploration) to select the next batch for actual testing. This creates a focused exploitation within explored regions.

Q2: What quantitative metrics should we track to ensure we are balancing exploration and exploitation in our catalyst discovery pipeline? A: Monitor these key metrics per campaign cycle:

| Metric | Formula / Description | Target Range (Guideline) |
|---|---|---|
| Structural Diversity | Average pairwise Tanimoto dissimilarity (1 - similarity) within a generation batch. | 0.6 - 0.8 (Higher = more exploration) |
| Novelty | Percentage of generated catalysts with similarity <0.4 to any known catalyst in the training database. | 20-40% |
| Success Rate | Percentage of AI-proposed catalysts meeting/exceeding target activity threshold upon experimental validation. | Aim to increase over cycles. |
| Performance Improvement | ∆Activity (e.g., % yield, TOF) of best new catalyst vs. previous best. | Positive, ideally >10% relative improvement. |
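
The four metrics in this table can be tracked per campaign cycle with a few small functions. This sketch assumes fingerprints stored as sets of on-bit indices, reads "similarity < 0.4 to any known catalyst" as "maximum similarity below 0.4", and uses hypothetical function names; it is illustrative rather than a reference implementation.

```python
from itertools import combinations


def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bit indices."""
    shared = len(fp_a & fp_b)
    union = len(fp_a) + len(fp_b) - shared
    return shared / union if union else 0.0


def structural_diversity(batch):
    """Average pairwise Tanimoto dissimilarity within one generation batch (needs >= 2 items)."""
    pairs = list(combinations(batch, 2))
    return sum(1.0 - tanimoto(a, b) for a, b in pairs) / len(pairs)


def novelty_pct(batch, known, threshold=0.4):
    """Percent of the batch whose maximum similarity to the known catalysts is below threshold."""
    novel = sum(max(tanimoto(g, k) for k in known) < threshold for g in batch)
    return 100.0 * novel / len(batch)


def success_rate_pct(measured_activities, target):
    """Percent of experimentally validated proposals meeting or exceeding the target."""
    hits = sum(a >= target for a in measured_activities)
    return 100.0 * hits / len(measured_activities)


def improvement_pct(best_new, previous_best):
    """Relative improvement of the best new catalyst over the previous best."""
    return 100.0 * (best_new - previous_best) / previous_best
```

For example, a best new yield of 88% against a previous best of 80% gives `improvement_pct(88.0, 80.0)` of 10.0, right at the table's guideline.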

Q3: We have a small, high-quality experimental dataset. How can we use generative AI without overfitting? A: Use a pre-trained and fine-tuned approach. Start with a model pre-trained on a large, diverse chemical library (e.g., ZINC, PubChem) to learn general chemical rules. Then, fine-tune this model on your small, proprietary catalyst dataset. This grounds the model in real chemistry while biasing it towards your relevant chemical space. Always use a held-out test set from your proprietary data for validation.

Q4: Can you provide a standard protocol for a single "Explore-Exploit" cycle in catalyst discovery? A: Protocol for a Generative AI-Driven Catalyst Discovery Cycle

- Initialization (Exploration Seed): Assemble a diverse training set of catalysts with associated performance data (e.g., turnover number, yield).

- Model Training & Exploration Phase:
  - Train a generative model (e.g., a graph neural network-based variational autoencoder) on the dataset.
  - Generate a large virtual library (e.g., 50,000 candidates) using explorative sampling (higher temperature, random seed sampling).
  - Filter for chemical feasibility and synthetic accessibility.

- Candidate Selection (Balancing Act):
  - Use an acquisition function (e.g., Upper Confidence Bound, UCB) that combines a surrogate model's predicted activity and its uncertainty to rank candidates:

    $\mathrm{Acquisition}(x) = \mu(x) + \beta\,\sigma(x)$

    where μ is the predicted performance, σ is the uncertainty, and β is a tunable exploration parameter (larger β favors exploration).
  - Select the top 20-50 candidates for in silico or experimental testing.

- Experimental Validation (Exploitation Check):
  - Synthesize and test the selected candidates using a high-throughput experimentation protocol.

- Data Integration & Model Update:
  - Add the new experimental results (successes and failures) to the training dataset.
  - Retrain or fine-tune the generative model with the expanded dataset.
  - Return to the Model Training & Exploration Phase for the next cycle.
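
The candidate-selection step can be sketched as follows, assuming the surrogate model already supplies a predicted mean μ and an uncertainty σ for each candidate (for example from a Gaussian process or a model ensemble). The candidate names and values are hypothetical. Setting β = 0 reduces the rule to pure exploitation; increasing β lets uncertain candidates climb the ranking.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    mu: float     # surrogate-predicted performance
    sigma: float  # surrogate uncertainty (e.g., ensemble standard deviation)


def acquisition(c, beta):
    """UCB-style score from the protocol: mu + beta * sigma."""
    return c.mu + beta * c.sigma


def select_batch(candidates, beta, batch_size):
    """Rank candidates by acquisition score and return the names of the top batch."""
    ranked = sorted(candidates, key=lambda c: acquisition(c, beta), reverse=True)
    return [c.name for c in ranked[:batch_size]]


pool = [Candidate("A", 0.90, 0.01), Candidate("B", 0.60, 0.30), Candidate("C", 0.50, 0.05)]
print(select_batch(pool, beta=0.0, batch_size=2))  # → ['A', 'B'] (pure exploitation)
print(select_batch(pool, beta=2.0, batch_size=2))  # → ['B', 'A'] (uncertainty now counts)
```

In practice β would be tuned per campaign; the batch size of 20-50 from the protocol is passed as `batch_size`.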

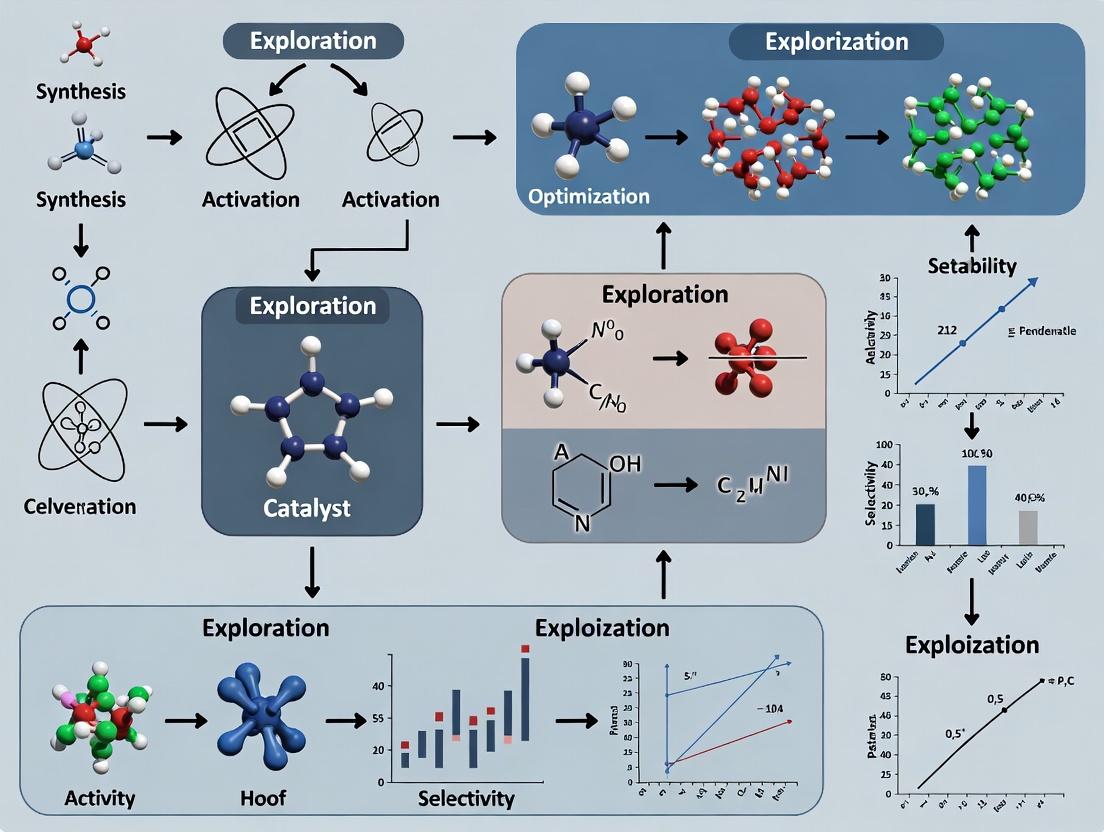

Diagrams

Title: Generative AI Catalyst Discovery Cycle

Title: Acquisition Function Decision Logic

The Scientist's Toolkit: Key Research Reagent Solutions

| Item / Solution | Function in Catalyst Generative AI Research | Example / Specification |
|---|---|---|
| High-Throughput Experimentation (HTE) Kits | Enables rapid experimental validation of AI-generated catalyst candidates, feeding crucial data back into the AI loop. | 96-well or 384-well plate-based screening kits with pre-dosed ligands & metal precursors for cross-coupling reactions. |
| Chemical Feasibility Filter Software | Post-processes AI-generated structures to remove chemically invalid or unstable molecules, ensuring exploration is grounded in reality. | RDKit with custom valence/ring strain rules; molvs library for standardization. |
| Synthetic Accessibility (SA) Scorer | Quantifies the ease of synthesizing a proposed catalyst, guiding the exploitation of viable leads. | SAScore (1-10, easy-hard) or SCScore (trained on retrosynthetic complexity). |
| Surrogate (Proxy) Model | A fast, predictive machine learning model (e.g., Random Forest, GNN) that estimates catalyst performance, used to screen the virtual library before costly experiments. | A graph-convolution model trained on DFT-calculated binding energies or historical assay data. |
| Molecular Fingerprint or Descriptor Set | Encodes molecular structures into numerical vectors, enabling similarity calculations crucial for defining novelty and diversity. | ECFP4 (Extended Connectivity Fingerprints), Mordred descriptors (2D/3D). |
| Multi-Objective Bayesian Optimization (MOBO) Platform | Algorithmically balances the trade-off between exploring uncertain regions and exploiting high-performance regions. | Software like BoTorch or Dragonfly with custom acquisition functions (e.g., Expected Hypervolume Improvement). |

Technical Support Center: Troubleshooting Generative AI for Catalyst & Drug Discovery

FAQs & Troubleshooting Guides

Q1: Our generative AI model for catalyst design keeps proposing similar, incremental modifications to known lead compounds. How can we force it to explore more novel chemical space? A: This is a classic "exploitation vs. exploration" imbalance. Implement the following protocol:

- Adjust Sampling Temperature: Increase the sampling temperature (e.g., from 0.7 to 1.2) in your model's output layer to increase the randomness of generated structures.

- Incorporate a Novelty Reward: Add a term to your reinforcement learning objective function that penalizes similarity to a known compound library. Use Tanimoto similarity based on Morgan fingerprints (radius=2, 1024 bits) and set a threshold (e.g., reward compounds with similarity < 0.3).

- Diversity Sampling Batch: Use a max-min algorithm to select the final batch of compounds from the generated pool, ensuring maximum diversity.
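
The max-min step in the list above can be illustrated with a greedy, dependency-free picker on Tanimoto distance. This is a sketch, assuming fingerprints are sets of on-bit indices and that the first pool member seeds the selection; RDKit also ships a MaxMinPicker that is better suited to large pools.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bit indices."""
    shared = len(fp_a & fp_b)
    union = len(fp_a) + len(fp_b) - shared
    return shared / union if union else 0.0


def max_min_pick(fingerprints, n_pick, seed_index=0):
    """Greedy max-min diversity selection on Tanimoto distance (1 - similarity)."""
    n = len(fingerprints)
    if not 0 < n_pick <= n:
        raise ValueError("n_pick must be between 1 and the pool size")
    picked = [seed_index]
    # nearest[i]: distance from candidate i to its closest already-picked candidate
    nearest = [1.0 - tanimoto(fp, fingerprints[seed_index]) for fp in fingerprints]
    while len(picked) < n_pick:
        chosen = set(picked)
        nxt = max((i for i in range(n) if i not in chosen), key=lambda i: nearest[i])
        picked.append(nxt)
        for i in range(n):
            nearest[i] = min(nearest[i], 1.0 - tanimoto(fingerprints[i], fingerprints[nxt]))
    return picked
```

Each new pick is the candidate whose nearest already-picked neighbor is farthest away, so the batch spreads across chemical space instead of clustering around one scaffold.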

Q2: When fine-tuning a pre-trained molecular generative model on a specific target, performance on the validation set degrades after a few epochs, a likely sign of overfitting and collapse into a narrow region of chemical space. How do we recover? A: Implement an early stopping regimen with exploration checkpoints.

- Protocol: Split your data into Train/Validation/Test (70/15/15). Train for 100 epochs.

- Monitor: Track the unique valid scaffolds (Bemis-Murcko) generated in each epoch on the validation set.

- Action: If scaffold diversity drops by >20% for 3 consecutive epochs, revert to the model checkpoint from 5 epochs prior. Reduce the learning rate by a factor of 10 and re-introduce 10% of a general drug-like molecule dataset (e.g., ZINC15 fragments) into the training batch for the next 5 epochs to reintroduce broader chemical knowledge.
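
A sketch of the exploration-checkpoint rule as a small monitor class. The rule does not say what the 20% drop is measured against; this example assumes it is relative to the best unique-scaffold count seen so far in the run. Class and function names are hypothetical, and the scaffold counting itself (e.g., with RDKit's MurckoScaffold module) is assumed to happen upstream.

```python
from dataclasses import dataclass


@dataclass
class ScaffoldDiversityMonitor:
    """Hypothetical helper for the exploration-checkpoint rule.

    Assumption: a "drop" means the unique-scaffold count falls more than
    `drop_fraction` below the best count seen so far in this run.
    """
    drop_fraction: float = 0.20
    patience: int = 3
    rollback_epochs: int = 5
    best: int = 0
    consecutive_drops: int = 0

    def update(self, epoch, unique_scaffolds):
        """Record one epoch; return the checkpoint epoch to revert to, or None."""
        self.best = max(self.best, unique_scaffolds)
        if unique_scaffolds < (1 - self.drop_fraction) * self.best:
            self.consecutive_drops += 1
        else:
            self.consecutive_drops = 0
        if self.consecutive_drops >= self.patience:
            self.consecutive_drops = 0
            return max(0, epoch - self.rollback_epochs)
        return None


def recovery_settings(learning_rate):
    """Recovery phase: learning rate divided by 10, 10% general data, for 5 epochs."""
    return {"learning_rate": learning_rate / 10, "general_data_fraction": 0.10, "epochs": 5}
```

When `update` returns an epoch number, the training loop would reload that checkpoint and apply `recovery_settings` before continuing.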

Q3: Our AI proposes a novel chemotype with good predicted binding affinity, but our synthetic chemistry team deems it non-synthesizable with available routes. How can we integrate synthesizability earlier? A: Integrate a real-time synthesizability filter into the generation loop.

- Tool: Use the AI-based retrosynthesis tool ASKCOS or the RAscore synthesizability score.

- Workflow Integration: Configure your generative model to only propose structures where the RAscore is >0.5 or where ASKCOS suggests at least one route with a confidence > 0.4. This creates a constrained generation environment focused on exploitable, realistic chemistry.

Q4: How do we quantitatively balance exploring new chemotypes versus optimizing a promising lead series? A: Establish a multi-armed bandit framework with clear metrics. The table below summarizes a proposed scoring system to guide the allocation of resources (e.g., computational cycles, synthesis efforts).

Table 1: Scoring Framework for Exploration vs. Exploitation Decisions

| Metric | Exploration (Novel Chemotype) | Exploitation (Lead Optimization) | Weight |
|---|---|---|---|
| Predicted Activity (pIC50/Affinity) | > 7.0 (High Threshold) | Incremental improvement from baseline (Δ > 0.3) | 0.35 |
| Synthetic Accessibility (SAscore) | 1-3 (Easy to Moderate) | 1-2 (Trivial to Easy) | 0.25 |
| Novelty (Tanimoto to DB) | < 0.35 | N/A | 0.20 |
| ADMET Risk (QED / structural alerts) | QED > 0.5, No critical alerts | Focused optimization of 1-2 specific ADMET parameters | 0.20 |

Protocol: Weekly, score all proposed compounds from both the exploration and exploitation pipelines using this weighted sum. Allocate 70% of resources to the top 50% of scores, but mandate that 30% of resources are reserved for the top-ranked pure exploration candidates (Novelty < 0.35).
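
The weekly scoring protocol can be sketched as a weighted sum followed by a 70/30 slot split. Assumptions: each metric has already been mapped to a sub-score in [0, 1] (higher is better), a metric marked N/A is dropped and the remaining weights renormalized, and `max_sim` is the candidate's highest Tanimoto similarity to the database. All field names are hypothetical.

```python
# Weights from Table 1.
WEIGHTS = {"activity": 0.35, "synthesis": 0.25, "novelty": 0.20, "admet": 0.20}


def weighted_score(sub_scores):
    """Weighted sum of [0, 1] sub-scores; N/A metrics (None) are skipped and weights renormalized."""
    present = {k: v for k, v in sub_scores.items() if v is not None}
    total_weight = sum(WEIGHTS[k] for k in present)
    return sum(WEIGHTS[k] * v for k, v in present.items()) / total_weight


def allocate(candidates, slots, explore_share=0.30, novelty_cutoff=0.35):
    """Split `slots` between the top half by score and the best pure-exploration candidates.

    Each candidate is a dict with 'id', 'sub_scores', and 'max_sim' (Tanimoto to the database).
    """
    ranked = sorted(candidates, key=lambda c: weighted_score(c["sub_scores"]), reverse=True)
    explore_slots = round(slots * explore_share)
    exploit_slots = slots - explore_slots
    top_half = ranked[: max(1, len(ranked) // 2)]
    exploit = [c["id"] for c in top_half[:exploit_slots]]
    explore = [c["id"] for c in ranked
               if c["max_sim"] < novelty_cutoff and c["id"] not in exploit][:explore_slots]
    return exploit, explore
```

Renormalizing keeps exploitation candidates, which have no novelty score, from being penalized for the missing term. In this sketch, exploit slots that the top half cannot fill are simply left unassigned.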

Experimental Protocols

Protocol 1: Evaluating Generative Model Output for Diversity and Local Minima Trapping Objective: Quantify whether a generative AI model is stuck in a local minimum or exploring effectively. Materials: Output of 1000 generated SMILES from your model, a reference set of 10,000 known active molecules for your target. Method:

- Compute Fingerprints: Generate ECFP4 fingerprints (1024 bits) for all generated and reference molecules.

- Calculate Intra-set Diversity: For the generated set, compute the average pairwise Tanimoto distance (1 - similarity) across 1000 randomly sampled pairs.

- Calculate Inter-set Similarity: For each generated molecule, find its maximum Tanimoto similarity to the reference set. Compute the average.

- Interpretation: Low intra-set diversity (< 0.4) and high inter-set similarity (> 0.6) indicate trapping in a local minimum near known chemotypes. High intra-set diversity (> 0.6) and moderate inter-set similarity (0.3-0.5) indicate healthy exploration.
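
Protocol 1 can be sketched in plain Python, assuming fingerprints are sets of on-bit indices (e.g., derived from 1024-bit ECFP4 vectors). Random pairs are drawn with a fixed seed for reproducibility; the verdict strings are illustrative names for the two interpretation rules above, and anything outside both rules is reported as inconclusive.

```python
import random


def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bit indices."""
    shared = len(fp_a & fp_b)
    union = len(fp_a) + len(fp_b) - shared
    return shared / union if union else 0.0


def intra_set_diversity(fps, n_pairs=1000, rng=None):
    """Mean Tanimoto distance over randomly sampled distinct pairs of generated molecules."""
    rng = rng or random.Random(0)
    total = 0.0
    for _ in range(n_pairs):
        i, j = rng.sample(range(len(fps)), 2)
        total += 1.0 - tanimoto(fps[i], fps[j])
    return total / n_pairs


def inter_set_similarity(generated, reference):
    """Mean over generated molecules of their maximum similarity to the reference set."""
    return sum(max(tanimoto(g, r) for r in reference) for g in generated) / len(generated)


def diagnose(diversity, similarity):
    """Apply the interpretation thresholds from Protocol 1."""
    if diversity < 0.4 and similarity > 0.6:
        return "trapped near known chemotypes"
    if diversity > 0.6 and 0.3 <= similarity <= 0.5:
        return "healthy exploration"
    return "inconclusive"
```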

Protocol 2: Reinforcement Learning Fine-Tuning with a Dual Objective Objective: Fine-tune a pre-trained molecular generator (e.g., GPT-Mol) to optimize for both activity and novelty. Materials: Pre-trained model, target-specific activity predictor (QSAR model), computing cluster with GPU. Method:

- Define Reward R:

$R = 0.7\,R_{\text{activity}} + 0.3\,R_{\text{novelty}}$

where $R_{\text{activity}}$ is the normalized predicted pIC50 from your QSAR model (scale 0 to 1) and $R_{\text{novelty}} = 1 - (\text{max Tanimoto similarity to a large database such as ChEMBL})$.

- Training: Use Proximal Policy Optimization (PPO). Start with a learning rate of 0.0001. Generate 500 molecules per epoch.

- Validation: Every 10 epochs, evaluate the top 100 molecules by R score on a separate validation set from your QSAR model. Stop if the average R_activity for the top 100 decreases for 3 validation cycles.
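
The dual-objective reward and the stopping rule can be sketched as below. The protocol does not fix how pIC50 is scaled to 0-1; this example assumes a clipped linear map between pIC50 4 and 10, and reads "decreases for 3 validation cycles" as three consecutive decreases. The PPO training loop itself is out of scope.

```python
def r_activity(pic50, low=4.0, high=10.0):
    """Clipped linear map of predicted pIC50 onto [0, 1]; the 4-10 bounds are an assumption."""
    return min(1.0, max(0.0, (pic50 - low) / (high - low)))


def r_novelty(max_similarity_to_db):
    """1 minus the highest Tanimoto similarity to the reference database (e.g., ChEMBL)."""
    return 1.0 - max_similarity_to_db


def reward(pic50, max_similarity_to_db, w_activity=0.7, w_novelty=0.3):
    """Dual-objective reward R = 0.7 * R_activity + 0.3 * R_novelty."""
    return w_activity * r_activity(pic50) + w_novelty * r_novelty(max_similarity_to_db)


def should_stop(top100_mean_r_activity, patience=3):
    """True once the validation metric has fallen on `patience` consecutive checks."""
    recent = top100_mean_r_activity[-(patience + 1):]
    if len(recent) < patience + 1:
        return False
    return all(later < earlier for earlier, later in zip(recent, recent[1:]))
```

For instance, a molecule with predicted pIC50 7.0 and maximum database similarity 0.4 scores 0.7 × 0.5 + 0.3 × 0.6 = 0.53 under these assumed bounds.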

Pathway & Workflow Visualizations

Title: Dual-Path RL for Exploration & Exploitation in Molecular AI

Title: Generative AI Molecule Prioritization Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for AI-Driven Catalyst & Drug Discovery

| Tool/Reagent | Category | Primary Function in Experiment |
|---|---|---|
| Pre-trained Model (e.g., ChemBERTa, GPT-Mol) | Software | Provides foundational chemical language understanding for transfer learning and generation. |
| Reinforcement Learning Framework (e.g., RLlib, custom PPO) | Software | Enables fine-tuning of generative models using custom multi-objective reward functions. |
| Molecular Fingerprint Library (e.g., RDKit ECFP4) | Software | Encodes molecular structures into numerical vectors for similarity and diversity calculations. |
| Synthesizability Scorer (e.g., RAscore, SAscore) | Software | Filters AI-generated molecules by estimated ease of synthesis, grounding proposals in reality. |
| High-Throughput Virtual Screening Suite (e.g., AutoDock Vina, Glide) | Software | Rapidly evaluates the predicted binding affinity of generated molecules to the target. |
| ADMET Prediction Platform (e.g., QikProp, admetSAR) | Software | Provides early-stage pharmacokinetic and toxicity risk assessment for prioritization. |
| Diverse Compound Library (e.g., Enamine REAL, ZINC) | Data | Serves as a source of known chemical space for novelty calculation and as a training set supplement. |
| Target-specific Assay Kit | Wet Lab | Provides the ultimate experimental validation of AI-generated candidates (e.g., kinase activity assay). |

Troubleshooting Guide & FAQs

This technical support center addresses common issues encountered when implementing multi-armed bandit (MAB) frameworks for balancing exploration and exploitation in catalyst generative AI research for drug development.

FAQ 1: Algorithm Selection & Convergence

Q1: My MAB algorithm (e.g., Thompson Sampling, UCB) fails to converge on a promising catalyst candidate, persistently exploring low-reward options. What parameters should I audit? A: This typically indicates an imbalance in the exploration-exploitation trade-off hyperparameters. Key metrics to check and standard adjustment protocols are summarized below.

| Parameter | Typical Default Value (Thompson Sampling) | Recommended Audit Range | Symptom of Incorrect Setting | Correction Protocol |
|---|---|---|---|---|
| Prior Distribution (α, β) | α=1, β=1 (Uniform) | α, β = [0.5, 5] | Excessive exploration of poor performers | Increase α for successes, β for failures based on initial domain knowledge. |
| Sampling Temperature (τ) | τ=1.0 | τ = [0.01, 10.0] | Low diversity in exploitation phase | Gradually decay τ from >1.0 (explore) to <1.0 (exploit) over iterations. |
| Minimum Iterations per Arm | 10 | [5, 50] | Erratic reward estimates, premature pruning | Increase minimum trials to stabilize mean/variance estimates. |
| Reward Scaling Factor | 1.0 | [0.1, 100] | Algorithm insensitive to performance differences | Scale rewards so that the standard deviation of initial batch is ~1.0. |

Experimental Protocol for Parameter Calibration:

- Baseline Run: Execute the MAB algorithm with default parameters for N=1000 iterations on a simulated reward environment mimicking your catalyst property landscape (e.g., yield, selectivity).

- Metric Collection: Log cumulative regret, % of iterations spent on top-3 arms, and rate of discovery of new high-performing arms.

- Iterative Adjustment: Systematically vary one parameter (see table) per experiment while holding others constant.

- Validation: Run the optimized parameter set on a held-out simulation or a small-scale real experimental batch (≤ 20 reactions) to confirm improved convergence.
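
Before touching real experiments, the baseline run can be prototyped on a simulated environment. This sketch assumes each arm's outcome is a Bernoulli "success" (e.g., yield above a threshold) with a hypothetical true probability, runs Beta-Bernoulli Thompson sampling, and logs two of the metrics named above: cumulative regret and the share of iterations spent on the top-3 arms. `alpha0` and `beta0` correspond to the prior row of the table.

```python
import random


def thompson_sampling(true_probs, n_iter=1000, alpha0=1.0, beta0=1.0, seed=0):
    """Beta-Bernoulli Thompson sampling on a simulated reward environment.

    true_probs: hypothetical per-arm probability that a catalyst clears the target.
    Returns (cumulative expected regret, fraction of pulls on the top-3 arms).
    """
    rng = random.Random(seed)
    k = len(true_probs)
    alpha, beta = [alpha0] * k, [beta0] * k
    best = max(true_probs)
    top3 = set(sorted(range(k), key=lambda a: true_probs[a], reverse=True)[:3])
    regret, top3_pulls = 0.0, 0
    for _ in range(n_iter):
        # Sample a plausible success rate for every arm and play the highest draw.
        draws = [rng.betavariate(alpha[a], beta[a]) for a in range(k)]
        arm = max(range(k), key=lambda a: draws[a])
        success = 1 if rng.random() < true_probs[arm] else 0
        alpha[arm] += success
        beta[arm] += 1 - success
        regret += best - true_probs[arm]
        top3_pulls += arm in top3
    return regret, top3_pulls / n_iter
```

Re-running this with one parameter changed at a time (for example `alpha0`/`beta0`) mirrors the iterative-adjustment step of the calibration protocol.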

FAQ 2: Reward Function Design

Q2: How should I formulate the reward function when optimizing for multiple, conflicting catalyst properties (e.g., high yield, low cost, enantioselectivity)? A: A scalarized, weighted sum reward is most common, but requires careful normalization. Use the following table as a guide.

| Property (Example) | Measurement Range | Normalization Method | Recommended Weight (Initial) | Adjustment Trigger |
|---|---|---|---|---|
| Reaction Yield | 0-100% | Linear: (Yield%/100) | 0.50 | Decrease if yield plateaus >90% to prioritize other factors. |
| Enantiomeric Excess (ee) | 0-100% | Linear: (ee%/100) | 0.30 | Increase if lead candidates fail purity thresholds. |
| Catalyst Cost (per mmol) | $10-$500 | Inverse Linear: 1 - [(Cost - Min)/(Max-Min)] | 0.20 | Increase in later stages for commercialization feasibility. |

Experimental Protocol for Reward Function Tuning:

- Define Objective: $R = w_1\,\mathrm{Norm}(\text{Yield}) + w_2\,\mathrm{Norm}(\text{ee}) + w_3\,\mathrm{Norm}(\text{Cost})$, ensuring $\sum_i w_i = 1$.

- Pareto Frontier Analysis: Run a grid search over weights in simulation (or a high-throughput computational screen) for 100 candidate catalysts.

- Sensitivity Analysis: For each weight, vary ±0.15 while holding others proportional. Select the weight set that maximizes the number of candidates in the desired region of the property space.

- Dynamic Reward Update: Program the MAB system to recalculate reward normalization factors (Min, Max) every 100 experimental iterations based on observed data to prevent drift.
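
The weighted-sum reward and the periodic renormalization step might look like this. It is a sketch, assuming yield and ee arrive in percent and only the cost bounds need refreshing, since yield and ee already have fixed 0-100% ranges. The initial cost bounds (10 to 500 per mmol) come from the table; the class name is hypothetical.

```python
class ScalarizedReward:
    """R = w1*Norm(Yield) + w2*Norm(ee) + w3*Norm(Cost), with periodically refreshed cost bounds."""

    def __init__(self, w_yield=0.5, w_ee=0.3, w_cost=0.2,
                 cost_min=10.0, cost_max=500.0, refresh_every=100):
        if abs(w_yield + w_ee + w_cost - 1.0) > 1e-9:
            raise ValueError("weights must sum to 1")
        self.weights = (w_yield, w_ee, w_cost)
        self.cost_min, self.cost_max = cost_min, cost_max
        self.refresh_every = refresh_every
        self.observed_costs = []

    def __call__(self, yield_pct, ee_pct, cost_per_mmol):
        """Reward for one experiment; all three terms are mapped onto [0, 1]."""
        span = self.cost_max - self.cost_min
        cost_term = 1.0 if span <= 0 else 1.0 - (cost_per_mmol - self.cost_min) / span
        cost_term = min(1.0, max(0.0, cost_term))  # clip costs outside the current bounds
        w_yield, w_ee, w_cost = self.weights
        return w_yield * yield_pct / 100 + w_ee * ee_pct / 100 + w_cost * cost_term

    def observe(self, cost_per_mmol):
        """Log a measured cost; every `refresh_every` observations, reset Min/Max from the data."""
        self.observed_costs.append(cost_per_mmol)
        if len(self.observed_costs) % self.refresh_every == 0:
            self.cost_min = min(self.observed_costs)
            self.cost_max = max(self.observed_costs)
```

With the default weights, an 80% yield, 90% ee catalyst at the cheapest bound scores 0.87, and the same catalyst at the most expensive bound scores 0.67.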

FAQ 3: Integration with Generative AI

Q3: The generative AI model proposes catalyst structures, but the MAB algorithm selects reaction conditions. How do I manage this two-tiered decision loop efficiently? A: Implement a hierarchical MAB framework. The primary "bandit" selects a region of chemical space (or a specific generative model prompt), and secondary bandits select experimental conditions for that region.

Diagram Title: Hierarchical MAB-Generative AI Feedback Loop

Protocol for Synchronizing Hierarchical MAB:

- Primary Loop (Slow, Exploration): The generative AI proposes 5-10 distinct catalyst families. The primary MAB (using Thompson Sampling) selects one family to investigate for the next batch of 20 experiments.

- Secondary Loop (Fast, Exploitation): For the selected family, a contextual bandit (e.g., LinUCB) selects specific ligand/ solvent/ temperature conditions for each of the 20 experiments, using molecular descriptors as context.

- Feedback & Update: Rewards from the 20 experiments update the secondary bandit's model immediately. The aggregated reward (e.g., mean top-3 performance) updates the primary bandit's prior for the chosen catalyst family. This aggregated reward is also fed back to fine-tune the generative AI model.
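
The two-tier loop can be sketched with a Beta-Bernoulli Thompson sampler as the primary bandit and, to stay dependency-free, UCB1 over a discrete set of condition combinations as the secondary bandit. UCB1 is a swapped-in, non-contextual stand-in for LinUCB: it ignores molecular descriptors. Feeding the aggregated (mean top-3) reward into the Beta posterior as a fractional success is an assumption of this sketch, and `simulate` is a hypothetical hook for the lab or a simulator.

```python
import math
import random


class UCB1:
    """Secondary bandit over discrete condition sets (stand-in for LinUCB)."""

    def __init__(self, n_arms):
        self.counts = [0] * n_arms
        self.means = [0.0] * n_arms

    def select(self):
        for arm, count in enumerate(self.counts):
            if count == 0:
                return arm  # try every condition set once before using the bound
        total = sum(self.counts)
        return max(range(len(self.counts)),
                   key=lambda a: self.means[a] + math.sqrt(2 * math.log(total) / self.counts[a]))

    def update(self, arm, reward):
        self.counts[arm] += 1
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]  # running mean


def run_hierarchical(simulate, n_families, n_conditions, n_batches=10, batch_size=20, seed=0):
    """simulate(family, condition, rng) -> reward in [0, 1]."""
    rng = random.Random(seed)
    alpha, beta = [1.0] * n_families, [1.0] * n_families
    secondary = [UCB1(n_conditions) for _ in range(n_families)]
    for _ in range(n_batches):
        # Primary (slow) loop: Thompson-sample one catalyst family for this batch.
        family = max(range(n_families), key=lambda f: rng.betavariate(alpha[f], beta[f]))
        rewards = []
        for _ in range(batch_size):
            cond = secondary[family].select()
            r = simulate(family, cond, rng)
            secondary[family].update(cond, r)  # fast, per-experiment update
            rewards.append(r)
        agg = sum(sorted(rewards, reverse=True)[:3]) / 3  # mean top-3 performance
        alpha[family] += agg        # fractional Beta update (assumption)
        beta[family] += 1.0 - agg
    return alpha, beta, secondary
```

In a real campaign the aggregated reward would also be passed to the generative model's fine-tuning step, which is omitted here.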

The Scientist's Toolkit: Research Reagent Solutions

| Item / Solution | Function in MAB-Driven Catalyst Research | Example / Specification |
|---|---|---|
| High-Throughput Experimentation (HTE) Robotic Platform | Enables rapid parallel synthesis and testing of catalyst candidates selected by the MAB algorithm, providing the essential data stream for reward calculation. | Chemspeed Accelerator SLT II, Unchained Labs Junior. |
| Automated Chromatography & Analysis System | Provides rapid, quantitative measurement of reaction outcomes (yield, ee) which form the numeric basis of the reward function. | HPLC-UV/ELSD with auto-samplers, SFC for chiral separation. |
| Chemical Featurization Software | Generates numerical descriptors (Morgan fingerprints, DFT-derived properties) for catalyst structures, serving as "context" for contextual bandit algorithms. | RDKit, Dragon, custom Python scripts. |
| Multi-Armed Bandit Simulation Library | Allows for offline testing and calibration of MAB algorithms (UCB, Thompson Sampling, Exp3) on historical data before costly wet-lab deployment. | MABWiser (Python), contextual (R), custom PyTorch implementations. |
| Reward Tracking Database | Centralized log to store (candidate ID, conditions, measured properties, calculated reward) for each MAB iteration, ensuring reproducibility and model retraining. | SQLite, PostgreSQL with custom schema, or ELN integration (e.g., Benchling). |

Troubleshooting Guides & FAQs

Q1: Our generative AI for novel catalyst discovery has stagnated, producing only minor variations of known active sites. Are we over-exploiting? A: This is a classic symptom of excessive exploitation. The model is trapped in a local optimum of the chemical space.

- Diagnosis: Calculate the Tanimoto similarity index between newly generated molecular structures and your known actives library. A median similarity >0.85 suggests over-exploitation.

- Solution: Temporarily increase the "temperature" parameter (e.g., from 0.7 to 1.2) in your sampling algorithm to encourage exploration. Implement a "novelty penalty" in the reward function that penalizes structures too similar to the training set.

- Protocol: For a 4-week cycle, dedicate Week 1 to purely exploratory generation (high temp, novelty focus) without immediate validation. Use Weeks 2-4 to exploit and validate the most promising scaffolds from the exploratory batch.

Q2: We generated thousands of novel, structurally diverse catalyst candidates, but wet-lab validation found zero hits. Is this failed exploration? A: Yes. This indicates exploration was unguided and disconnected from physicochemical reality.

- Diagnosis: Audit your generative model's filters and primary reward function. Over-reliance on simple metrics like QED (Quantitative Estimate of Drug-likeness) for catalysts is insufficient.

- Solution: Integrate a fast, approximate quantum mechanics (QM) simulation (e.g., DFTB) into the reward pipeline to pre-screen for plausible stability and electronic properties. Balance the reward between novelty and a minimum feasibility score.

- Protocol: Implement an iterative refinement loop: Generate batch → Pre-screen with cheap QM (DFTB) → Select top 100 by composite score → Validate with higher-fidelity QM (DFT) → Retrain model on results.

Q3: Our campaign cycles between wild exploration and narrow exploitation, failing to converge. How do we stabilize the balance? A: You lack a dynamic scheduling mechanism.

- Diagnosis: Plot the "exploration rate" (e.g., % of structures with similarity <0.7 to any known active) versus campaign iteration. It should show a controlled decay, not oscillation.

- Solution: Implement an ε-greedy or decay schedule. Start with a high exploration probability (ε=0.8) and decay it multiplicatively by 10% (with a floor of 0.1) following the improvement-based rule in the protocol below.

- Protocol:

- Define iteration = one full generate-screen-validate cycle.

- Initialize ε = 0.8.

- After each iteration: If ΔActivity > 10% (improvement), keep ε. If ΔActivity ≤ 10%, set ε = max(0.1, ε * 0.9).
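
The ε schedule from this protocol, together with a basic ε-greedy selection step, fits in a minimal sketch. Function names are hypothetical, and `predicted_activity` stands in for whatever surrogate model ranks candidates.

```python
import random


def update_epsilon(epsilon, delta_activity_pct, threshold_pct=10.0, decay=0.9, floor=0.1):
    """Keep epsilon after a >10% improvement; otherwise decay it, never below the floor."""
    if delta_activity_pct > threshold_pct:
        return epsilon
    return max(floor, epsilon * decay)


def epsilon_greedy_pick(candidates, predicted_activity, epsilon, rng):
    """With probability epsilon explore (uniform pick); otherwise exploit the best prediction."""
    if rng.random() < epsilon:
        return rng.choice(candidates)
    return max(candidates, key=predicted_activity)
```

Starting from ε = 0.8, iteration improvements of 12%, 3%, 4%, 20%, and 1% give ε values of roughly 0.8, 0.72, 0.648, 0.648, and 0.583.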

Q4: The AI suggests catalysts with synthetically intractable motifs. How do we fix this? A: The exploitation of activity predictions is not tempered by synthetic feasibility constraints.

- Diagnosis: Use a retrosynthesis model (e.g., IBM RXN, ASKCOS) to analyze the last batch of proposed structures. A success rate <30% indicates a problem.

- Solution: Add a "synthetic accessibility" (SA) score as a mandatory, weighted term in the final selection filter. Use a graph-based model trained on reaction databases.

- Protocol: Integrate the SA score before expensive simulation. Workflow: Generation → SA Filter (pass if SA Score > 0.6) → QM Pre-screen → Selection.

Table 1: Analysis of Two Failed Campaigns Demonstrating Imbalance

| Campaign | Exploration Metric (Novelty Score) | Exploitation Metric (Predicted Activity pIC₅₀) | Wet-Lab Hit Rate | Root Cause Diagnosis |
|---|---|---|---|---|
| Alpha | 0.15 ± 0.05 (Low) | 8.5 ± 0.3 (High) | 5% (Known analogs) | Severe Over-Exploitation: Model converged too early on a narrow chemotype. |
| Beta | 0.92 ± 0.03 (Very High) | 5.1 ± 1.2 (Low/Noisy) | 0% | Blind Exploration: No guiding constraints for catalytic feasibility or synthesis. |

Table 2: Impact of Dynamic ε-Greedy Scheduling on Campaign Performance

| Iteration | Fixed ε=0.2 (Over-Exploit) | Fixed ε=0.8 (Over-Explore) | Dynamic ε (Start=0.8, Decay=0.9) |
|---|---|---|---|
| 1 | 0 Novel Hits | 2 Novel Hits | 2 Novel Hits |
| 5 | 0 Novel Hits (Converged) | 1 Novel Hit (Erratic) | 6 Novel Hits |
| 10 | 0 Novel Hits | 3 Novel Hits | 9 Novel Hits (Converging) |
| Total Validated Hits | 1 (Known scaffold) | 6 | 15 |

Experimental Protocols

Protocol A: Correcting Over-Exploitation with Directed Exploration

- Pause active model training.

- Sample 5000 structures from the generative model with temperature parameter T=1.5.

- Filter this set using a dissimilarity filter: retain only structures with Tanimoto similarity <0.65 to all top 20 known actives.

- Score the filtered set with a fast, pre-trained proxy model for a different but related property (e.g., ligand binding affinity for a related substrate) to add new guidance.

- Select the top 200 by this new proxy score.

- Validate selected candidates through primary assay. Use results to augment training data.

- Resume training with a balanced objective in which novelty is rewarded rather than penalized (e.g., minimize 0.7 × Activity Loss − 0.3 × Novelty Reward).
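
The dissimilarity filter, proxy scoring, and top-k selection steps of Protocol A, plus the balanced objective used when training resumes, can be sketched as two small functions. Assumptions: samples arrive as (id, fingerprint) pairs with set-based fingerprints, and `proxy_score` is a hypothetical callable wrapping the pre-trained proxy model. Because the novelty term is a reward, it enters the minimized objective with a negative sign.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bit indices."""
    shared = len(fp_a & fp_b)
    union = len(fp_a) + len(fp_b) - shared
    return shared / union if union else 0.0


def directed_exploration(samples, top_actives, proxy_score, sim_cutoff=0.65, n_select=200):
    """Keep samples dissimilar to every top active, rank them by the proxy, return the top ids."""
    dissimilar = [(sid, fp) for sid, fp in samples
                  if all(tanimoto(fp, a) < sim_cutoff for a in top_actives)]
    ranked = sorted(dissimilar, key=lambda s: proxy_score(s[0]), reverse=True)
    return [sid for sid, _ in ranked[:n_select]]


def balanced_objective(activity_loss, novelty_reward, w_activity=0.7, w_novelty=0.3):
    """Objective to minimize: lower activity loss and higher novelty both reduce it."""
    return w_activity * activity_loss - w_novelty * novelty_reward
```

The 5,000 samples drawn at T = 1.5 would be passed in as `samples`, and the 20 top known actives as `top_actives`.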

Protocol B: Establishing a Feasibility-First Screening Funnel

- Generation: Produce 10,000 candidate structures per cycle.

- Step 1 - Synthetic Accessibility (SA): Process all through a retrosynthesis AI. Assign SA Score (0-1). Discard all with SA Score < 0.55. (~60% pass).

- Step 2 - Structural Stability: Perform conformer search and MMFF94 minimization. Discard structures with high strain energy (>50 kcal/mol). (~85% pass).

- Step 3 - Quantum Mechanical Pre-screen: Run semi-empirical QM (DFTB) to calculate key descriptors (HOMO/LUMO gap, adsorption energy). Filter based on plausible ranges. (~20% pass).

- Step 4 - High-Fidelity Prediction: Run DFT calculations on the candidates that remain (about 1,000 at the pass rates above, since 10,000 × 0.60 × 0.85 × 0.20 ≈ 1,020) for accurate activity prediction.

- Step 5 - Selection & Validation: Select top 20 for wet-lab synthesis and testing.
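
Protocol B is essentially a sequence of keep/discard predicates followed by a ranking, which the sketch below makes explicit while logging how many candidates survive each stage. The SA and strain thresholds come from the protocol; the HOMO/LUMO window standing in for "plausible ranges" is a hypothetical placeholder, as are all dictionary keys.

```python
def run_funnel(candidates, stages, n_final=20, rank_key="predicted_activity"):
    """Apply (name, keep) filters in order, then rank the survivors and keep the top n_final."""
    counts = [("generated", len(candidates))]
    pool = list(candidates)
    for name, keep in stages:
        pool = [c for c in pool if keep(c)]
        counts.append((name, len(pool)))
    final = sorted(pool, key=lambda c: c[rank_key], reverse=True)[:n_final]
    return final, counts


# Thresholds from Protocol B; the HOMO/LUMO window is a hypothetical placeholder.
STAGES = [
    ("synthetic accessibility", lambda c: c["sa_score"] >= 0.55),
    ("strain energy", lambda c: c["strain_kcal_mol"] <= 50.0),
    ("DFTB pre-screen", lambda c: 1.0 <= c["homo_lumo_gap_ev"] <= 4.0),
]
```

Here `predicted_activity` would hold the DFT-refined prediction from Step 4, and the per-stage `counts` make it easy to check observed pass rates against the ~60%, ~85%, and ~20% quoted above.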

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Catalyst AI Research |
|---|---|
| Generative Model (e.g., G-SchNet, G2G) | Core AI to propose new molecular structures by exploring chemical space. |
| Fast QM Calculator (DFTB/xtb) | Provides rapid, approximate quantum mechanical properties for pre-screening thousands of candidates. |
| High-Fidelity QM Suite (Gaussian, ORCA) | Delivers accurate electronic structure calculations (DFT) for final candidate selection. |
| Retrosynthesis AI (ASKCOS, IBM RXN) | Evaluates synthetic feasibility and routes, crucial for realistic exploitation. |
| Conformer Generator (RDKit, CONFAB) | Produces realistic 3D geometries for stability checks and descriptor calculation. |
| Automated Reaction Platform (Chemspeed, Unchained Labs) | Enables high-throughput experimental validation of AI-proposed catalysts. |
| Descriptor Database (CatBERTa, OCELOT) | Pre-trained models or libraries for mapping structures to catalytic properties. |

Troubleshooting Guides & FAQs

FAQ 1: How do I improve generative model performance when training data for novel catalyst compositions is extremely sparse (e.g., < 50 data points)?

- Answer: Sparse data is a fundamental challenge. The recommended approach is a hybrid strategy combining physics-informed data augmentation and transfer learning.

- Physics-Informed Data Augmentation: Use Density Functional Theory (DFT) or semi-empirical methods (e.g., PM7) to generate auxiliary descriptors (e.g., adsorption energies, d-band centers, formation energies) for your known compositions. These calculated features, while not perfect, expand the feature space with physically meaningful data.

- Transfer Learning from a Proxy Domain: Pre-train your generative model (e.g., a Variational Autoencoder or a Graph Neural Network) on a large, computationally generated dataset (like the Open Catalyst Project OC20 dataset) that shares underlying principles (e.g., elemental properties, bonding types). Fine-tune the final layers on your small, sparse experimental dataset.

- Exploit Known Heuristics: Constrain the generative model's latent space using known scaling relationships (e.g., Brønsted–Evans–Polanyi principles) to penalize unrealistic candidate structures.

FAQ 2: My generative AI proposes catalyst candidates in a vast chemical space (high-dimensional). How can I efficiently validate and prioritize these for experimental synthesis?

- Answer: Prioritization requires a multi-fidelity filtering pipeline to balance exploration (novelty) and exploitation (predicted performance).

- First-Pass Computational Screening: Apply rapid, low-fidelity calculations (e.g., Machine Learning Force Fields, MLFFs) to filter for stability. Eliminate candidates with predicted positive formation energy (thermodynamically unstable) or unrealistic bond lengths.

- Synthesis Feasibility Check: Interface with a database of known synthesis routes (e.g., from the ICSD or literature-mined procedures). Use a retrosynthesis model (e.g., based on the MIT ASKCOS framework) to score the feasibility of proposed precursors and pathways. Prioritize candidates with known or analogous synthesis protocols.

- High-Fidelity Evaluation Subset: For the top 0.1% of candidates that pass both the stability and synthesis-feasibility filters, run high-fidelity DFT calculations on key reaction descriptors to refine activity predictions before committing to lab synthesis.

FAQ 3: The AI-suggested catalyst has a promising computed activity, but the proposed complex nanostructure seems impossible to synthesize. How should I proceed?

- Answer: This is a core synthesis feasibility challenge. Implement a "Synthesis-Aware" Discriminator within your generative AI's training loop.

- Method: Train a separate classifier model (the discriminator) to distinguish between "synthesizable" and "non-synthesizable" materials based on historical synthesis data (e.g., text-mined from scientific literature). During the generative model's training, the discriminator penalizes the generation of candidates it deems non-synthesizable. This actively shapes the generative output towards regions of chemical space with higher practical viability.
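A minimal sketch of how such a penalty can enter the generator's objective, assuming candidates are already encoded as numeric descriptor vectors and that historical synthesis outcomes exist as 0/1 labels. A plain logistic-regression classifier stands in for the discriminator; the function names and the penalty weight `lam` are illustrative assumptions, not part of any specific framework.

```python
import numpy as np

def train_synth_classifier(X, y, lr=0.1, epochs=2000):
    """Fit a logistic-regression 'discriminator' predicting P(synthesizable | descriptors)."""
    Xb = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * Xb.T @ (p - y) / len(y)  # gradient of the mean binary cross-entropy
    return w

def synth_probability(w, X):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return 1.0 / (1.0 + np.exp(-Xb @ w))

def penalized_score(pred_activity, p_synth, lam=1.0):
    """Generator objective: predicted activity minus a penalty for likely non-synthesizable output."""
    return pred_activity - lam * (1.0 - p_synth)

# Toy history: one descriptor; higher values were historically harder to make.
rng = np.random.default_rng(0)
X_hist = rng.uniform(0.0, 1.0, size=(200, 1))
y_hist = (X_hist[:, 0] < 0.5).astype(float)
w = train_synth_classifier(X_hist, y_hist)

candidates = np.array([[0.1], [0.9]])
activity = np.array([1.0, 1.2])  # the second candidate looks slightly more active
p = synth_probability(w, candidates)
scores = penalized_score(activity, p, lam=2.0)
```

In a real pipeline the discriminator would more likely be a neural network trained on text-mined synthesis records, and the penalized score would feed the generator's reward or loss at each training step.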

FAQ 4: How can I quantify the trade-off between exploring entirely new catalyst families and exploiting known, promising leads?

- Answer: Implement and track an Explicit Exploration-Exploitation Metric during your active learning cycles.

Table: Key Metrics for Balancing Exploration and Exploitation

| Metric | Formula / Description | Target Range (Guideline) | Interpretation |
|---|---|---|---|
| Exploration Ratio | (Novel Candidates Tested) / (Total Candidates Tested). A "novel" candidate lies farther than a threshold distance X from every training point in a relevant descriptor space (e.g., SOAP). | 20% - 40% per batch | Maintains search diversity and avoids local optima. |
| Exploitation Confidence | Mean predicted uncertainty (e.g., standard deviation from an ensemble model) for the top 10% of exploited candidates. | Decreasing trend over cycles | Indicates improved model confidence in promising regions. |
| Synthesis Success Rate | (Successfully Synthesized Candidates) / (Candidates Attempted). | Aim for >15% in exploratory batches | A pragmatic measure of feasibility constraints. |
| Performance Improvement | Δ in key figure of merit (e.g., turnover frequency, TOF) of the best new candidate vs. the previous champion. | Positive, sustained increments | Measures the efficacy of the overall search. |
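The first two metrics can be computed directly from descriptor vectors and ensemble predictions. The sketch below assumes each candidate is summarized by a fixed-length descriptor (for example, an averaged SOAP vector), uses Euclidean distance in that space, and takes the ensemble predictions as an array of shape (models, candidates); the threshold X from the table is left as a parameter.

```python
import numpy as np

def exploration_ratio(tested_desc, train_desc, threshold):
    """Fraction of tested candidates whose nearest training point is farther than `threshold`."""
    # Pairwise Euclidean distances, shape (n_tested, n_train)
    d = np.linalg.norm(tested_desc[:, None, :] - train_desc[None, :, :], axis=-1)
    return float(np.mean(d.min(axis=1) > threshold))

def exploitation_confidence(ensemble_preds, top_frac=0.10):
    """Mean ensemble standard deviation over the top `top_frac` candidates by ensemble mean.

    ensemble_preds has shape (n_models, n_candidates).
    """
    mean = ensemble_preds.mean(axis=0)
    std = ensemble_preds.std(axis=0)
    k = max(1, int(round(top_frac * mean.size)))
    top = np.argsort(mean)[::-1][:k]  # indices of the k highest predicted means
    return float(std[top].mean())
```

Tracking both numbers per active-learning cycle gives the trend lines the table's target ranges refer to.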

Experimental Protocols

Protocol 1: Implementing a Multi-Fidelity Candidate Filtering Pipeline

- Input: List of 10,000 AI-generated catalyst compositions (e.g., ternary alloys, doped perovskites).

- Step 1 - Stability Filter (Low-Fidelity): Use M3GNet or CHGNet MLFFs via the `matgl` package to compute the predicted formation energy per atom. Discard all candidates with E_form > 0 eV/atom (or a domain-specific threshold). Expected yield: ~30%.

- Step 2 - Similarity & Novelty Check: Compute the Smooth Overlap of Atomic Positions (SOAP) descriptor for the remaining candidates and compare it against a database of known synthesized materials. Tag each candidate with a similarity score. Separate candidates into an "exploit" stream (high similarity, high predicted performance) and an "explore" stream (low similarity).

- Step 3 - Synthesis Pathway Scoring (Medium-Fidelity): For the "exploit" stream and a random subset of the "explore" stream, query a local instance of the ASKCOS API for retrosynthesis pathways. Candidates with a pathway confidence score > 50% are prioritized for the next step.

- Step 4 - High-Fidelity DFT Validation: Perform DFT calculations (e.g., with VASP or Quantum ESPRESSO) on the top 50 prioritized candidates to compute accurate adsorption energies and activation barriers.
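The four steps can be sketched as a simple funnel. This assumes the expensive tools (MLFF relaxation, SOAP comparison, ASKCOS scoring) have already been run and their outputs stored on each candidate record; the `Candidate` fields, thresholds, and the 20% explore sampling fraction are hypothetical choices, and the 50% pathway confidence is treated as a hard cut.

```python
import random
from dataclasses import dataclass

@dataclass
class Candidate:
    # Hypothetical fields holding outputs of earlier tools
    cid: str
    e_form: float         # predicted formation energy, eV/atom (MLFF)
    min_distance: float   # descriptor distance to the nearest known material (SOAP)
    pred_perf: float      # surrogate-predicted performance
    pathway_score: float  # retrosynthesis confidence in [0, 1]

def filter_pipeline(cands, e_form_max=0.0, novelty_cut=0.5, explore_frac=0.2,
                    pathway_min=0.5, n_dft=50, seed=0):
    rng = random.Random(seed)
    # Step 1: stability filter (low fidelity)
    stable = [c for c in cands if c.e_form <= e_form_max]
    # Step 2: split into exploit (close to known materials) and explore (novel) streams
    exploit = [c for c in stable if c.min_distance <= novelty_cut]
    explore = [c for c in stable if c.min_distance > novelty_cut]
    # Step 3: pathway scoring for all exploit candidates plus a random explore subset
    n_explore = int(round(explore_frac * len(explore)))
    scored = exploit + rng.sample(explore, n_explore)
    feasible = [c for c in scored if c.pathway_score > pathway_min]
    # Step 4: the best-ranked feasible candidates go to DFT
    feasible.sort(key=lambda c: c.pred_perf, reverse=True)
    return feasible[:n_dft]
```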

Protocol 2: Active Learning Loop for Catalyst Discovery

- Initialization: Train a preliminary generative model and a separate property predictor (e.g., for adsorption energy) on all available sparse data.

- Candidate Generation: Use the generative model to propose 1,000 new candidates and rank them with a tuned acquisition function (e.g., Upper Confidence Bound, UCB) that balances predicted performance (mean) against uncertainty (variance).

- Batch Selection: From the 1,000, select a batch of 50 for the next cycle using the D-optimality criterion to maximize diversity in the selected batch, while ensuring at least 40% meet the synthesis feasibility score from Protocol 1, Step 3.

- Evaluation & Update: Obtain ground truth data for the batch via Protocol 1. Augment the training dataset. Retrain both the generative model and the property predictor.

- Iteration: Repeat the candidate generation, batch selection, and evaluation steps for 10-20 cycles, monitoring the metrics in the table above.
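One way to combine UCB with the D-optimality criterion is a two-stage selection: keep a pool of the highest-UCB candidates, then greedily add the candidate that most increases the log-determinant of the batch's kernel matrix. This sketch assumes the property predictor already supplies a mean and standard deviation per candidate, uses an RBF kernel on descriptor vectors, and omits the 40% synthesis-feasibility quota for brevity; the naive determinant recomputation is fine for a few hundred candidates but not for large pools.

```python
import numpy as np

def ucb(mean, std, kappa=2.0):
    return mean + kappa * std

def rbf_kernel(X, lengthscale=1.0):
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq / lengthscale ** 2)

def select_batch(X, mean, std, batch_size=50, pool_size=200, kappa=2.0, jitter=1e-6):
    """Top-UCB pool, then greedy log-det maximization (a D-optimality proxy for diversity)."""
    pool = np.argsort(ucb(mean, std, kappa))[::-1][:pool_size]
    K = rbf_kernel(X[pool]) + jitter * np.eye(len(pool))
    chosen = [0]  # start from the highest-UCB candidate
    while len(chosen) < min(batch_size, len(pool)):
        best, best_val = None, -np.inf
        for j in range(len(pool)):
            if j in chosen:
                continue
            idx = chosen + [j]
            _, logdet = np.linalg.slogdet(K[np.ix_(idx, idx)])
            if logdet > best_val:
                best, best_val = j, logdet
        chosen.append(best)
    return pool[chosen]  # indices into the original candidate array
```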

Visualizations

Title: Overcoming Sparse Data with Hybrid Training

Title: Multi-Fidelity Filtering Pipeline

Title: Active Learning Loop for Catalyst AI

The Scientist's Toolkit: Research Reagent & Computational Solutions

Table: Essential Resources for AI-Driven Catalyst Discovery

| Item / Solution | Function / Purpose | Example (Reference) |
|---|---|---|
| OC20 Dataset | Large-scale dataset of DFT relaxations for catalyst surfaces; essential for pre-training (transfer learning) to combat sparse data. | Open Catalyst Project (https://opencatalystproject.org/) |
| M3GNet/CHGNet | Graph Neural Network-based Machine Learning Force Fields (MLFFs); enables rapid, low-fidelity stability screening of thousands of candidates. | matgl Python package (https://github.com/materialsvirtuallab/matgl) |
| ASKCOS Framework | Retrosynthesis planning software; provides synthesis feasibility scores and suggested pathways for organic molecules and, increasingly, inorganic complexes. | MIT ASKCOS (https://askcos.mit.edu/) |
| DScribe Library | Calculates advanced atomic structure descriptors (e.g., SOAP, MBTR) crucial for quantifying material similarity and novelty in high-dimensional space. | Python dscribe (https://singroup.github.io/dscribe/) |
| VASP / Quantum ESPRESSO | High-fidelity DFT software for final-stage validation of electronic properties and reaction energetics on prioritized candidates. | Commercial (VASP) & Open-Source (QE) |
| Active Learning Manager | Orchestrates the exploration-exploitation loop (batch selection, model retraining, data management) via custom scripts or platforms such as DeepChem or modAL. | Python modAL framework (https://modal-python.readthedocs.io/) |

Strategic Frameworks: AI Architectures and Algorithms for Optimal Balance

Troubleshooting Guides & FAQs

Q1: My VAE-generated molecular structures are invalid or violate chemical rules. How can I improve validity rates? A: This is a common issue where the decoder exploits the latent space without chemical constraint. Implement a Validity-Constrained VAE (VC-VAE) by integrating a rule-based penalty term into the reconstruction loss. The penalty term can be calculated using open-source toolkits like RDKit to check for valency errors and unstable ring systems. Additionally, pre-process your training dataset to remove all invalid SMILES strings to prevent the model from learning corrupt patterns.

Q2: The generator in my GAN for protein sequence design collapses, producing limited diversity (mode collapse). What are the mitigation strategies? A: Mode collapse indicates the generator is exploiting a few successful patterns. Employ the following experimental protocol:

- Switch to a Wasserstein GAN with Gradient Penalty (WGAN-GP): This uses a critic (not a discriminator) with a Lipschitz constraint enforced via gradient penalty, leading to more stable training and better gradient signals.

- Implement Mini-batch Discrimination: Allow the discriminator to assess an entire batch of samples, making it harder for the generator to fool it with a single mode.

- Use Unrolled GANs: Optimize the generator against multiple future steps of the discriminator, preventing it from over-adapting to the discriminator's current state.
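For intuition, the forward pass of a minibatch-discrimination layer (in the style of Salimans et al., 2016) can be written in NumPy. It appends to each sample's features a set of statistics measuring how close that sample is to the rest of the batch, so a collapsed batch is easy for the discriminator to spot. In practice this would be implemented in PyTorch or TensorFlow so that `T` is learned; here `T` is random, and the self-similarity term is excluded, which is one common variant.

```python
import numpy as np

def minibatch_discrimination(features, T):
    """features: (N, A) discriminator features; T: (A, B, C) tensor (learned in practice).

    Returns (N, A + B): features concatenated with B closeness statistics per sample.
    """
    N = features.shape[0]
    A, B, C = T.shape
    M = (features @ T.reshape(A, B * C)).reshape(N, B, C)
    # L1 distance between every pair of samples for each of the B kernels: (N, N, B)
    l1 = np.abs(M[:, None, :, :] - M[None, :, :, :]).sum(axis=-1)
    closeness = np.exp(-l1)
    # Sum over the other samples; subtract the self term exp(0) = 1
    o = closeness.sum(axis=1) - 1.0
    return np.concatenate([features, o], axis=1)

rng = np.random.default_rng(0)
T = rng.normal(size=(4, 3, 2))
collapsed = np.tile(rng.normal(size=(1, 4)), (5, 1))  # 5 identical samples
diverse = 10.0 * rng.normal(size=(5, 4))
out_collapsed = minibatch_discrimination(collapsed, T)  # every statistic equals N - 1 = 4
out_diverse = minibatch_discrimination(diverse, T)      # statistics fall below 4
```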

Q3: Training my diffusion model for small molecule generation is extremely slow. How can I accelerate the process? A: The iterative denoising process is computationally expensive. Utilize a Denoising Diffusion Implicit Model (DDIM) schedule, which allows for a significant reduction in sampling steps (e.g., from 1000 to 50) without a major loss in sample quality. Furthermore, employ a Latent Diffusion Model (LDM): train a VAE to compress molecules into a smaller latent space, then train the diffusion process on these latent representations. This reduces dimensionality and speeds up both training and inference.
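A sketch of deterministic DDIM sampling (eta = 0) over a strided subset of 50 of the 1000 training timesteps. The linear beta schedule is the common DDPM default and is an assumption here, and `eps_model` stands for a hypothetical trained noise-prediction network; in an LDM the array `x` would be the latent vector `z` rather than a molecule.

```python
import numpy as np

def linear_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def ddim_sample(eps_model, x_T, alpha_bar, n_steps=50):
    """Deterministic DDIM update on every (T // n_steps)-th training timestep."""
    T = len(alpha_bar)
    timesteps = np.arange(0, T, T // n_steps)[::-1]  # 980, 960, ..., 0 for T=1000
    x = x_T
    for i, t in enumerate(timesteps):
        a_t = alpha_bar[t]
        a_prev = alpha_bar[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = eps_model(x, t)
        x0_pred = (x - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)  # predicted clean sample
        x = np.sqrt(a_prev) * x0_pred + np.sqrt(1.0 - a_prev) * eps
    return x

# Sanity check: with an oracle noise predictor for a fixed x0, DDIM returns x0 exactly.
alpha_bar = linear_alpha_bar()
x0 = np.array([0.3, -1.2, 0.7])
oracle = lambda x, t: (x - np.sqrt(alpha_bar[t]) * x0) / np.sqrt(1.0 - alpha_bar[t])
x_gen = ddim_sample(oracle, np.random.default_rng(0).normal(size=3), alpha_bar)
```

A trained network only approximates the oracle, which is why fewer steps trade some sample quality for speed.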

Q4: How can I quantitatively balance exploration (diversity) and exploitation (property optimization) when using these models for catalyst discovery? A: Implement a Bayesian Optimization (BO) loop around your generative model. Use the model (e.g., a Conditional VAE or Diffusion Model) to generate a candidate pool (exploration). A surrogate model (e.g., Gaussian Process) predicts their properties, and an acquisition function (e.g., Upper Confidence Bound) selects the most promising candidates for evaluation (exploitation). The new experimental data is then fed back to retrain the generative model.

Table 1: Quantitative Comparison of Generative Model Performance on Molecular Generation Tasks (MOSES Benchmark)

| Model Type | Validity (%) | Uniqueness (%) | Novelty (%) | Reconstruction Accuracy (%) | Training Stability |
|---|---|---|---|---|---|
| VAE (Standard) | 85.2 | 94.1 | 80.5 | 76.3 | High |
| GAN (WGAN-GP) | 95.7 | 100.0 | 99.9 | N/A | Medium |
| Diffusion (DDPM) | 99.8 | 99.5 | 95.2 | 90.1 | Very High |

Q5: What is a practical experimental protocol for iterative catalyst design using a diffusion model? A: Protocol for Latent Diffusion-Driven Catalyst Optimization

- Data Curation: Assemble a dataset of known catalyst structures (e.g., as SMILES or 3D graphs) paired with key performance metrics (e.g., turnover frequency, yield).

- Model Training: Train a Latent Diffusion Model (LDM):

  - Encoder: A graph neural network (GNN) compresses each molecular graph into a latent vector `z`.

  - Diffusion: Train a noise prediction network (e.g., a U-Net) to denoise `z_t` over timesteps `t`.

  - Decoder: The GNN decoder reconstructs the molecule from the denoised latent vector.

- Conditional Generation: Fine-tune the model for conditional generation by feeding the performance metric as an additional input to the noise prediction network.

- Inverse Design Loop: For a target property, sample from the conditional diffusion model to generate novel candidate structures.

- Experimental Validation: Synthesize and test top-predicted candidates. Add the new data (structure & property) to the training set and retrain the model iteratively.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for Generative AI in Molecular Research

| Item / Software | Function & Explanation |
|---|---|
| RDKit | Open-source cheminformatics toolkit used for converting SMILES to molecules, calculating descriptors, enforcing chemical validity, and visualizing structures. |
| PyTorch / TensorFlow | Deep learning frameworks essential for building, training, and deploying custom VAE, GAN, and Diffusion model architectures. |
| JAX | Increasingly used for high-performance numerical computing and efficient implementation of diffusion model sampling loops. |
| DeepChem | Library that provides out-of-the-box implementations of molecular graph encoders (GNNs) and datasets for drug discovery tasks. |
| GuacaMol / MOSES | Benchmarking frameworks and datasets specifically designed for evaluating generative models on molecular generation tasks. |
| Open Catalyst Project | A dataset and benchmark for catalyst discovery, containing DFT relaxations of adsorbates on surfaces, useful for training property prediction models. |

Visualizations

Title: VAE Training and Latent Space Encoding Workflow

Title: Adversarial Training Feedback Loop in GANs

Title: Diffusion Model Forward and Reverse Process

Title: AI-Driven Catalyst Discovery Exploration-Exploitation Loop

Bayesian Optimization and Thompson Sampling for Intelligent Experiment Selection

Troubleshooting Guides and FAQs

Q1: Why does my Bayesian Optimization (BO) loop appear to get "stuck," repeatedly suggesting similar experiments instead of exploring new regions of the catalyst space?

A1: This is a classic sign of an overly exploitative search, often due to inappropriate hyperparameters in the acquisition function or kernel.

- Check the acquisition function. If using Expected Improvement (EI), the internal xi parameter controls exploration; increase its value (e.g., from 0.01 to 0.1) to encourage more exploration.

- Check the kernel length scales. Overly large length scales in the Gaussian Process (GP) kernel cause the model to generalize too much, missing local features. Use automatic relevance determination (ARD) or manually reduce length scales to make the model more sensitive to parameter changes.

- Verify your noise setting. An underestimated GP noise parameter (alpha) can lead to overfitting, causing the algorithm to over-trust predictions and exploit excessively.
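To see the effect of the xi parameter, here is a standalone Expected Improvement function for maximization. The two candidates in the usage lines are made-up numbers: a confident point just above the incumbent and an uncertain point below it.

```python
import math
import numpy as np

def expected_improvement(mu, sigma, f_best, xi=0.01):
    """EI for maximization; xi is the required improvement margin."""
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    imp = mu - f_best - xi
    ei = np.maximum(imp, 0.0)  # limiting value where sigma == 0
    pos = sigma > 0
    z = imp[pos] / sigma[pos]
    cdf = 0.5 * (1.0 + np.vectorize(math.erf, otypes=[float])(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    ei[pos] = imp[pos] * cdf + sigma[pos] * pdf
    return ei

mu, sigma = np.array([1.1, 0.9]), np.array([0.05, 0.2])
ei_low = expected_improvement(mu, sigma, f_best=1.0, xi=0.01)
ei_high = expected_improvement(mu, sigma, f_best=1.0, xi=0.2)
```

With these numbers, xi = 0.01 ranks the confident point first, while xi = 0.2 ranks the uncertain point first: a larger margin makes small, near-certain improvements worth little, so the search drifts toward unexplored regions.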

Q2: My Thompson Sampling (TS) algorithm shows high performance variance between runs on the same catalyst discovery problem. Is this normal, and how can I stabilize it?

A2: Yes, inherent stochasticity in TS can cause variance. To reduce it:

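- Increase the number of samples. When sampling from the GP posterior, draw more than one sample (e.g., 5-10) per iteration and select the point with the best average score across samples. Note that averaging many samples pushes the choice toward the posterior mean, so this trades some exploration for stability.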

- Implement a hybrid approach. Use TS for the first N exploration-heavy iterations, then switch to a more exploitative BO acquisition function like EI or Probability of Improvement (PI) to refine the best candidates.

- Use a common random seed. For reproducibility and run comparison, fix the random seed for the sampling step.

Q3: How do I handle categorical or mixed-type parameters (e.g., catalyst dopant type and temperature) in my experimental setup?

A3: Standard GP kernels require numerical inputs. You must encode categorical parameters.

- One-Hot Encoding: Transform a categorical parameter with k choices into k binary parameters. This works best with a dedicated kernel (e.g., a Hamming kernel) or by combining a categorical kernel with a continuous kernel.

- Bayesian Optimization with Tree-structured Parzen Estimator (BO-TPE): Consider using TPE, which natively handles mixed search spaces, as an alternative to GP-based BO for complex parameter types.
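A sketch of one such combined kernel, assuming continuous parameters are scaled to [0, 1] and categorical parameters are integer-coded: an RBF kernel on the continuous columns multiplied by an exponentiated Hamming kernel on the categorical columns. Counting mismatched integer codes carries the same information as comparing one-hot vectors. The length scale and `cat_scale` would normally be fitted rather than fixed as here.

```python
import numpy as np

def mixed_kernel(Xc1, Xk1, Xc2, Xk2, lengthscale=1.0, cat_scale=1.0):
    """RBF(continuous) * exp(-Hamming(categorical) / cat_scale).

    Xc*: (n, d_cont) continuous features, e.g. scaled temperature.
    Xk*: (n, d_cat) integer category codes, e.g. dopant index.
    """
    sq = np.sum((Xc1[:, None, :] - Xc2[None, :, :]) ** 2, axis=-1)
    k_cont = np.exp(-0.5 * sq / lengthscale ** 2)
    # Fraction of categorical columns that differ for each pair
    hamming = np.mean(Xk1[:, None, :] != Xk2[None, :, :], axis=-1)
    k_cat = np.exp(-hamming / cat_scale)
    return k_cont * k_cat  # a product of valid kernels is a valid kernel
```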

Q4: The computational cost of refitting the Gaussian Process model is becoming prohibitive as my experiment history grows. What are my options?

A4: This is a common scalability challenge.

- Use sparse Gaussian Process approximations. Inducing-point methods, such as the variational free energy (VFE) approximation, summarize the data through m "inducing" points (m ≪ n), drastically reducing the cost from O(n³) to O(n·m²).

- Implement a moving window. If older experiments are less relevant, refit the GP only on the most recent N experiments (e.g., the last 100).

- Switch to a bandit algorithm. For very high-dimensional spaces, consider simpler contextual bandit algorithms as an alternative to full BO.
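The inducing-point idea can be sketched with the subset-of-regressors (DTC) predictive mean, which only requires solving an m × m system. This is a NumPy illustration with a fixed RBF kernel and noise variance; GPyTorch or GPflow would also optimize the inducing locations Z (in VFE, through a variational bound), which this sketch does not do. When Z equals the training inputs, the result matches the full GP mean, a useful sanity check.

```python
import numpy as np

def rbf(A, B, lengthscale=1.0):
    sq = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq / lengthscale ** 2)

def full_gp_mean(X, y, Xs, noise=0.1):
    """Exact GP predictive mean: O(n^3) solve. `noise` is the noise variance."""
    K = rbf(X, X) + noise * np.eye(len(X))
    return rbf(Xs, X) @ np.linalg.solve(K, y)

def sparse_gp_mean(X, y, Xs, Z, noise=0.1):
    """Predictive mean with m inducing points Z: K_*m (noise*K_mm + K_mn K_nm)^-1 K_mn y.

    Building K_mn K_nm costs O(n m^2); the solve is only m x m.
    """
    Kmn = rbf(Z, X)
    A = noise * rbf(Z, Z) + Kmn @ Kmn.T
    return rbf(Xs, Z) @ np.linalg.solve(A, Kmn @ y)
```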

Key Quantitative Data in Catalyst Optimization

Table 1: Performance Comparison of Experiment Selection Algorithms

| Algorithm | Avg. Best Yield Found (%) | Experiments to Reach 90% Optimum | Computational Overhead | Best for Phase |
|---|---|---|---|---|
| Random Search | 78.2 ± 5.1 | 150+ | Very Low | Initial Exploration |
| Bayesian Optimization (EI) | 94.7 ± 2.3 | 45 | High | Balanced Search |
| Thompson Sampling (GP Posterior) | 92.1 ± 4.8 | 38 | Medium-High | Explicit Exploration |
| Grid Search | 90.5 ± 1.5 | 120 | Low | Low-Dimensional Spaces |

Table 2: Impact of Acquisition Function Hyperparameters on Catalyst Discovery

| Acquisition Function | Exploration Parameter (ξ for EI/PI, κ for UCB) | Avg. Regret (Lower is Better) | % of Experiments in Top 5% Yield Region |
|---|---|---|---|
| Expected Improvement (EI) | 0.01 | 12.5 | 65% |
| Expected Improvement (EI) | 0.10 | 8.2 | 42% |
| Probability of Improvement (PI) | 0.01 | 15.1 | 78% |
| Upper Confidence Bound (UCB) | 2.0 | 9.8 | 48% |

Experimental Protocols

Protocol 1: Standard Bayesian Optimization Loop for Catalyst Screening

- Define Search Space: Parameterize catalyst variables (e.g., molar ratios, doping concentrations, synthesis temperature ranges).

- Initialize with Design of Experiments (DoE): Perform 5-10 initial experiments using Latin Hypercube Sampling to seed the model.

- Model Training: Fit a Gaussian Process (GP) regression model with a Matern 5/2 kernel to the experimental data (inputs: parameters, target: yield/activity).

- Acquisition Optimization: Compute the Expected Improvement (EI) across the search space. Use a multi-start L-BFGS-B optimizer to find the parameter set that maximizes EI.

- Experiment Execution: Synthesize and test the catalyst suggested by the acquisition optimization step.

- Iterate: Append the new result to the dataset. Repeat the model training, acquisition optimization, and experiment execution steps for a fixed budget (e.g., 50-100 total experiments).
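A compact NumPy sketch of this loop on a toy objective. Assumptions: inputs are scaled to the unit hypercube, the Matérn 5/2 length scale is fixed rather than fitted, and a dense random candidate set replaces the multi-start L-BFGS-B acquisition optimizer named above. A real campaign would use a library such as BoTorch or scikit-optimize, fit kernel hyperparameters by maximum likelihood, and run the full 50-100 experiment budget.

```python
import math
import numpy as np

def latin_hypercube(n, d, rng):
    """n points in [0, 1]^d with exactly one point per 1/n stratum in every dimension."""
    u = (rng.random((n, d)) + np.arange(n)[:, None]) / n
    for j in range(d):
        u[:, j] = u[rng.permutation(n), j]  # shuffle strata independently per dimension
    return u

def matern52(A, B, lengthscale=0.2):
    """Matern 5/2 kernel with unit signal variance."""
    r = np.sqrt(np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)) / lengthscale
    s5r = math.sqrt(5.0) * r
    return (1.0 + s5r + s5r ** 2 / 3.0) * np.exp(-s5r)

def gp_posterior(X, y, Xs, noise=1e-4):
    """Zero-mean GP posterior mean and standard deviation at Xs."""
    L = np.linalg.cholesky(matern52(X, X) + noise * np.eye(len(X)))
    Ks = matern52(X, Xs)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return Ks.T @ alpha, np.sqrt(var)

def expected_improvement(mu, sigma, f_best, xi=0.01):
    imp = mu - f_best - xi
    z = imp / sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf, otypes=[float])(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return imp * cdf + sigma * pdf

def bo_loop(objective, d, n_init=8, budget=30, n_cand=2000, seed=0):
    rng = np.random.default_rng(seed)
    X = latin_hypercube(n_init, d, rng)  # initial design of experiments
    y = np.array([objective(x) for x in X])
    while len(y) < budget:
        y_std = (y - y.mean()) / (y.std() + 1e-12)  # standardize for the unit-variance prior
        cand = rng.random((n_cand, d))  # random candidates instead of L-BFGS-B
        mu, sigma = gp_posterior(X, y_std, cand)
        x_next = cand[np.argmax(expected_improvement(mu, sigma, y_std.max()))]
        X = np.vstack([X, x_next])
        y = np.append(y, objective(x_next))  # "run" the suggested experiment
    return X, y

toy = lambda x: -float(np.sum((x - 0.3) ** 2))  # hypothetical objective, optimum at (0.3, 0.3)
X_obs, y_obs = bo_loop(toy, d=2, budget=30)
```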

Protocol 2: Thompson Sampling for High-Throughput Exploration

- Prior Model: Start with a GP prior defined over the catalyst parameter space.

- Posterior Sampling: At each iteration, draw a random function sample from the current GP posterior.

- Selection: Identify the catalyst parameters that maximize the drawn sample function.

- Parallel Experimentation: For a batch of k experiments, draw k independent samples from the posterior and take the maximizer of each sample, giving k parameter sets.

- Batch Evaluation: Conduct all k catalyst experiments in parallel.

- Model Update: Update the GP model with the results from the entire batch. Repeat from the posterior sampling step.
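A minimal sketch of batch Thompson sampling over a discrete candidate set, assuming an RBF-kernel GP with fixed hyperparameters. Each batch member comes from a separate posterior function sample; skipping candidates already chosen in the batch is an addition to the protocol above, made so that the k experiments are distinct.

```python
import numpy as np

def rbf(A, B, lengthscale=0.2):
    sq = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq / lengthscale ** 2)

def thompson_batch(X, y, cand, k, noise=1e-4, seed=0):
    """Return indices of k distinct candidates, one per posterior function sample."""
    rng = np.random.default_rng(seed)
    K = rbf(X, X) + noise * np.eye(len(X))
    Ks = rbf(X, cand)
    mu = Ks.T @ np.linalg.solve(K, y)                       # posterior mean on candidates
    cov = rbf(cand, cand) - Ks.T @ np.linalg.solve(K, Ks)   # posterior covariance
    cov = 0.5 * (cov + cov.T)
    w, V = np.linalg.eigh(cov)
    root = V * np.sqrt(np.clip(w, 0.0, None))  # cov ~= root @ root.T, robust to round-off
    chosen = []
    for _ in range(k):
        f = mu + root @ rng.standard_normal(len(cand))  # one sampled function
        f[chosen] = -np.inf                              # keep batch members distinct
        chosen.append(int(np.argmax(f)))
    return chosen

X_obs = np.array([[0.1], [0.5], [0.9]])
y_obs = np.array([0.0, 1.0, 0.0])
grid = np.linspace(0.0, 1.0, 51)[:, None]
batch = thompson_batch(X_obs, y_obs, grid, k=5)
```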

Visualizations

BO-TS Experiment Selection Loop

Balancing Feedback Loop in Catalyst AI

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Catalyst AI Research Workflow

| Item | Function/Application in AI-Driven Experiments |
|---|---|
| High-Throughput Synthesis Robot | Enables automated, parallel preparation of catalyst libraries as defined by BO/TS parameter suggestions. |
| Multi-Channel Microreactor System | Allows for simultaneous testing of multiple catalyst candidates under controlled, identical conditions. |
| In-Line GC/MS or HPLC | Provides rapid, quantitative analysis of reaction products for immediate feedback into the AI model's dataset. |
| Metal Salt Precursors & Ligand Libraries | Diverse, well-characterized chemical building blocks for constructing the catalyst search space. |
| GPyTorch or GPflow Library | Software for building and training scalable Gaussian Process models as the surrogate model in BO. |
| Ax/Botorch or scikit-optimize Platform | Integrated frameworks providing implementations of BO, TS, and various acquisition functions. |
| Laboratory Information Management System (LIMS) | Critical for tracking experimental metadata, ensuring data integrity, and linking parameters to outcomes for the AI model. |

Technical Support Center: Troubleshooting Guides & FAQs

FAQ: Fundamental Concepts & Application

Q1: How does reward shaping specifically address the exploration-exploitation dilemma in catalyst generative AI research? A1: In catalyst discovery, exhaustive search of chemical space is infeasible. Reward shaping provides intermediate, guided rewards to bias the RL agent’s policy. This reduces random (high-cost) exploration and accelerates the exploitation of promising catalyst regions. Shaped rewards can incorporate domain knowledge (e.g., favorable molecular descriptors) to make exploration more informed, directly balancing the need to try novel structures (exploration) with refining known high-performing ones (exploitation).

Q2: What are common pitfalls when designing shaped reward functions that lead to suboptimal or biased policies? A2: Common pitfalls include:

- Positive Reward Cycles (Agent Gaming): The agent discovers a loop to accumulate shaped rewards without improving the true objective (e.g., optimizing a proxy property instead of actual catalytic activity).

- Over-Weighted Shaping: Shaping rewards are so large that the agent chases shaped-reward sources instead of the true objective and converges to local maxima.

- Loss of Optimal Policy Guarantee (Potential-Based Violation): If shaping is not potential-based, the optimal policy for the shaped rewards may not align with the optimal policy for the true objective.

Q3: Can you provide a quantitative comparison of key reward shaping strategies? A3: The table below summarizes key approaches based on recent literature.

| Strategy | Core Mechanism | Primary Advantage | Key Disadvantage | Suitability for Catalyst Discovery |
|---|---|---|---|---|
| Potential-Based Shaping | Adds γΦ(s') - Φ(s) to the reward, where γ is the discount factor. | Preserves optimal policy guarantees. | Requires domain expertise to design a good potential function Φ. | High: Safe for expensive simulations. |
| Dynamically Weighted Shaping | Adjusts shaping weight during training. | Can emphasize exploration early, exploitation later. | Introduces hyperparameters for schedule tuning. | Medium-High: Adapts to different search phases. |
| Intrinsic Motivation (e.g., Curiosity) | Adds reward for visiting novel/uncertain states. | Promotes robust exploration of state space. | Can lead to the "noisy TV" problem: the agent fixates on unpredictable but uninformative states. | Medium: Good for initial space exploration. |
| Proxy Reward Shaping | Uses computationally cheap property predictors as reward. | Dramatically reduces cost per evaluation. | Risk of optimizer gaming if the proxy correlates poorly with the true objective. | High: Essential for iterative generative design. |
| Human-in-the-Loop Shaping | Expert feedback incorporated as reward adjustments. | Leverages implicit expert knowledge. | Not scalable; introduces subjective bias. | Low-Medium: For small-scale, high-value targets. |

Troubleshooting Guide: Common Experimental Issues

Issue T1: Agent Performance Plateaus Rapidly, Ignoring Large Regions of Chemical Space

- Symptoms: The generative model produces minor variations of the same molecular scaffold early in training. Quantitative diversity metrics (e.g., internal diversity, unique valid %) drop.

- Diagnosis: Overly aggressive exploitation due to poorly scaled or dominant shaped rewards.

- Solution Protocol:

- Audit Reward Scale: Ensure the magnitude of the shaped reward does not exceed 10-20% of the primary reward (e.g., predicted catalytic activity). Use `R_total = R_primary + β * R_shaped`. Start with β = 0.1.

- Implement Dynamic Weighting: Apply a decay schedule to β, for example `β(t) = β_initial * exp(-t / τ)`, where `t` is the training step and `τ` is a decay constant (e.g., 5000 steps).

- Integrate Diversity Bonus: Add a small intrinsic reward based on Tanimoto dissimilarity to a rolling buffer of recent molecules: `R_diversity = α * (1 - avg_similarity)`. Set α low (e.g., 0.05).

- Validate: Monitor the per-batch diversity metric. It should stabilize, not monotonically decrease.
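The three reward terms above can be combined in a few lines. Fingerprints are represented as Python sets of on-bit indices (for example, taken from Morgan fingerprints computed elsewhere), and the rolling-buffer size is an illustrative assumption.

```python
import math
from collections import deque

def beta_schedule(t, beta_initial=0.1, tau=5000.0):
    """beta(t) = beta_initial * exp(-t / tau): the shaped reward fades as training proceeds."""
    return beta_initial * math.exp(-t / tau)

def tanimoto(a, b):
    """Tanimoto similarity between fingerprints stored as sets of on-bit indices."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def total_reward(r_primary, r_shaped, fp, recent_fps, t, alpha=0.05):
    """R_total = R_primary + beta(t) * R_shaped + alpha * (1 - avg_similarity)."""
    if recent_fps:
        avg_sim = sum(tanimoto(fp, other) for other in recent_fps) / len(recent_fps)
    else:
        avg_sim = 0.0  # empty buffer: treat the molecule as fully novel
    return r_primary + beta_schedule(t) * r_shaped + alpha * (1.0 - avg_sim)

recent = deque(maxlen=500)  # rolling buffer of recent fingerprints (size is an assumption)
```

After scoring each molecule, appending its fingerprint to `recent` keeps the diversity bonus relative to what the agent has produced lately rather than to the whole history.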

Issue T2: Agent Exploits Shaped Reward Loophole, Degrading Primary Objective

- Symptoms: Shaped reward (e.g., for synthetic accessibility) rises, but the primary reward (catalytic activity) stagnates or falls. Generated molecules may become trivially simple.

- Diagnosis: The shaped reward function is not potential-based, altering the optimal policy.

- Solution Protocol:

- Convert to Potential-Based Shape: Reformulate your shaping reward `F(s, a, s')` to the form `γΦ(s') - Φ(s)`, where γ is the RL discount factor.

- Example: Suppose you shaped based on molecular weight (MW), aiming for 300 g/mol. Instead of `F = -abs(MW(s') - 300)`, define the potential `Φ(s) = -abs(MW(s) - 300)`. The shaped reward becomes `abs(MW(s) - 300) - γ * abs(MW(s') - 300)`.

- Test Policy Invariance: Run short training runs with and without the transformed shaping. As the policy invariance theorem for potential-based shaping predicts, both should favor the same candidates under the true objective.

- Recalibrate: Retrain with the corrected potential-based shaping function.
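A small check of the corrected MW example. Summing the discounted shaping terms along any trajectory telescopes to γ^T Φ(s_T) - Φ(s_0), which depends only on the endpoints; this is why potential-based shaping leaves the optimal policy unchanged. The trajectory of molecular weights below is made up for illustration.

```python
def mw_potential(mw, target=300.0):
    """Phi(s) = -|MW(s) - target|: larger when the molecule is closer to the target weight."""
    return -abs(mw - target)

def shaping_term(mw_s, mw_next, gamma=0.99, target=300.0):
    """F = gamma * Phi(s') - Phi(s) = |MW(s) - target| - gamma * |MW(s') - target|."""
    return gamma * mw_potential(mw_next, target) - mw_potential(mw_s, target)

def discounted_shaping(mws, gamma=0.99, target=300.0):
    """Discounted sum of shaping terms along a trajectory of molecular weights."""
    return sum(gamma ** t * shaping_term(mws[t], mws[t + 1], gamma, target)
               for t in range(len(mws) - 1))

trajectory = [120.0, 210.0, 280.0, 310.0, 305.0]  # illustrative MW values per step
gamma = 0.99
lhs = discounted_shaping(trajectory, gamma)
rhs = gamma ** (len(trajectory) - 1) * mw_potential(trajectory[-1]) - mw_potential(trajectory[0])
```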

Experimental Protocols for Cited Key Experiments

Protocol P1: Validating Potential-Based Reward Shaping for a QM/RL Catalyst Pipeline

- Objective: Demonstrate that potential-based shaping improves sample efficiency without altering the final optimal catalyst candidate.

- Methodology:

- Baseline Setup: Train a PPO agent with a primary reward `R_primary = -MAE(predicted_activity, target)`.

- Shaping Setup: Train an identical agent with `R_total = R_primary + (γΦ(s') - Φ(s))`. Define `Φ(s)` as the negative squared deviation of the molecule's HOMO-LUMO gap from an ideal target (pre-calculated via a fast ML model).

- Control: Train a third agent with non-potential-based shaping, `R_total = R_primary - abs(HOMO-LUMO_gap(s') - target)`.

- Evaluation: Over 5 random seeds, compare the mean primary reward (not total reward) convergence curve and the final top-5 molecule sets. The potential-based and baseline agents should converge to similar primary reward maxima, while the non-potential-based agent may diverge.

- Key Metrics: Sample efficiency (steps to reach 90% of max reward), policy invariance success rate (5/5 seeds converging to same region).

Protocol P2: Dynamic Weighting for Exploration-Exploitation Phasing

- Objective: Optimize the transition from broad exploration to focused exploitation in a generative molecular design run.

- Methodology:

- Phase Detection: Implement a simple phase detector based on recent primary reward improvements (ΔR): `Phase = Exploration if std_dev(ΔR_last_100) > threshold`.

- Reward Formulation: `R_total = R_primary + w(t) * R_curiosity`, where `R_curiosity` is the prediction error of a dynamics model.

- Weight Schedule: `w(t) = w_max if Phase = Exploration, else w_min`. Use `w_max = 0.5` and `w_min = 0.05`.

- Run Experiment: Compare against fixed-weight (`w = 0.2`) and no-curiosity baselines over 20k training steps.

- Key Metrics: Coverage of relevant chemical space (using PCA of Morgan fingerprints), time to discover first high-reward candidate (>95%ile activity).
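A sketch of the phase-based schedule, assuming the primary reward is reported once per training step and that `threshold` has been tuned for the reward scale; the class and function names are hypothetical. Until at least two improvements have been recorded, the scheduler stays in the exploration phase.

```python
import statistics
from collections import deque

class PhaseWeightScheduler:
    """w(t) = w_max while recent reward improvements are volatile (exploration), else w_min."""

    def __init__(self, threshold, window=100, w_max=0.5, w_min=0.05):
        self.threshold = threshold
        self.deltas = deque(maxlen=window)  # last `window` values of delta R
        self.w_max, self.w_min = w_max, w_min
        self.prev_reward = None

    def update(self, primary_reward):
        if self.prev_reward is not None:
            self.deltas.append(primary_reward - self.prev_reward)
        self.prev_reward = primary_reward
        return self.weight()

    def weight(self):
        if len(self.deltas) < 2:
            return self.w_max  # too little history: stay exploratory
        exploring = statistics.stdev(self.deltas) > self.threshold
        return self.w_max if exploring else self.w_min

def total_reward(r_primary, r_curiosity, w):
    """R_total = R_primary + w(t) * R_curiosity."""
    return r_primary + w * r_curiosity
```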


The Scientist's Toolkit: Research Reagent Solutions

| Item / Solution | Function in RL Catalyst Research | Example / Specification |
|---|---|---|
| RL Frameworks | Provides algorithms (PPO, DQN, SAC) and training loops. | Stable-Baselines3, Ray RLlib. Use with custom environment. |
| Molecular Simulation Environment | Defines state/action space and calculates primary reward. | OpenAI Gym-like wrapper for RDKit or Schrödinger. |
| Fast Property Predictors | Serves as proxy for shaped reward or primary reward during pre-screening. | Graph neural network pre-trained on quantum-mechanical (DFT) data (e.g., MGNN). |
| Potential Function Library | Pre-defined, validated potential functions Φ(s) for common objectives. | Custom library including functions for QED, SA Score, HOMO-LUMO gap, logP. |
| Diversity Metrics Module | Calculates intrinsic rewards or monitors exploration health. | Functions for internal & external diversity using Tanimoto similarity on fingerprints. |
| Dynamic Weight Scheduler | Algorithm to adjust shaping weight β over time. | Cosine annealer or phase-based scheduler integrated into training loop. |
| Chemistry-Action Spaces | Defines valid molecular transformations for the RL agent. | RationaleRL-style fragment addition/removal, SMILES grammar mutations. |

Technical Support Center

Frequently Asked Questions (FAQs)

Q1: My generative AI model consistently proposes catalyst structures with excellent predicted activity and selectivity but very poor synthetic accessibility scores. How can I guide the model towards more realistic candidates? A1: This is a classic exploration-vs-exploitation challenge in which the model over-exploits the activity/selectivity objective. Implement a weighted multi-objective scoring function and increase the weight of the penalty for poor synthesizability (e.g., using SAscore or RAScore) in the overall cost function. Furthermore, incorporate a reaction-based generation algorithm (such as a retrosynthesis-aware model) instead of a purely property-based one, so that the generative process is grounded in known chemical transformations.

Q2: During the optimization loop, how do I prevent the ADMET property predictions (e.g., solubility, hERG inhibition) from becoming the dominant factor, causing a collapse in chemical diversity? A2: To balance this exploration-exploitation trade-off, use a Pareto-frontier optimization strategy. Instead of a single combined score, treat Activity, Selectivity, and each key ADMET property as separate objectives. Employ algorithms like NSGA-II (Non-dominated Sorting Genetic Algorithm II) to find a set of non-dominated optimal solutions. This maintains a population of diverse candidates that represent different trade-offs, preventing early convergence on a single property.
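The ranking step at the core of NSGA-II, fast non-dominated sorting, is short enough to sketch directly. It assumes every objective has been oriented so that larger is better (for example, negate hERG inhibition or SAscore) and omits NSGA-II's crowding-distance and variation operators; libraries such as pymoo provide the full algorithm.

```python
def dominates(a, b):
    """a dominates b (all objectives maximized): no worse everywhere, strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def non_dominated_fronts(points):
    """Fast non-dominated sorting; returns a list of fronts, each a list of indices."""
    n = len(points)
    dominated_by = [[] for _ in range(n)]  # indices that point i dominates
    counts = [0] * n                       # number of points dominating point i
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(points[i], points[j]):
                dominated_by[i].append(j)
            elif dominates(points[j], points[i]):
                counts[i] += 1
    fronts = [[i for i in range(n) if counts[i] == 0]]  # first front: the Pareto set
    while fronts[-1]:
        nxt = []
        for i in fronts[-1]:
            for j in dominated_by[i]:
                counts[j] -= 1
                if counts[j] == 0:
                    nxt.append(j)
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front
```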

Q3: The computational cost of running high-fidelity DFT calculations for every generated candidate for activity/selectivity is prohibitive. What is a feasible protocol? A3: Implement a tiered evaluation workflow. Use fast, low-fidelity ML models (e.g., graph neural networks) for initial screening and exploration of the chemical space. Only the top-performing candidates from this stage (the exploitation phase) are promoted to more accurate, costly computational methods (like DFT) or synthesis for validation. This hierarchical filtering efficiently balances broad exploration with precise exploitation of promising leads.

Q4: How can I quantitatively track whether my multi-objective optimization is successfully balancing all objectives and not ignoring one? A4: Monitor the evolution of the Pareto front. Calculate and log hypervolume metrics for each generation of your optimization. Create a table to compare key metrics across optimization runs:

Table 1: Multi-Objective Optimization Run Diagnostics

| Optimization Cycle | Hypervolume | # of Pareto Solutions | Avg. Activity (pIC50) | Avg. Synthesizability (SAscore, lower is better) | Avg. Solubility (LogS, higher is better) |
|---|---|---|---|---|---|
| Initial Population | 1.00 | 15 | 6.2 | 4.5 | -4.5 |
| Generation 50 | 2.45 | 22 | 7.8 | 3.8 | -4.0 |
| Generation 100 | 3.10 | 18 | 8.5 | 2.9 | -3.5 |

A consistently increasing hypervolume indicates balanced improvement. Stagnation suggests recalibration of objective weights or algorithm parameters is needed.
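For two objectives, the hypervolume can be computed with a simple sweep. This sketch assumes both objectives are maximized (negate SAscore, for instance) and that a reference point worse than every candidate has been fixed in advance; for three or more objectives, as in the table above, a library implementation such as pymoo's hypervolume indicator is the practical choice.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` (both objectives maximized) and bounded below by `ref`."""
    pts = [p for p in points if p[0] > ref[0] and p[1] > ref[1]]
    pts.sort(key=lambda p: p[0], reverse=True)  # sweep from the largest first objective
    area, best_y = 0.0, ref[1]
    for x, y in pts:
        if y > best_y:  # only points that raise the staircase add area
            area += (x - ref[0]) * (y - best_y)
            best_y = y
    return area
```

Logging this value once per generation, with the same fixed reference point, gives a hypervolume trend directly comparable across cycles.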

Troubleshooting Guides

Issue: Catastrophic Forgetting in the Generative Model

- Symptoms: The AI model "forgets" how to generate molecules with good ADMET properties after being fine-tuned heavily on activity data.

- Diagnosis: This is an exploration-exploitation imbalance in the training data. The model over-exploits the new activity data distribution.

- Solution: Use experience replay or elastic weight consolidation (EWC) techniques. Maintain a buffer of previously generated molecules with good multi-objective scores and intermittently retrain the model on this buffer along with new data to preserve prior knowledge.

Issue: Optimization Stuck in a Local Pareto Front

- Symptoms: The set of best candidates does not improve in diversity or quality over multiple generations.

- Diagnosis: The algorithm is over-exploiting a small region of chemical space and lacks exploration mechanisms.

- Solution: Introduce diversity-preserving operators:

- Niching: Implement fitness sharing in the genetic algorithm to reduce the selection probability of overcrowded candidates in property space.

- Novelty Search: Add a bonus to the reward function for candidates that are structurally distinct from the population average.

- Periodic "Heat" Increase: Temporarily increase the mutation rate or the sampling temperature of the generative model to jump to new regions.
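A sketch of fitness sharing for the niching operator, assuming non-negative fitness values and candidate positions in a property or descriptor space. It uses the classic triangular sharing function; `sigma_share` (the niche radius) is an illustrative value that would need tuning.

```python
import numpy as np

def shared_fitness(fitness, X, sigma_share=0.5, alpha=1.0):
    """Divide each fitness by its niche count so crowded regions are selected less often."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    sh = np.where(d < sigma_share, 1.0 - (d / sigma_share) ** alpha, 0.0)
    niche_count = sh.sum(axis=1)  # includes the candidate itself (sh = 1 at d = 0)
    return fitness / niche_count
```

With three near-identical candidates and one isolated candidate of slightly lower raw fitness, the isolated one ends up with the highest shared fitness, which is the intended push away from a single crowded region.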

Experimental Protocols

Protocol 1: Iterative Multi-Objective Optimization Cycle for Catalyst AI

Objective: To discover novel catalyst candidates optimizing activity (turnover frequency, TOF), selectivity, and synthesizability.

Materials: See "The Scientist's Toolkit" below.

Method:

- Initialization: Use a pre-trained molecular generative model (e.g., a Transformer or GVAE) to create a diverse seed population of 10,000 candidate structures.

- Low-Fidelity Screening (Exploration): Pass all candidates through fast ML predictors for TOF, selectivity (regio-/enantioselectivity), and SAscore. Filter to the top 20%.

- Pareto Ranking: Apply NSGA-II to the filtered set to identify the non-dominated Pareto front (approx. 100-200 structures).

- High-Fidelity Validation (Exploitation): Select 50 representative structures from the Pareto front for DFT-based transition state calculation to refine TOF and selectivity predictions.

- Model Retraining & Generation: Use the high-fidelity results (and any experimental synthesis data if available) to fine-tune the generative model. Generate a new population of candidates, biasing sampling towards the high-performing regions of the multi-objective space.

- Iteration: Repeat steps 2-5 (low-fidelity screening through model retraining and generation) for 10-20 cycles, monitoring hypervolume and diversity metrics.
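The Pareto ranking and representative-selection steps can be sketched with NSGA-II's two ranking ingredients: non-dominated filtering and crowding distance. This is a simplified O(n²) illustration rather than a full NSGA-II run; the candidate keys `tof`, `selectivity`, and `sascore` are hypothetical, and SAscore is negated so that all objectives are maximized. Picking the points with the largest crowding distance favours the extremes and sparsely populated parts of the front.

```python
import math
from typing import List, Sequence


def to_max_space(c: dict) -> List[float]:
    # Maximize TOF and selectivity; SAscore is "lower is better", so negate it.
    return [c["tof"], c["selectivity"], -c["sascore"]]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """a dominates b: no worse on every objective and strictly better on at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def first_front(objs: Sequence[Sequence[float]]) -> List[int]:
    """Indices of non-dominated points (NSGA-II's rank-1 front)."""
    return [i for i, a in enumerate(objs)
            if not any(dominates(b, a) for j, b in enumerate(objs) if j != i)]


def crowding_distance(objs: Sequence[Sequence[float]]) -> List[float]:
    """NSGA-II crowding distance; boundary points get infinity."""
    n = len(objs)
    dist = [0.0] * n
    if n == 0:
        return dist
    for m in range(len(objs[0])):
        order = sorted(range(n), key=lambda i: objs[i][m])
        lo, hi = objs[order[0]][m], objs[order[-1]][m]
        dist[order[0]] = dist[order[-1]] = math.inf
        if hi == lo:
            continue
        for k in range(1, n - 1):
            gap = objs[order[k + 1]][m] - objs[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist


def select_for_dft(candidates: List[dict], n_dft: int = 50) -> List[dict]:
    """Up to n_dft front members, most isolated (largest crowding distance) first."""
    objs = [to_max_space(c) for c in candidates]
    front = first_front(objs)
    cd = crowding_distance([objs[i] for i in front])
    ranked = sorted(range(len(front)), key=lambda k: cd[k], reverse=True)
    return [candidates[front[k]] for k in ranked[:n_dft]]
```

For production use, a library such as pymoo provides tested non-dominated sorting and NSGA-II implementations.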

Protocol 2: Tiered ADMET Risk Assessment Workflow

Objective: To efficiently eliminate compounds with poor drug-like properties while preserving chemical diversity. Method:

- Tier 1 - Rule-Based Filter (Rapid): Apply hard filters (e.g., PAINS, REOS) to remove structures with reactive or undesirable substructures.

- Tier 2 - QSAR Prediction (Medium Throughput): Use ensemble QSAR models to predict key ADMET endpoints: solubility (LogS), permeability (Caco-2/MDCK), metabolic stability and CYP450 inhibition, and cardiotoxicity risk (hERG inhibition).

- Tier 3 - Experimental Validation (Low Throughput): For compounds passing all predictive tiers, proceed to in vitro microsomal stability, solubility, and early cytotoxicity assays.

Data Integration: Experimental results from Tier 3 are fed back as labeled data to retrain the Tier 2 models, closing the AI feedback loop.
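The first two tiers can be sketched as a simple filter chain. The `Compound` fields, the endpoint names, and the cutoffs in `TIER2_RULES` are hypothetical placeholders; Tier 1 flags are assumed to come from an upstream PAINS/REOS substructure screen, and Tier 2 values from the ensemble QSAR models.

```python
import operator
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical Tier 2 rules: (endpoint, comparison, cutoff). Tune per project.
TIER2_RULES: List[Tuple[str, str, float]] = [
    ("logS", ">=", -5.0),      # predicted aqueous solubility (log mol/L)
    ("herg_pIC50", "<", 5.0),  # predicted hERG potency; lower means lower risk
]
_OPS = {">=": operator.ge, "<": operator.lt, "<=": operator.le, ">": operator.gt}


@dataclass
class Compound:
    cid: str
    alerts: Set[str] = field(default_factory=set)              # Tier 1 hits, e.g. {"PAINS"}
    predicted: Dict[str, float] = field(default_factory=dict)  # Tier 2 QSAR predictions


def tier1(compounds: List[Compound]) -> List[Compound]:
    """Rule-based filter: drop anything that triggered a structural alert."""
    return [c for c in compounds if not c.alerts]


def passes_tier2(c: Compound, rules: List[Tuple[str, str, float]] = TIER2_RULES) -> bool:
    for endpoint, op, cutoff in rules:
        value = c.predicted.get(endpoint)
        if value is None or not _OPS[op](value, cutoff):
            return False  # a missing prediction is treated conservatively as a failure
    return True


def triage(compounds: List[Compound]) -> List[Compound]:
    """Compounds that survive Tiers 1 and 2 and go on to Tier 3 assays."""
    return [c for c in tier1(compounds) if passes_tier2(c)]
```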

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Multi-Objective Optimization

| Item / Solution | Function in Workflow | Example / Provider |
|---|---|---|
| Generative AI Model | Core engine for proposing novel molecular structures. | MolGPT, REINVENT, GFlowNet, ChemBERTa (fine-tuned). |
| Property Prediction APIs | Fast batch calculation of molecular properties for screening. | RDKit (SAscore, descriptors), OCHEM platforms, proprietary ADMET predictors. |
| DFT Software Suite | High-fidelity computation of electronic structure, reaction barriers, and selectivity descriptors. | Gaussian, ORCA, VASP with transition state search modules (NEB, Dimer). |
| Multi-Objective Optimization Library | Implements algorithms for Pareto optimization and diversity maintenance. | pymoo (Python), Platypus (Python), jMetal. |
| Chemical Database | Source of training data and reference set for checking the novelty/similarity of generated candidates. | PubChem, ChEMBL, Cambridge Structural Database (CSD), proprietary catalogs. |
| Automation & Workflow Manager | Orchestrates the iterative cycle between AI generation, prediction, and analysis. | KNIME, Nextflow, Snakemake, custom Python scripts with Airflow/Luigi. |

Technical Support Center

FAQs & Troubleshooting Guides

Q1: Our high-throughput virtual screening (HTVS) pipeline is generating an unmanageably large number of candidate molecules (>10^6). How do we effectively triage these for physical screening within a limited lab capacity?

A: Implement a multi-stage, AI-driven filtering funnel. The core strategy is to balance broad exploration with focused exploitation.

- Stage 1 (Exploration): Apply rapid, computationally cheap filters (e.g., rule-of-five, PAINS filters, synthetic accessibility score). This typically reduces the list by 80-90%.

- Stage 2 (Balanced Scoring): Rank the remaining candidates using a consensus scoring function that combines:

- Exploitation: A high-fidelity, pre-trained model (e.g., a graph neural network) fine-tuned on known active molecules for your target.

- Exploration: A diversity-picking algorithm (e.g., MaxMin, k-Medoids) to ensure structural novelty and cover chemical space.

- Protocol: Use a weighted score:

Final_Score = (α * AI_Prediction_Score) + (β * Diversity_Score). Scale both scores to a common range (e.g., [0, 1]) first so that α and β are comparable, then adjust them based on your project phase (early: higher β for exploration; late: higher α for exploitation).
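The weighted score can be combined with a greedy, MaxMin-style picker in which the diversity term is each candidate's Tanimoto distance to the closest already-selected molecule. A minimal sketch, assuming AI scores are already scaled to [0, 1] and fingerprints are sets of on-bit indices (the tuple layout and function names are hypothetical); with `alpha=0` it reduces to plain MaxMin selection.

```python
from typing import FrozenSet, List, Sequence, Tuple

# (candidate id, AI prediction scaled to [0, 1], fingerprint as a set of on-bits)
Candidate = Tuple[str, float, FrozenSet[int]]


def tanimoto(a: FrozenSet[int], b: FrozenSet[int]) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def diversity_score(fp: FrozenSet[int], chosen: Sequence[FrozenSet[int]]) -> float:
    """Tanimoto distance to the nearest already-selected molecule (1.0 if none yet)."""
    return min((1.0 - tanimoto(fp, other) for other in chosen), default=1.0)


def select_batch(cands: Sequence[Candidate], n: int, alpha: float, beta: float) -> List[str]:
    """Greedily pick n candidates maximizing alpha * prediction + beta * diversity."""
    chosen: List[int] = []
    remaining = list(range(len(cands)))
    while remaining and len(chosen) < n:
        chosen_fps = [cands[j][2] for j in chosen]
        best = max(remaining, key=lambda i: alpha * cands[i][1]
                   + beta * diversity_score(cands[i][2], chosen_fps))
        chosen.append(best)
        remaining.remove(best)
    return [cands[i][0] for i in chosen]
```

Because the diversity term is recomputed after each pick, near-duplicates of an already selected molecule lose their diversity credit, which is what keeps a high-β batch spread across chemical space.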

Quantitative Triage Example:

Table 1: Example Output of a Three-Stage Funnel for Catalyst Candidate Selection

| Stage | Filter Method | Candidates In | Candidates Out | Reduction (%) | Primary Goal |
|---|---|---|---|---|---|
| 1 | Descriptors & Rules | 1,200,000 | 150,000 | 87.5 | Remove obvious failures |
| 2 | Fast ML Model (Random Forest) | 150,000 | 15,000 | 90.0 | Prioritize predicted activity |
| 3 | Diversity Selection & Expert Review | 15,000 | 384 | 97.4 | Ensure novelty & lab feasibility |

Q2: We observe a significant performance gap (Simulation-to-Real, Sim2Real) where molecules predicted to be highly active in simulation show no activity in the physical assay. What are the primary checkpoints?

A: This is a critical failure point. Systematically troubleshoot your workflow.

Check the Simulation Model:

- Retrain/Fine-tune: Ensure your generative AI or scoring model was trained on data relevant to your specific assay conditions (e.g., pH, temperature). If a small set of in-house physical screening data is available, fine-tune the final layer(s) on it.

- Domain Shift: Evaluate whether your virtual library contains chemistries or scaffolds outside the training domain of your model. Use an applicability-domain method (e.g., nearest-neighbor similarity to the training set) to flag out-of-domain candidates.

Check the Physical Assay Protocol:

- Validate Assay Controls: Are your positive and negative controls performing as expected? A drift in control values invalidates the comparison.

- Compound Integrity: Verify the solubility and stability of your delivered compounds in the assay buffer. Use LC-MS to check for degradation products or for loss of parent compound from solution due to precipitation.

- Concentration Error: Confirm the concentration of the compound in the assay plate via direct measurement (e.g., UV absorbance).
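One simple applicability-domain check for the domain-shift item is nearest-neighbour similarity: flag library compounds whose highest Tanimoto similarity to any training compound falls below a cutoff. The 0.4 threshold, the function names, and the set-of-bits fingerprint representation are hypothetical choices for illustration only.

```python
from typing import Dict, FrozenSet, List, Sequence


def tanimoto(a: FrozenSet[int], b: FrozenSet[int]) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def nn_similarity(query: FrozenSet[int], training: Sequence[FrozenSet[int]]) -> float:
    """Highest Tanimoto similarity between the query and any training compound."""
    return max((tanimoto(query, t) for t in training), default=0.0)


def out_of_domain(library: Dict[str, FrozenSet[int]],
                  training: Sequence[FrozenSet[int]],
                  threshold: float = 0.4) -> List[str]:
    """IDs of library compounds whose nearest training neighbour is below the threshold."""
    return [cid for cid, fp in library.items() if nn_similarity(fp, training) < threshold]
```

Predictions for flagged compounds deserve lower confidence, and a large flagged fraction in a virtual library is itself an early warning of a Sim2Real gap.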

Q3: How do we design an effective active learning loop to iteratively improve our generative AI model based on physical screening results?

A: Establish a closed-loop workflow where physical data directly refines the digital model.

Experimental Protocol for an Active Learning Cycle:

- Initial Batch: Generate and physically test 384 candidates selected by the model's initial predictions.

- Data Incorporation: Format the physical results (e.g., IC50, yield, conversion) and add them to the training dataset.

- Model Retraining: Retrain or fine-tune the generative AI model (e.g., a variational autoencoder or a transformer) on the augmented dataset. Use a transfer learning approach to preserve prior knowledge.

- Next-Batch Selection: The updated model generates new candidates, focusing on regions of chemical space near physical hits (exploitation) but with controlled variations (exploration via noise injection or latent space sampling).

- Iterate: Repeat every 4-6 weeks, tracking the "hit rate" improvement per cycle.
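The next-batch step can be sketched for a latent-variable model such as a VAE: start from the latent vectors of confirmed hits (exploitation) and add Gaussian noise whose scale `sigma` sets how far the search strays (exploration). The decoder that turns latent vectors back into molecules is assumed and not shown, the function names are hypothetical, and the hit threshold used by `hit_rate` is assay-specific.

```python
import random
from typing import List, Sequence


def sample_near_hits(hit_latents: Sequence[Sequence[float]], n: int,
                     sigma: float, rng: random.Random) -> List[List[float]]:
    """Draw n latent vectors: a randomly chosen confirmed hit plus isotropic Gaussian noise."""
    samples = []
    for _ in range(n):
        z = rng.choice(hit_latents)                                  # exploit: start at a hit
        samples.append([zi + rng.gauss(0.0, sigma) for zi in z])     # explore: perturb it
    return samples


def hit_rate(measured: Sequence[float], threshold: float) -> float:
    """Fraction of tested candidates whose assay value meets the hit threshold."""
    return sum(v >= threshold for v in measured) / len(measured) if measured else 0.0
```

Logging `hit_rate` per cycle alongside the `sigma` used gives a direct record of how the exploration setting affected the hit-rate trend.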

Visualized Workflows

[Figure: Hybrid Catalyst Discovery Workflow]

[Figure: Sim2Real Gap Troubleshooting Guide]

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Hybrid Catalyst Screening Workflows

| Item Name | Function | Key Consideration for Hybrid Workflows |
|---|---|---|
| LC-MS Grade Solvents | Compound solubilization, assay execution, and analytical verification. | Batch-to-batch consistency is critical for replicating simulation conditions (e.g., dielectric constant). |
| Solid-Phase Synthesis Kits | Rapid physical synthesis of prioritized virtual candidates. | Compatibility with automated platforms for high-throughput parallel synthesis. |
| qPCR or Plate Reader Assay Kits | High-throughput physical measurement of catalytic activity or inhibition. | Dynamic range and sensitivity must match the prediction range of the AI model. |
| Stable Target Protein | The biological or chemical entity for screening. | Purity and stability must be ensured to align with the static structure used in simulations. |
| Automated Liquid Handling System | Executing physical assays with precision and throughput. | Minimizes manual error, ensuring physical data quality for AI retraining. |
| Cloud Computing Credits | Running large-scale virtual screens and model training. | Necessary for iterative active learning cycles; scalability is key. |
| Chemical Diversity Library | A foundational set of physically available compounds for initial model training and validation. | Should be well-characterized to establish a baseline for Sim2Real correlation. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During the AI proposal phase, the generative model consistently suggests catalyst structures that are chemically implausible or impossible to synthesize on our robotic platform. How can we correct this?

- A: This indicates a disconnect between the AI's exploration space and the platform's exploitation capabilities. Implement a dual-filter system:

- Integrate a Real-Time Validity Checker: Embed a rule-based or ML-based chemical feasibility filter (e.g., using valency rules, stability predictors) within the AI's proposal generation loop to pre-filter suggestions.

- Platform-Aware Constraint Encoding: Before training/generation, encode your robotic synthesizer's capabilities (e.g., maximum pressure/temperature, forbidden solvents, available building blocks) as hard or soft constraints in the AI model's objective function. This biases exploration towards the exploitable domain.
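Platform-aware constraint encoding can be sketched as a scoring wrapper: hard constraints reject a proposal outright, while a soft constraint lowers its score as it approaches a platform limit. All class and field names, the limits, the 20 °C margin, and the penalty weight are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class PlatformLimits:  # hypothetical capability profile of the robotic synthesizer
    max_temp_c: float
    max_pressure_bar: float
    forbidden_solvents: FrozenSet[str]
    building_blocks: FrozenSet[str]


@dataclass(frozen=True)
class Proposal:  # hypothetical fields for one AI-proposed synthesis
    pid: str
    temp_c: float
    pressure_bar: float
    solvent: str
    blocks: Tuple[str, ...]
    predicted_score: float


def violates_hard(p: Proposal, lim: PlatformLimits) -> bool:
    return (p.temp_c > lim.max_temp_c
            or p.pressure_bar > lim.max_pressure_bar
            or p.solvent in lim.forbidden_solvents
            or not set(p.blocks) <= lim.building_blocks)


def soft_penalty(p: Proposal, lim: PlatformLimits, margin_c: float = 20.0) -> float:
    """0 well below the temperature limit, rising linearly to 1 at the limit."""
    return max(0.0, (p.temp_c - (lim.max_temp_c - margin_c)) / margin_c)


def constrained_score(p: Proposal, lim: PlatformLimits, weight: float = 0.5) -> float:
    if violates_hard(p, lim):
        return -math.inf  # hard constraint: never sent to the robot
    return p.predicted_score - weight * soft_penalty(p, lim)
```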

Q2: Our automated testing platform returns high variance in catalytic activity data for the same compound, confusing the AI's learning loop. What are the primary checks?

- A: High variance undermines the exploitation feedback. Follow this protocol:

- Liquid Handling Calibration: Use a fluorescent dye (e.g., fluorescein) to verify pipetting precision across all liquid handler tips. Acceptable CV should be <5%.

- Catalyst Bed Preparation: For heterogeneous catalysts, implement automated sonication and slurry mixing protocols pre-dispensing to ensure uniform particle suspension.

- In-Line Analytics Validation: Run a standard catalyst (e.g., 5 wt% Pd/C for a hydrogenation) with every experimental batch as an internal control. If its activity falls outside the established range (e.g., ±15% conversion), flag the batch for review.
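The two numeric checks above can be automated. This sketch computes the per-tip coefficient of variation from replicate dye readings and tests whether the internal-standard conversion is in range; it assumes "±15% conversion" means ±15 percentage points around an established reference value, and the function names and tip labels are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def cv_percent(values: Sequence[float]) -> float:
    """Coefficient of variation (sample standard deviation / mean), in percent."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)


def tips_failing_cv(readings: Dict[str, Sequence[float]], max_cv: float = 5.0) -> Dict[str, float]:
    """Return {tip: CV%} for tips whose replicate dye readings exceed max_cv."""
    cvs = {tip: cv_percent(vals) for tip, vals in readings.items()}
    return {tip: cv for tip, cv in cvs.items() if cv > max_cv}


def control_ok(observed_conv: float, reference_conv: float, tol_points: float = 15.0) -> bool:
    """True if the internal-standard conversion is within tol_points of its reference."""
    return abs(observed_conv - reference_conv) <= tol_points
```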

Q3: The AI proposes a promising catalyst, but the robotic synthesizer fails at the purification step (e.g., filtration, crystallization). How can we handle this?

- A: This is a common hardware-software integration gap. Implement a "Synthetic Accessibility Score" that includes post-reaction processing.

- Workaround Protocol: Program the platform to default to a robust, if slower, purification method (e.g., centrifugal filtration followed by solid-phase drying) for all initial AI proposals.

- Feedback for AI: Log the failure mode (e.g., "fine particulate clogged filter"). Use this log to fine-tune the AI's scoring function, penalizing proposals likely to generate problematic physical forms.
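One way to turn the failure log into a scoring penalty is sketched below, assuming each proposal can be assigned a predicted physical-form class (e.g., "fine_powder"; the classifier that does this is hypothetical and not shown, as are the function names). Failure probabilities are Laplace-smoothed so that a class seen only once or twice is not over-penalized.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def failure_rates(log: Iterable[Tuple[str, bool]],
                  prior_fail: int = 1, prior_ok: int = 1) -> Dict[str, float]:
    """Laplace-smoothed failure probability per physical-form class.

    `log` holds (form_class, failed) pairs from the robot's purification runs.
    """
    fails: Counter = Counter()
    totals: Counter = Counter()
    for cls, failed in log:
        totals[cls] += 1
        fails[cls] += int(failed)
    return {cls: (fails[cls] + prior_fail) / (totals[cls] + prior_fail + prior_ok)
            for cls in totals}


def penalized_score(pred: float, cls: str, rates: Dict[str, float],
                    weight: float = 1.0, default: float = 0.5) -> float:
    """Predicted score minus a penalty proportional to the estimated failure risk."""
    return pred - weight * rates.get(cls, default)
```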

Q4: How do we balance the AI's desire to explore novel, complex structures with the robotic platform's need for simple, high-yield synthesis protocols?

- A: This is the core thesis challenge. Adopt a multi-armed bandit strategy within your workflow.

- Protocol: Set a fixed ratio (e.g., 70:30) for each experimental campaign. 70% of robotic runs are dedicated to exploiting and optimizing the top-performing synthesizable candidates from previous rounds. 30% are allocated to testing higher-risk AI proposals for exploration. Adjust this ratio based on project phase (more exploration early, more exploitation late).
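The fixed-ratio allocation can be sketched as follows: the exploitation share goes to the highest predicted performers, and the exploration share goes to the most uncertain of the remaining proposals. It assumes each proposal carries a predicted mean and a predictive standard deviation (e.g., from an ensemble), and the linear schedule from 0.3 down to 0.1 is a hypothetical way to implement "more exploration early, more exploitation late".

```python
from typing import List, Sequence, Tuple

Candidate = Tuple[str, float, float]  # (id, predicted mean, predictive std); hypothetical


def explore_fraction(cycle: int, n_cycles: int, start: float = 0.3, end: float = 0.1) -> float:
    """Linearly shift the exploration share from `start` to `end` over the campaign."""
    if n_cycles <= 1:
        return start
    t = min(max(cycle / (n_cycles - 1), 0.0), 1.0)
    return start + (end - start) * t


def split_batch(cands: Sequence[Candidate], batch_size: int,
                explore_frac: float) -> Tuple[List[str], List[str]]:
    """Return (exploit_ids, explore_ids) for one robotic campaign."""
    n_explore = int(round(batch_size * explore_frac))
    n_exploit = batch_size - n_explore
    by_mean = sorted(cands, key=lambda c: c[1], reverse=True)
    exploit = by_mean[:n_exploit]                                   # best predicted performers
    rest = by_mean[n_exploit:]
    explore = sorted(rest, key=lambda c: c[2], reverse=True)[:n_explore]  # most uncertain
    return [c[0] for c in exploit], [c[0] for c in explore]
```

With a batch of 50 and `explore_frac=0.3`, this yields 35 exploitation and 15 exploration slots, matching the 70:30 split.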

Experimental Protocol: Closed-Loop Catalyst Optimization

Title: One Cycle of AI-Driven Robotic Catalyst Discovery.

Methodology:

- AI Proposal Generation: A generative model (e.g., a constrained variational autoencoder) proposes a batch of 50 candidate catalyst compositions, drawing 70% from a region of high predicted performance (exploitation) and 30% from a lower-confidence novel space (exploration).